This repository is the official PyTorch implementation of Global Context Vision Transformers.

Global Context Vision Transformers

Ali Hatamizadeh, Hongxu (Danny) Yin, Jan Kautz, and Pavlo Molchanov.

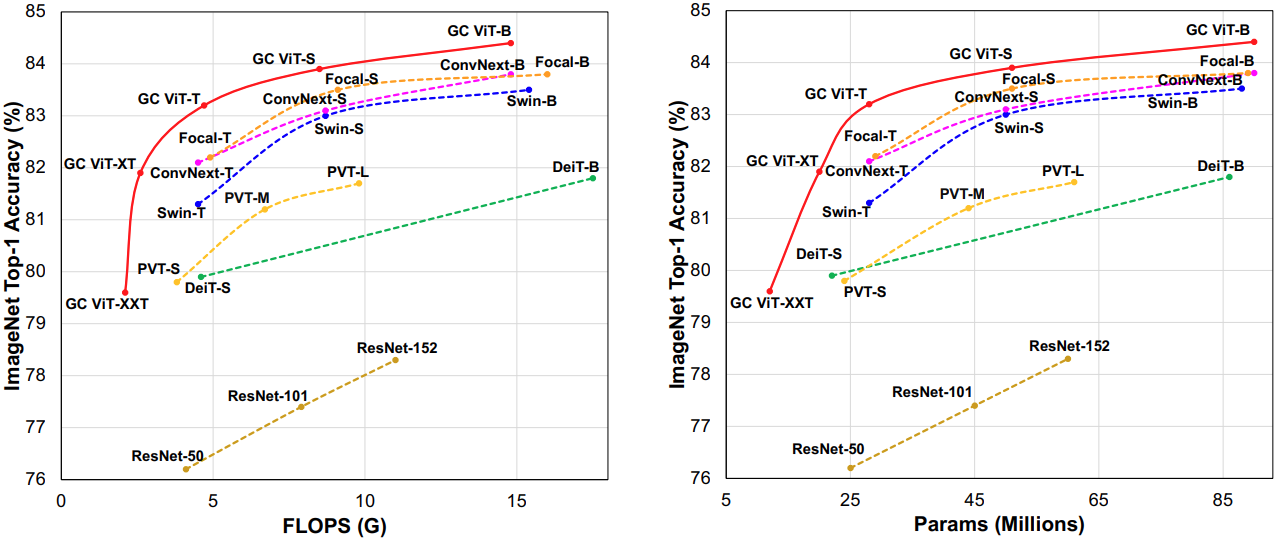

GC ViT achieves state-of-the-art results across image classification, object detection and semantic segmentation tasks. On the ImageNet-1K dataset for classification, the tiny, small and base variants of GC ViT with 28M, 51M and 90M parameters achieve 83.2%, 83.9% and 84.4% Top-1 accuracy, respectively, surpassing comparably-sized prior art such as CNN-based ConvNeXt and ViT-based Swin Transformer by a large margin. Pre-trained GC ViT backbones in downstream tasks of object detection, instance segmentation, and semantic segmentation using MS COCO and ADE20K datasets outperform prior work consistently, sometimes by large margins.

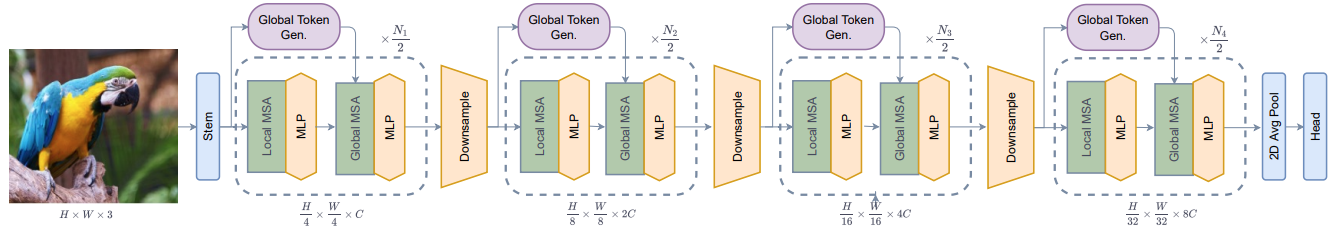

The architecture of GC ViT is demonstrated in the following:

06/17/2022

- GC ViT model, training and validation scripts released for ImageNet-1K classification.

- Pre-trained model checkpoints will be released soon.

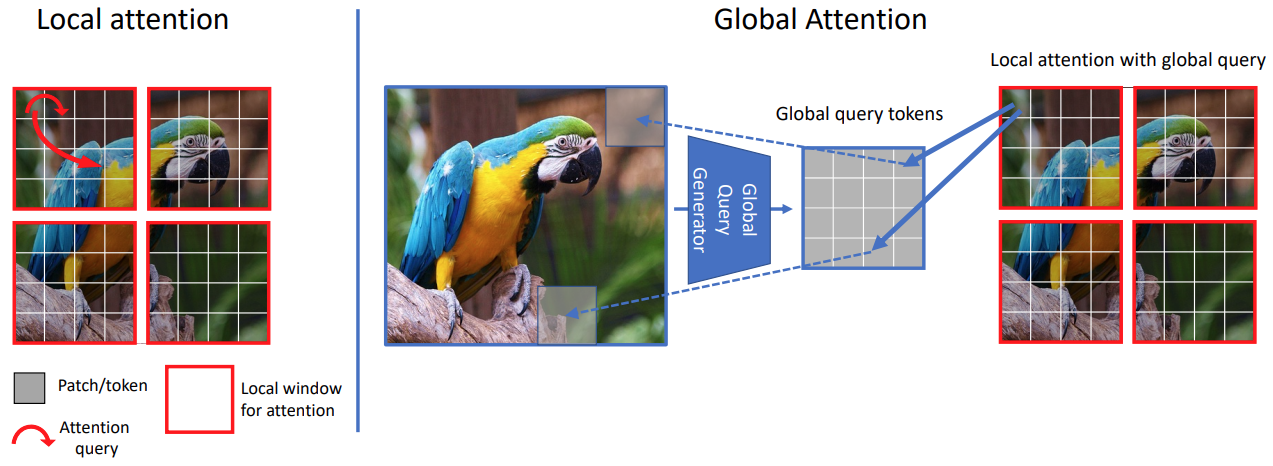

GC ViT leverages global context self-attention modules, jointly with local self-attention, to model both long- and short-range spatial interactions effectively yet efficiently, without the need for expensive operations such as computing attention masks or shifting local windows.

ImageNet-1K Pretrained Models

| Name | Acc@1 (%) | Acc@5 (%) | Resolution | #Params (M) | FLOPs (G) | Performance Summary | Tensorboard | Download |
|---|---|---|---|---|---|---|---|---|
| GC ViT-T | 83.2 | 96.3 | 224x224 | 28 | 4.7 | summary | tensorboard | model |
| GC ViT-S | 83.9 | 96.5 | 224x224 | 51 | 8.5 | summary | tensorboard | model |
| GC ViT-B | 84.4 | 96.9 | 224x224 | 90 | 14.8 | summary | tensorboard | model |
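The Acc@1 and Acc@5 columns are top-1 and top-5 accuracy. As a reminder of what those metrics measure, here is a minimal pure-Python sketch. The function name `topk_accuracy` and the toy scores are illustrative assumptions; this is not the evaluation code used by this repository.

```python
def topk_accuracy(scores, labels, k=1):
    """Percentage of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this sample.
        topk = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in topk
    return 100.0 * hits / len(labels)


# Toy example: 3 samples, 4 classes (made-up scores, not model output).
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true label 1 is ranked first
    [0.4, 0.3, 0.2, 0.1],  # true label 1 is ranked second
    [0.1, 0.2, 0.3, 0.4],  # true label 0 is ranked last
]
labels = [1, 1, 0]
print(topk_accuracy(scores, labels, k=1))
print(topk_accuracy(scores, labels, k=2))
```

With k=1 only the first sample counts as correct; with k=2 the second sample counts as well.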

This repository is compatible with the NVIDIA PyTorch docker container nvcr>=21.06, which can be obtained from the NVIDIA NGC catalog.

The dependencies can be installed by running:

    pip install -r requirements.txt

Please download the ImageNet dataset from its official website. The training and validation images need to have sub-folders for each class with the following structure:

    imagenet
    ├── train
    │   ├── class1
    │   │   ├── img1.jpeg
    │   │   ├── img2.jpeg
    │   │   └── ...
    │   ├── class2
    │   │   ├── img3.jpeg
    │   │   └── ...
    │   └── ...
    └── val
        ├── class1
        │   ├── img4.jpeg
        │   ├── img5.jpeg
        │   └── ...
        ├── class2
        │   ├── img6.jpeg
        │   └── ...
        └── ...
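Before launching a long training run, it can help to confirm that the dataset folder matches the structure above. The following is a minimal sketch using only the Python standard library. The function names and the set of accepted image extensions are assumptions for illustration and are not part of this repository.

```python
from pathlib import Path

# Assumption: file extensions treated as images; adjust to your copy of the data.
IMAGE_SUFFIXES = {".jpeg", ".jpg", ".png"}


def summarize_split(split_dir):
    """Return {class_name: image_count} for one split folder (train or val)."""
    counts = {}
    for class_dir in sorted(p for p in Path(split_dir).iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1 for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


def check_imagenet_layout(root):
    """Collect layout problems under root; an empty list means none were found."""
    root = Path(root)
    problems = []
    summaries = {}
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            problems.append(f"missing split folder: {split_dir}")
            continue
        counts = summarize_split(split_dir)
        summaries[split] = counts
        if not counts:
            problems.append(f"no class sub-folders in {split_dir}")
        for name, n in counts.items():
            if n == 0:
                problems.append(f"no images in {split_dir / name}")
    # Every class present in val should also be present in train.
    if "train" in summaries and "val" in summaries:
        for name in sorted(set(summaries["val"]) - set(summaries["train"])):
            problems.append(f"class {name} is in val but not in train")
    return problems
```

Calling `check_imagenet_layout("<imagenet-path>")` returns a list of human-readable problems, which can be printed or used to abort a job early.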

The GC ViT model can be trained from scratch on the ImageNet-1K dataset by running:

    python -m torch.distributed.launch --nproc_per_node <num-of-gpus> --master_port 11223 train.py \
        --config <config-file> --data_dir <imagenet-path> --batch-size <batch-size-per-gpu> --tag <run-tag>

To resume training from a pre-trained checkpoint:

    python -m torch.distributed.launch --nproc_per_node <num-of-gpus> --master_port 11223 train.py \
        --resume <checkpoint-path> --config <config-file> --data_dir <imagenet-path> --batch-size <batch-size-per-gpu> --tag <run-tag>

To evaluate a pre-trained checkpoint using the ImageNet-1K validation set on a single GPU:

    python validate.py --model <model-name> --checkpoint <checkpoint-path> --data_dir <imagenet-path> --batch-size <batch-size-per-gpu>

This repository is built upon the timm library.
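The training and resume commands above differ only in the `--resume` argument, so they can be assembled from one helper when scripting several runs. The sketch below is illustrative: `build_train_command` is a hypothetical name, and it uses only the flags that appear in the commands above. The batch size and GPU count in the example call are placeholder values, not recommendations.

```python
import shlex
import sys


def build_train_command(config, data_dir, batch_size, tag,
                        num_gpus=1, master_port=11223, resume=None):
    """Assemble the torch.distributed.launch invocation as an argv list."""
    cmd = [
        sys.executable, "-m", "torch.distributed.launch",
        "--nproc_per_node", str(num_gpus),
        "--master_port", str(master_port),
        "train.py",
    ]
    # Only the resume case carries a checkpoint path.
    if resume is not None:
        cmd += ["--resume", str(resume)]
    cmd += [
        "--config", str(config),
        "--data_dir", str(data_dir),
        "--batch-size", str(batch_size),
        "--tag", str(tag),
    ]
    return cmd


# Print the command for inspection; the values here are placeholders.
example = build_train_command("<config-file>", "<imagenet-path>", 32, "<run-tag>", num_gpus=8)
print(shlex.join(example))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root, which avoids shell quoting issues with paths that contain spaces.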

Please consider citing the GC ViT paper if it is useful for your work:

    @misc{hatamizadeh2022global,
        title={Global Context Vision Transformers},
        author={Ali Hatamizadeh and Hongxu Yin and Jan Kautz and Pavlo Molchanov},
        year={2022},
        eprint={2206.09959},
        archivePrefix={arXiv},
        primaryClass={cs.CV}
    }

Copyright © 2022, NVIDIA Corporation. All rights reserved.

This work is made available under the NVIDIA Source Code License-NC. Click here to view a copy of this license.

The pre-trained models are shared under CC-BY-NC-SA-4.0. If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

For license information regarding the ImageNet dataset, please refer to the official website.