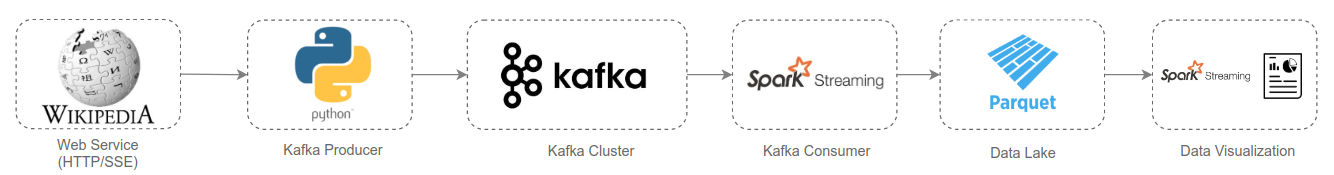

Spark application that consumes Kafka events generated by a Python producer.

- Clone the project

  `git clone https://github.com/cordon-thiago/spark-kafka-consumer`

- Set the `KAFKA_ADVERTISED_HOST_NAME` variable inside `docker-compose.yml` to your Docker host IP. Note: Do not use `localhost` or `127.0.0.1` as the host IP if you want to run multiple brokers. For more information about the variables you can configure for the Kafka Docker image, please refer to this repository.

- Start the Docker containers with Compose.

  `cd spark-kafka-consumer/docker`

  `docker-compose up -d`

It will start the following services:

- zookeeper:
  - Image: wurstmeister/zookeeper
  - Port: 2181
- kafka:
  - Image: wurstmeister/kafka:2.11-1.1.1
  - Port: 9092
- spark:
  - Image: jupyter/all-spark-notebook
  - Port: 8888
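Once the containers are up, a quick way to confirm the three services are listening is to try a TCP connection to each port. The following is a minimal sketch and not part of the project; the service names and ports come from the list above, while the helper names `is_port_open` and `check_services` are hypothetical.

```python
import socket

# Service names and ports as listed in this README.
SERVICES = {"zookeeper": 2181, "kafka": 9092, "spark": 8888}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def check_services(host: str) -> dict:
    """Map each service name to whether its port accepts connections."""
    return {name: is_port_open(host, port) for name, port in SERVICES.items()}
```

Calling `check_services` with your Docker host IP returns a dictionary such as `{"zookeeper": True, ...}`. An open port only shows that something is listening, not that the service is fully initialized.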

- Get the Jupyter Notebook URL and token by accessing the spark container.

  Open a bash shell in the container:

  `docker exec -it docker_spark_1 bash`

  Then list the running notebook servers and copy the URL (including the token) into your browser:

  `jupyter notebook list`
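If you prefer not to open a shell, the same lookup can be scripted from the host. This is a rough sketch, assuming the container is named `docker_spark_1` as above and that the `docker` CLI is on your `PATH`; the helper names `extract_urls` and `notebook_urls` are hypothetical.

```python
import re
import subprocess

# Matches any http(s) URL up to the next whitespace character.
URL_PATTERN = re.compile(r"https?://\S+")


def extract_urls(text: str) -> list:
    """Return every http(s) URL found in the given text."""
    return URL_PATTERN.findall(text)


def notebook_urls(container: str = "docker_spark_1") -> list:
    """Run `jupyter notebook list` inside the container and return the URLs it prints."""
    result = subprocess.run(
        ["docker", "exec", container, "jupyter", "notebook", "list"],
        capture_output=True,
        text=True,
        check=True,  # Raise CalledProcessError if the command fails.
    )
    return extract_urls(result.stdout)
```

The URL reported inside the container may use a host name that does not resolve from your machine; in that case, replace the host part with your Docker host IP and keep the token.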

- Run the `event-producer.ipynb` notebook to start producing events from changes in Wikipedia pages to a Kafka topic. More information about the Wikipedia event here.

- Run the `event-consumer-spark.ipynb` notebook to start consuming events from the Kafka topic and write them to Parquet files.

- Run the `data-visualization.ipynb` notebook to read the Parquet files as a stream and visualize the top 10 users with the most edits.
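For orientation, the consume-and-aggregate steps can be sketched in PySpark roughly as follows. This is not the code from the notebooks: the function names, the `user` JSON field, and the paths are assumptions made for illustration, and the top-N query uses a batch read for simplicity where the notebook reads the files as a stream. It also assumes the Spark Kafka connector (`spark-sql-kafka-0-10`) is available to the session.

```python
def kafka_source_options(bootstrap_servers: str, topic: str) -> dict:
    """Build the options for Spark's Kafka source (values are caller-supplied)."""
    return {
        "kafka.bootstrap.servers": bootstrap_servers,
        "subscribe": topic,
        "startingOffsets": "earliest",
    }


def start_parquet_sink(spark, bootstrap_servers, topic, output_path, checkpoint_path):
    """Stream Kafka messages into Parquet files and return the running query."""
    from pyspark.sql.functions import col, get_json_object

    raw = (
        spark.readStream.format("kafka")
        .options(**kafka_source_options(bootstrap_servers, topic))
        .load()
    )
    # Kafka values arrive as bytes; cast to string and pull out one field.
    # The "user" field name is an assumption about the event payload.
    events = raw.select(col("value").cast("string").alias("json")).select(
        get_json_object(col("json"), "$.user").alias("user"),
        col("json"),
    )
    return (
        events.writeStream.format("parquet")
        .option("path", output_path)
        .option("checkpointLocation", checkpoint_path)
        .start()
    )


def top_users(spark, parquet_path, n=10):
    """Return a DataFrame with the n users that have the most events (batch read)."""
    from pyspark.sql.functions import desc

    return (
        spark.read.parquet(parquet_path)
        .where(col_is_not_null("user"))
        .groupBy("user")
        .count()
        .orderBy(desc("count"))
        .limit(n)
    )


def col_is_not_null(name: str) -> str:
    """SQL filter expression that keeps rows where the column is set."""
    return f"{name} IS NOT NULL"
```

Inside the notebook you would pass an existing `SparkSession`, your Docker host IP with port 9092 as `bootstrap_servers`, and the topic name used by the producer. The PySpark imports are kept inside the functions so the helpers can be defined even where PySpark is not installed.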