ChatEase is a web-based conversational assistant. It uses Ollama with the Meta Llama 3 model to generate context-aware responses. This project was developed as part of the Study Case MSIB Batch 7 - Telkom Indonesia.

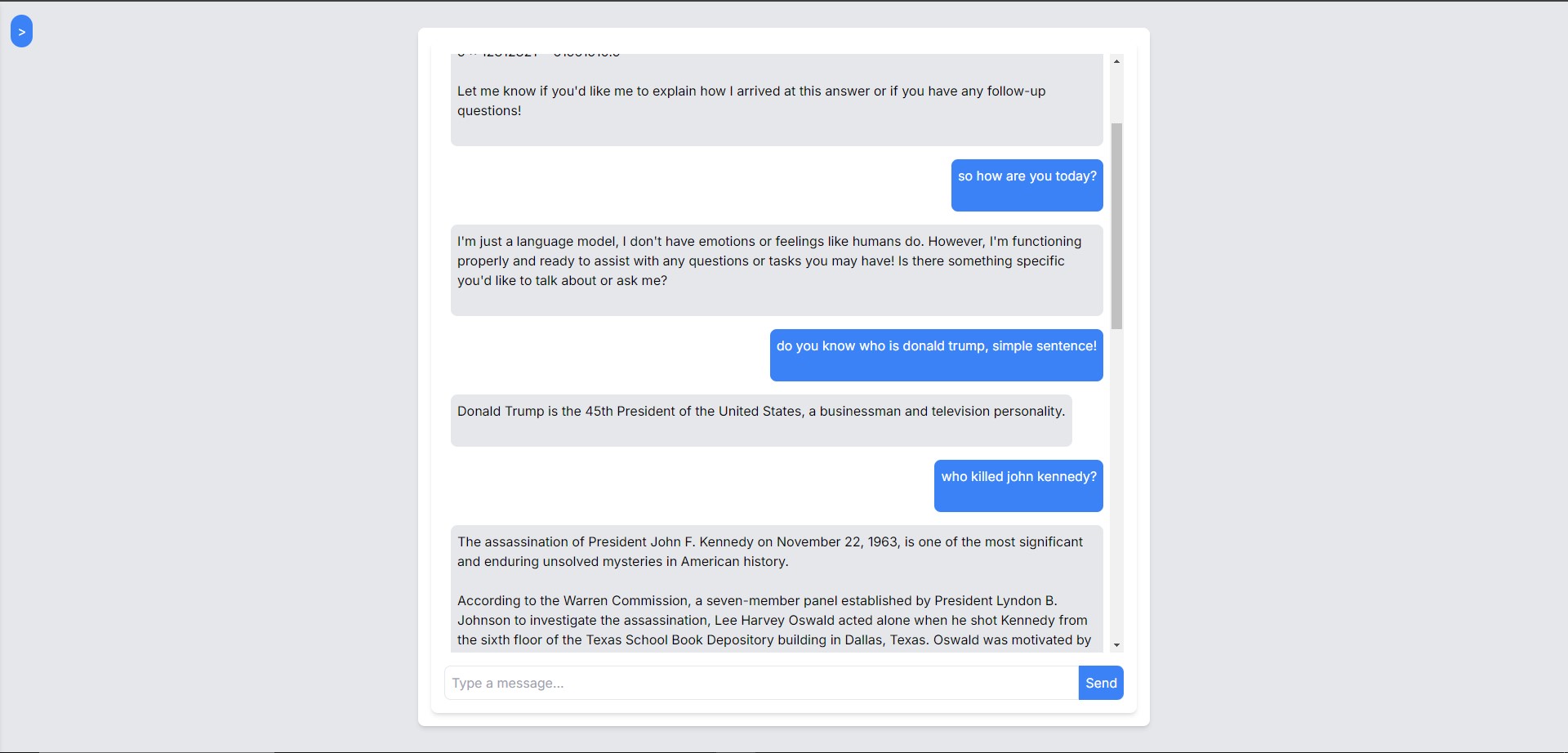

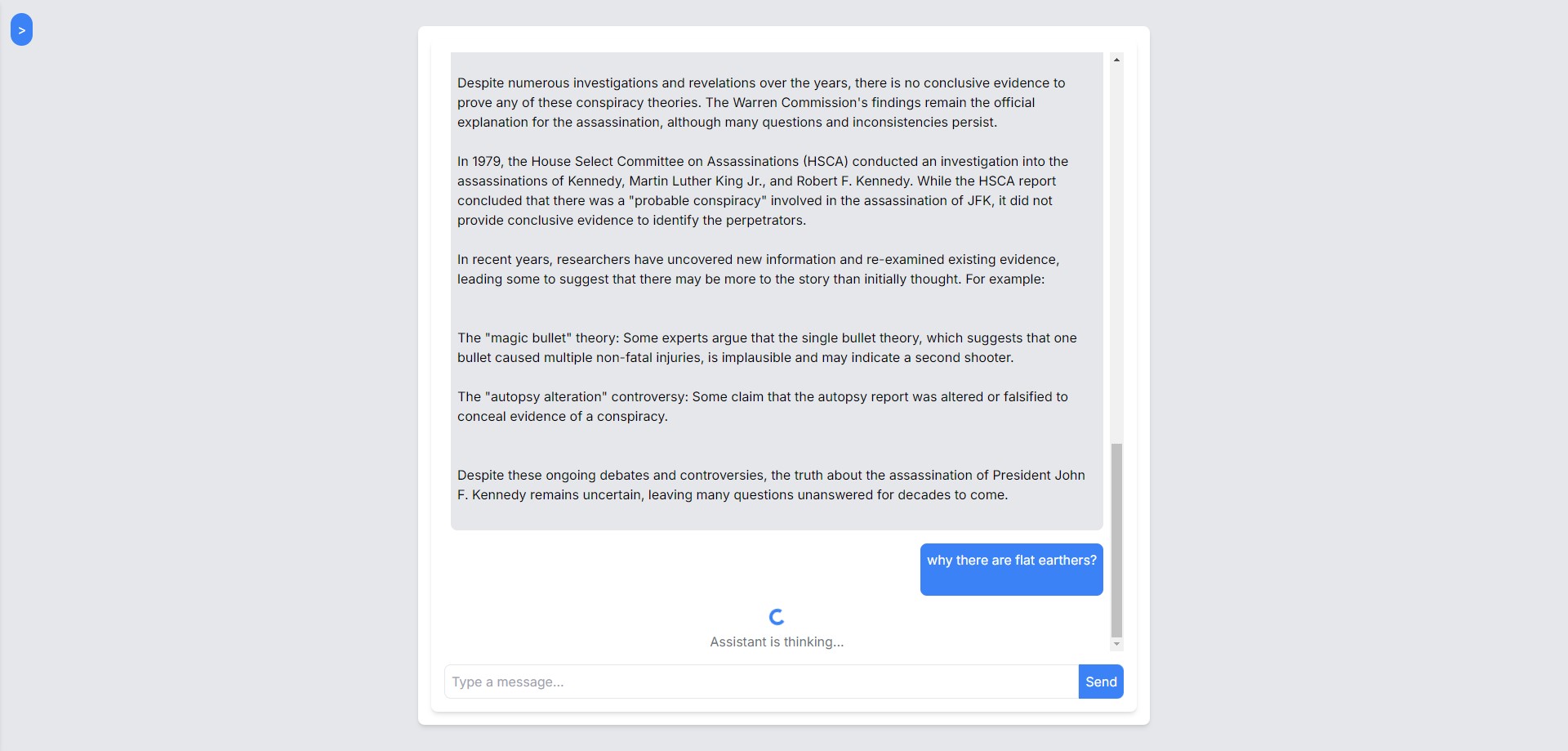

- Real-time chatbot using the Llama 3 (8b) model.

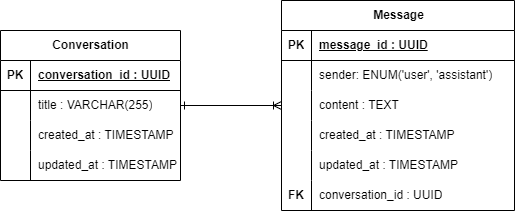

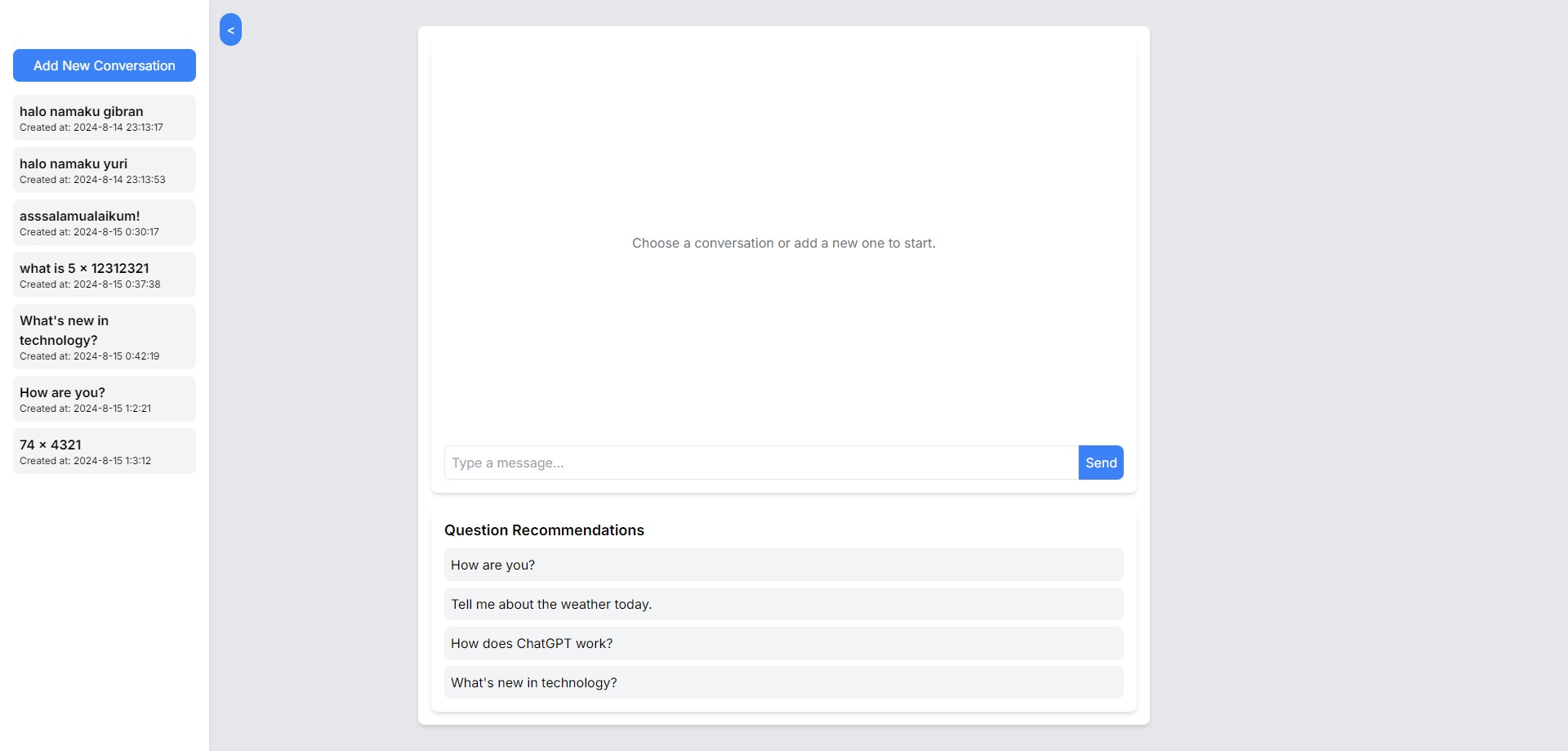

- Conversation and message history.

- Message recommendations to start a conversation.

- User-friendly interface with a clean and responsive design.

- Next.js (v14.2.5)

- TypeScript (v5)

- Tailwind CSS (v3.4.1)

- Meta Llama 3 (8b)

- Marked (latest version): A Markdown parser and compiler.

- Visual Studio Code: Code editor used for development.

- Git: Version control system.

- Laragon: Local development environment for PHP.

- Postman: API client for testing and developing APIs.

`main` is the main branch that will be used for deployment (production).

- `feat`: new feature for the user, not a new feature for a build script

- `fix`: bug fix for the user, not a fix to a build script

- `docs`: changes to the documentation

- `style`: formatting, missing semicolons, etc.; no production code change

- `refactor`: refactoring production code, e.g. renaming a variable

- `test`: adding missing tests, refactoring tests; no production code change

- `chore`: updating grunt tasks etc.; no production code change
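As an illustration of the commit prefixes above, here is a minimal TypeScript sketch that checks whether a commit message starts with one of the listed types. The function name `parseCommitType` is hypothetical, and the `<type>: <summary>` shape (a colon followed by a space) is an assumption; this repository does not necessarily enforce the convention with any tooling.

```typescript
// Commit types taken from the convention listed in this README.
const COMMIT_TYPES = ["feat", "fix", "docs", "style", "refactor", "test", "chore"] as const;
type CommitType = (typeof COMMIT_TYPES)[number];

// Hypothetical helper: returns the commit type when the message looks like
// "<type>: <summary>" and <type> is one of the listed types, otherwise null.
// The colon-plus-space separator is an assumption, not a documented rule.
function parseCommitType(message: string): CommitType | null {
  const match = /^([a-z]+): \S/.exec(message);
  if (match === null) return null;
  const candidate = match[1] ?? "";
  return COMMIT_TYPES.find((type) => type === candidate) ?? null;
}

console.log(parseCommitType("feat: add message history")); // prints "feat"
console.log(parseCommitType("update stuff")); // prints null
```

A check like this could run in a local Git hook or in CI, but that wiring is left out here.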

- Clone this repository (branch `main`) if you haven't already.

- Navigate to the `client` folder.

- Open the project folder in Visual Studio Code (`code .` in CLI).

- Open the terminal and run `npm install`.

- Run `npm run dev`.

- Open your browser and go to `localhost:3000`.

- Clone this repository (branch `main`) if you haven't already.

- Navigate to the `server` folder.

- Open the project folder in Visual Studio Code (`code .` in CLI).

- Copy `.env.example` to `.env` and fill in the necessary environment variables.

- Open the terminal and run `composer install`.

- Run `php artisan migrate`.

- Run `php artisan serve`.

- Open your browser and go to `localhost:8000`.

- If Ollama is installed but not yet running, and the `llama3` (8b) model has already been downloaded, open a new terminal (cmd).

- Execute `ollama serve`. Ollama should then be accessible at `localhost:11434`.
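Once Ollama is serving, one way to confirm that the model responds is to send a request to Ollama's `/api/chat` endpoint. The TypeScript sketch below is only an illustration under stated assumptions: the helper names `buildChatBody` and `askOllama` are hypothetical, the model tag `llama3` is assumed to match the downloaded model, and a runtime with a global `fetch` (for example Node.js 18 or later) is assumed. It is not necessarily how ChatEase itself talks to Ollama.

```typescript
// Assumptions: the default Ollama address from this README and the "llama3" model tag.
const OLLAMA_CHAT_URL = "http://localhost:11434/api/chat";
const MODEL = "llama3";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical helper: builds the JSON body for a non-streaming chat request.
function buildChatBody(messages: ChatMessage[]): string {
  return JSON.stringify({ model: MODEL, messages, stream: false });
}

// Hypothetical helper: sends the conversation to Ollama and returns the reply text.
async function askOllama(messages: ChatMessage[]): Promise<string> {
  const response = await fetch(OLLAMA_CHAT_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: buildChatBody(messages),
  });
  if (!response.ok) {
    throw new Error(`Ollama request failed with status ${response.status}`);
  }
  // With stream set to false, the reply arrives as a single JSON object.
  const data = (await response.json()) as { message: { content: string } };
  return data.message.content;
}
```

Calling `askOllama([{ role: "user", content: "Hello" }])` should resolve to the model's reply when the server is running. Passing earlier messages in the same array is how conversation context is carried between turns.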

- Fork this repository to your GitHub account.

- Clone the forked repository to your local machine.

- Create a new branch corresponding to the feature you want to work on or the bug you want to fix.

- Commit and push your changes to that branch (make sure not to make changes directly to the `main` branch).

- Create a pull request to the `main` branch of this repository.

- Wait for the reviewer to review and merge your pull request.

- If the reviewer requests changes, make the requested changes, commit, and push them to the same branch, then notify the reviewer that the changes have been made.

- Once your pull request is approved, it will be merged into the `main` branch, and the branch you created will be deleted (to avoid unused branches).

Feel free to contribute to this project by fixing existing bugs, adding new features, or improving documentation. Happy coding 🎉!

For this project, I am prioritizing the role of Backend Engineer. However, I am also very interested in and excited about Fullstack Engineering. If possible, I would love to work on both frontend and backend tasks to leverage my skills across the entire stack.

- Gibran Fasha Ghazanfar - LinkedIn