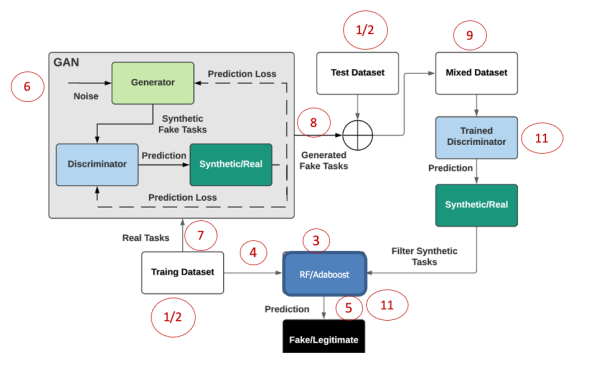

Fake task attacks are a critical threat to Mobile Crowdsensing (MCS) systems. They aim to clog the sensing servers of the MCS platform and to drain energy from participants’ smart devices. Typically, fake tasks are created with empirical models such as the CrowdSenSim tool. Recently, cybercriminals have begun to deploy more intelligent mechanisms to craft such attacks. Generative Adversarial Networks (GANs) are among the most powerful techniques for generating synthetic samples: a GAN learns from the entire training dataset and creates samples similar to it. This project uses a GAN to create fake tasks and evaluates how well fake task detection holds up against them.

- Prepare the dataset for training

- Implement the classic classifiers Adaboost and Random Forest (RF) and train them

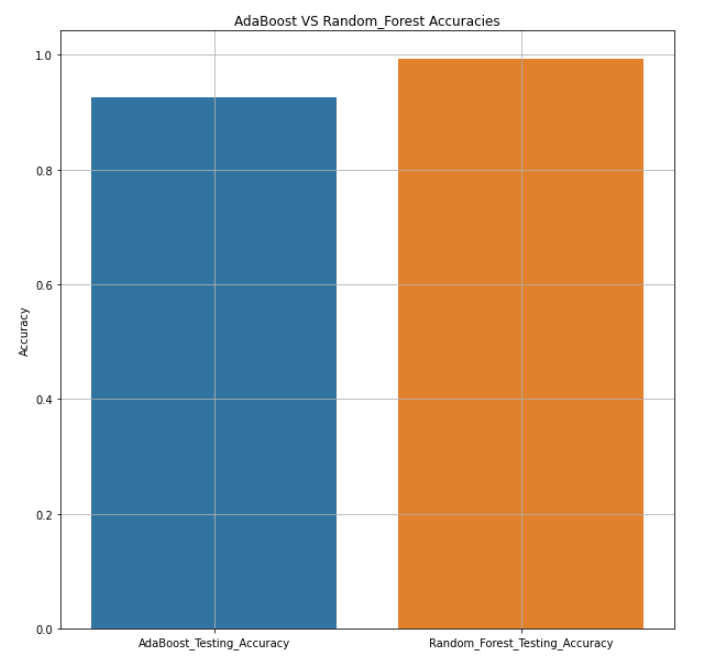

- Verify detection performance using the test dataset and compare the results

- Implement a Conditional GAN (CGAN) with Wasserstein loss [2].

- Train the CGAN on the training dataset

- After training, generate synthetic fake tasks with the CGAN's Generator network

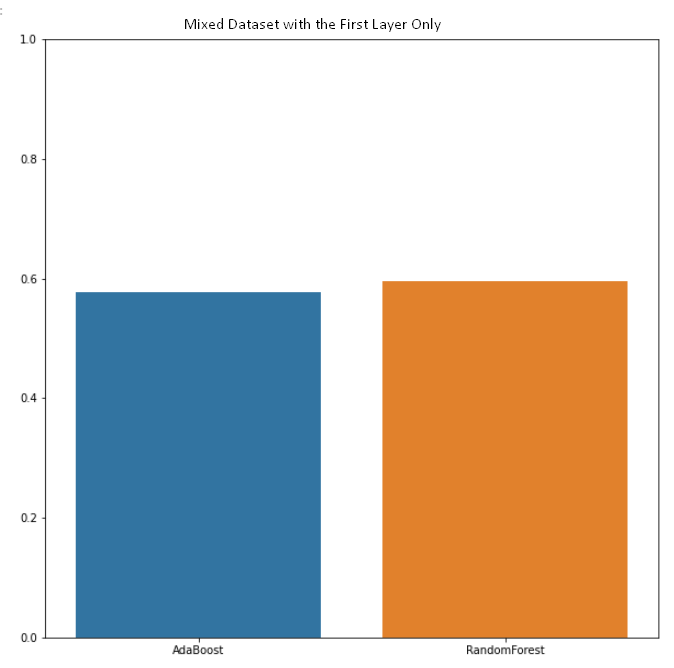
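The CGAN steps can be sketched in TensorFlow/Keras as below. This is a hedged sketch, not the project's implementation. Generator and critic both receive a one-hot class label concatenated to their input, following the conditioning idea of Mirza and Osindero. The Lipschitz constraint of the Wasserstein loss is enforced here with a gradient penalty (WGAN-GP), which is our substitution; the original WGAN uses weight clipping, and the project may use either. The losses implemented are

$$L_C = \mathbb{E}_{\tilde{x}\sim P_g}[C(\tilde{x}\mid y)] - \mathbb{E}_{x\sim P_r}[C(x\mid y)] + \lambda\,\mathbb{E}_{\hat{x}}\big[(\lVert\nabla_{\hat{x}} C(\hat{x}\mid y)\rVert_2 - 1)^2\big], \qquad L_G = -\mathbb{E}_{\tilde{x}\sim P_g}[C(\tilde{x}\mid y)]$$

where $\hat{x}$ is a random interpolate between a real and a generated sample. Feature count, layer sizes, learning rates, `LATENT_DIM`, and the random stand-in training data are all hypothetical.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)
N_FEATURES = 8      # hypothetical number of task features, scaled to [0, 1]
N_CLASSES = 2       # 0 = legitimate task, 1 = fake task
LATENT_DIM = 16     # hypothetical noise dimension
GP_WEIGHT = 10.0    # lambda in the critic loss
N_CRITIC = 5        # critic updates per generator update


def build_generator():
    noise = tf.keras.Input(shape=(LATENT_DIM,))
    label = tf.keras.Input(shape=(N_CLASSES,))            # one-hot condition
    h = tf.keras.layers.Concatenate()([noise, label])
    h = tf.keras.layers.Dense(64, activation="relu")(h)
    h = tf.keras.layers.Dense(64, activation="relu")(h)
    out = tf.keras.layers.Dense(N_FEATURES, activation="sigmoid")(h)  # matches [0, 1] features
    return tf.keras.Model([noise, label], out)


def build_critic():
    x = tf.keras.Input(shape=(N_FEATURES,))
    label = tf.keras.Input(shape=(N_CLASSES,))
    h = tf.keras.layers.Concatenate()([x, label])
    for _ in range(2):
        h = tf.keras.layers.Dense(64)(h)
        h = tf.keras.layers.LeakyReLU(0.2)(h)
    out = tf.keras.layers.Dense(1)(h)                     # unbounded score, no sigmoid
    return tf.keras.Model([x, label], out)


generator, critic = build_generator(), build_critic()
g_opt = tf.keras.optimizers.Adam(1e-4, beta_1=0.5, beta_2=0.9)
c_opt = tf.keras.optimizers.Adam(1e-4, beta_1=0.5, beta_2=0.9)


def gradient_penalty(real, fake, labels):
    # Penalise critic gradient norms that depart from 1 on random interpolates.
    eps = tf.random.uniform([tf.shape(real)[0], 1], 0.0, 1.0)
    interp = eps * real + (1.0 - eps) * fake
    with tf.GradientTape() as tape:
        tape.watch(interp)
        score = critic([interp, labels], training=True)
    grads = tape.gradient(score, interp)
    norm = tf.sqrt(tf.reduce_sum(tf.square(grads), axis=1) + 1e-12)
    return tf.reduce_mean(tf.square(norm - 1.0))


def train_step(real, labels):
    batch = tf.shape(real)[0]
    # Several critic updates (reusing the same real batch for simplicity).
    for _ in range(N_CRITIC):
        z = tf.random.normal([batch, LATENT_DIM])
        with tf.GradientTape() as tape:
            fake = generator([z, labels], training=True)
            c_loss = (tf.reduce_mean(critic([fake, labels], training=True))
                      - tf.reduce_mean(critic([real, labels], training=True))
                      + GP_WEIGHT * gradient_penalty(real, fake, labels))
        grads = tape.gradient(c_loss, critic.trainable_variables)
        c_opt.apply_gradients(zip(grads, critic.trainable_variables))
    # One generator update: raise the critic score of generated samples.
    z = tf.random.normal([batch, LATENT_DIM])
    with tf.GradientTape() as tape:
        fake = generator([z, labels], training=True)
        g_loss = -tf.reduce_mean(critic([fake, labels], training=True))
    grads = tape.gradient(g_loss, generator.trainable_variables)
    g_opt.apply_gradients(zip(grads, generator.trainable_variables))
    return float(c_loss), float(g_loss)


# Hypothetical stand-in for the scaled MCS training set.
rng = np.random.default_rng(0)
X_train = rng.random((512, N_FEATURES)).astype("float32")
y_train = rng.integers(0, N_CLASSES, size=512)
Y_train = np.eye(N_CLASSES, dtype="float32")[y_train]
dataset = (tf.data.Dataset.from_tensor_slices((X_train, Y_train))
           .shuffle(512, seed=0).batch(64))

for epoch in range(2):  # far too few epochs for real use; kept short for the sketch
    for real, labels in dataset:
        c_loss, g_loss = train_step(real, labels)


def generate_fake_tasks(n, label=1):
    """Sample n synthetic tasks from the trained generator, conditioned on `label`."""
    z = tf.random.normal([n, LATENT_DIM])
    cond = tf.one_hot([label] * n, N_CLASSES)
    return generator([z, cond], training=False).numpy()


synthetic_fake = generate_fake_tasks(100)
```

The training loop runs eagerly for clarity; wrapping `train_step` in `tf.function` would speed it up considerably.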

- Mix the generated fake tasks with the original test dataset to obtain a new test dataset

- Obtain Adaboost and RF detection performance using the new test dataset and compare the results

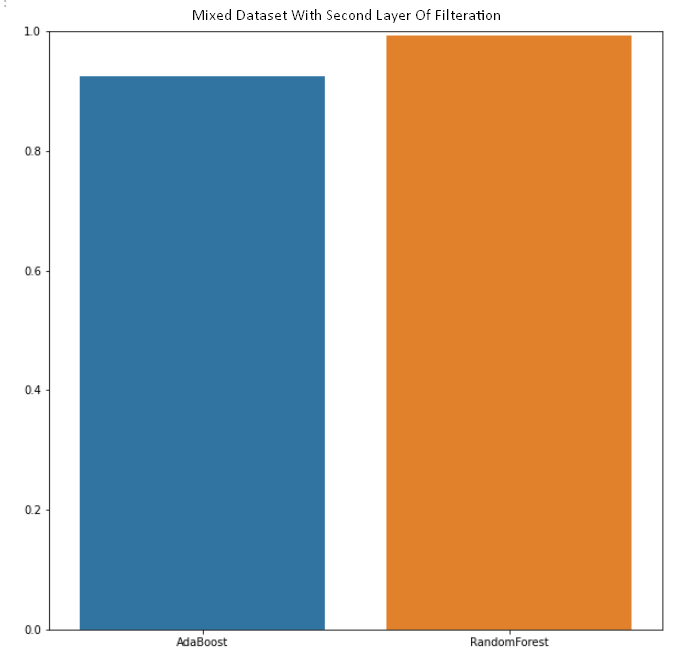
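Mixing generated tasks into the test set and re-evaluating the classifiers can be sketched as below. To keep the example self-contained, the generator output is replaced by a hypothetical stand-in: slightly perturbed copies of legitimate test tasks, labelled as fake (1). This mimics generated tasks that look real; in the project these rows would come from the trained CGAN Generator. The helper name `mix_test_set` is ours.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.metrics import accuracy_score

# Hypothetical stand-in data: label 1 = fake task, 0 = legitimate task.
X, y = make_classification(n_samples=2000, n_features=8, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Stand-in for generator output: near-copies of legitimate test tasks, labelled fake.
rng = np.random.default_rng(0)
legit = X_test[y_test == 0]
X_gen = legit + rng.normal(scale=0.05, size=legit.shape)
y_gen = np.ones(len(X_gen), dtype=int)


def mix_test_set(X_test, y_test, X_gen, y_gen, seed=0):
    """Append generated fake tasks to the original test set and shuffle."""
    X_mixed = np.vstack([X_test, X_gen])
    y_mixed = np.concatenate([y_test, y_gen])
    idx = np.random.default_rng(seed).permutation(len(y_mixed))
    return X_mixed[idx], y_mixed[idx]


X_mixed, y_mixed = mix_test_set(X_test, y_test, X_gen, y_gen)

# Classifiers are trained on the original training set only.
results = {}
models = {"Adaboost": AdaBoostClassifier(n_estimators=100, random_state=0),
          "RF": RandomForestClassifier(n_estimators=100, random_state=0)}
for name, clf in models.items():
    clf.fit(X_train, y_train)
    results[name] = (accuracy_score(y_test, clf.predict(X_test)),
                     accuracy_score(y_mixed, clf.predict(X_mixed)))
    print(f"{name}: original={results[name][0]:.3f}  mixed={results[name][1]:.3f}")
```

Because the stand-in fake tasks sit right next to legitimate ones, both classifiers label most of them as legitimate and accuracy on the mixed set drops, which is the effect this step is meant to measure.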

- Use the Discriminator as the first-level classifier and RF/Adaboost as the second-level classifier (cascade framework)
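The cascade can be sketched as a routing function: the first level scores each task as real or generated, tasks flagged as generated are labelled fake (1), and only the remaining tasks reach RF/Adaboost. This is a sketch under assumptions. The helper `cascade_predict` and the 0.5 threshold are hypothetical, and a WGAN critic outputs an unbounded score, so its threshold would have to be chosen on validation data. For a self-contained demo, the CGAN discriminator is replaced by a Random Forest trained to separate real rows from a deliberately crude stand-in generator (uniform rows inside the feature range); real CGAN output would be much harder to detect.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score


def cascade_predict(real_score, clf, X, threshold=0.5):
    """Level 1: real_score(X) gives a per-row score, higher = more likely real.
    Rows below `threshold` are treated as generated fake tasks (label 1).
    Level 2: the remaining rows are classified by `clf` (RF or Adaboost)."""
    scores = np.asarray(real_score(X)).ravel()
    flagged = scores < threshold
    y_pred = np.ones(len(X), dtype=int)        # default label: fake task
    if (~flagged).any():
        y_pred[~flagged] = clf.predict(X[~flagged])
    return y_pred, flagged


# ---- Hypothetical demo data (stand-ins, not the project's data) ----
X, y = make_classification(n_samples=2000, n_features=8, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)
rng = np.random.default_rng(0)
lo, hi = X_train.min(axis=0), X_train.max(axis=0)


def stand_in_generator(n):
    # Crude stand-in for the CGAN generator: uniform rows in the feature bounding box.
    return rng.uniform(lo, hi, size=(n, X_train.shape[1]))


# Second-level classifier, trained on the original training set.
clf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_train, y_train)

# Stand-in first-level "discriminator": real (1) vs generated (0).
X_disc = np.vstack([X_train, stand_in_generator(len(X_train))])
y_disc = np.concatenate([np.ones(len(X_train)), np.zeros(len(X_train))])
disc = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_disc, y_disc)

# Mixed test set: original test rows plus generated rows labelled as fake.
X_gen = stand_in_generator(len(X_test) // 2)
X_mixed = np.vstack([X_test, X_gen])
y_mixed = np.concatenate([y_test, np.ones(len(X_gen), dtype=int)])

acc_mixed = accuracy_score(y_mixed, clf.predict(X_mixed))
y_cascade, flagged = cascade_predict(lambda A: disc.predict_proba(A)[:, 1], clf, X_mixed)
acc_cascade = accuracy_score(y_mixed, y_cascade)
print(f"RF only on mixed set: {acc_mixed:.3f}  cascade: {acc_cascade:.3f}")
```

Keeping the first level as a plain scoring callable means the same routing works whether the score comes from a sigmoid discriminator or from a thresholded Wasserstein critic.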

```bash
pip install numpy
pip install tensorflow-gpu==2.9.1
pip install pandas
pip install seaborn
pip install matplotlib
pip install scikit-learn
pip install imbalanced-learn
```

| Model | Original test set | Mixed without discriminator | Cascade framework |
|---|---|---|---|
| Adaboost | 0.92 | 0.575 | 0.926 |
| Random Forest | 0.993 | 0.590 | 0.993 |

The tasks produced by the generator are robust and successfully fool the classic ML algorithms: because the generator is trained to produce tasks very close to real ones, the models cannot tell them apart, and accuracy drops from 0.92 to 0.575 for Adaboost and from 0.993 to 0.590 for Random Forest. In the cascade approach, the discriminator helps the models by filtering out the fake tasks first; after filtering, accuracy rises again to 0.926 for Adaboost and 0.993 for Random Forest. These values are approximately the same as before mixing, which indicates that the discriminator filtered out the generated fake tasks effectively.

- Conditional GAN implementation in Keras: https://keras.io/examples/generative/conditional_gan/

- DCGAN implementation in TensorFlow: https://www.tensorflow.org/tutorials/generative/dcgan

- GAN tutorial: https://towardsdatascience.com/generative-adversarial-network-gan-for-dummies-a-step-bystep-tutorial-fdefff170391

- Chen, Zhiyan, and Burak Kantarci. "Generative Adversarial Network-Driven Detection of Adversarial Tasks in Mobile Crowdsensing." arXiv preprint arXiv:2202.07802 (2022).

- Mirza, Mehdi, and Simon Osindero. "Conditional generative adversarial nets." arXiv preprint arXiv:1411.1784 (2014).