🕷️ ScrapeWave is a starter project for any scraping task where you want to monitor progress and save the data after scraping.

This project uses the following technologies.

- Server

- ViteExpress - @vitejs integration module for @expressjs.

- SQLite - SQLite client wrapper around sqlite3 for Node.js applications with SQL-based migrations API written in TypeScript.

- Puppeteer - Node.js API for Chrome

- Socket.IO (https://github.com/socketio/socket.io) - Realtime application framework (Node.js server).

- Other packages worth mentioning:

- axios

- fast-csv

- UI

- Docker - Accelerated Container Application Development

Prerequisites

- Node - ^21.7.2

- Docker - LTS

- Chromium - Needed to run Puppeteer. When deploying, it is automatically installed in the image.

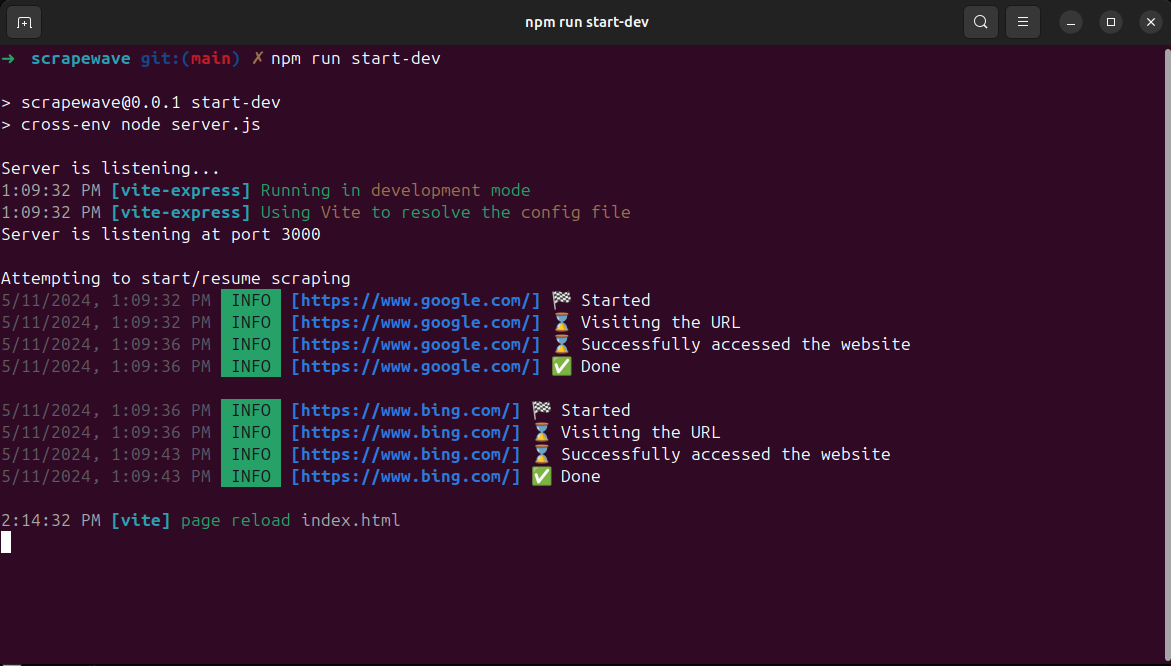

Steps to start development.

- Clone the 🕷️ ScrapeWave repository into your local system.

- Install dependencies using

    npm install

- And start the server using

    npm run start-dev

The recommended way to extend ScrapeWave is as follows.

Open /server.js and update the following statement to add any fields you want to save.

    await database.exec(
      `CREATE TABLE IF NOT EXISTS ${databaseTableName} (
        'id' INTEGER PRIMARY KEY,
        'url' VARCHAR(20) NOT NULL UNIQUE,
        'status' TEXT(50) NOT NULL DEFAULT 'not_started',
        'error' VARCHAR(1000))`
    );

Once this is done, you need to update a few more places:

- For the import function to work, you need to add the new fields to the addUrlToDb function. Open ./server/databaseScript.js, find the addUrlToDb function, and update this code block to reflect the new fields:

    await database.run(
      'INSERT OR IGNORE INTO "domain" (url, status, error) VALUES (:url, :status, :error)',
      {
        ':url': rows[i].url,
        ':status': rows[i].status ? rows[i].status : 'not_started',
        ':error': rows[i].error ? rows[i].error : '',
      }
    );

- Visit ./server/model.js and update the class to include all the new fields you just added to the database.
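One way to keep the table definition and the import statement from drifting apart is to describe the extra columns once and derive both SQL strings from that description. The following is a minimal sketch under assumptions: the `title` column and the helpers `buildCreateTableSql` and `buildInsert` are hypothetical and do not exist in ScrapeWave; only the base columns and the `"domain"` insert are taken from the snippets above.

```javascript
// Sketch only: the `title` column and both helper names are hypothetical.
const extraFields = [{ name: 'title', type: 'TEXT', fallback: '' }];

// Builds the CREATE TABLE statement: base columns plus any extra fields.
function buildCreateTableSql(tableName, fields = extraFields) {
  const columns = [
    "'id' INTEGER PRIMARY KEY",
    "'url' VARCHAR(20) NOT NULL UNIQUE",
    "'status' TEXT(50) NOT NULL DEFAULT 'not_started'",
    "'error' VARCHAR(1000)",
    ...fields.map((f) => `'${f.name}' ${f.type}`),
  ];
  return `CREATE TABLE IF NOT EXISTS ${tableName} (\n  ${columns.join(',\n  ')}\n)`;
}

// Builds the INSERT statement and its named parameters for one imported row.
function buildInsert(row, fields = extraFields) {
  const names = ['url', 'status', 'error', ...fields.map((f) => f.name)];
  const placeholders = names.map((n) => `:${n}`);
  const sql = `INSERT OR IGNORE INTO "domain" (${names.join(', ')}) VALUES (${placeholders.join(', ')})`;
  const params = {
    ':url': row.url,
    ':status': row.status ? row.status : 'not_started',
    ':error': row.error ? row.error : '',
  };
  // Extra fields fall back to their default when the row has no value.
  for (const f of fields) {
    params[`:${f.name}`] = row[f.name] ? row[f.name] : f.fallback;
  }
  return { sql, params };
}
```

With helpers like these, the existing calls would become `await database.exec(buildCreateTableSql(databaseTableName))` and `await database.run(sql, params)`, so adding a column means editing only the `extraFields` list.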

Visit ./server/puppetter-scripts/init.js. The async startProcessing() method is where the actual scraping happens.

Add the logic for the scraping you want to do to this class.

When you add the logic, it is recommended to add logging so that you can see exactly what is happening.

You can do that like this:

- To log an informational message to the console, use

    utils.info(this.urlData.url, `⌛ Visiting the URL`);

- To log an error to the console, use

    utils.error(this.urlData.url, `Error occurred`);

./server/model.js has two methods for updating the status and error fields, which can be used like this:

    // Update status
    await urlItem.setStatus('completed');

    // Update Error field
    await urlItem.setError(`Error: ${e}`);

Extend the model class to have setters for other fields.
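As an illustration of how an extra setter and the status updates could fit together, here is a minimal sketch. It is not the actual contents of ./server/model.js: the `UrlItem` class shape, the `updateField` helper, the `setTitle` setter, the `title` column, the `processUrl` function, and the `in_progress` and `failed` status values are all assumptions. The only thing it expects from the database object is a `run(sql, params)` method, as used elsewhere in this README.

```javascript
// Sketch only: class shape, setTitle, processUrl and the 'in_progress' /
// 'failed' statuses are hypothetical, not taken from ScrapeWave's source.
class UrlItem {
  constructor(database, row) {
    this.database = database; // must expose run(sql, params)
    this.id = row.id;
    this.url = row.url;
    this.status = row.status || 'not_started';
    this.error = row.error || '';
    this.title = row.title || '';
  }

  // Persists one column, then mirrors the value on the instance.
  // `column` only ever comes from the setters below, never from user input.
  async updateField(column, value) {
    await this.database.run(
      `UPDATE "domain" SET ${column} = :value WHERE id = :id`,
      { ':value': value, ':id': this.id }
    );
    this[column] = value;
  }

  async setStatus(status) { return this.updateField('status', status); }
  async setError(error) { return this.updateField('error', error); }
  async setTitle(title) { return this.updateField('title', title); } // new field
}

// Marks progress, runs the supplied scrape function, and records the outcome.
async function processUrl(urlItem, scrape, log = console) {
  await urlItem.setStatus('in_progress');
  try {
    const { title } = await scrape(urlItem.url);
    await urlItem.setTitle(title);
    await urlItem.setStatus('completed');
  } catch (e) {
    log.error(urlItem.url, `Error: ${e.message}`);
    await urlItem.setError(`Error: ${e}`);
    await urlItem.setStatus('failed');
  }
  return urlItem;
}
```

Passing the scrape function in as an argument keeps the status bookkeeping separate from the Puppeteer logic, which also makes the flow easy to exercise with a stub database.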

The recommended way to deploy this in the cloud is via Docker.

Create an image from your current directory:

    docker build -t bhanu/scrapewave:latest .

Here, bhanu is the Docker Hub username and scrapewave is the name of the image.

Once you have created the image, log in to Docker Hub from your local system and push the image so that it is accessible from any remote machine.

You can do that using:

    docker push bhanu/scrapewave:latest

Now your scraper is ready to be deployed to any cloud machine.

Once you have SSH'ed into your machine, you need to install two pieces of software: Docker and Nginx.

To install Docker follow the steps here: https://www.digitalocean.com/community/questions/how-to-install-and-run-docker-on-digitalocean-dorplet

To install Nginx follow the steps here: https://www.digitalocean.com/community/tutorials/how-to-install-nginx-on-ubuntu-18-04

Once you have successfully installed both, you can use docker run -dit --name -p :3000 krenovate/data-validator:latest to create a container. For example:

docker run -dit -v instance-1:/usr/src/app/db --name instance-1 -p 8080:3000 --restart on-failure bhanu/scrapewave:latest

This command does quite a few things:

- Creates a container using the latest tag of bhanu/scrapewave.

- Mounts the SQLite database directory on the instance-1 volume, so your data persists even if you recreate the container, as long as you reuse that volume.

- If the container exits with an error, Docker tries to restart it so that your scraping doesn't stop.
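If you want to run more than one instance on the same machine, each one needs its own container name, volume, and host port. The sketch below assembles the same `docker run` arguments as the command above for several instances. The `dockerRunArgs` helper and its option names are hypothetical, and the port numbering is only an example.

```javascript
// Sketch only: dockerRunArgs and its option names are hypothetical helpers.
function dockerRunArgs({ instance, hostPort, image = 'bhanu/scrapewave:latest' }) {
  return [
    'run', '-dit',
    '-v', `${instance}:/usr/src/app/db`, // named volume holding the SQLite files
    '--name', instance,                  // container name
    '-p', `${hostPort}:3000`,            // host port -> container port 3000
    '--restart', 'on-failure',           // restart when the container exits with an error
    image,
  ];
}

// Two instances on consecutive host ports, starting at 8080.
const commands = [1, 2].map((n) =>
  ['docker', ...dockerRunArgs({ instance: `instance-${n}`, hostPort: 8079 + n })].join(' ')
);

console.log(commands.join('\n'));
```

The first generated command is identical to the one shown above; the second differs only in the instance name, volume, and host port. The argument array can also be passed directly to Node's `child_process.spawn('docker', args)` instead of being joined into a string.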