This framework provides implementations for the algorithms and environments discussed in:

Negative Update Intervals in Deep Multi-Agent Reinforcement Learning

Gregory Palmer, Rahul Savani, Karl Tuyls.

Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems (AAMAS)

[ arXiv ]

Lenient Multi-Agent Deep Reinforcement Learning

Gregory Palmer, Karl Tuyls, Daan Bloembergen, Rahul Savani.

Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems (AAMAS)

[ ACM Digital Library | arXiv ]

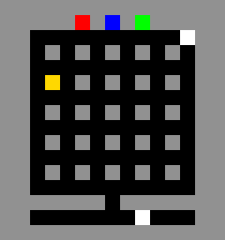

Layout 1: Observable Irrevocable Decision

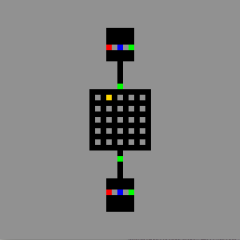

Layout 2: Irrevocable Decisions in Seclusion

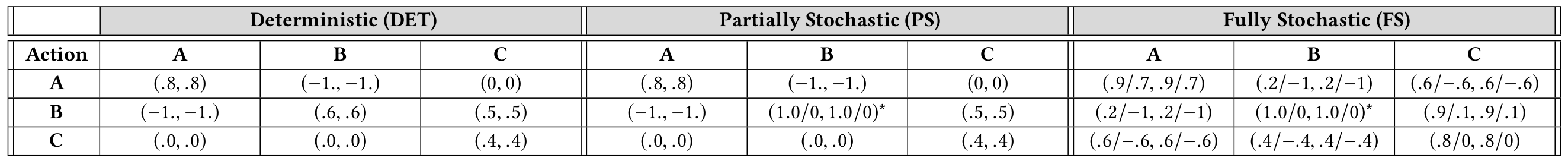

Reward structures for the Deterministic (DET), Partially Stochastic (PS) and Fully Stochastic (FS) Apprentice Firemen Games, to be interpreted as rewards for (Agent 1, Agent 2). For (B,B) within PS and FS, a reward of 1.0 is yielded on 60% of occasions. Rewards are sparse: they are received only at the end of an episode, after the fire has been extinguished.
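As an illustration of the stochastic (B,B) outcome described above, the following minimal sketch samples a reward that is 1.0 on 60% of occasions. The function name `sample_bb_reward` and the `low` value used on the remaining 40% of occasions are assumptions made for this example; the actual alternative reward is defined by the reward tables in the figure, not by this snippet.

```python
import random


def sample_bb_reward(rng, p_high=0.6, high=1.0, low=0.0):
    """Return `high` with probability `p_high`, otherwise `low`.

    `low` is a placeholder assumption; consult the reward tables
    for the value actually used on the remaining occasions.
    """
    return high if rng.random() < p_high else low


# Seeded generator so the demonstration is repeatable.
rng = random.Random(0)
samples = [sample_bb_reward(rng) for _ in range(10_000)]

# Empirical share of episodes that paid out the high reward.
fraction_high = samples.count(1.0) / len(samples)
print(f"High reward on {fraction_high:.1%} of sampled occasions")
```

Because the reward is only delivered at the end of an episode, an agent sees one such sample per completed episode.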

The --environment flag specifies the layout (V{1,2}), the number of civilians (C{INT}) and the reward structure ({DET,PS,FS}):

python apprentice_firemen.py --environment ApprenticeFiremen_V{1,2}_C{INT}_{DET,PS,FS}

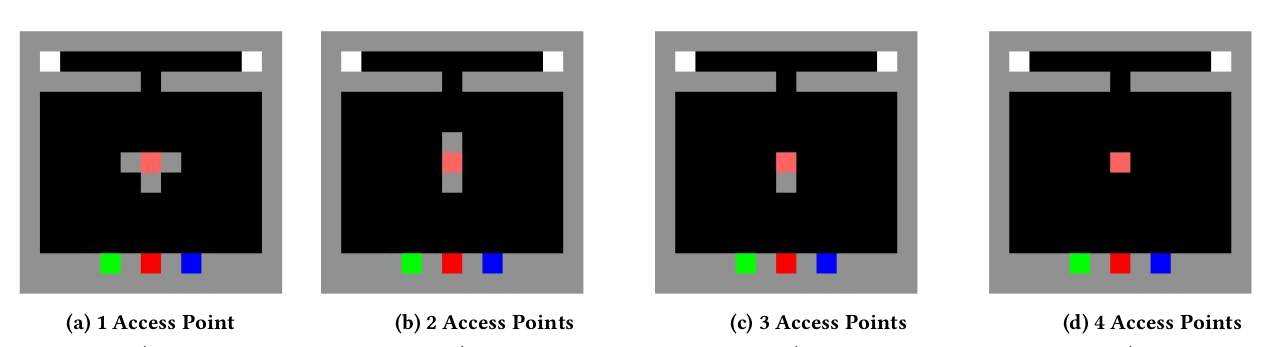

For Layout 3 the number of access points must be specified:

python apprentice_firemen.py --environment ApprenticeFiremen_V{1,2}_C{INT}_{DET,PS,FS}_{1,2,3,4}AP
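To make the naming scheme concrete, the sketch below splits an environment string into its parts. The pattern is inferred from the two command templates above; `parse_environment` is a hypothetical helper written for this example and is not part of the framework, which may parse the flag differently.

```python
import re

# Pattern inferred from the command templates; the optional suffix
# carries the number of access points (e.g. "_3AP").
ENV_PATTERN = re.compile(
    r"^ApprenticeFiremen_V(?P<layout>\d+)_C(?P<civilians>\d+)"
    r"_(?P<reward>DET|PS|FS)(?:_(?P<access_points>[1-4])AP)?$"
)


def parse_environment(name):
    """Split an environment string into layout, civilians, reward, access points."""
    match = ENV_PATTERN.match(name)
    if match is None:
        raise ValueError(f"Unrecognised environment string: {name!r}")
    fields = match.groupdict()
    access_points = fields["access_points"]
    return {
        "layout": int(fields["layout"]),
        "civilians": int(fields["civilians"]),
        "reward": fields["reward"],
        # None when no "_{N}AP" suffix is present.
        "access_points": int(access_points) if access_points else None,
    }


print(parse_environment("ApprenticeFiremen_V1_C2_DET"))
print(parse_environment("ApprenticeFiremen_V2_C0_FS_3AP"))
```

The layout is matched as any integer rather than only 1 or 2, since further layout config files can be added.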

Further layout config files can be added under:

./env/ApprenticeFiremen/

- CMOTP_V1 = Original

- CMOTP_V2 = Narrow Passages

- CMOTP_V3 = Stochastic Reward

Further layout config files can be added under:

./env/cmotp/

Simply copy one of the existing envconfig files and make your own amendments.
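The copy step can also be scripted. The sketch below duplicates a config file while refusing to overwrite an existing one. The helper `clone_envconfig` and the file names are hypothetical; the demonstration runs in a temporary directory, so substitute real paths under the env directory when using it.

```python
import shutil
import tempfile
from pathlib import Path


def clone_envconfig(source, target):
    """Copy an existing envconfig file to a new name without overwriting."""
    source, target = Path(source), Path(target)
    if not source.is_file():
        raise FileNotFoundError(source)
    if target.exists():
        raise FileExistsError(target)
    shutil.copyfile(source, target)
    return target


# Demonstration with placeholder files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    existing = Path(tmp) / "existing_envconfig.py"  # hypothetical file name
    existing.write_text("# placeholder layout\n")
    new_config = clone_envconfig(existing, Path(tmp) / "my_envconfig.py")
    copied_text = new_config.read_text()

print(copied_text)
```

After copying, edit the new file to make your own amendments.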

Agents can be trained via:

python cmotp.py --environment CMOTP_V1 --render False

To train lenient, hysteretic or NUI (negative update intervals) agents, set the --madrl flag:

python {apprentice_firemen, cmotp}.py --madrl {hysteretic, leniency, nui}
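When comparing the three agent types across both domains, it can help to generate the command lines programmatically. The sketch below only builds argument lists from the flags shown in this README; `build_command` is a hypothetical helper, and combining --madrl with --environment in a single call is an assumption.

```python
import itertools

SCRIPTS = ("apprentice_firemen.py", "cmotp.py")
MADRL_VARIANTS = ("hysteretic", "leniency", "nui")


def build_command(script, madrl, environment=None):
    """Return the argument list for one training run."""
    if script not in SCRIPTS:
        raise ValueError(f"Unknown script: {script!r}")
    if madrl not in MADRL_VARIANTS:
        raise ValueError(f"Unknown --madrl value: {madrl!r}")
    command = ["python", script, "--madrl", madrl]
    if environment is not None:
        # Assumption: both flags may be passed together.
        command += ["--environment", environment]
    return command


# One command per (script, agent type) combination.
commands = [
    build_command(script, madrl)
    for script, madrl in itertools.product(SCRIPTS, MADRL_VARIANTS)
]
for command in commands:
    print(" ".join(command))
```

Each list can be passed to a process launcher of your choice, or printed and pasted into a shell.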

Hyperparameters can be adjusted in:

./config.py

Results from individual runs can be found under:

./Results/

To run the above domains using your own agents, modify the following sections in:

./main.py

# !--- Import your agent class here ---!
from agent import Agent
# !--- Import your agent class here ---!

# !--- Store agent instances in agents list ---!
# Example:
agents = []
config = Config(env.dim, env.out, madrl=FLAGS.madrl, gpu=FLAGS.processor)
# Agents are instantiated
for i in range(FLAGS.agents):
    agent_config = deepcopy(config)
    agents.append(Agent(agent_config))  # Init agent instances
# !--- Store agent instances in agents list ---!

# !--- Get actions from each agent ---!
actions.append(agent.move(observation))
# !--- Get actions from each agent ---!

# !--- Pass feedback to each agent ---!
for agent, o, r in zip(agents, observations, rewards):
    agent.feedback(r, t, o)
# !--- Pass feedback to each agent ---!
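The calls above imply that a custom agent needs a constructor taking a config object, a `move(observation)` method and a `feedback(r, t, o)` method. The following is a minimal sketch of such a class, not the framework's own agent. It assumes that `t` is a terminal flag, that actions are integer indices, and that the config exposes the number of actions as `n_actions`; that attribute name is hypothetical, so adapt it to the fields of the real config.

```python
import random
from types import SimpleNamespace


class RandomAgent:
    """Hypothetical agent exposing the move/feedback calls used in main.py."""

    def __init__(self, config, seed=None):
        # Assumption: the config carries the action count as `n_actions`.
        self.n_actions = config.n_actions
        self.rng = random.Random(seed)
        self.episode_return = 0.0
        self.returns = []

    def move(self, observation):
        """Pick a uniformly random action index, ignoring the observation."""
        return self.rng.randrange(self.n_actions)

    def feedback(self, reward, terminal, observation):
        """Accumulate reward; store the return when the episode ends."""
        self.episode_return += reward
        if terminal:  # assumption: `t` marks the end of an episode
            self.returns.append(self.episode_return)
            self.episode_return = 0.0


# Stand-in config for demonstration purposes only.
agent = RandomAgent(SimpleNamespace(n_actions=5), seed=0)
action = agent.move(observation=None)
agent.feedback(1.0, True, None)
print(action, agent.returns)
```

A learning agent would replace the random choice in `move` with its policy and use `feedback` to store transitions and update its network.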