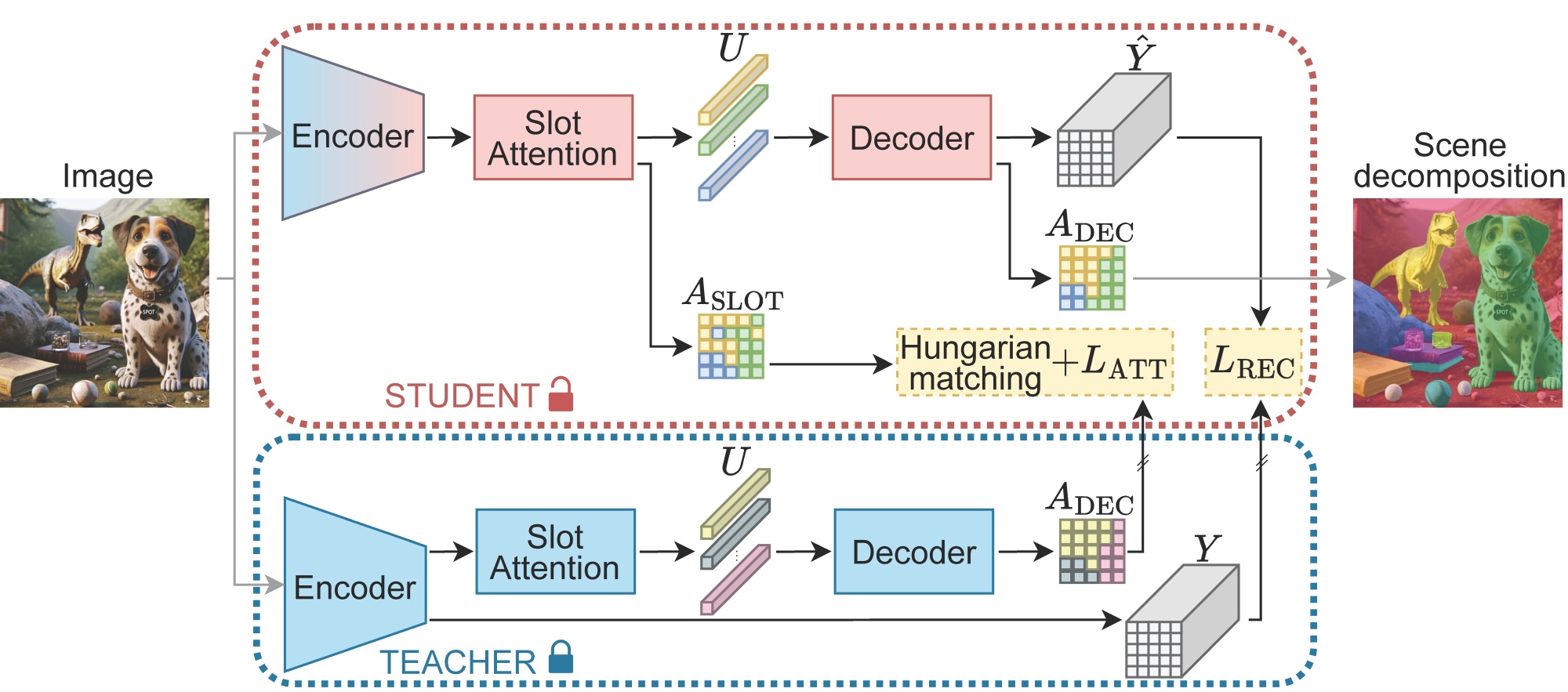

SPOT: Self-Training with Patch-Order Permutation for Object-Centric Learning with Autoregressive Transformers

[CVPR 2024 Highlight][paper][arXiv]

conda create -n spot python=3.9.16

conda activate spot

pip install -r requirements.txt --extra-index-url https://download.pytorch.org/whl/cu117

All experiments run on a single GPU.

Download the COCO dataset (2017 Train images, 2017 Val images, 2017 Train/Val annotations) from here and place the files in the following structure:

COCO2017
├── annotations
│   ├── instances_train2017.json
│   ├── instances_val2017.json
│   └── ...
├── train2017
│   ├── 000000000009.jpg
│   ├── ...
│   └── 000000581929.jpg
└── val2017
    ├── 000000000139.jpg
    ├── ...
    └── 000000581781.jpg

Stage 1: Train SPOT Teacher for 50 epochs on COCO:

python train_spot.py --dataset coco --data_path /path/to/COCO2017 --epochs 50 --num_slots 7 --train_permutations random --eval_permutations standard --log_path /path/to/logs/spot_teacher_coco

You can monitor the training progress with TensorBoard:

tensorboard --logdir /path/to/logs/spot_teacher_coco --port=16000
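Stage 1 reads the dataset from the COCO2017 layout described earlier, so it may be worth checking that layout before starting a 50-epoch run. The following is a minimal sketch, not part of the SPOT repository: the function name `missing_entries` and the list `EXPECTED_COCO` are hypothetical, and the list only covers the entries named explicitly in the tree.

```python
from pathlib import Path

# Entries copied from the COCO2017 tree in this README.
# The names below are illustrative and do not exist in the SPOT repository.
EXPECTED_COCO = [
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
    "train2017",
    "val2017",
]


def missing_entries(root, expected=EXPECTED_COCO):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]
```

Calling `missing_entries("/path/to/COCO2017")` returns an empty list when every listed file and folder is present. Passing a different `expected` list would let the same check cover the VOC or MOVi layouts.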

Stage 2: Train SPOT (student) for 50 epochs on COCO (this produces the final SPOT model):

python train_spot_2.py --dataset coco --data_path /path/to/COCO2017 --epochs 50 --num_slots 7 --train_permutations random --eval_permutations standard --teacher_train_permutations random --teacher_eval_permutations random --teacher_checkpoint_path /path/to/logs/spot_teacher_coco/TIMESTAMP/checkpoint.pt.tar --log_path /path/to/logs/spot_coco

If you are interested in the MAE encoder, download the pre-trained ViT-Base weights from here and add:

--which_encoder mae_vitb16 --pretrained_encoder_weights mae_pretrain_vit_base.pth --lr_main 0.0002 --lr_min 0.00004
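The checkpoint paths in these commands contain a `TIMESTAMP` placeholder, which suggests that each run writes into its own subdirectory of `--log_path`. Under that assumption, a small helper can locate the most recent checkpoint instead of copying the directory name by hand. This is only a sketch: `latest_checkpoint` is a hypothetical name, and "most recent" is decided here by file modification time rather than by parsing the directory name.

```python
from pathlib import Path


def latest_checkpoint(log_dir, filename="checkpoint.pt.tar"):
    """Return the newest <log_dir>/<run>/<filename> by modification time, or None."""
    # Assumption: one subdirectory per run, each holding a single checkpoint file.
    candidates = [p for p in Path(log_dir).glob(f"*/{filename}") if p.is_file()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

For example, `latest_checkpoint("/path/to/logs/spot_teacher_coco")` could supply the value for `--teacher_checkpoint_path`.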

Download pretrained SPOT model on COCO.

| mBOi | mBOc | Download |
|---|---|---|
| 34.9 | 44.8 | Checkpoint |
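The table reports mBOi and mBOc, which presumably stand for mean best overlap measured against instance masks and class (semantic) masks respectively, expressed as percentages. Assuming the usual definition, each ground-truth mask is matched to the predicted mask with the highest IoU, and those best IoU values are averaged. The sketch below illustrates that definition on masks represented as sets of pixel indices; it is not the evaluation code of this repository, which may average per image or handle background differently.

```python
def iou(a, b):
    """Intersection over union of two masks given as sets of pixel indices."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def mean_best_overlap(gt_masks, pred_masks):
    """Average, over ground-truth masks, of the best IoU with any predicted mask."""
    if not gt_masks:
        return 0.0
    best = [max((iou(gt, pred) for pred in pred_masks), default=0.0)
            for gt in gt_masks]
    return sum(best) / len(best)


# Toy example: two ground-truth objects, three predicted slots.
gt = [{0, 1, 2, 3}, {4, 5}]
pred = [{0, 1, 2}, {4, 5, 6, 7}, {8}]
print(mean_best_overlap(gt, pred))  # best IoUs are 0.75 and 0.5, so this prints 0.625
```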

Evaluate SPOT on COCO aggregating all sequence permutations:

python eval_spot.py --dataset coco --data_path /path/to/COCO2017 --num_slots 7 --eval_permutations all --checkpoint_path /path/to/logs/spot_coco/TIMESTAMP/checkpoint.pt.tar

You can also train the baseline, SPOT without self-training and without sequence permutation (essentially a DINOSAUR re-implementation), for 100 epochs on COCO:

python train_spot.py --dataset coco --data_path /path/to/COCO2017 --epochs 100 --num_slots 7 --train_permutations standard --log_path /path/to/logs/spot_wost_wosq_coco

Download the PASCAL VOC 2012 dataset from http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar, extract the files, and copy trainaug.txt into VOCdevkit/VOC2012/ImageSets/Segmentation. The final structure should be the following:

VOCdevkit
└── VOC2012
    ├── ImageSets
    │   └── Segmentation
    │       ├── train.txt
    │       ├── trainaug.txt
    │       ├── trainval.txt
    │       └── val.txt
    ├── JPEGImages
    │   ├── 2007_000027.jpg
    │   ├── ...
    │   └── 2012_004331.jpg
    ├── SegmentationClass
    │   ├── 2007_000032.png
    │   ├── ...
    │   └── 2011_003271.png
    └── SegmentationObject
        ├── 2007_000032.png
        ├── ...
        └── 2011_003271.png

Stage 1: Train SPOT Teacher for 560 epochs on VOC:

python train_spot.py --dataset voc --data_path /path/to/VOCdevkit/VOC2012 --epochs 560 --num_slots 6 --train_permutations random --eval_permutations standard --log_path /path/to/logs/spot_teacher_voc

Stage 2: Train SPOT (student) for 560 epochs on VOC (this produces the final SPOT model):

python train_spot_2.py --dataset voc --data_path /path/to/VOCdevkit/VOC2012 --epochs 560 --num_slots 6 --train_permutations random --eval_permutations standard --teacher_train_permutations random --teacher_eval_permutations random --teacher_checkpoint_path /path/to/logs/spot_teacher_voc/TIMESTAMP/checkpoint.pt.tar --log_path /path/to/logs/spot_voc

Download pretrained SPOT model on PASCAL VOC 2012.

| mBOi | mBOc | Download |
|---|---|---|
| 48.6 | 55.7 | Checkpoint |

Evaluate SPOT on VOC aggregating all sequence permutations:

python eval_spot.py --dataset voc --data_path /path/to/VOCdevkit/VOC2012 --num_slots 6 --eval_permutations all --checkpoint_path /path/to/logs/spot_voc/TIMESTAMP/checkpoint.pt.tar

To download the MOVi-C/E datasets, uncomment the last two rows in requirements.txt to install the tensorflow_datasets package. Then, run the following commands:

python download_movi.py --level c --split train

python download_movi.py --level c --split validation

python download_movi.py --level e --split train

python download_movi.py --level e --split validation

The structure should be the following:

MOVi
├── c
│   ├── train
│   │   ├── 00000000
│   │   │   ├── 00000000_image.png
│   │   │   └── ...
│   │   └── ...
│   └── validation
│       ├── 00000000
│       │   ├── 00000000_image.png
│       │   ├── 00000000_mask_00.png
│       │   └── ...
│       └── ...
└── e
    ├── train
    │   └── ...
    └── validation
        └── ...

Stage 1: Train SPOT Teacher for 65 epochs on MOVi-C:

python train_spot.py --dataset movi --data_path /path/to/MOVi/c --epochs 65 --num_slots 11 --train_permutations random --eval_permutations standard --log_path /path/to/logs/spot_teacher_movic --val_mask_size 128 --lr_main 0.0002 --lr_min 0.00004

Stage 2: Train SPOT (student) for 30 epochs on MOVi-C (this produces the final SPOT model):

python train_spot_2.py --dataset movi --data_path /path/to/MOVi/c --epochs 30 --num_slots 11 --train_permutations random --eval_permutations standard --teacher_train_permutations random --teacher_eval_permutations random --teacher_checkpoint_path /path/to/logs/spot_teacher_movic/TIMESTAMP/checkpoint.pt.tar --log_path /path/to/logs/spot_movic --val_mask_size 128 --lr_main 0.0002 --lr_min 0.00015 --predefined_movi_json_paths train_movi_paths.json

Evaluate SPOT on MOVi-C aggregating all sequence permutations:

python eval_spot.py --dataset movi --data_path /path/to/MOVi/c --num_slots 11 --eval_permutations all --checkpoint_path /path/to/logs/spot_movic/TIMESTAMP/checkpoint.pt.tar

For MOVi-E experiments, use --num_slots 24.
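The Stage 1 commands for the different datasets differ in only a few arguments, so they can be summarized in one place. The sketch below assembles the `train_spot.py` command from a small table whose values are copied from the commands in this README. The `movi-e` row is an assumption: the README only states `--num_slots 24` for MOVi-E, so the remaining settings are borrowed from MOVi-C. The names `STAGE1` and `stage1_command` are hypothetical and not part of the repository.

```python
import shlex

# Extra flags that appear in the MOVi-C Stage 1 command of this README.
MOVI_EXTRA = ["--val_mask_size", "128", "--lr_main", "0.0002", "--lr_min", "0.00004"]

STAGE1 = {
    "coco": {"dataset": "coco", "epochs": 50, "num_slots": 7, "extra": []},
    "voc": {"dataset": "voc", "epochs": 560, "num_slots": 6, "extra": []},
    "movi-c": {"dataset": "movi", "epochs": 65, "num_slots": 11, "extra": MOVI_EXTRA},
    # Assumption: same as MOVi-C except for the slot count given in the README.
    "movi-e": {"dataset": "movi", "epochs": 65, "num_slots": 24, "extra": MOVI_EXTRA},
}


def stage1_command(name, data_path, log_path):
    """Build the Stage 1 (teacher) training command as a shell-quoted string."""
    cfg = STAGE1[name]
    args = [
        "python", "train_spot.py",
        "--dataset", cfg["dataset"],
        "--data_path", data_path,
        "--epochs", str(cfg["epochs"]),
        "--num_slots", str(cfg["num_slots"]),
        "--train_permutations", "random",
        "--eval_permutations", "standard",
        "--log_path", log_path,
    ] + cfg["extra"]
    return shlex.join(args)
```

For instance, `stage1_command("voc", "/path/to/VOCdevkit/VOC2012", "/path/to/logs/spot_teacher_voc")` reproduces the VOC Stage 1 command listed earlier.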

This project is licensed under the MIT License.

This repository is built using the SLATE and OCLF repositories.

If you find this repository useful, please consider giving a star ⭐ and citation:

@InProceedings{Kakogeorgiou2024SPOT,
    author    = {Kakogeorgiou, Ioannis and Gidaris, Spyros and Karantzalos, Konstantinos and Komodakis, Nikos},
    title     = {SPOT: Self-Training with Patch-Order Permutation for Object-Centric Learning with Autoregressive Transformers},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2024},
    pages     = {22776-22786}
}