This repository contains material related to Udacity's Deep Reinforcement Learning course.

In this environment, 20 agents, each a double-jointed arm, can move to target locations (denoted by the green sphere). A reward of +0.1 is provided for each step that an agent's hand is in the goal location. Thus, the goal of each agent is to maintain its position at the target location for as many time steps as possible.

The state space consists of 33 variables corresponding to position, rotation, velocity, and angular velocities of the arm.

Each action is a vector with four numbers, corresponding to the torques applied to the two joints. The joints move in a continuous vector space along the x/z axis. Every entry in the action vector is a float between -1 and 1.
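The action constraints above can be illustrated with a small NumPy sketch. It assumes the 20-agent version, so one step's actions form a `(20, 4)` array. It adds Gaussian exploration noise purely for illustration (DDPG implementations often use Ornstein-Uhlenbeck noise instead, and this repository's agents may do something different); `noisy_actions` is a hypothetical helper, not part of the project.

```python
import numpy as np

NUM_AGENTS = 20   # Version 2 of the environment
ACTION_SIZE = 4   # four torque values for the two joints

def noisy_actions(policy_actions, noise_scale=0.1, rng=None):
    """Hypothetical helper: add Gaussian exploration noise, then clip to [-1, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    noise = noise_scale * rng.standard_normal(policy_actions.shape)
    # The environment expects every action entry to lie in [-1, 1].
    return np.clip(policy_actions + noise, -1.0, 1.0)

rng = np.random.default_rng(0)
raw = rng.uniform(-1.0, 1.0, size=(NUM_AGENTS, ACTION_SIZE))  # stand-in for actor output
actions = noisy_actions(raw, noise_scale=0.5, rng=rng)
print(actions.shape)  # (20, 4)
```

Clipping after adding noise keeps exploration from ever producing out-of-range torques, regardless of the noise scale.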

This episodic task is considered solved when the average score of the 20 agents, taken over 100 consecutive episodes, is at least +30.
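The solve criterion can be checked with a rolling window. This is a minimal sketch under the assumption that each episode's score is the mean of the 20 agents' episode returns; `episodes_to_solve` is a hypothetical helper and the score history below is synthetic, not from this project's runs.

```python
from collections import deque

import numpy as np

def episodes_to_solve(episode_agent_scores, target=30.0, window=100):
    """Return the 1-based episode at which the trailing `window`-episode mean
    of the per-episode agent average first reaches `target`, or None."""
    recent = deque(maxlen=window)  # keeps only the last `window` episode averages
    for episode, agent_scores in enumerate(episode_agent_scores, start=1):
        # Assumed convention: an episode's score is the mean over all agents.
        recent.append(float(np.mean(agent_scores)))
        if len(recent) == window and np.mean(recent) >= target:
            return episode
    return None

# Synthetic history: 50 episodes scoring 0, then every agent scores 40.
history = [np.zeros(20)] * 50 + [np.full(20, 40.0)] * 100
print(episodes_to_solve(history))  # 125
```

Conventions differ on what to report: this helper returns the episode at which the 100-episode window ends, while some write-ups report the episode at which it starts.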

Credit goes to https://github.com/telmo-correa/DRLND-project-2 for code adapted to implement the PPO network. Credit also goes to https://github.com/wpumacay/DeeprlND-projects for code adapted to implement the prioritized replay buffer, and to https://github.com/PacktPublishing/Deep-Reinforcement-Learning-Hands-On/blob/master/ for the implementation of the distributional portion of the D4PG code.
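For readers unfamiliar with a prioritized replay buffer, the core idea is to sample transitions in proportion to their TD-error priority and to correct the resulting bias with importance-sampling weights. The sketch below is an illustration of proportional prioritization, not the credited implementation: it stores priorities in a flat array (O(N) sampling) rather than the sum tree efficient versions typically use, and the class name and the `alpha`, `beta`, `eps` defaults are assumptions.

```python
import numpy as np

class ProportionalReplayBuffer:
    """Hypothetical minimal proportional prioritized replay (flat array, no sum tree)."""

    def __init__(self, capacity, alpha=0.6, eps=1e-5):
        self.capacity = capacity
        self.alpha = alpha   # how strongly priorities shape the sampling distribution
        self.eps = eps       # keeps every priority strictly positive
        self.data = []
        self.priorities = np.zeros(capacity, dtype=np.float64)
        self.pos = 0         # next slot to overwrite once the buffer is full

    def add(self, transition):
        # New transitions get the current max priority so each is sampled at least once.
        max_p = self.priorities.max() if self.data else 1.0
        if len(self.data) < self.capacity:
            self.data.append(transition)
        else:
            self.data[self.pos] = transition
        self.priorities[self.pos] = max_p
        self.pos = (self.pos + 1) % self.capacity

    def sample(self, batch_size, beta=0.4, rng=None):
        rng = np.random.default_rng() if rng is None else rng
        n = len(self.data)
        scaled = self.priorities[:n] ** self.alpha
        probs = scaled / scaled.sum()
        idx = rng.choice(n, size=batch_size, p=probs)
        # Importance-sampling weights offset the bias of non-uniform sampling.
        weights = (n * probs[idx]) ** (-beta)
        weights /= weights.max()
        return [self.data[i] for i in idx], idx, weights

    def update_priorities(self, idx, td_errors):
        self.priorities[idx] = np.abs(td_errors) + self.eps

buf = ProportionalReplayBuffer(capacity=4)
for t in range(6):
    buf.add(t)          # oldest entries (0 and 1) are overwritten by 4 and 5
print(buf.data)         # [4, 5, 2, 3]
```

After a learning step, the agent would pass the new absolute TD errors of the sampled indices to `update_priorities`, and multiply each sample's loss by its weight.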


Download the environment from the link below. You need only select the environment that matches your operating system:

- Version 2: Twenty (20) Agents

- Linux: click here

- Mac OSX: click here

- Windows (32-bit): click here

- Windows (64-bit): click here

(For Windows users) Check out this link if you need help with determining if your computer is running a 32-bit version or 64-bit version of the Windows operating system.

(For AWS) If you'd like to train the agent on AWS (and have not enabled a virtual screen), then please use this link (version 1) or this link (version 2) to obtain the "headless" version of the environment. You will not be able to watch the agent without enabling a virtual screen, but you will be able to train the agent. (To watch the agent, you should follow the instructions to enable a virtual screen, and then download the environment for the Linux operating system above.)


Clone the repository, place the downloaded environment file in its root, and unzip (or decompress) the file. Then install the dependencies:

`git clone https://github.com/gktval/Continuous_Control`

`cd Continuous_Control/python`

`pip install .`

To train an agent, navigate to the `python/` folder and run the file `main.py` found there.

Running the code without any changes will start a Unity session and train the DDPG agent. Alternatively, you can change the agent model in the run method. The following agents are available:

- DDPG

- D4PG

- PPO
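One of D4PG's main changes relative to DDPG is a distributional critic: instead of a single Q-value, it predicts probabilities over a fixed set of return "atoms". Training requires projecting the shifted target distribution r + γZ back onto that fixed support, and the distributional code credited above presumably includes a step of this kind. The NumPy sketch below is an illustration only; the function name `project_distribution` and the support bounds `v_min=-10.0`, `v_max=10.0` are assumptions, not values taken from this repository.

```python
import numpy as np

def project_distribution(next_probs, rewards, dones, gamma=0.99,
                         v_min=-10.0, v_max=10.0):
    """Hypothetical helper: project the distribution of r + gamma * Z onto the
    fixed atom support. next_probs has shape (batch, n_atoms)."""
    batch, n_atoms = next_probs.shape
    delta_z = (v_max - v_min) / (n_atoms - 1)
    atoms = v_min + delta_z * np.arange(n_atoms)
    projected = np.zeros((batch, n_atoms))
    rows = np.arange(batch)
    for j in range(n_atoms):
        # Shifted value of atom j; terminal samples keep only the reward.
        tz = np.clip(rewards + (1.0 - dones) * gamma * atoms[j], v_min, v_max)
        # Fractional position on the support, clipped to guard against rounding.
        b = np.clip((tz - v_min) / delta_z, 0, n_atoms - 1)
        lower = np.floor(b).astype(int)
        upper = np.ceil(b).astype(int)
        p = next_probs[:, j]
        # If b lands exactly on an atom, all of the mass goes there.
        same = lower == upper
        np.add.at(projected, (rows[same], lower[same]), p[same])
        # Otherwise split the mass between the two neighbouring atoms.
        diff = ~same
        np.add.at(projected, (rows[diff], lower[diff]), p[diff] * (upper[diff] - b[diff]))
        np.add.at(projected, (rows[diff], upper[diff]), p[diff] * (b[diff] - lower[diff]))
    return projected, atoms

probs = np.full((2, 51), 1.0 / 51)   # uniform next-state distributions
proj, atoms = project_distribution(probs, np.array([0.1, 2.0]), np.array([0.0, 1.0]))
```

The linear split preserves both total probability and the mean, so each row of `proj` still sums to 1 and its expected value equals the (clipped) expected target. In an N-step variant, `gamma` would be replaced by `gamma ** N`.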

In the initialization method of main.py, you can change the type of network to run. The selected network is then trained by the 'Run' method in the corresponding ddpg, d4pg, or ppo file. In the 'Run' method, you can change the configuration and parameters for each network; the config file contains additional parameters for each of the networks.
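The pattern described above (choose a network in one place, adjust its parameters in a config) can be sketched as a small dispatcher. Everything here is hypothetical: `Config`, its fields and defaults, `RUNNERS`, and the stub `run_*` functions are illustrative stand-ins, and the repository's actual main.py, 'Run' methods, and config file may be organized differently.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Config:
    """Hypothetical hyperparameters; names and defaults are illustrative only."""
    gamma: float = 0.99
    actor_lr: float = 1e-4
    critic_lr: float = 1e-3
    batch_size: int = 128

# Stub runners standing in for the 'Run' methods of the ddpg, d4pg, and ppo files.
def run_ddpg(config, is_test): return ("ddpg", config, is_test)
def run_d4pg(config, is_test): return ("d4pg", config, is_test)
def run_ppo(config, is_test): return ("ppo", config, is_test)

RUNNERS = {"ddpg": run_ddpg, "d4pg": run_d4pg, "ppo": run_ppo}

def run(network="ddpg", is_test=False, **overrides):
    """Select a network by name and apply any parameter overrides to the config."""
    if network not in RUNNERS:
        raise ValueError(f"unknown network {network!r}; choose from {sorted(RUNNERS)}")
    config = replace(Config(), **overrides)  # unknown field names raise TypeError
    return RUNNERS[network](config, is_test)

name, cfg, testing = run("ppo", batch_size=64)
print(name, cfg.batch_size)  # ppo 64
```

Keeping defaults in one frozen config and passing overrides explicitly makes it easy to see which parameters differ between runs; `is_test` plays the role of a switch like isTest for replaying a trained agent.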

The training scores are stored in a folder called scores, and saved agents are stored in a folder called checkpoints. After training one or more networks, you can replay a trained agent by changing the isTest variable in the initialization of main.py.

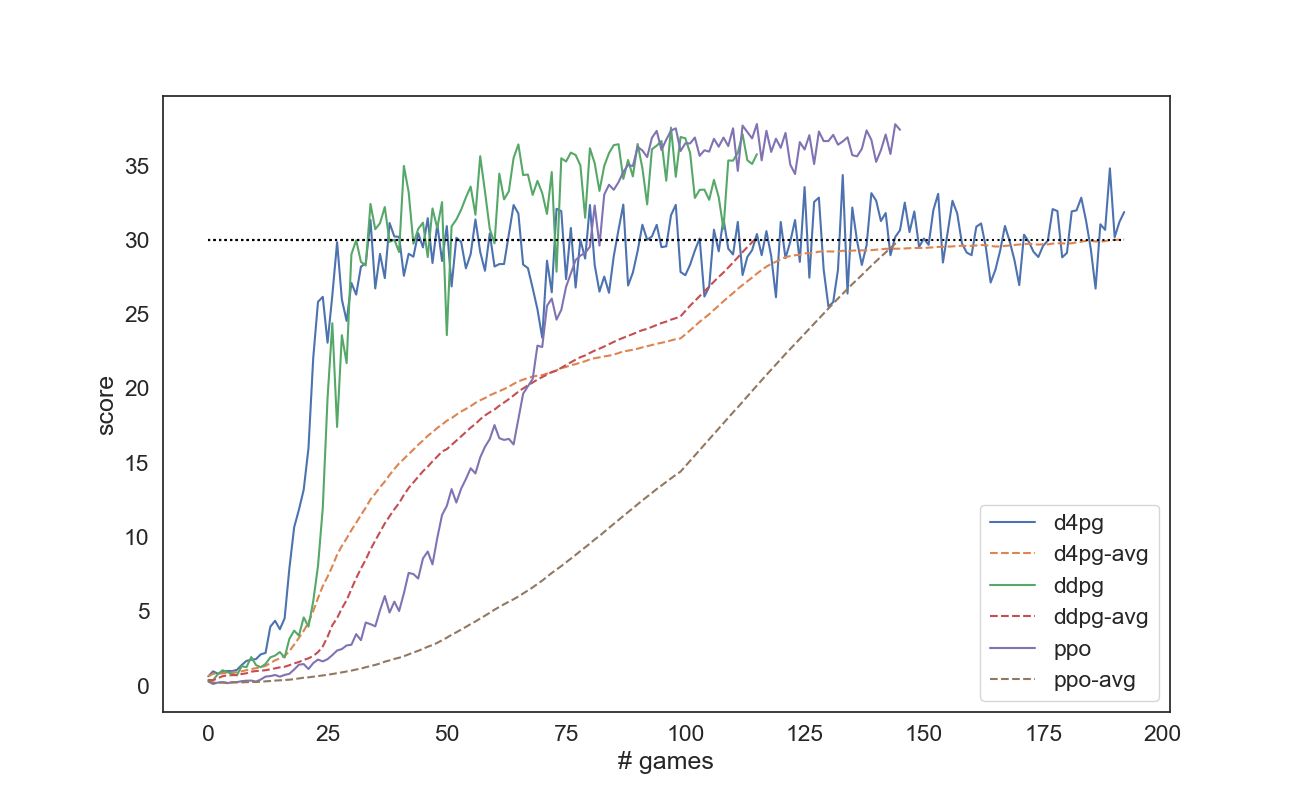

This graph shows the scores of the various continuous control agents trained in this project. The scores folder is located inside the python folder, and the isTest variable is passed into the run() method.

Each of the algorithms described above achieved an average score of +30 over 100 episodes, after the following number of episodes:

- DDPG: 116 episodes

- D4PG: 193 episodes

- PPO: 146 episodes

PPO needed more episodes than DDPG to reach this result, but its training completed more quickly. Once PPO reached a score of +30, its distribution of rewards was much more compact than DDPG's. D4PG first reached a score of 30 in the same number of episodes as the other algorithms (35), but it took much longer to reach an average score of 30 over 100 episodes because it plateaued and did not achieve scores as high as DDPG or PPO.
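The comparison of how "compact" the reward distributions are can be made numerical. This is a minimal sketch, assuming each agent's per-episode scores are available as a list (for example, loaded from the scores folder); `score_spread` is a hypothetical helper, and the inputs below are synthetic, not this project's results.

```python
import numpy as np

def score_spread(scores, last_n=100):
    """Hypothetical helper: summarize the spread of the last `last_n` episode scores."""
    tail = np.asarray(scores[-last_n:], dtype=float)
    q25, q75 = np.percentile(tail, [25, 75])
    # A smaller standard deviation and interquartile range mean a more compact distribution.
    return {"mean": tail.mean(), "std": tail.std(), "iqr": q75 - q25}

tight = [34.0, 36.0] * 50   # synthetic: scores close to their mean
wide = [25.0, 45.0] * 50    # synthetic: same mean, much larger spread
print(score_spread(tight)["std"], score_spread(wide)["std"])  # 1.0 10.0
```

The interquartile range is less sensitive than the standard deviation to a few outlier episodes, so reporting both gives a fairer picture of compactness.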