Modifying...

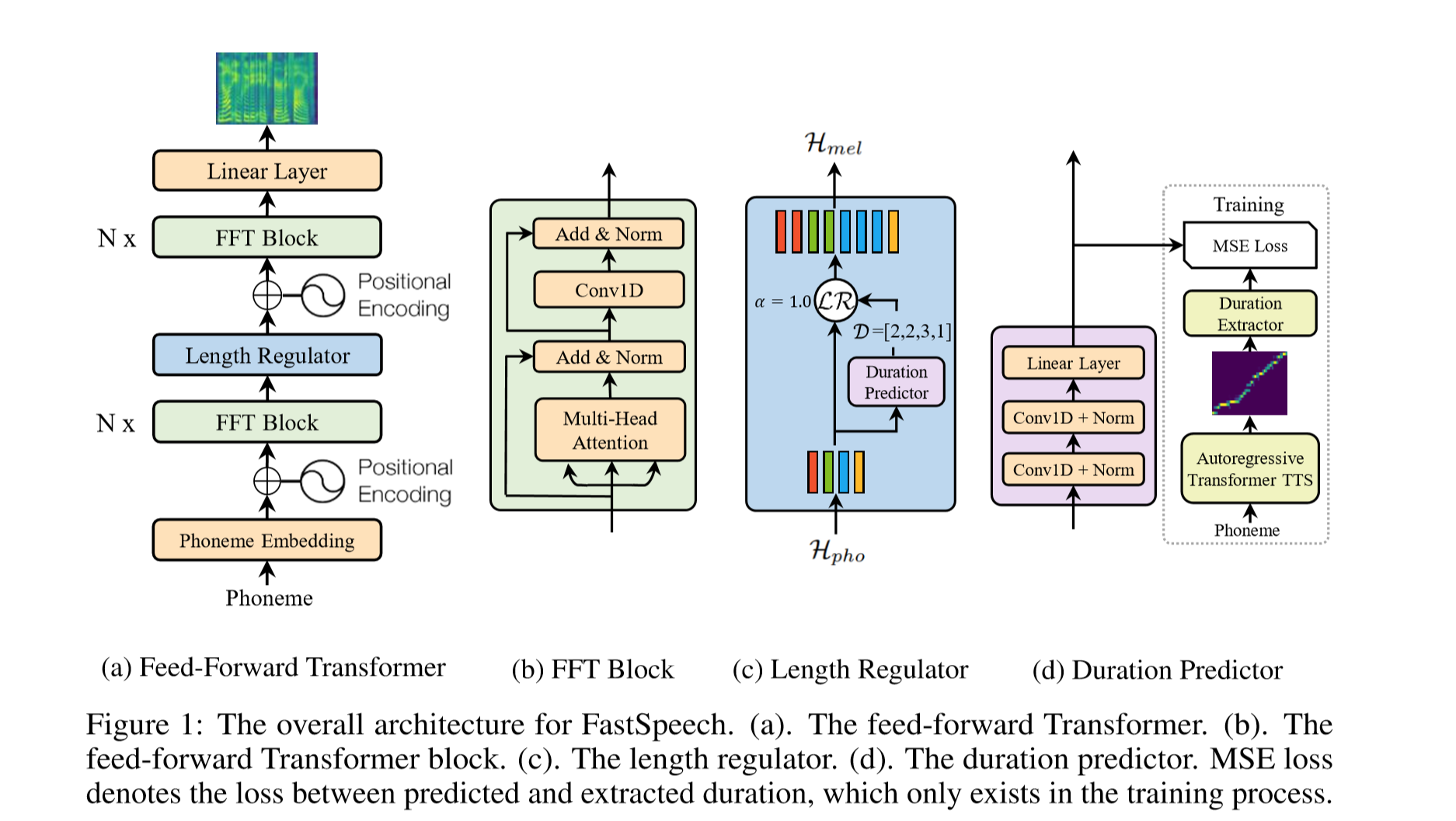

An implementation of FastSpeech based on PyTorch.

- python 3.6

- CUDA 10.0

- pytorch 1.1.0

- numpy 1.16.2

- scipy 1.2.1

- librosa 0.6.3

- inflect 2.1.0

- matplotlib 2.2.2

- Download and extract the LJSpeech dataset.

- Put the LJSpeech dataset in `data`.

- Run `preprocess.py`.

In the FastSpeech paper, the authors use a pre-trained Transformer-TTS model to provide the alignment targets. I didn't have a well-trained Transformer-TTS model, so I use Tacotron2 instead.

Set `pre_target = False` in `hparam.py`.

- Download the pre-trained Tacotron2 model published by NVIDIA here.

- Put the pre-trained Tacotron2 model in `Tacotron2/pre_trained_model`.

- Run `alignment.py`; it takes 7 hours on an NVIDIA RTX 2080 Ti.

I provide the LJSpeech alignments computed by Tacotron2 in `alignment_targets.zip`. To use them, just unzip the archive.

In turbo mode, a prefetcher loads training data ahead of time, which may use more memory.
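The memory trade-off of prefetching can be illustrated with a minimal sketch. This is not the repository's prefetcher; the `prefetch` function and its `buffer_size` parameter are hypothetical names, and the sketch uses a plain background thread from the standard library rather than any PyTorch mechanism. It only shows the general idea: batches are loaded ahead of the consumer, so a few extra batches stay in memory at any time.

```python
import queue
import threading


def prefetch(batches, buffer_size=2):
    """Yield items from `batches`, loading ahead in a background thread.

    Hypothetical helper for illustration: up to `buffer_size` batches
    wait in the queue, which is where the extra memory goes.
    """
    buffer = queue.Queue(maxsize=buffer_size)
    sentinel = object()  # marks the end of the data

    def worker():
        for batch in batches:
            buffer.put(batch)  # blocks while the buffer is full
        buffer.put(sentinel)

    threading.Thread(target=worker, daemon=True).start()

    while True:
        batch = buffer.get()
        if batch is sentinel:
            return
        yield batch


# Usage: the consumer sees the same batches in the same order.
batches = [[i, i + 1] for i in range(0, 10, 2)]
loaded = list(prefetch(batches, buffer_size=2))
```

A larger `buffer_size` hides more loading latency but keeps more batches in memory, which matches the note above.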

- Normal mode: run `train.py`.

- Turbo mode: run `train_accelerated.py`.

- To synthesize audio, run `synthesis.py`.

- The examples of audio are in

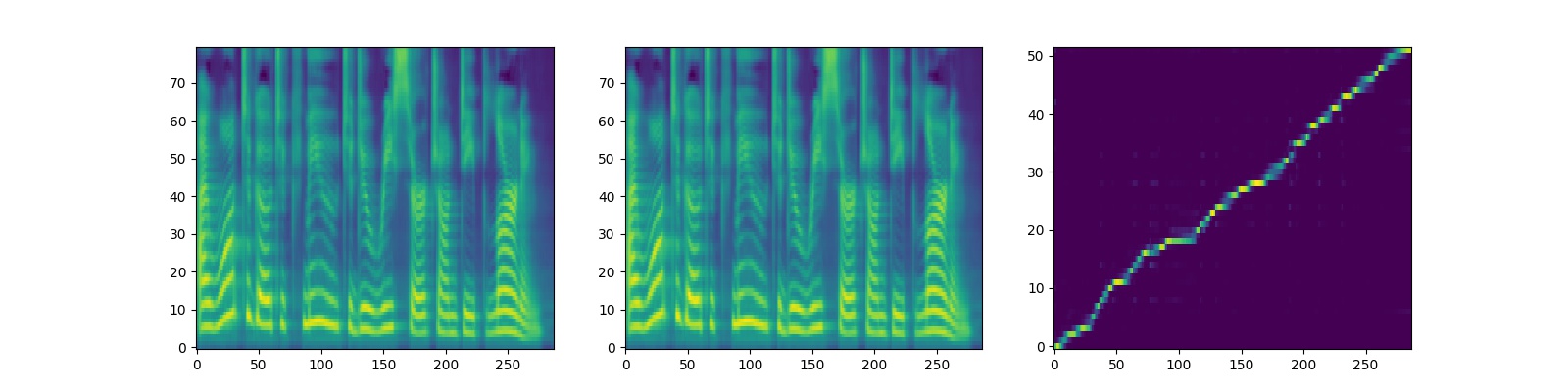

results. The sentence for synthesizing is "I am very happy to see you again.".results/normal.wavwas synthesized whenalpha = 1.0,results/slow.wavwas synthesized whenalpha = 1.5andresults/quick.wavwas synthesized whenalpha = 0.5. - The outputs and alignment of Tacotron2 are shown as follows (The sentence for synthesizing is "I want to go to CMU to do research on deep learning."):

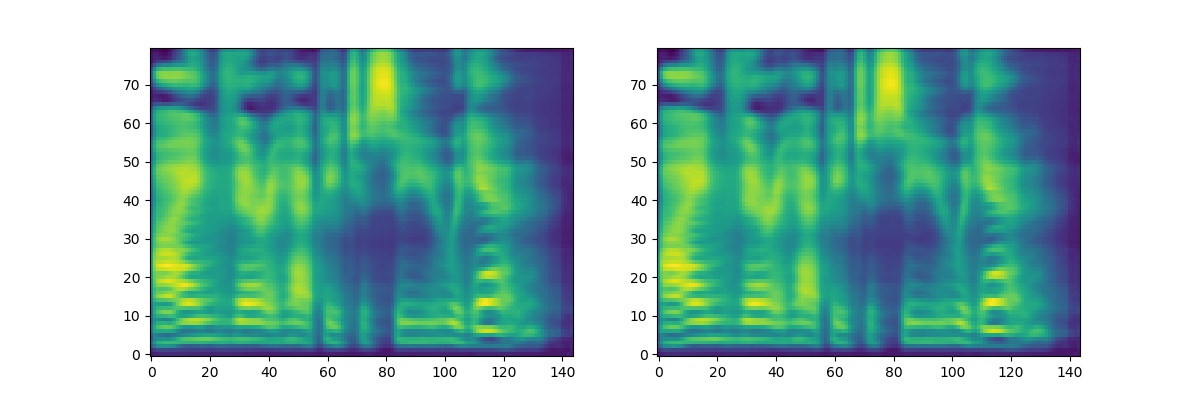

- The outputs and alignment of FastSpeech are shown below (the synthesized sentence is "I want to go to CMU to do research on deep learning."):

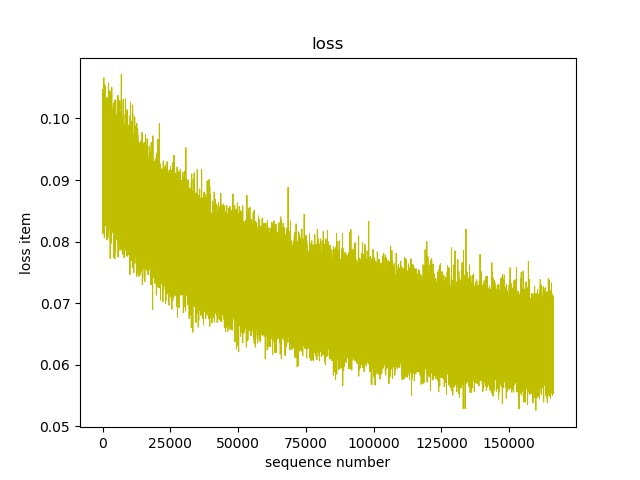
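The effect of the `alpha` values listed above can be sketched as follows. This assumes, as described in the FastSpeech paper, that `alpha` multiplies the per-phoneme durations before the encoder outputs are expanded; the function name `scale_durations` and the round-half-up rule are assumptions for illustration, not taken from this repository.

```python
def scale_durations(durations, alpha=1.0):
    """Scale per-phoneme frame counts by alpha and round half up.

    Hypothetical helper: alpha > 1 lengthens every phoneme (slower speech),
    alpha < 1 shortens it (faster speech).
    """
    return [int(d * alpha + 0.5) for d in durations]


durations = [2, 3, 0, 4, 1]  # made-up frame counts per phoneme

normal = scale_durations(durations, alpha=1.0)
slow = scale_durations(durations, alpha=1.5)
quick = scale_durations(durations, alpha=0.5)

# Total number of mel frames for each setting
print(sum(normal), sum(slow), sum(quick))
```

The total frame count grows with `alpha`, so `alpha = 1.5` gives the longest utterance and `alpha = 0.5` the shortest.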

The `total_loss` curve recorded from step 30,000 to step 140,000 is shown as follows:

- If you want to use another model to get alignments, you need to rewrite `alignment.py`.

- The value returned by `alignment.py` is a tensor whose entries are the multiples by which the encoder's outputs are supposed to be expanded. For example:

```python
import torch

test_target = torch.stack([torch.Tensor([0, 2, 3, 0, 3, 2, 1, 0, 0, 0]),
                           torch.Tensor([1, 2, 3, 2, 2, 0, 3, 6, 3, 5])])
```

- The output of the last linear layer in LengthRegulator passes through a ReLU activation to remove negative values; the result is the output of this module. During inference, the LengthRegulator output passes through `torch.exp()` and one is subtracted, giving the multiple by which the encoder output is expanded. During training, one is added to the duration targets, which then pass through `torch.log()` before the loss is computed. For example:

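```python
# Training: move the duration targets into the log domain
duration_predictor_target = duration_predictor_target + 1
duration_predictor_target = torch.log(duration_predictor_target)

# Inference: map the predicted values back to durations
duration_predictor_output = torch.exp(duration_predictor_output)
duration_predictor_output = duration_predictor_output - 1
```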
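The two transforms above are inverses of each other, and the recovered durations are what drive the expansion of the encoder outputs. The following is a minimal sketch of that round trip in plain Python (standard library only, no PyTorch), so the function names `to_log_target`, `from_log_output`, and `expand` are hypothetical, and rounding to whole repeats is an assumption rather than something stated in this README.

```python
import math


def to_log_target(durations):
    """Training side: add one, then take the log, i.e. log(d + 1)."""
    return [math.log(d + 1) for d in durations]


def from_log_output(outputs):
    """Inference side: exponentiate, subtract one, round to whole repeats.

    Rounding and clamping at zero are assumptions for this sketch.
    """
    return [max(0, int(round(math.exp(o) - 1))) for o in outputs]


def expand(encoder_outputs, durations):
    """Repeat each encoder output by its duration; a zero drops it."""
    expanded = []
    for vector, d in zip(encoder_outputs, durations):
        expanded.extend([vector] * d)
    return expanded


durations = [0, 2, 3, 0, 3, 2, 1, 0, 0, 0]  # first row of test_target
log_targets = to_log_target(durations)
recovered = from_log_output(log_targets)

# Stand-in labels instead of real encoder vectors
encoder_outputs = ["h%d" % i for i in range(len(durations))]
expanded = expand(encoder_outputs, recovered)
```

Adding one before the log keeps a duration of zero well defined, since `log(0 + 1) = 0`, and a non-negative (post-ReLU) prediction always maps back to a non-negative duration.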