We train a small aimbot detector on individual gunfights, boosting the supervision and detection signal.

Data is parsed from 64 Hz SourceTV demos and sampled at 32 Hz.

The primary features are view-angle deltas and the relative angular position of targets.
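As a rough illustration of these two feature families, here is a minimal sketch. It is not the actual parser: the function names are hypothetical, and it assumes view angles arrive as (yaw, pitch) in degrees, positions as (x, y, z) with z up, and that looking up is negative pitch.

```python
import math

def wrap_deg(angle):
    """Wrap an angle in degrees into [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def view_angle_deltas(angles):
    """Per-tick (d_yaw, d_pitch) from a list of (yaw, pitch) samples in degrees."""
    return [
        (wrap_deg(y1 - y0), p1 - p0)
        for (y0, p0), (y1, p1) in zip(angles, angles[1:])
    ]

def target_offset(eye, view, target):
    """Angular offset (d_yaw, d_pitch) from the current view direction to a target."""
    dx, dy, dz = (t - e for t, e in zip(target, eye))
    yaw_to = math.degrees(math.atan2(dy, dx))
    # Assumed convention: looking up is negative pitch.
    pitch_to = -math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    yaw, pitch = view
    return wrap_deg(yaw_to - yaw), pitch_to - pitch
```

Wrapping the yaw difference matters because a flick across the ±180° seam would otherwise look like a ~360° jump in the delta feature.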

The model is a vanilla LSTM-512.

Training data:

- ~15,000 data points (~5,000 if trained on kills instead of bullet impacts)

- All generated by me

- All cheat samples from one commercial "legit" aimbot

- All kills using the AK-47 on bots in deathmatch

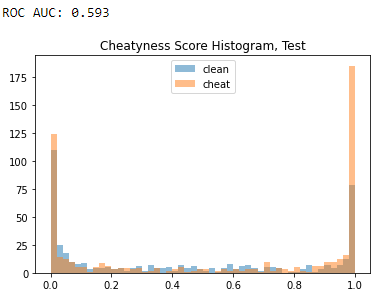

Test accuracy on data from a different player using the same cheat:

This toy experiment suggests that a small neural network can tell, with moderate discriminative ability, whether an engagement was assisted by a legit aimbot. A highlight is that the model retains decent discrimination (~0.6 AUC) when tested on data from a player it had not seen before.
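For a sense of what ~0.6 AUC means: AUC is the probability that a randomly chosen assisted engagement gets a higher score than a randomly chosen clean one (0.5 is chance, 1.0 is perfect). Here is a minimal sketch of that pairwise definition. The scores are made up for illustration and are not outputs of the model.

```python
def auc(pos_scores, neg_scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

# Hypothetical scores, for illustration only.
cheat = [0.9, 0.6, 0.4]
clean = [0.7, 0.5, 0.3]
print(auc(cheat, clean))  # 6 of the 9 pairs are ranked correctly
```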

But this model is quite academic. A production model would have to be validated against a representative sample of players to ensure acceptable performance across the board. Even assuming good generalization, we would need much more data to raise its discriminative ability to useful levels. The current model achieves ~90% specificity at ~20% sensitivity on a balanced dataset, which is unacceptable on the true, imbalanced distribution of cheaters. Assuming that 1% of kills in real games are assisted by an aimbot, the model would flag about 50 clean kills for every assisted kill it correctly flags.
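The base-rate arithmetic behind that estimate can be checked directly. The sketch below plugs in the figures quoted above (90% specificity, 20% sensitivity, 1% prevalence); the function name is only illustrative.

```python
def flag_stats(sensitivity, specificity, prevalence):
    """Return (precision, false flags per true flag) at a given prevalence."""
    true_flags = sensitivity * prevalence
    false_flags = (1.0 - specificity) * (1.0 - prevalence)
    precision = true_flags / (true_flags + false_flags)
    return precision, false_flags / true_flags

precision, ratio = flag_stats(sensitivity=0.20, specificity=0.90, prevalence=0.01)
print(f"precision: {precision:.3f}, clean flags per assisted flag: {ratio:.1f}")
```

Out of every 10,000 kills, 100 are assisted and 20 of those are flagged, while 990 of the 9,900 clean kills are flagged too. That is 49.5 clean flags per correct flag, or roughly 2% precision.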

On the bright side, the model is per-engagement, not per-player, so one might be able to improve per-player specificity and sensitivity by running the model on many of a player's shots. But I doubt that the model's outputs are independent across engagements, so the gain from aggregating more shots may saturate. Finally, real cheaters could adapt and toggle their aim assistance to hide within the noise of the model.
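To illustrate the optimistic case, the sketch below assumes engagements are independent (which, as noted, they probably are not) and flags a player when at least k of their n engagements are flagged. The per-engagement rates are the ones quoted earlier; n, k, and the "always assisted" cheater are arbitrary illustrative choices.

```python
from math import comb

def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k, n + 1))

n, k = 100, 15          # arbitrary: flag a player if >= 15 of 100 engagements are flagged
fpr, tpr = 0.10, 0.20   # per-engagement flag rates: clean vs. always-assisted player

print("clean player flagged:   ", prob_at_least(k, n, fpr))
print("cheating player flagged:", prob_at_least(k, n, tpr))
```

Correlated errors would make the real gain smaller than this binomial estimate, and a cheater who toggles assistance lowers their effective per-engagement flag rate toward that of a clean player.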

Overall, I think it's quite difficult to detect legit aim assistance with the resources of a single engineer and a few community server owners. I think it's even difficult for humans to detect it, and I hope to post results on human benchmarks in a few weeks. In the future, I think I will try to incrementally improve the detection of rage and semi-rage aim assistance.

Lastly, to make an actual impact, I need to integrate with and improve an existing ban pipeline.

Future work:

- More feature engineering

- Model other cheats

- Get cheat samples from real games

- Benchmark human detection ability

- Self-/semi-supervision to improve sample efficiency

- Multi-task with player skill modeling

- Scale