This repo contains the dataset and sample code for the paper: Hand and Object Segmentation from Depth Image using Fully Convolutional Network.

If you find our work useful, please consider citing:

    @inproceedings{hoseg:2019,
      title = {Hand and Object Segmentation from Depth Image using Fully Convolutional Network},
      author = {Guan Ming, Lim and Prayook, Jatesiktat and Christopher Wee Keong, Kuah and Wei Tech, Ang},
      booktitle = {41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC)},
      year = {2019}
    }

- Synthetic train set contains 10,000 images for training the FCN model.
- Synthetic test set contains 1,000 images for evaluation.
- Real test set contains 1,000 images captured with a Kinect V2 RGB-D camera for evaluation.
- Synthetic train set (Fixed body shape) contains 10,000 images and is similar to Synthetic train set, except that all the human models have the same body shape. Its main purpose is to show the improvement in FCN performance when the FCN is trained on Synthetic train set with varying body shapes.
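
One way to quantify such a comparison between two trained models is a per-class intersection over union (IoU) between a predicted label map and the ground-truth label image. The sketch below is only an illustration and is not taken from the paper or this repo: the helper `per_class_iou`, the constant `NUM_CLASSES`, and the treatment of ID 0 as background are assumptions, and the metric actually reported in the paper may differ.

```python
import numpy as np

# Assumption: class IDs run from 0 to 7, with 0 taken to be background
# and 1-7 matching the IDs used by the label images in this repo.
NUM_CLASSES = 8


def per_class_iou(pred, target, num_classes=NUM_CLASSES):
    """Return one IoU value per class; NaN where a class is absent from both maps."""
    ious = np.full(num_classes, np.nan)
    for c in range(num_classes):
        pred_c = pred == c
        target_c = target == c
        union = np.logical_or(pred_c, target_c).sum()
        if union > 0:
            ious[c] = np.logical_and(pred_c, target_c).sum() / union
    return ious


# Toy 2x3 label maps standing in for a ground-truth label and an FCN prediction
target = np.array([[0, 2, 2],
                   [0, 3, 3]], dtype=np.uint8)
pred = np.array([[0, 2, 3],
                 [0, 3, 3]], dtype=np.uint8)

ious = per_class_iou(pred, target)
print(ious)              # per-class IoU, NaN for classes not present
print(np.nanmean(ious))  # mean IoU over the classes that are present
```

Averaging with `np.nanmean` skips classes that appear in neither map, so an image without, say, a table does not pull the mean down.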

Code snippet for loading and displaying the dataset:

import cv2

import numpy as np

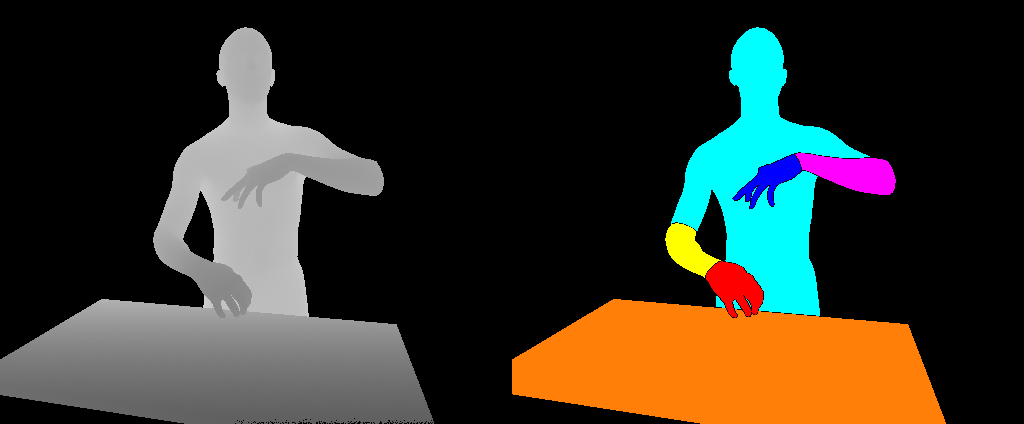

def convertLabel2Color(label):

height, width = label.shape

label_color = np.zeros((height, width,3), np.uint8)

label_color[label == 1] = [255,255,0] # Cyan -> Foreground Note BGR

label_color[label == 2] = [255,0,0] # Blue -> Left hand

label_color[label == 3] = [0,0,255] # Red -> Right hand

label_color[label == 4] = [255,0,255] # Magenta-> Left Arm

label_color[label == 5] = [0,255,255] # Yellow -> Right Arm

label_color[label == 6] = [0,255,0] # Green -> Obj

label_color[label == 7] = [9,127,255] # Orange -> Table

return label_color

depth = cv2.imread('../dataset/train_syn/depth/0000000.png', cv2.IMREAD_ANYDEPTH)

label = cv2.imread('../dataset/train_syn/label/0000000.png', cv2.IMREAD_GRAYSCALE)

cv2.imshow('depth', cv2.convertScaleAbs(depth, None, 255/1500, 0))

cv2.imshow('label', convertLabel2Color(label))

cv2.waitKey(0)Refer to model and the trained weights

Refer to kinect.py