This is the official implementation of the ICLR 2023 Spotlight paper

by Xingchao Liu, Lemeng Wu, Mao Ye, Qiang Liu from UT Austin

We present a simple, unified framework for learning diffusion models on constrained and structured domains. It can be easily adapted to various types of domains, including product spaces of any type (bounded or unbounded, continuous or discrete, categorical or ordinal, or a mix of these).

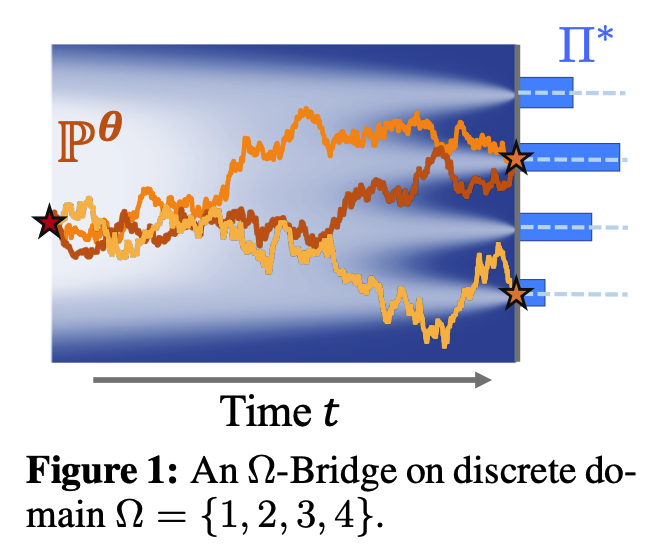

In our model, the diffusion process is driven by a drift force that is the sum of two terms: a singular force derived from Doob’s h-transform, which ensures that every outcome of the process lies in the desired domain, and a non-singular neural force field, which is trained so that the outcomes follow the data distribution.
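To make the two-term drift concrete, here is a minimal sketch, not the code in this repository. It assumes a one-dimensional standard Brownian motion as the base process and the interval `[0, 1]` as the target domain. For that case, h(x, t) is the probability that the Brownian motion started at x at time t ends inside the interval at time T, and the singular force is the gradient of log h. The names `h_transform_drift`, `neural_force`, and `simulate` are hypothetical, and the neural term is a zero placeholder standing in for a trained network.

```python
import math
import random


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def h_transform_drift(x, t, low=0.0, high=1.0, T=1.0):
    """d/dx log h(x, t), with h(x, t) = P(x + sqrt(T - t) * Z in [low, high])."""
    s = math.sqrt(T - t)
    za, zb = (low - x) / s, (high - x) / s
    mass = normal_cdf(zb) - normal_cdf(za)  # this is h(x, t)
    if mass < 1e-300:
        # Far outside the interval both terms underflow; fall back to the
        # large-distance limit, a pull toward the nearest endpoint.
        nearest = low if x < low else high
        return (nearest - x) / (T - t)
    return (normal_pdf(za) - normal_pdf(zb)) / (s * mass)


def neural_force(x, t):
    # Hypothetical placeholder for the trained, non-singular force field.
    return 0.0


def simulate(n_steps=1000, T=1.0, x0=0.5, seed=0):
    """Euler-Maruyama simulation of dX = (neural + singular) dt + dW."""
    rng = random.Random(seed)
    dt = T / n_steps
    x = x0
    for k in range(n_steps):
        t = k * dt  # t stays strictly below T, so the drift is finite
        drift = neural_force(x, t) + h_transform_drift(x, t, T=T)
        x += drift * dt + math.sqrt(dt) * rng.gauss(0.0, 1.0)
    return x
```

The singular term grows as t approaches T whenever x is outside the interval, which is what pushes trajectories back into the domain. With a discrete time step the endpoint can still miss the interval by an amount on the order of `sqrt(dt)`, so a practical implementation would likely need a finer grid near T or a final projection step.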

An interactive Colab notebook on a toy example is provided here

See instructions in ./MixedTypeTabularData