This is the code for the paper:

Text as Neural Operator: Image Manipulation by Text Instruction

Tianhao Zhang, Hung-Yu Tseng, Lu Jiang, Weilong Yang, Honglak Lee, Irfan Essa

Please note that this is not an officially supported Google product. This is a reproduction, not the original code.

If you find this code useful in your research then please cite

TODO: Citation

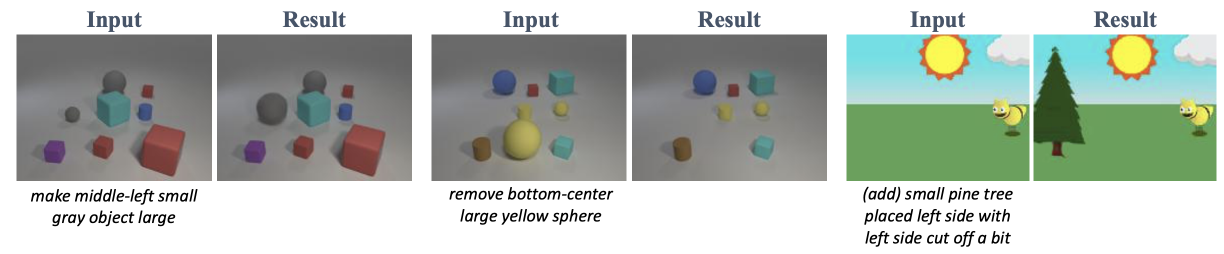

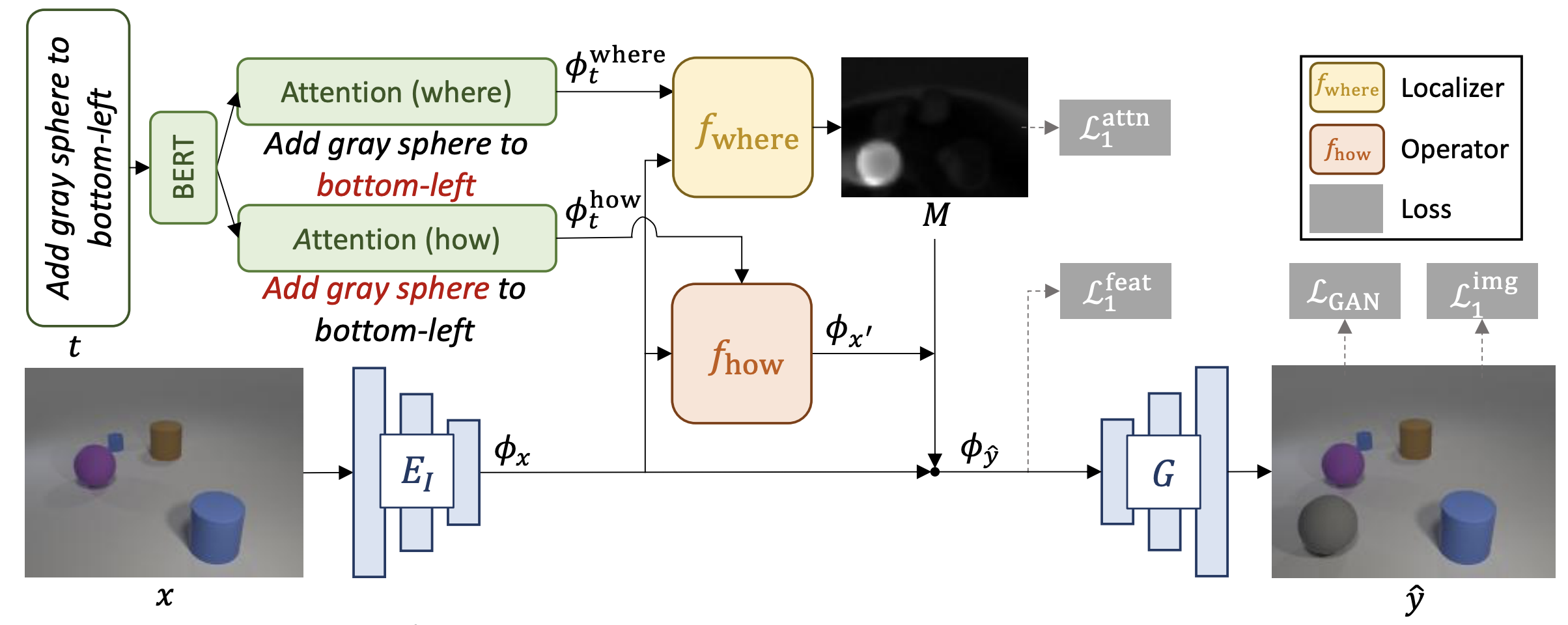

In this paper, we study a new task that allows users to edit an input image using language instructions.

The key idea is to treat language as neural operators to locally modify the image feature. To this end, our model decomposes the generation process into finding where (spatial region) and how (text operators) to apply modifications. We show that the proposed model performs favorably against recent baselines on three datasets.
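One way to read this decomposition, as a sketch in our own notation rather than the paper's exact formulation: assume a soft spatial mask $M \in [0, 1]^{H \times W}$ predicted from the image and the instruction (the "where"), and a text-conditioned operator $f_t$ acting on the image feature $\phi$ (the "how"). A local edit then blends the edited and original features:

$$
\phi' = M \odot f_t(\phi) + (1 - M) \odot \phi
$$

Under these assumptions, locations where $M$ is close to 0 pass through unchanged, and only the attended region is modified by the text operator.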

Clone this repo and go to the cloned directory.

Please create an environment using Python 3.7 and install the dependencies with

pip install -r requirements.txt

To reproduce the results reported in the paper, you need a V100 GPU.

The original Clevr dataset we used is from this external website. The original Abstract Scene we used is from this external website.

Pretrained models (Clevr and Abstract Scene) can be downloaded from here. Extract the tar:

tar -xvf checkpointss.tar -C ../

Once the dataset is preprocessed and the pretrained model is downloaded,

- Generate images using the pretrained model.

  bash run_test.sh

  Please switch the parameters in the script for different datasets. Testing parameters for the Clevr and Abstract Scene datasets are already configured in the script.

- The outputs are at ../output/.

New models can be trained with the following commands.

- Prepare the dataset. Please contact the author if you need the processed datasets. To use a new dataset, follow the structure of the provided datasets: you need paired data (input image, input text, output image).

- Train.

  # Pretraining
  bash run_pretrain.sh

  # Training
  bash run_train.sh

  There are many options you can specify. Training parameters for the Clevr and Abstract Scene datasets are already configured in the script.

Tensorboard logs are stored at [../checkpoints_local/TIMGAN/tfboard].
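When preparing a new dataset, it can help to check that every example really is a complete (input image, input text, output image) triplet before training. The sketch below is only an illustration: the manifest file name `triplets.json`, its keys (`input`, `text`, `output`), and the `Triplet` and `load_triplets` names are hypothetical and are not the layout used by this repository. Follow the structure of the provided datasets for the real format.

```python
import json
from dataclasses import dataclass
from pathlib import Path
from typing import List


@dataclass(frozen=True)
class Triplet:
    """One paired example: source image, text instruction, target image."""
    input_image: Path
    text: str
    output_image: Path


def load_triplets(root: str, manifest_name: str = "triplets.json") -> List[Triplet]:
    """Read a (hypothetical) JSON manifest and check each triplet is complete."""
    root_path = Path(root)
    with open(root_path / manifest_name, encoding="utf-8") as f:
        entries = json.load(f)

    triplets = []
    for i, entry in enumerate(entries):
        # Every example needs all three parts of the pair.
        missing = [k for k in ("input", "text", "output") if k not in entry]
        if missing:
            raise ValueError(f"entry {i} is missing fields: {missing}")
        triplet = Triplet(
            input_image=root_path / entry["input"],
            text=entry["text"],
            output_image=root_path / entry["output"],
        )
        # Both images must exist on disk.
        for image in (triplet.input_image, triplet.output_image):
            if not image.is_file():
                raise FileNotFoundError(f"entry {i}: {image} does not exist")
        triplets.append(triplet)
    return triplets
```

Calling `load_triplets("path/to/dataset")` returns the validated list, or raises on the first incomplete entry so that problems surface before a training run starts.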

Testing is similar to testing pretrained models.

bash run_test.sh

Testing parameters for the Clevr and Abstract Scene datasets are already configured in the script.

The FID score is computed using the PyTorch implementation here. The image retrieval script will be released soon.

| | FID | RS@1 | RS@5 |
|---|---|---|---|
| Clevr | 33.0 | 95.9±0.1 | 97.8±0.1 |
| Abstract Scene | 35.1 | 35.4±0.2 | 58.7±0.1 |
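For reference, FID is the Fréchet distance between two Gaussians fitted to Inception features of the real and the generated images. In the standard definition (the notation here is ours, not taken from this repository), with feature mean and covariance $(\mu_r, \Sigma_r)$ for real images and $(\mu_g, \Sigma_g)$ for generated images:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

Lower FID is better, since it means the two feature distributions are closer.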

- run_pretrain.sh, run_train.sh, run_test.sh: bash scripts for pretraining, training and testing.
- train_recon.py, train.py, test.py: the entry points for pretraining, training and testing.
- models/tim_gan.py: creates the networks and computes the losses.
- models/networks.py: defines the basic modules of the architecture.
- options/: options.
- dataset/: defines the class for loading the dataset.

Please contact bryanzhang@google.com if you need the preprocessed data or the Cityscapes pretrained model.