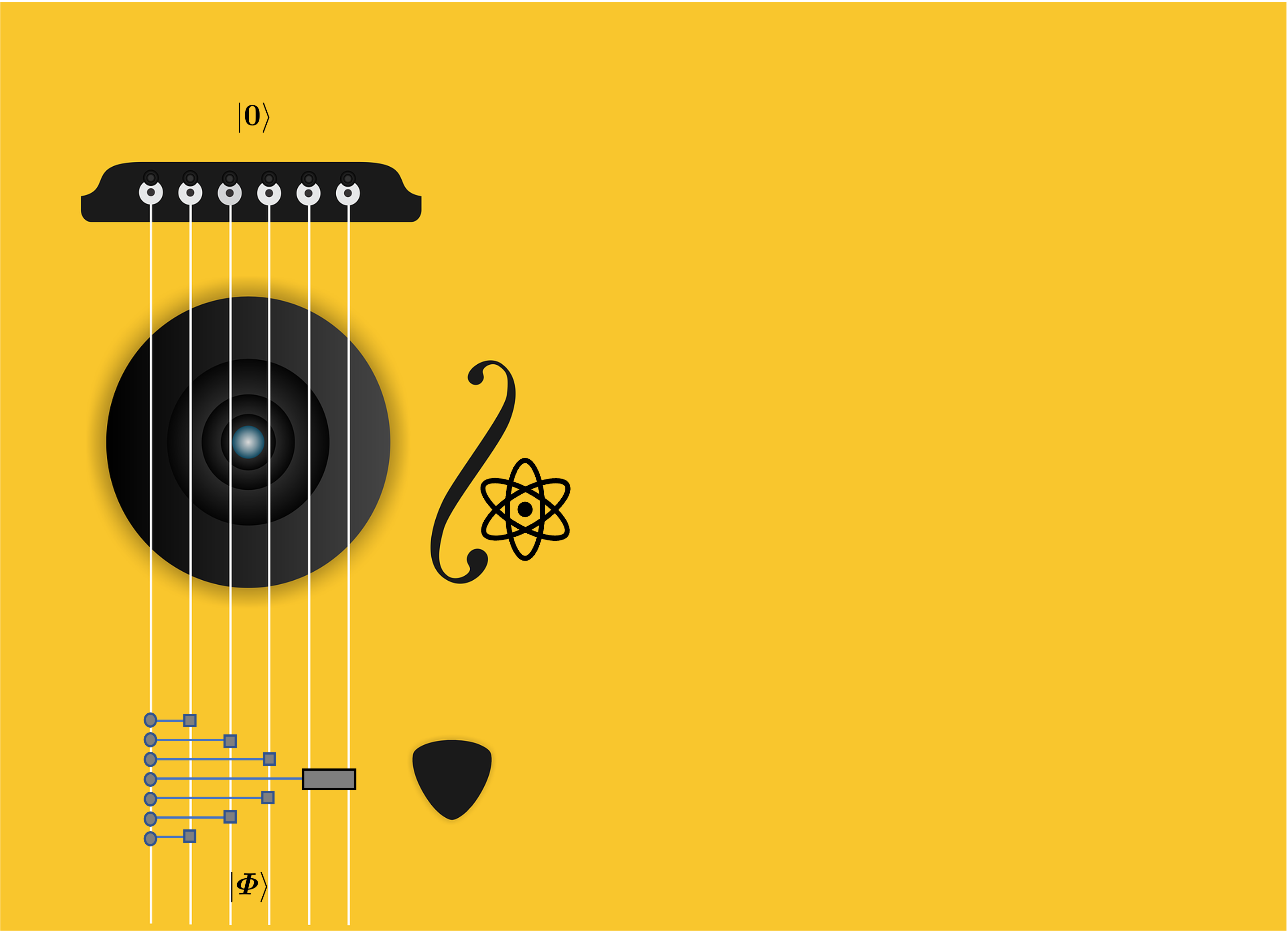

This project uses a variational quantum circuit (VQC) to play music, inspired by the QHack 2022 logo.

A user provides a melody, and we wish the VQC to generate a matching harmony. The simplest possibility is to use specific chords depending on the notes of the melody.

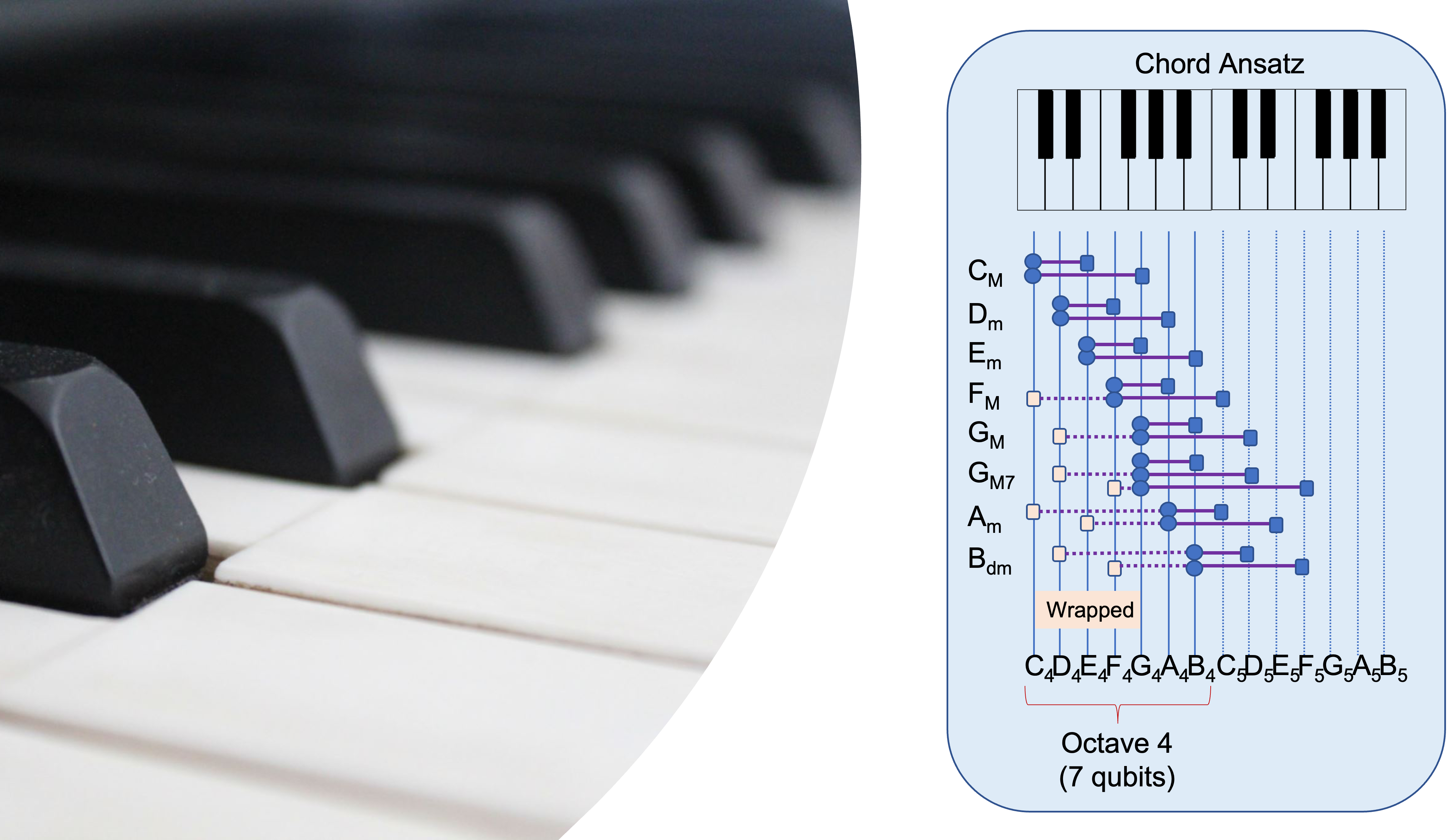

Let's consider a 7-qubit quantum processor, where each qubit is associated with a piano string for the notes C, D, E, F, G, A, and B in octave 4. Given a melody note, the quantum measurement may produce a chord; e.g., a C-major chord given a C-note melody. Such a chord ansatz may be realized with controlled-NOT (CNOT) gates, as shown in the accompanying figure.

With a limited number of qubits, some chords must be compromised into 'wrapped' chords. More qubits would allow more variations (more octaves and flat/sharp strings).

Nevertheless, it should be noted that the chord ansatzes interfere with each other, and thus we need more tricks. You may see the interference when running chord.py with more chord ansatzes.

python chord.py

C [1 1 1 1 0 0 1] # C-D-E-F-B ... anti-harmony

D [1 1 0 1 0 0 0]

E [0 1 1 0 1 0 0]

F [0 0 1 1 0 1 0]

G [0 0 0 1 1 0 1]

A [1 0 1 0 0 1 0] # Am ... OK

B [0 1 0 1 0 0 1] # Bdm ... OK

Only mutually exclusive chords, such as the pair of Am and Bdm, work together in the end. For example, we may pick exclusive chord ansatzes like:

python chord.py --chords CM Dm

C [1 0 1 0 1 0 0] # CM ... OK

D [0 1 0 1 0 1 0] # Dm ... OK

E [0 0 1 0 0 0 0] # no harmony ... just melody of E-note.

The above CNOT chord ansatz has no variational parameters and is therefore less interesting.
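Because CNOT gates only permute computational basis states, the chord ansatz above can be traced with plain bit flips. The following is a minimal sketch, not the contents of chord.py: the `TRIADS` table, the helper names, and the gate ordering (root to third, then root to fifth, chord by chord) are assumptions made for illustration.

```python
NOTES = ["C", "D", "E", "F", "G", "A", "B"]  # one qubit per string, octave 4

# Hypothetical chord table for this sketch: (root, third, fifth)
TRIADS = {
    "CM": ("C", "E", "G"),
    "Dm": ("D", "F", "A"),
    "Em": ("E", "G", "B"),
}

def one_hot(note):
    """Basis-state encoding of a single melody note."""
    return [1 if n == note else 0 for n in NOTES]

def apply_chords(melody_note, chords):
    """Apply CNOT(root -> third) and CNOT(root -> fifth) for each chord, in order."""
    bits = one_hot(melody_note)
    for name in chords:
        root, third, fifth = TRIADS[name]
        for target in (third, fifth):
            # A CNOT on a basis state flips the target iff the control bit is 1
            bits[NOTES.index(target)] ^= bits[NOTES.index(root)]
    return bits

# Exclusive chords (no shared strings) leave each other alone
print(apply_chords("C", ["CM", "Dm"]))  # [1, 0, 1, 0, 1, 0, 0]
# CM and Em share E and G: the E set by CM triggers Em, which clears G and sets B
print(apply_chords("C", ["CM", "Em"]))  # [1, 0, 1, 0, 0, 0, 1]
```

With a different gate ordering the interfering result would differ, but the cause is the same: a note set by one chord acts as the control of another.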

In this project, we wish to design a VQC to raise a quantum pianist or a quantum guitarist (hopefully with a passion to deviate from regular behavior depending on his mood, i.e., quantum states/errors). Let's solve it with a quantum machine learning (QML) framework.

We may use the package manager pip with Python 3.9. We use PennyLane for QML.

pip install pennylane==0.21.0

pip install pygame==2.1.2

pip install argparse

pip install dill==0.3.4

pip install tqdm==4.62.3

pip install matplotlib==3.5.1

Other versions should work.

We first synthesize sounds using the submodule Synthesizer_in_Python, licensed under MIT. The wrapper script synth.py generates wav files in the Sounds directory, e.g., for piano and guitar sounds:

python synth.py --octaves 4 --notes C D E F G A B --sound piano acoustic

We may then listen to a piano note such as C4.wav.
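To give a rough idea of what synthesizing a note involves, here is a minimal standard-library sketch that renders a decaying sine tone as WAV data. It is not how Synthesizer_in_Python or synth.py works; the function names, the decay constant, and the use of a pure sine wave are all assumptions for illustration. Only the equal-temperament pitch formula (A4 = 440 Hz) is standard.

```python
import io
import math
import struct
import wave

# Semitone offsets of the natural notes within one octave
SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}

def note_frequency(note, octave=4):
    """Equal-temperament frequency in Hz, with A4 = 440 Hz."""
    midi = 12 * (octave + 1) + SEMITONES[note]  # C4 -> 60, A4 -> 69
    return 440.0 * 2 ** ((midi - 69) / 12)

def sine_wav_bytes(freq, duration=0.5, rate=44100):
    """Render a decaying sine tone as 16-bit mono WAV bytes (hypothetical helper)."""
    frames = bytearray()
    for i in range(int(duration * rate)):
        t = i / rate
        amp = 0.8 * math.exp(-3.0 * t)  # simple exponential decay, chosen arbitrarily
        frames += struct.pack("<h", int(32767 * amp * math.sin(2 * math.pi * freq * t)))
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)   # mono
        w.setsampwidth(2)   # 16-bit samples
        w.setframerate(rate)
        w.writeframes(bytes(frames))
    return buf.getvalue()

c4 = sine_wav_bytes(note_frequency("C", 4))
print(round(note_frequency("C", 4), 2), len(c4))
```

Writing `c4` to a file named C4.wav would give a playable tone, although it will sound much plainer than the piano and guitar sounds produced by the submodule.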

The synthesized sound may be checked with twinkle.py:

python twinkle.py --duration 0.1

You should hear twinkle star as in twinkle.mp4; otherwise, revisit the steps above.

Let's invite three of our qusicians, each of whom prefers a particular ansatz:

- qusician-Alice: BasicEntanglerLayers

- qusician-Bob: StronglyEntanglingLayers

- qusician-Charlie: RandomLayers

A qusician plays the piano based on a melody line, which is embedded with BasisEmbedding. Our qusician-Charlie may look like the following:

import pennylane as qml
from pennylane import numpy as np

layers = 2
wires = ['C', 'D', 'E', 'F', 'G', 'A', 'B']  # 1-octave strings
insights = np.random.randn(layers, 7, requires_grad=True)  # variational parameter weights
dev = qml.device('default.qubit', wires=wires, shots=1)  # e.g., a simulator; other devices may be used

@qml.qnode(dev)
def qusician(melody):
    # melody line given; 'C'-note for [1,0,0,0,0,0,0]
    qml.BasisEmbedding(features=melody, wires=wires)
    # think better harmony
    qml.RandomLayers(weights=insights, wires=wires)
    # type keyboard
    return qml.sample(wires=wires)  # hope 'CM' chord [1,0,1,0,1,0,0] for 'C'-note melody

The qusician Python class is defined in qusic.py. Our qusician-Charlie (with 2-layer insights) would visit us by calling:

python qusic.py --ansatz RandomLayers --layers 2 7

Note that, without any teaching, they are just novice-level players because the variational parameters are random at the beginning.

Also note that the talent depends on how he/she thinks (i.e., the quantum ansatz) and how deep his/her insights are (i.e., the number of quantum layers).
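The melody encoding used by the qusician, and the reading of a measured bit string as pressed keys, can be sketched in plain Python. The helper names below are hypothetical and not taken from qusic.py, and treating a break as the all-zero bit string is an assumption.

```python
NOTES = ["C", "D", "E", "F", "G", "A", "B"]  # same order as the qubit wires

def encode_melody(note):
    """One-hot basis state for a melody note; None (a break) gives all zeros (assumed)."""
    return [int(n == note) for n in NOTES]

def decode_keys(bits, octave=4):
    """Names of the keys typed in a measured sample, e.g. for picking wav files."""
    return [f"{n}{octave}" for n, b in zip(NOTES, bits) if b]

print(encode_melody("C"))                    # [1, 0, 0, 0, 0, 0, 0]
print(decode_keys([1, 0, 1, 0, 1, 0, 0]))    # ['C4', 'E4', 'G4']
```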

Let's introduce our qaestro, who may instruct them to play the piano.

Our qaestro teacher is defined in qaestro.py. He may use a particular teaching style, such as AdamOptimizer. He tries to minimize the students' mis-fingering (as a cost): he asks how likely the students are to type each key given a melody note, and assigns a penalty, e.g., based on the binary cross-entropy (BCE) loss, as below.

qaestro = qml.AdamOptimizer()  # a different teaching style (optimizer) may be used

def mis_finger(insights):
    melody = np.random.choice(wires)     # melody note given
    typing = qusician(melody, insights)  # qusician answers how likely each key is typed
    harmony = chords[melody]             # target harmony
    penalty = BCE(typing, harmony)       # how close the typing is to the target
    return penalty

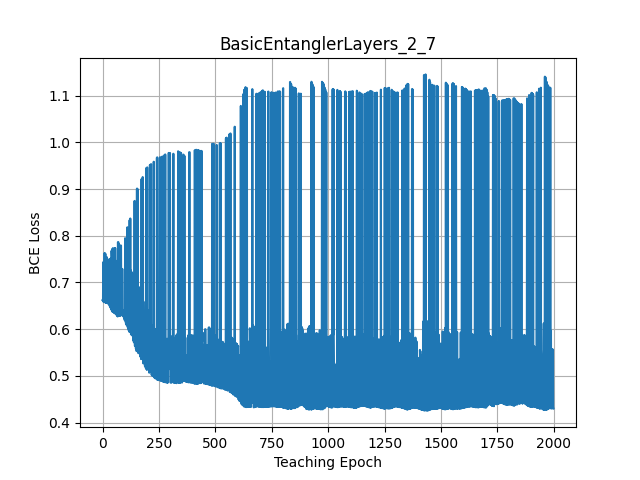

insights = qaestro.step(mis_finger, insights)  # qaestro's guide

Let's see how qusician-Alice would be trained by our qaestro:

python qaestro.py --ansatz BasicEntanglerLayers --layers 2 7 --epoch 2000 --showfig

By default, it saves the learning trajectory figure in plots/BasicEntanglerLayers_2_7.png.
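The mis-fingering penalty that qaestro minimizes can be illustrated without any quantum library. The sketch below assumes that each qubit is read out as a Pauli-Z expectation value and converted to a key-press probability before the BCE loss is applied; the function names and the clipping constant `eps` are hypothetical, and the actual cost in qaestro.py may differ.

```python
import math

def z_to_prob(expval_z):
    """Map a Pauli-Z expectation in [-1, 1] to the probability of measuring 1."""
    return (1.0 - expval_z) / 2.0

def bce(probs, target, eps=1e-7):
    """Mean binary cross-entropy between key-press probabilities and a target chord."""
    total = 0.0
    for p, t in zip(probs, target):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(target)

cm_chord = [1, 0, 1, 0, 1, 0, 0]                    # target harmony for a C-note melody
undecided = [z_to_prob(0.0)] * 7                    # every key typed with probability 0.5
confident = [z_to_prob(-0.98 if t else 0.98) for t in cm_chord]

print(bce(undecided, cm_chord))   # equals log(2), the penalty of a coin-flip player
print(bce(confident, cm_chord))   # much smaller penalty
```

A penalty of log(2) per key corresponds to a player who types every key at random, which gives a reference level when reading the learning trajectories.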

Her skill improves, though with large deviation, as shown below.

After training, she looks like this:

>> model.draw()

C: ──RX(-6.88e-06)──╭C────────────────────────────────────────────────────────────────────────╭X──RX(-0.000264)──╭C──────────────────────╭X──┤ ⟨Z⟩

D: ──RX(1.09e-05)───╰X──╭C───RX(2.09e-05)─────────────────────────────────────────────────────│──────────────────╰X──╭C──────────────────│───┤ ⟨Z⟩

E: ──RX(-1.56)──────────╰X──╭C──────────────RX(-0.853)────────────────────────────────────────│──────────────────────╰X──╭C──────────────│───┤ ⟨Z⟩

F: ──RX(-1.3)───────────────╰X─────────────╭C────────────RX(1.66)─────────────────────────────│──────────────────────────╰X──╭C──────────│───┤ ⟨Z⟩

G: ──RX(1.36)──────────────────────────────╰X───────────╭C──────────RX(-2.37)─────────────────│──────────────────────────────╰X──╭C──────│───┤ ⟨Z⟩

A: ──RX(-2.15)──────────────────────────────────────────╰X─────────╭C──────────RX(-0.000427)──│──────────────────────────────────╰X──╭C──│───┤ ⟨Z⟩

B: ──RX(8.41e-05)──────────────────────────────────────────────────╰X─────────────────────────╰C──RX(-0.00425)───────────────────────╰X──╰C──┤ ⟨Z⟩

By default, the trained model is saved in models/BasicEntanglerLayers_2_7.pkl.

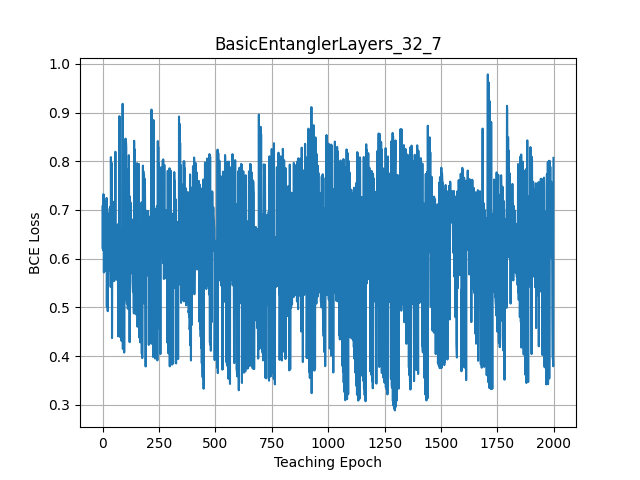

Unfortunately, even with deeper thinking (a 32-layer ansatz), her skill does not seem that great:

python qaestro.py --ansatz BasicEntanglerLayers --layers 32 7 --epoch 2000 --showfig

Let's check how our qusician-Bob would do:

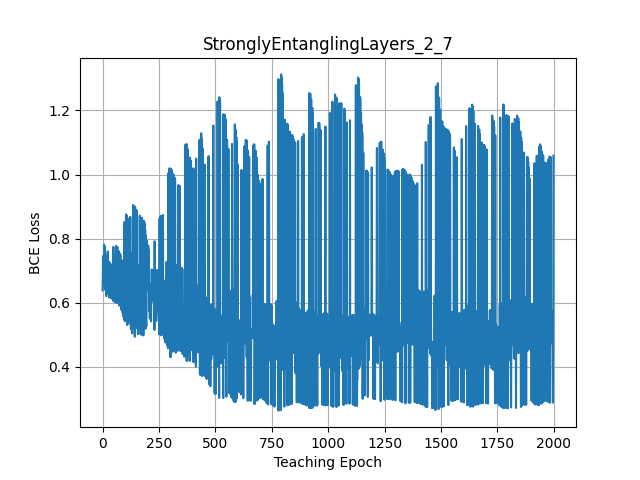

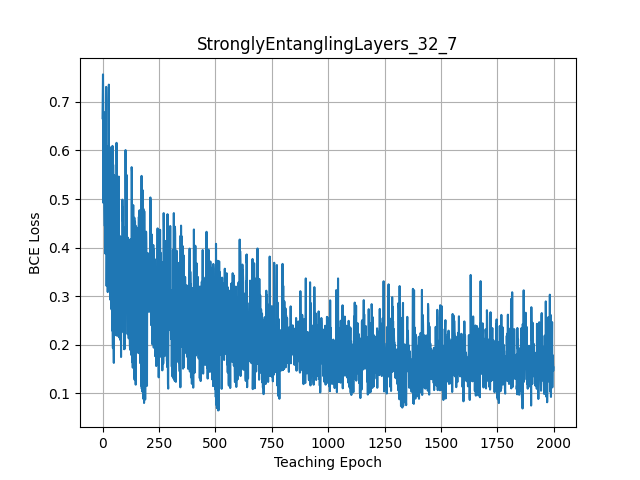

python qaestro.py --ansatz StronglyEntanglingLayers --layers 2 7 --epoch 2000 --showfig

It seems that his skill is comparable to qusician-Alice's. However, he seems more serious about the piano training when he uses deeper insights with a 32-layer ansatz:

python qaestro.py --ansatz StronglyEntanglingLayers --layers 32 7 --epoch 2000 --showfig

His playing looks more stable, with less scolding by qaestro. Note that StronglyEntanglingLayers has three times as many variational parameters per layer as BasicEntanglerLayers.

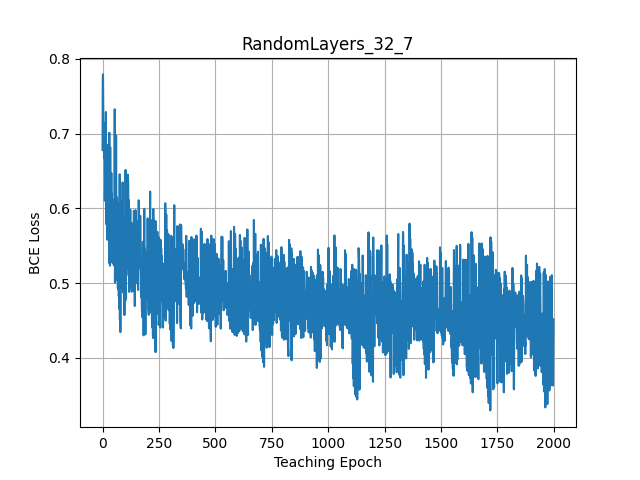

How skillful is our qusician-Charlie?

python qaestro.py --ansatz RandomLayers --layers 2 7 --epoch 2000 --showfig

His playing seems awful and receives a lot of penalty from qaestro. However, we found that he becomes more serious about the piano training when he considers deeper insights with a 32-layer ansatz:

python qaestro.py --ansatz RandomLayers --layers 32 7 --epoch 2000 --showfig

Then, he may be more skillful than qusician-Alice, even though the number of variational parameters is equivalent.
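The parameter counts behind these comparisons follow from the weight shapes of the three PennyLane templates: (layers, wires) for BasicEntanglerLayers, (layers, wires, 3) for StronglyEntanglingLayers, and (layers, rotations) for RandomLayers. The helper below is a hypothetical convenience for this README, assuming that the second number passed to --layers (7) is the per-layer width.

```python
def n_params(ansatz, layers, wires=7, rotations=7):
    """Number of variational parameters for each qusician's ansatz."""
    if ansatz == "BasicEntanglerLayers":
        return layers * wires            # one rotation angle per wire per layer
    if ansatz == "StronglyEntanglingLayers":
        return layers * wires * 3        # three rotation angles per wire per layer
    if ansatz == "RandomLayers":
        return layers * rotations        # a fixed number of random rotations per layer
    raise ValueError(f"unknown ansatz: {ansatz}")

for name in ("BasicEntanglerLayers", "StronglyEntanglingLayers", "RandomLayers"):
    print(name, n_params(name, layers=32))
# BasicEntanglerLayers 224
# StronglyEntanglingLayers 672
# RandomLayers 224
```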

Let's listen to how our qusicians Alice, Bob, and Charlie would play the piano for twinkle star. Our qoncert is held by calling qoncert.py as follows (inviting a trained qusician model):

python qoncert.py --load models/RandomLayers_2_7.pkl

By default, it saves play.npy and play.wav. How good are they?

- qusician-Alice: BasicEntanglerLayers_64_7.wav; umm, she mis-fingers at a break.

- qusician-Bob: StronglyEntanglingLayers_64_7.wav; he sounds good, at least knows when not to type keys at break.

- pre-training qusician-Bob: StronglyEntanglingLayers_64_7_epoch0.wav; his playing indeed improved compared with his playing before qaestro's instruction.

- qusician-Charlie: RandomLayers_64_7.wav; ... close but he still mis-fingers like Alice ...

- careless qusician-Charlie: RandomLayers_1_7.wav; nevertheless, his playing with deeper insights (64-layer) was indeed better than his playing with shallow thought (1-layer), where he types the same keys most of the time regardless of the melody line.

Not so great? Please do not blame them, as our qaestro teacher does not play like a real maestro either (qoncert.py --qaestro); see qaestro.mp4. Also, they spent just a few minutes learning how to play. In addition, their playing may still be better than the original melody alone (twinkle.mp4).

Playing the guitar is similar to, but different from, playing the piano. How should qubits be associated with the 6 strings and fingering? Let's investigate this in detail someday; for now, let's just discuss different styles of arpeggio and stroke for playing the guitar. Let our qianists play the guitar as quitarists:

python qoncert.py --verb --load models/StronglyEntanglingLayers_64_7.pkl --sound acoustic --delay 3000 --stroke 2

Here, quitarist-Bob uses a slow 2-stroke arpeggio. Let's enjoy his quitar concert:

- Rapid stroke (delay=100, stroke=1); umm, not impressive

- Slow stroke (delay=3000, stroke=1); sounds ok

- Long wrapped stroke (delay=8000, stroke=1); interesting

- Slow 2-stroke (delay=3000, stroke=2); feels good

This could be improved by adding more variants of arpeggio.

It is straightforward to use a real quantum processing unit (QPU) for testing our qusic. For example, we may use IBM Q Experience. You may specify the account token via the PennyLane configuration file, along with a specific backend of a real QPU, such as 'ibmq_london'. To run our qusic.py on a real quantum computer, you just need to change the device as follows:

pip install pennylane-qiskit==0.21.0 # qiskit plugin

python qusic.py --dev qiskit.ibmq

We can also use different backends such as Amazon Braket. For example, we may run:

pip install amazon-braket-pennylane-plugin==1.5.6 # AWS plugin

python qusic.py --dev braket.aws.qubit

Further examples and tutorials are found at amazon-braket-example and README.

In the future, our quantum musicians may consider:

- Volume

- Tempo/Pitch

- More chords/more strings

- Trend of melody

- Orchestra

- Reinforcement learning with rewards by social audiences

- Derivation of cost Hamiltonian for QAOA

- ...

MIT. Copyright (c) 2022 Toshi Koike-Akino. This project is provided 'as-is', without warranty of any kind. In no event shall the authors be liable for any claims, damages, or other such variants.