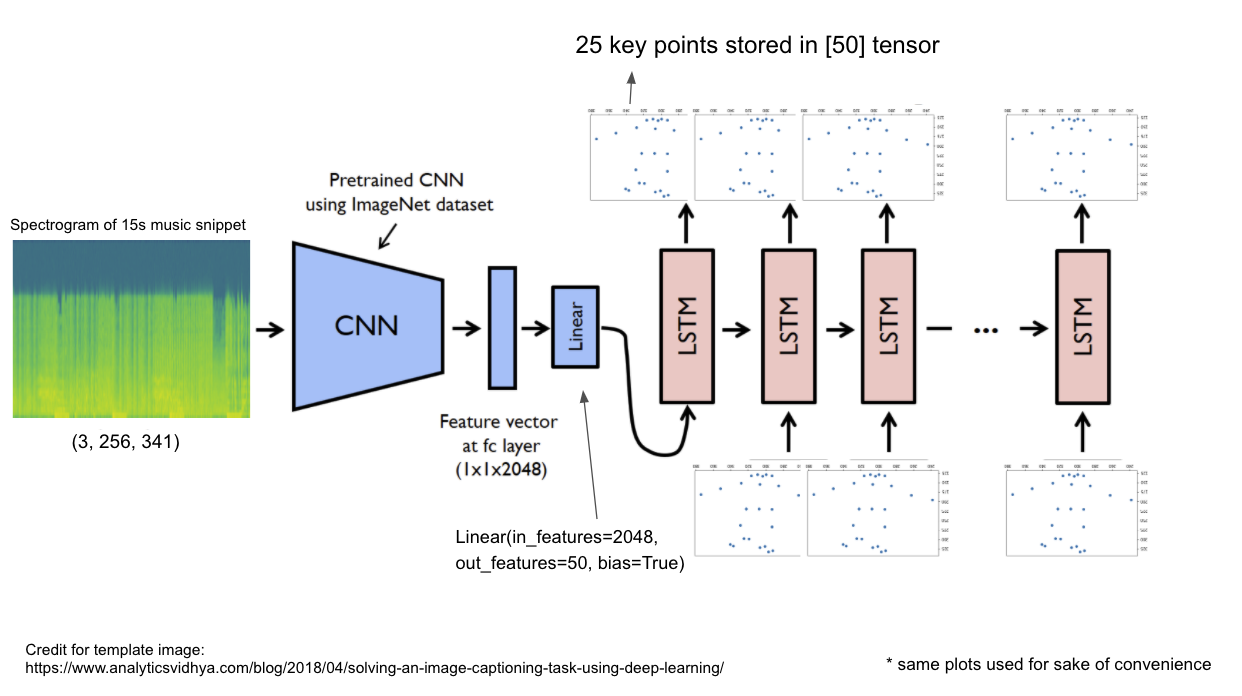

Training an encoder-decoder (CNN-LSTM) model to generate a dance (or a sequence of poses) from spectrograms of music snippets.

I was inspired by the recent TikTok dance trend. I figured that, since there are so many users on TikTok, it would be quite easy to obtain large amounts of dance video data. Combined with what I learned about pose estimation in Udacity's Intel Edge AI for IoT Developers Nanodegree and about image captioning in Udacity's Computer Vision Nanodegree, this made me feel the project would be accessible to me.
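The encoder-decoder idea can be sketched in PyTorch as below. This is a minimal illustration under stated assumptions, not the code in the notebooks: the layer sizes, the spectrogram shape and the 50-value pose vector (assumed to be 25 OpenPose BODY_25 keypoints × (x, y)) are all hypothetical. The decoder follows the image-captioning pattern: the encoded spectrogram is the first LSTM input, followed by the ground-truth previous poses (teacher forcing).

```python
import torch
import torch.nn as nn


class SpectrogramEncoder(nn.Module):
    """Small CNN that turns a 1-channel spectrogram into a feature vector (sizes are assumptions)."""

    def __init__(self, embed_size):
        super().__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(16, 32, kernel_size=3, padding=1), nn.ReLU(),
            nn.AdaptiveAvgPool2d((4, 4)),  # fixed output size regardless of clip length
        )
        self.fc = nn.Linear(32 * 4 * 4, embed_size)

    def forward(self, spec):  # spec: (batch, 1, freq_bins, time_bins)
        return self.fc(self.conv(spec).flatten(1))  # (batch, embed_size)


class PoseDecoder(nn.Module):
    """LSTM that predicts one pose vector per video frame."""

    def __init__(self, embed_size, hidden_size, pose_dim):
        super().__init__()
        self.pose_embed = nn.Linear(pose_dim, embed_size)
        self.lstm = nn.LSTM(embed_size, hidden_size, batch_first=True)
        self.out = nn.Linear(hidden_size, pose_dim)

    def forward(self, features, poses):
        # features: (batch, embed_size); poses: (batch, seq_len, pose_dim)
        # Step 0 sees the audio feature; step t > 0 sees the ground-truth pose t - 1.
        inputs = torch.cat([features.unsqueeze(1), self.pose_embed(poses[:, :-1])], dim=1)
        hidden, _ = self.lstm(inputs)  # (batch, seq_len, hidden_size)
        return self.out(hidden)  # (batch, seq_len, pose_dim)


encoder = SpectrogramEncoder(embed_size=64)
decoder = PoseDecoder(embed_size=64, hidden_size=128, pose_dim=50)
spec = torch.randn(2, 1, 128, 646)  # hypothetical batch of 2 spectrograms
poses = torch.randn(2, 225, 50)     # 225 frames = 15 s at 15 fps
pred = decoder(encoder(spec), poses)
loss = nn.L1Loss()(pred, poses)
print(pred.shape)  # torch.Size([2, 225, 50])
```

At inference time there are no ground-truth poses, so each predicted frame would be fed back as the next input instead.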

OpenPose needs to be installed
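When OpenPose is run with its `--write_json` flag, it writes one JSON file per frame in which each detected person has a flat `pose_keypoints_2d` list of (x, y, confidence) triples. The sketch below assumes that output, the default BODY_25 model (25 keypoints), and that only the first detected person matters; `parse_pose` and `load_sequence` are hypothetical helpers, not part of OpenPose or of this repo.

```python
import glob
import json
import os

import numpy as np

NUM_KEYPOINTS = 25  # assumption: OpenPose's default BODY_25 model


def parse_pose(frame_json, num_keypoints=NUM_KEYPOINTS):
    """Return a (num_keypoints, 3) array of x, y, confidence for the first person."""
    data = json.loads(frame_json)
    if not data["people"]:
        return np.zeros((num_keypoints, 3))  # nobody detected in this frame
    flat = data["people"][0]["pose_keypoints_2d"]
    return np.asarray(flat, dtype=float).reshape(num_keypoints, 3)


def load_sequence(json_dir):
    """Stack every per-frame JSON file in a directory into (num_frames, 25, 3)."""
    # Assumes the file names sort in frame order.
    paths = sorted(glob.glob(os.path.join(json_dir, "*.json")))
    frames = []
    for path in paths:
        with open(path) as f:
            frames.append(parse_pose(f.read()))
    return np.stack(frames)


example = json.dumps({"people": [{"pose_keypoints_2d": list(range(75))}]})
pose = parse_pose(example)
print(pose.shape)  # (25, 3)
```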

Run the Jupyter notebooks in the order given by their index numbers.

- (041120) The AI only 'sees' the left channel of the music, as a spectrogram can only be created from one channel.

- (091120) Using a single 15-second spectrogram might cause information to be lost.

- (091120) In image captioning I dealt with captions of around 10 words, but a 15-second video at 15 fps gives 225 frames (15 × 15). I am not sure the LSTM will work well on what is effectively a 225-word sentence.

- (141120) I am not able to use cv2 and OpenPose within the same script because of a memory allocation error (TCMalloc). It was working fine until I decided to stop working in a virtual environment so that scikit-learn would work.

- (281120) My pose plots are upside down, but I don't think this should matter.

- (011220) I tried to overfit the model as a proof of concept but couldn't get the L1 loss below 20. After a few frames, the model keeps repeating the same prediction.

- (041220) After revisiting my code, I realised that I had assumed the input shape to the LSTM was (batch, seq_len, input_size). The documentation says the default is (seq_len, batch, input_size). This is huge! I have since set `batch_first=True` in the LSTM parameters and will be training my model from scratch.
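Regarding the 041120 note: a spectrogram is computed from one signal at a time, so a left-channel-only pipeline never sees sounds panned to the right. One common workaround, which the notes do not say was used here, is to downmix stereo to mono by averaging the channels first (another is to stack one spectrogram per channel as a 2-channel input). This NumPy-only sketch uses hypothetical helpers `to_mono` and `magnitude_spectrogram` with assumed values `n_fft=1024` and `hop=512`; in practice a library such as librosa would usually compute the spectrogram.

```python
import numpy as np


def to_mono(stereo):
    """Average a (num_samples, 2) stereo array into a single channel."""
    return stereo.mean(axis=1)


def magnitude_spectrogram(signal, n_fft=1024, hop=512):
    """Hann-windowed magnitude STFT; returns (n_fft // 2 + 1, num_frames)."""
    window = np.hanning(n_fft)
    num_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(num_frames)])
    return np.abs(np.fft.rfft(frames, axis=1)).T


sr = 22050
t = np.arange(sr) / sr                  # one second of audio
left = np.sin(2 * np.pi * 440 * t)      # tone present only in the left channel
right = np.sin(2 * np.pi * 2000 * t)    # tone present only in the right channel
stereo = np.stack([left, right], axis=1)

spec_left = magnitude_spectrogram(stereo[:, 0])    # what a left-only pipeline sees
spec_mono = magnitude_spectrogram(to_mono(stereo))
# The 2000 Hz tone falls near frequency bin 2000 * 1024 / 22050 ≈ 93.
print(spec_mono.shape)  # (513, 42)
```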
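For the two 091120 notes, one option (an assumption, not something the notes describe) is to cut each 15-second clip into shorter, time-aligned audio/pose windows, which shortens both the spectrogram and the LSTM sequence. At 15 fps a 15 s clip has 15 × 15 = 225 frames; with hypothetical 3-second windows, each training example has 45 frames. `split_aligned`, the 22050 Hz sample rate and the 50-value pose vector are illustrative.

```python
import numpy as np


def split_aligned(audio, poses, sr, fps, window_seconds):
    """Cut audio samples and pose frames into matching fixed-length windows."""
    samples_per_window = int(sr * window_seconds)
    frames_per_window = int(fps * window_seconds)
    num_windows = min(len(audio) // samples_per_window, len(poses) // frames_per_window)
    pairs = []
    for i in range(num_windows):
        audio_win = audio[i * samples_per_window:(i + 1) * samples_per_window]
        pose_win = poses[i * frames_per_window:(i + 1) * frames_per_window]
        pairs.append((audio_win, pose_win))
    return pairs


sr, fps, clip_seconds = 22050, 15, 15
audio = np.zeros(sr * clip_seconds)         # placeholder 15 s mono clip
poses = np.zeros((fps * clip_seconds, 50))  # 225 frames of flattened poses
pairs = split_aligned(audio, poses, sr, fps, window_seconds=3)
print(len(pairs), pairs[0][0].shape, pairs[0][1].shape)  # 5 (66150,) (45, 50)
```

Choosing a window length that is a whole number of video frames (3 s × 15 fps = 45) keeps the audio and pose boundaries exactly aligned.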
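A likely explanation for the 281120 note: pose keypoints are in image coordinates, where y increases downwards, while Matplotlib puts y increasing upwards by default, so poses plotted directly appear upside down. Inverting the y-axis fixes the display without touching the data. `plot_pose` is a hypothetical helper, and treating zero confidence as an undetected joint is an assumption about the keypoint format.

```python
import matplotlib

matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np


def plot_pose(ax, keypoints):
    """Scatter one frame of (x, y, confidence) keypoints in image orientation."""
    visible = keypoints[:, 2] > 0  # assumption: undetected joints have confidence 0
    ax.scatter(keypoints[visible, 0], keypoints[visible, 1])
    ax.invert_yaxis()  # image y grows downwards, so flip the axis to match
    ax.set_aspect("equal")
    return ax


frame = np.array([[100.0, 50.0, 0.9],    # e.g. a head point near the top of the image
                  [100.0, 120.0, 0.8],   # e.g. a hip point lower in the image
                  [0.0, 0.0, 0.0]])      # undetected joint, skipped
fig, ax = plt.subplots()
plot_pose(ax, frame)
```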
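The 041220 layout matches PyTorch's `nn.LSTM`, which reads dimension 0 as time unless `batch_first=True`. The sketch below (sizes are arbitrary) shows why the bug is easy to miss: the default LSTM accepts a (batch, seq_len, input_size) tensor without error and returns the same output shape, but it treats the batch axis as time, so samples leak into each other.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch, seq_len, input_size, hidden_size = 4, 225, 50, 128
x = torch.randn(batch, seq_len, input_size)  # data laid out as (batch, seq_len, input_size)

lstm_default = nn.LSTM(input_size, hidden_size)                  # expects (seq_len, batch, input_size)
lstm_fixed = nn.LSTM(input_size, hidden_size, batch_first=True)  # expects (batch, seq_len, input_size)

with torch.no_grad():
    out_default, (h_default, _) = lstm_default(x)
    out_fixed, (h_fixed, _) = lstm_fixed(x)

    # Perturb only the first sample and check whether the second sample's output changes.
    x_perturbed = x.clone()
    x_perturbed[0] += 1.0
    leak_default = not torch.allclose(lstm_default(x_perturbed)[0][1], out_default[1])
    leak_fixed = not torch.allclose(lstm_fixed(x_perturbed)[0][1], out_fixed[1])

print(out_default.shape, out_fixed.shape)  # both torch.Size([4, 225, 128])
print(h_default.shape, h_fixed.shape)      # torch.Size([1, 225, 128]) torch.Size([1, 4, 128])
print(leak_default, leak_fixed)            # True False
```

Note that `batch_first` only changes the input and output tensors; the returned hidden and cell states keep the (num_layers, batch, hidden_size) layout either way.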

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Feature Branch (`git checkout -b feature/AmazingFeature`)

- Commit your Changes (`git commit -m 'Add some AmazingFeature'`)

- Push to the Branch (`git push origin feature/AmazingFeature`)

- Open a Pull Request

Distributed under the MIT License. See LICENSE for more information.

Gordon - gordonlim214@gmail.com