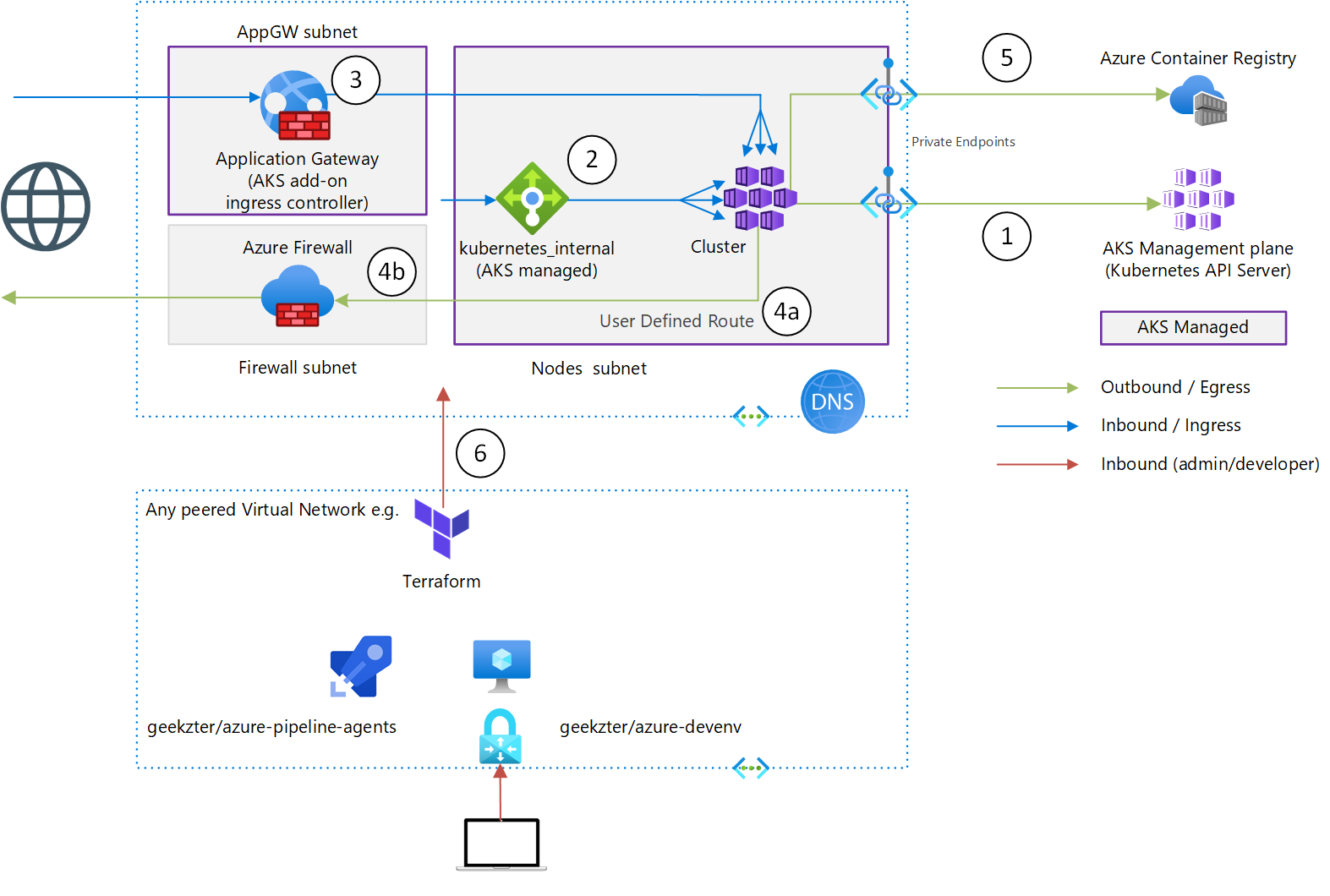

This repo lets you provision a network-isolated Azure Kubernetes Service (AKS) cluster. It customizes egress, ingress (with both an internal Load Balancer and Application Gateway), and the Kubernetes API Server (AKS management nodes), which is connected via Private Link. It uses Terraform, as Terraform can create all of the required Azure AD, Azure and Kubernetes resources.

When you create an Azure Kubernetes Service (AKS) cluster in the Azure Portal or with the Azure CLI, by default both application and management traffic use public IP addresses. This is a challenge in the enterprise, especially in regulated industries. Effort is needed to embed services in Virtual Networks, and in the case of AKS there are quite a few moving parts to consider.

To constrain connectivity to and from an AKS cluster, this repo implements the following measures:

1. The Kubernetes API server is the entry point for Kubernetes management operations and is hosted by Microsoft as a multi-tenant PaaS service. The API server can be projected into the Virtual Network using Private Link (article).
2. Instead of an external Load Balancer (with a public IP address), use an internal Load Balancer (article).
3. Application Gateway can be used to manage ingress traffic. There are multiple ways to set this up; by far the simplest is to use the AKS add-on, which lets AKS create the Application Gateway and maintain its configuration (article).
4. Use user-defined routes and an Azure Firewall to manage egress, instead of breaking out to the Internet directly (article).
5. Connect Azure Container Registry directly to the Virtual Network using a Private Endpoint.
6. To prevent yourself from being boxed in, CI/CD should be able to access the cluster. See connectivity below.

Note that measures 2 and 3 overlap; you only need one of the two.

AKS supports two networking 'modes'. These modes control how IP addresses are allocated to pods in the cluster. In short:

- kubenet assigns pods IP addresses from an address space separate from the virtual network, and uses NAT (network address translation) to route pod traffic via the agent nodes. This is where the Kubernetes term 'external IP' comes from; it is a private IP address known to the rest of the network.
- Azure CNI assigns pods IP addresses from the same address space as the rest of the virtual network. See the comparison.

I won't go into detail on these modes, as the choice between them does not make a major difference for the network isolation measures you need to take. This deployment has been tested with Azure CNI.

- To get started you need Git, the Azure CLI and Terraform (to install Terraform you can use tfenv on Linux & macOS, Homebrew on macOS, or Chocolatey on Windows).
- Some scripts require PowerShell.
- Application deployment requires kubectl.

If you're on macOS, you can run `brew bundle` in the repo root to install the required tools from the included Brewfile.
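Before running the steps below, a quick check that the tools listed above are on your `PATH` can save a failed run. This is a minimal POSIX shell sketch; `missing_tools` is a hypothetical helper, not part of the repo, and the tool names (`pwsh` for PowerShell, `az` for the Azure CLI) are assumptions about how the tools are installed on your machine.

```shell
# Hypothetical helper (not part of the repo): print each tool that is not on PATH
missing_tools() {
  for tool in "$@"; do
    # command -v succeeds only if the name resolves to a command
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Tools used in this README; adjust the list to your setup
missing=$(missing_tools git terraform pwsh kubectl az)
if [ -n "$missing" ]; then
  echo "Missing tools:" $missing
else
  echo "All prerequisites found"
fi
```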

As this provisions an isolated AKS cluster, how will you be able to access it once deployed? If you set the `peer_network_id` Terraform variable to a virtual network you're running Terraform from (or are connected to, e.g. using VPN), this project will set up the peering and Private DNS link required to look up the Kubernetes API Server and access cluster nodes. Without this, you can only perform a partial deployment; you won't be able to deploy applications.
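A minimal sketch of setting `peer_network_id`, assuming the variable takes the full Azure resource ID of the virtual network to peer with. The subscription, resource group and VNet names below are hypothetical placeholders. Terraform reads any input variable from an environment variable named `TF_VAR_<name>`, so exporting `TF_VAR_peer_network_id` before `terraform apply` is one option; a `terraform.tfvars` entry or a `-var` flag works as well.

```shell
# Hypothetical values: replace with the network you run Terraform from
subscription_id="00000000-0000-0000-0000-000000000000"
resource_group="my-devops-rg"
vnet_name="my-devops-vnet"

# Azure resource ID format for a virtual network
peer_network_id="/subscriptions/${subscription_id}/resourceGroups/${resource_group}/providers/Microsoft.Network/virtualNetworks/${vnet_name}"

# Terraform picks up input variables from TF_VAR_<name> environment variables
export TF_VAR_peer_network_id="$peer_network_id"
```

Rather than building the ID by hand, `az network vnet show --resource-group <group> --name <vnet> --query id -o tsv` returns it for an existing VNet.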

Example connectivity scenarios:

- The pipeline in this repository uses a scale set agent pool deployed into a virtual network with geekzter/azure-pipeline-agents.

- You can use geekzter/azure-devenv to create a fully prepped development VM in a VNet, and deploy from there.

1. Clone the repository:

   ```shell
   git clone https://github.com/geekzter/azure-aks.git
   ```

2. Change to the `terraform` directory:

   ```shell
   cd azure-aks/terraform
   ```

3. Log in to Azure with the Azure CLI:

   ```shell
   az login
   ```

   This also authenticates the Terraform `azuread` and `azurerm` providers. Optionally, you can select the subscription to target:

   ```shell
   az account set --subscription 00000000-0000-0000-0000-000000000000
   ```

   Then make it available to Terraform in bash or zsh:

   ```shell
   export ARM_SUBSCRIPTION_ID=$(az account show --query id -o tsv)
   ```

   or in PowerShell (pwsh):

   ```powershell
   $env:ARM_SUBSCRIPTION_ID=$(az account show --query id -o tsv)
   ```

4. Initialize Terraform:

   ```shell
   terraform init
   ```

5. Provision the resources:

   ```shell
   terraform apply
   ```
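The `azurerm` provider reads `ARM_SUBSCRIPTION_ID` from the environment, so in bash or zsh the variable has to be exported for the `terraform` process to see it; a plain assignment stays local to your shell. The sketch below demonstrates the difference, with a child `sh` process standing in for `terraform` and the placeholder subscription ID from the steps above.

```shell
# Start from a clean slate so the demonstration is deterministic
unset ARM_SUBSCRIPTION_ID

# A plain assignment is only visible to the current shell
ARM_SUBSCRIPTION_ID="00000000-0000-0000-0000-000000000000"
before=$(sh -c 'echo "${ARM_SUBSCRIPTION_ID:-unset}"')

# After export, child processes (such as terraform) inherit the value
export ARM_SUBSCRIPTION_ID
after=$(sh -c 'echo "${ARM_SUBSCRIPTION_ID:-unset}"')

echo "child process before export: $before"
echo "child process after export:  $after"
```

In PowerShell, assigning to `$env:ARM_SUBSCRIPTION_ID` already sets a process environment variable, so no separate export step is needed there.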

Demo applications are deployed with the following script:

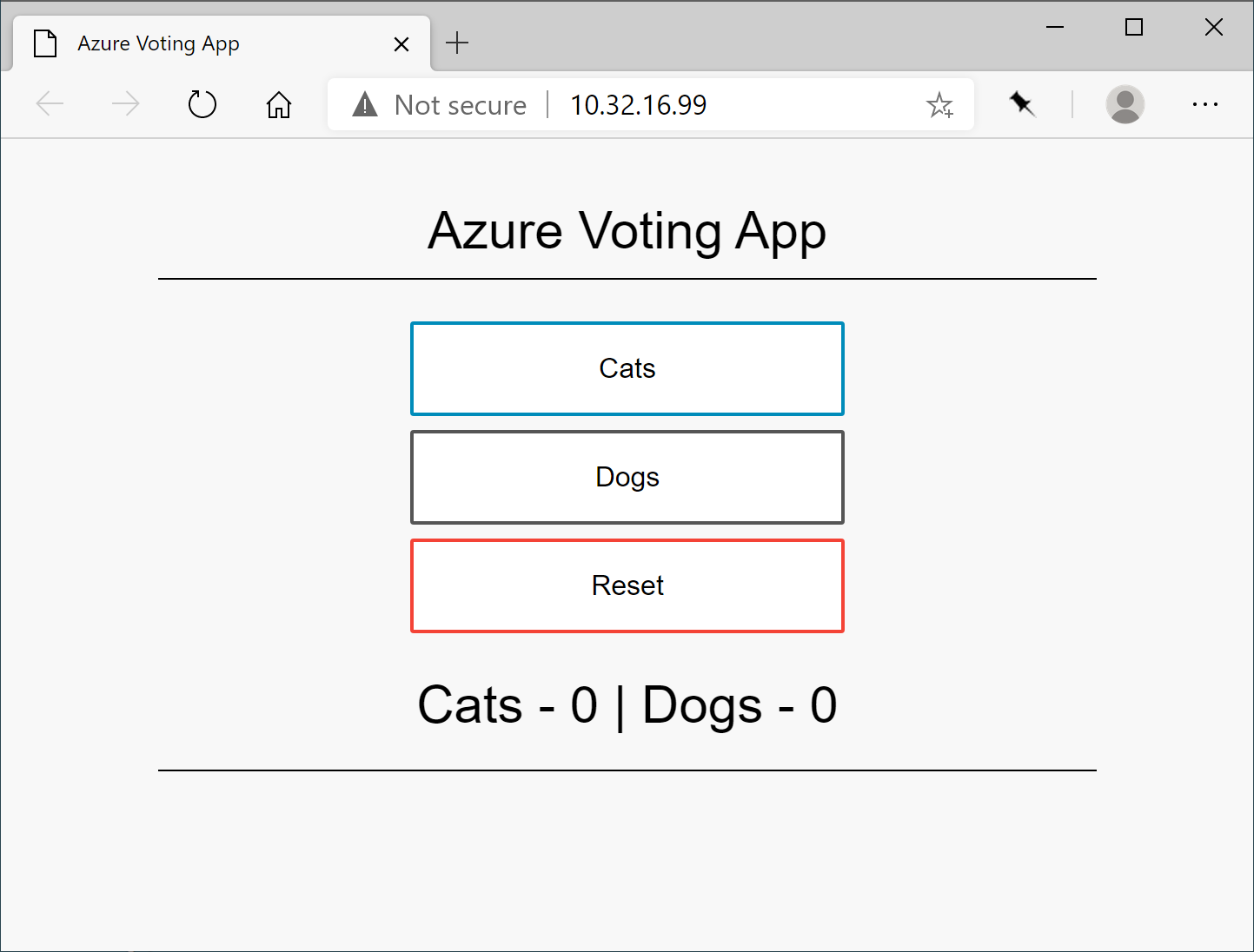

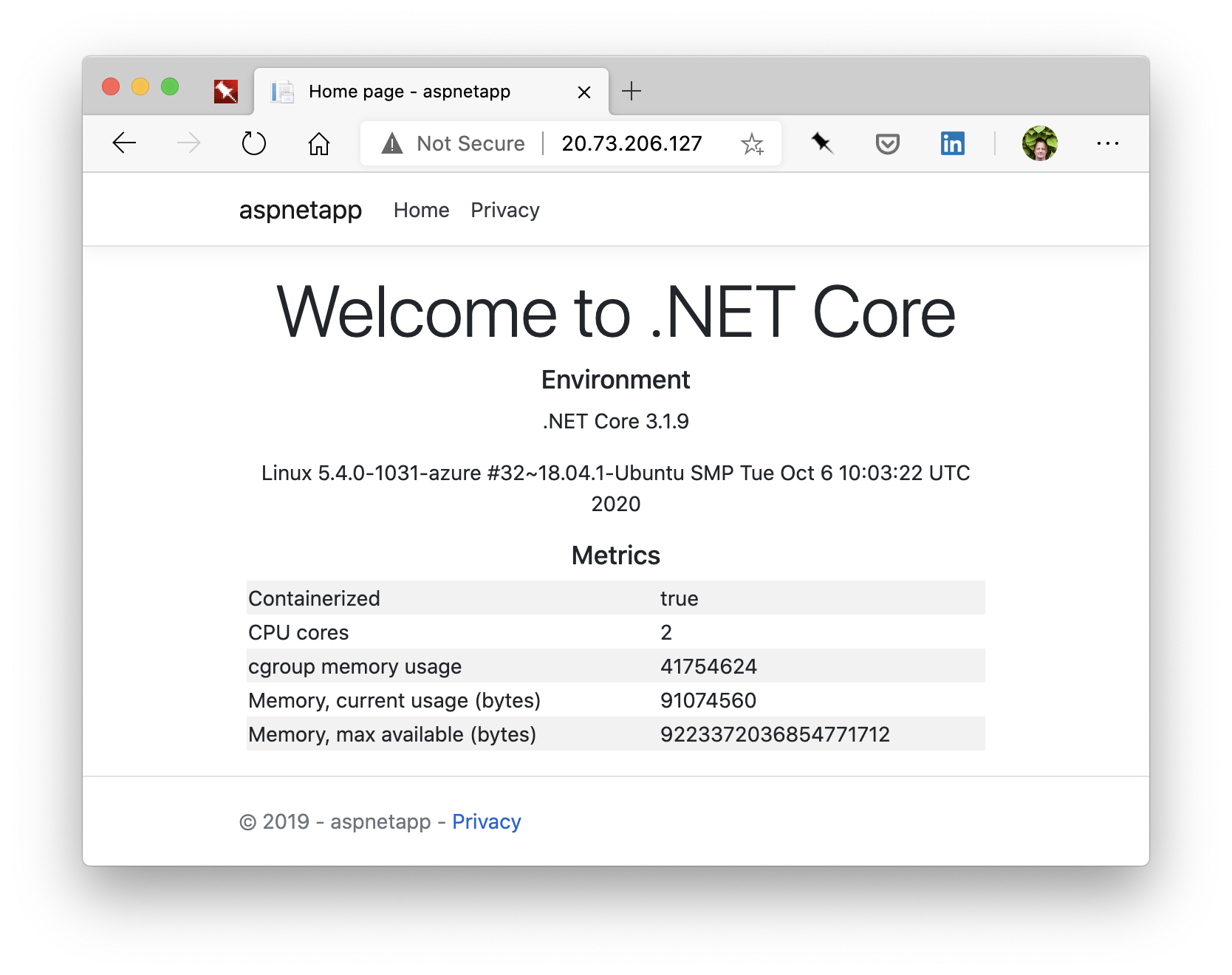

scripts/deploy_app.ps1This script will output the url's used by the demo applications. One application is exposed via Application Gateway and is publically accessible, the other over the internal Load Balancer.

Once deployed the applications will look like this:

| Internal Load Balancer: Voting App | Application Gateway: ASP.NET App |

|---|---|

|

|

When you want to destroy resources, run:

```shell
terraform destroy
```