PyTorch implementation of Actor-Critic (A2C) and OpenAI's clipped PPO in the gym CartPole-v0 and Pendulum-v0 environments

Implements A2C and PPO in PyTorch. Requirements:

- TensorFlow (for TensorBoard logging)

- PyTorch (>= 1.0; 1.0.1 was used in my experiments)

- gym

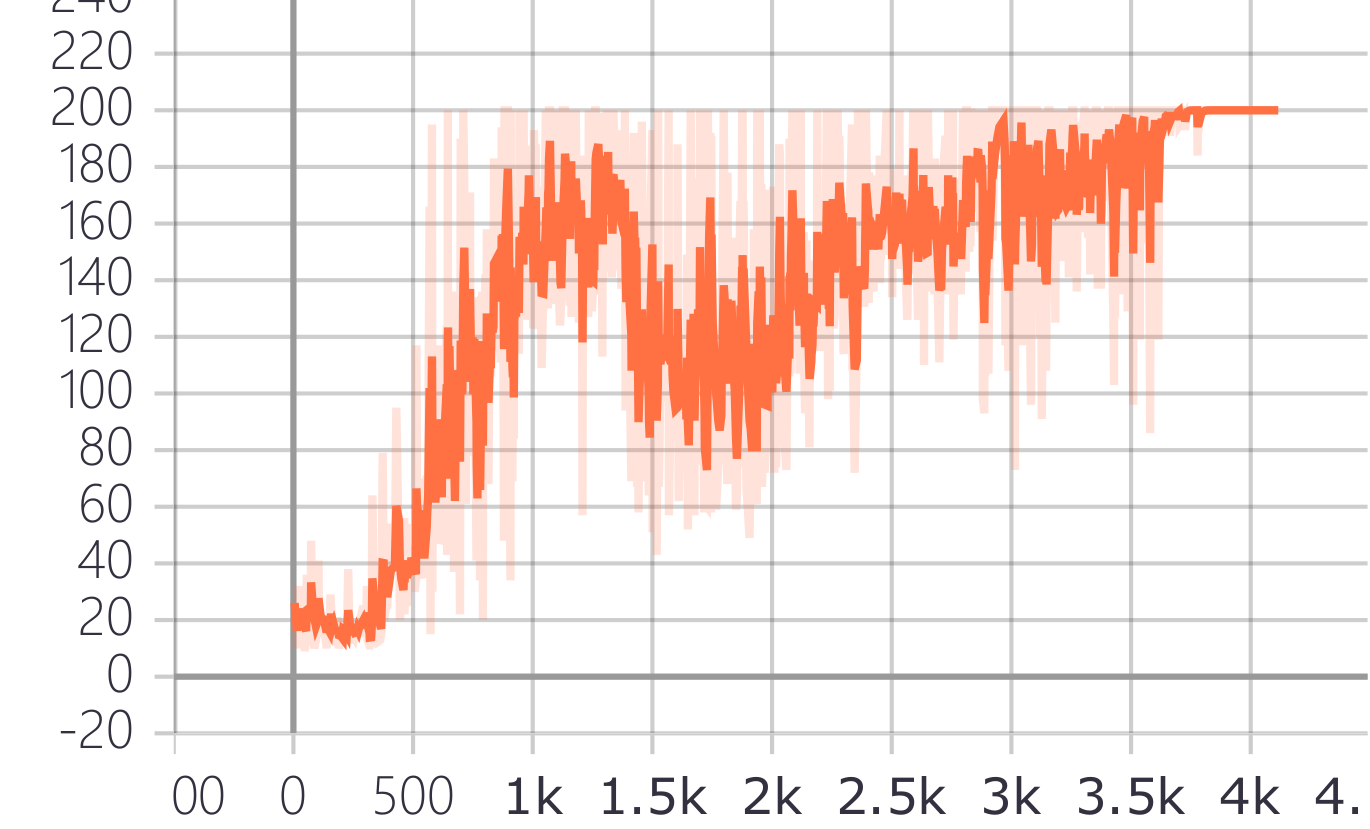

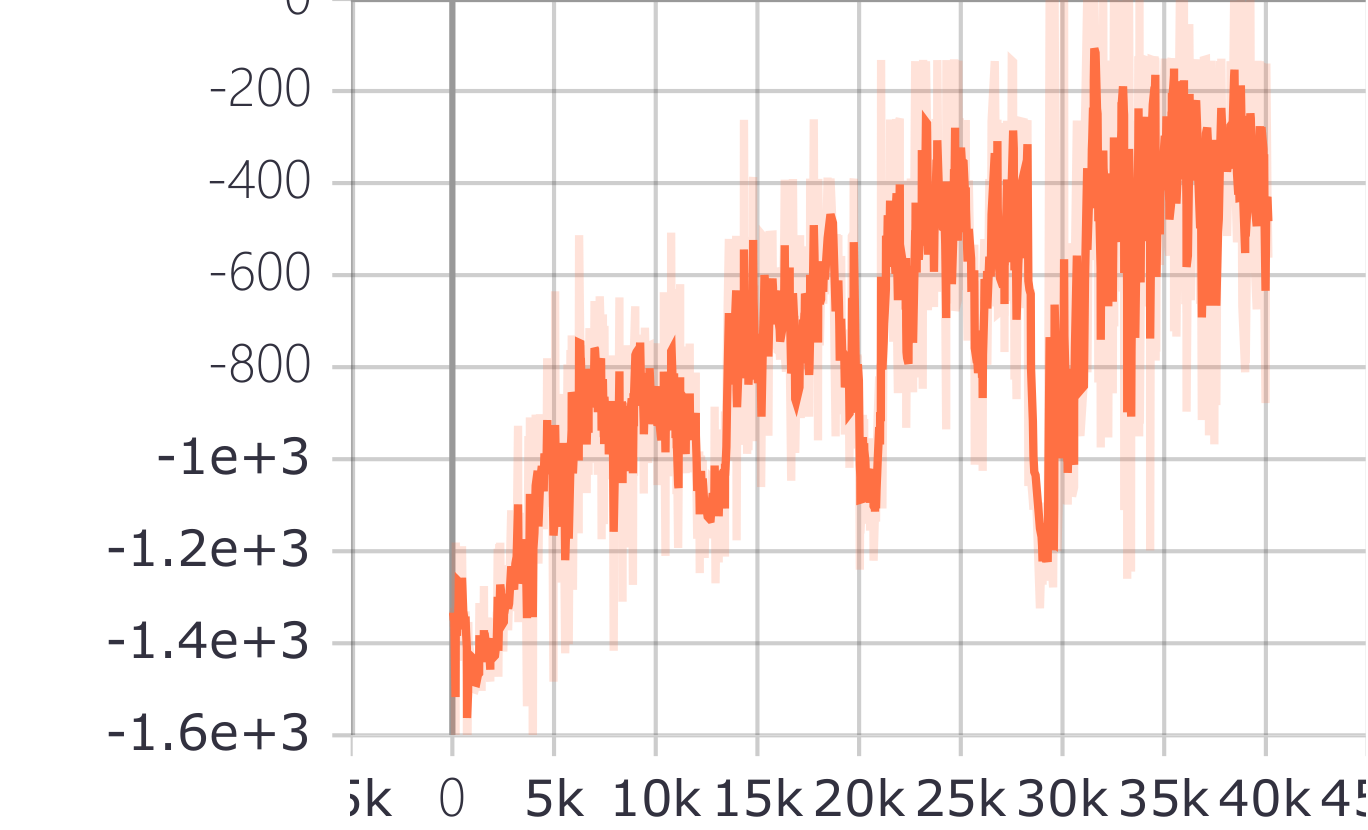

Training results of A2C in CartPole and Pendulum:

`a2c.py`: result of A2C in CartPole-v0.

`a2c_pen.py`: result of A2C in Pendulum-v0. A2C has a hard time converging in Pendulum.

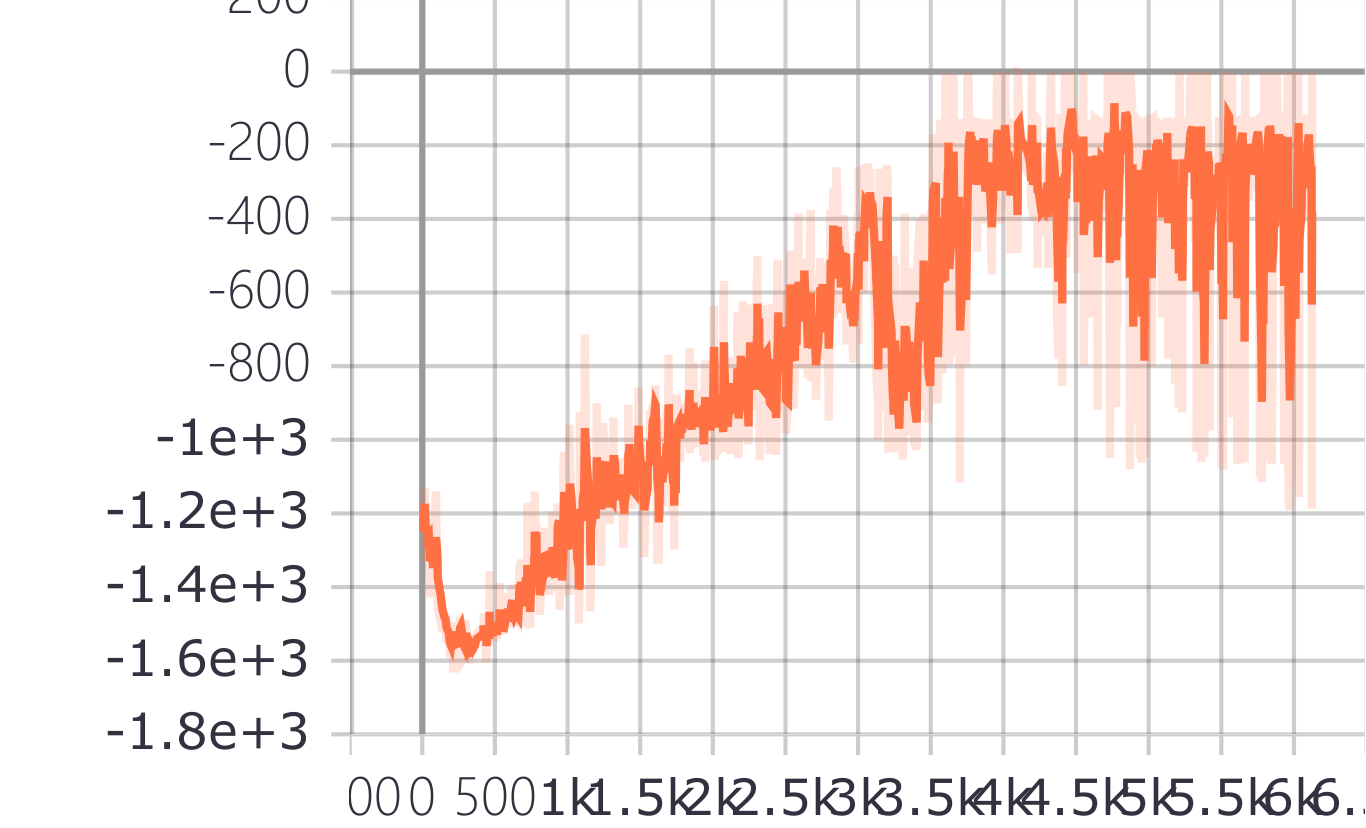
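For reference, a generic A2C update computes bootstrapped discounted returns from a rollout, uses `return - value` as the advantage, and combines a policy-gradient term with a squared-error critic term. The minimal sketch below is not the code in `a2c.py` or `a2c_pen.py`: the function names, the `value_coef` weight and the `dones` convention (True when the episode ended after that step) are assumptions, and it works on plain Python floats so the arithmetic is easy to check. In PyTorch the same expressions are built from tensors, with the advantage detached in the actor term so only the critic term trains the value head.

```python
from typing import List, Sequence


def nstep_returns(rewards: Sequence[float], dones: Sequence[bool],
                  last_value: float, gamma: float = 0.99) -> List[float]:
    """Discounted returns for one rollout, bootstrapped from the critic's
    value estimate of the state that follows the last step."""
    returns: List[float] = []
    running = last_value
    # Walk the rollout backwards; stop bootstrapping across episode ends.
    for r, done in zip(reversed(rewards), reversed(dones)):
        running = r + gamma * running * (0.0 if done else 1.0)
        returns.append(running)
    returns.reverse()
    return returns


def a2c_loss(log_probs: Sequence[float], values: Sequence[float],
             returns: Sequence[float], value_coef: float = 0.5) -> float:
    """Actor loss -log pi(a|s) * A plus a weighted critic MSE, averaged over steps."""
    advantages = [ret - v for ret, v in zip(returns, values)]
    n = len(returns)
    # In PyTorch, `advantages` would be detached here.
    actor = -sum(lp * adv for lp, adv in zip(log_probs, advantages)) / n
    critic = sum(adv ** 2 for adv in advantages) / n
    return actor + value_coef * critic
```

For example, `nstep_returns([1, 1, 1], [False, False, True], last_value=10.0, gamma=0.5)` ignores the bootstrap value because the episode ends at the last step, and yields `[1.75, 1.5, 1.0]`.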

`PPO.py`: result of PPO in Pendulum-v0. It is still hard to converge and I don't know why; any help is welcome.

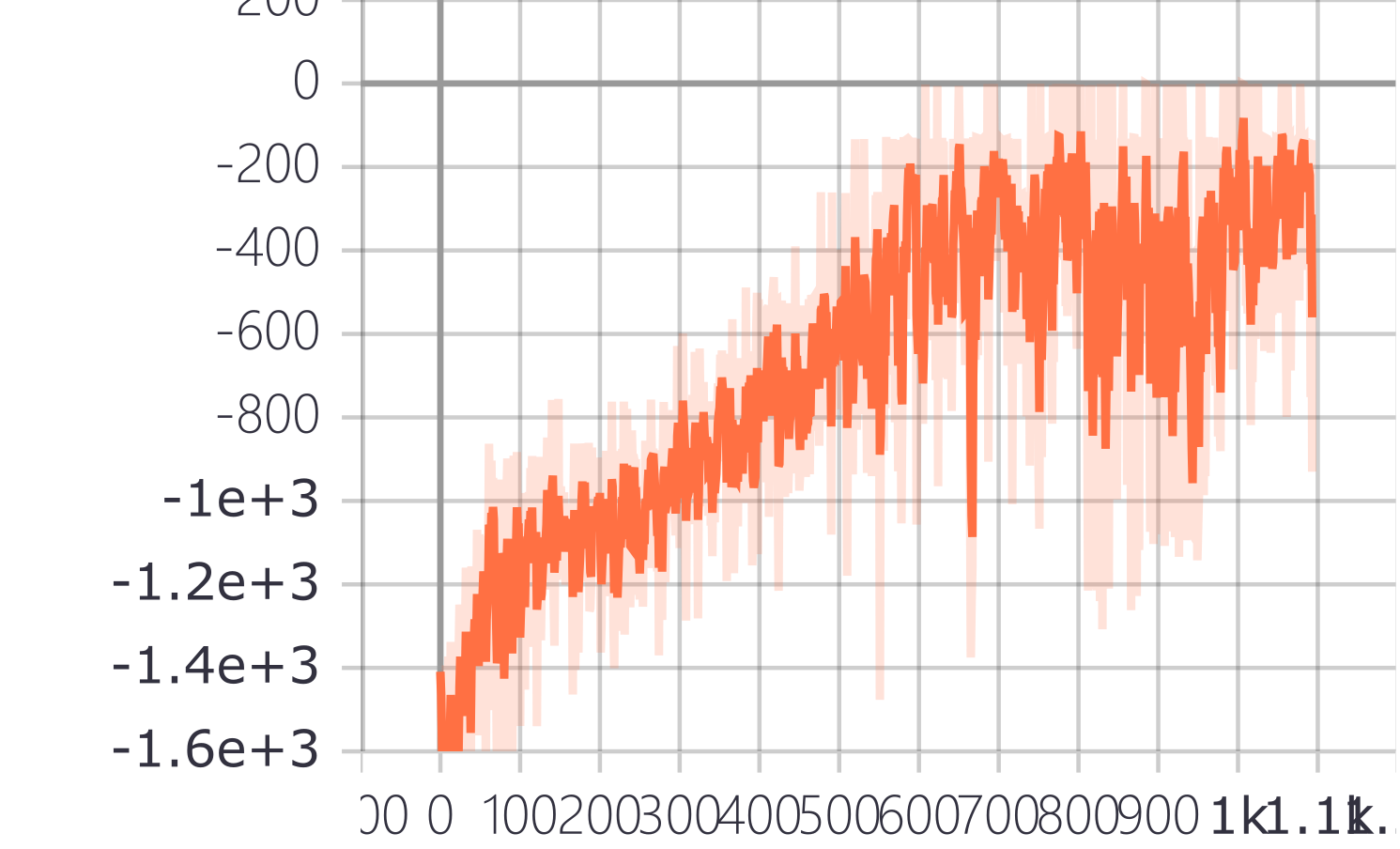
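The clipped surrogate objective that PPO maximizes uses the probability ratio r = exp(log pi_new(a|s) - log pi_old(a|s)) and averages min(r * A, clip(r, 1 - eps, 1 + eps) * A) over the batch. The minimal sketch below computes the corresponding loss (the negated objective) on plain floats; `ppo_clip_loss` and the default `clip_eps=0.2` are illustrative choices, not taken from `PPO.py`.

```python
import math
from typing import Sequence


def ppo_clip_loss(new_log_probs: Sequence[float], old_log_probs: Sequence[float],
                  advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Negated PPO clipped surrogate objective, averaged over the batch."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio between the current policy and the data-collecting policy.
        ratio = math.exp(new_lp - old_lp)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic bound: take the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return -total / len(advantages)
```

In PyTorch the same computation is usually written on tensors as `ratio = torch.exp(new_lp - old_lp)` and `loss = -torch.min(ratio * adv, torch.clamp(ratio, 1 - eps, 1 + eps) * adv).mean()`, so autograd supplies the policy gradient. With a positive advantage the ratio is capped at `1 + eps`; with a negative advantage the unclipped (more pessimistic) term is kept.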

`PPO_advantage.py`: a more efficient update using the generalized advantage estimator (GAE).
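GAE replaces the plain return-minus-value advantage with an exponentially weighted sum of one-step TD errors, delta_t = r_t + gamma * V(s_{t+1}) - V(s_t) and A_t = sum_l (gamma * lam)^l * delta_{t+l}, computed backwards over a rollout. The minimal sketch below assumes the same rollout layout as a typical on-policy buffer (per-step rewards, critic values, episode-end flags, plus the value of the state after the last step); `compute_gae` and the defaults `gamma=0.99`, `lam=0.95` are illustrative and not taken from `PPO_advantage.py`.

```python
from typing import List, Sequence, Tuple


def compute_gae(rewards: Sequence[float], values: Sequence[float],
                dones: Sequence[bool], last_value: float,
                gamma: float = 0.99, lam: float = 0.95) -> Tuple[List[float], List[float]]:
    """Return (advantages, returns) for one rollout using GAE(gamma, lambda)."""
    advantages = [0.0] * len(rewards)
    gae = 0.0
    next_value = last_value
    for t in reversed(range(len(rewards))):
        # mask = 0 cuts both the bootstrap and the GAE trace at episode ends.
        mask = 0.0 if dones[t] else 1.0
        delta = rewards[t] + gamma * next_value * mask - values[t]
        gae = delta + gamma * lam * mask * gae
        advantages[t] = gae
        next_value = values[t]
    # Critic targets: advantage plus the baseline it was measured against.
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns
```

Setting `lam=0` reduces the advantages to one-step TD errors (low variance, more bias), while `lam=1` gives Monte Carlo returns minus the value baseline (unbiased, higher variance); intermediate values trade off between the two.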