a SImple MUlti-GPU LATtice code for QCD calculations

SIMULATeQCD is a multi-GPU Lattice QCD framework that makes it simple and easy for physicists to implement lattice QCD formulas while still providing the best possible performance.

Before continuing, install git-lfs, or use a git client that supports Git LFS natively.

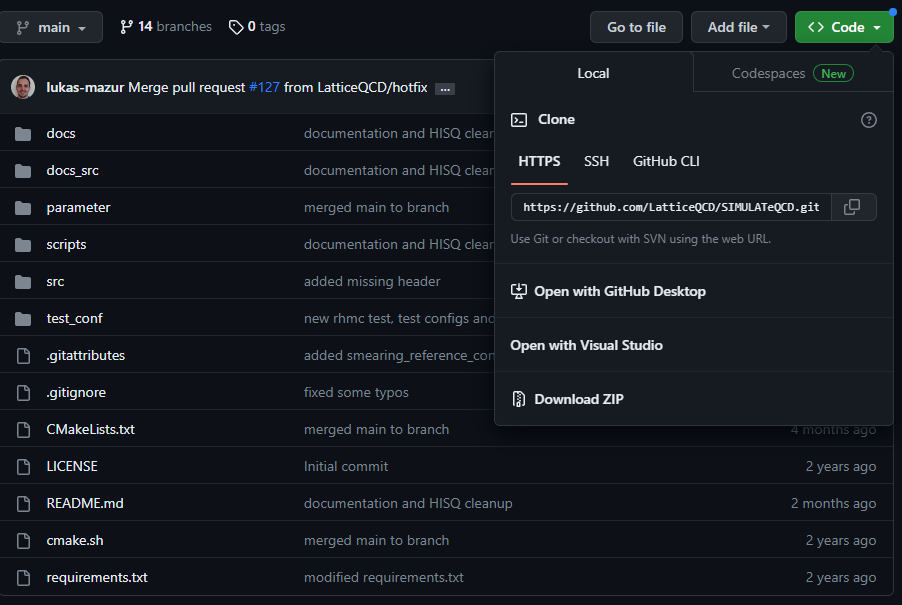

- Go to SIMULATeQCD's GitHub page (https://github.com/LatticeQCD/SIMULATeQCD).

- Click the green Code button and then click Download ZIP.

- Extract the zip to a location of your choosing.

Alternatively, clone the repository with `git clone https://github.com/LatticeQCD/SIMULATeQCD.git`.

Installing podman on RHEL-based (Rocky/CentOS/RHEL) systems

Before continuing, apply any pending updates and install podman with `sudo dnf update -y && sudo dnf install -y podman`, then reboot with `sudo reboot`. The reboot is a simple way to avoid permission and kernel issues, because those settings are re-read at boot.

Installing podman on other *NIX systems

If you have a non-RHEL-based OS, see podman's installation instructions.

Run `podman run hello-world` as your regular user to check your privileges. If this does not run correctly, SIMULATeQCD will not run correctly either.

WARNING: If you are SSH'ing to your server, make sure you log in directly as your regular user and not as root. If you SSH in as root and then `su` to your user, podman will report `ERRO[0000] XDG_RUNTIME_DIR directory "/run/user/0" is not owned by the current user`. This happens because the runtime directory was set up for root rather than for your user.
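The error above means podman found a runtime directory that belongs to a different user. As a rough diagnostic (a hypothetical sketch, not part of SIMULATeQCD or podman), the C++ helpers below check whether `XDG_RUNTIME_DIR` is set and owned by the current user. They assume a POSIX system where, as on systemd-based distributions, the per-user runtime directory is `/run/user/<uid>`; the function names are made up for illustration.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

// Per-user runtime directory on systemd-based systems: /run/user/<uid>.
// For root (uid 0) this is "/run/user/0", the path in the podman error.
std::string expectedRuntimeDir(uid_t uid) {
    return "/run/user/" + std::to_string(uid);
}

// True if `path` exists and is owned by `uid`.
bool ownedBy(const std::string& path, uid_t uid) {
    struct stat st {};
    if (stat(path.c_str(), &st) != 0) return false;
    return st.st_uid == uid;
}

// True if XDG_RUNTIME_DIR is set and owned by the user running this program,
// which is the condition the podman error complains about.
bool runtimeDirOk() {
    const char* dir = std::getenv("XDG_RUNTIME_DIR");
    return dir != nullptr && ownedBy(dir, getuid());
}
```

If `runtimeDirOk()` returns false in your session, logging in again directly as your user (instead of via `su` from root) is the fix described in the warning.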

- Update config.yml with any settings you would like to use for your build.

- You can run `<where_you_downloaded>/simulate_qcd.sh list` to get a list of possible build targets.

- Run

`chmod +x ./simulate_qcd.sh && ./simulate_qcd.sh build`

For a manual build without podman, you will need to install the following before continuing:

- cmake (some versions have the `-pthread` compiler bug; versions that definitely work are 3.14.6 and 3.19.2).

- A C++ compiler with C++17 support.

- MPI (e.g. openmpi-4.0.4).

- CUDA Toolkit version 11+.

- The Python packages in requirements.txt (`pip install -r requirements.txt`), needed to build the documentation.
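If you are unsure whether your toolchain meets these requirements, a small test file can help. The sketch below is hypothetical and not shipped with SIMULATeQCD: it only compiles in C++17 mode (it uses `std::optional`, `std::string_view` and an `if` statement with an initializer), so compiling it with e.g. `g++ -std=c++17` is itself the C++17 check. `cudaVersionOk` compares a version string you pass in, such as "11.4", against the "version 11+" requirement; it does not query the installed CUDA Toolkit.

```cpp
#include <cassert>
#include <optional>
#include <string_view>

// Refuse to compile unless the compiler is in C++17 (or later) mode.
#if __cplusplus < 201703L
#error "A C++17-capable compiler in C++17 mode is required (e.g. -std=c++17)"
#endif

// Major version from a string such as "11.4"; std::nullopt if it is not a number.
std::optional<int> majorVersion(std::string_view version) {
    const std::string_view head = version.substr(0, version.find('.'));  // whole string if no '.'
    if (head.empty()) return std::nullopt;
    int major = 0;
    for (char c : head) {
        if (c < '0' || c > '9') return std::nullopt;
        major = major * 10 + (c - '0');
    }
    return major;
}

// True if a CUDA Toolkit version string satisfies the "version 11+" requirement.
bool cudaVersionOk(std::string_view version) {
    if (const auto major = majorVersion(version); major.has_value()) {  // C++17 if-with-initializer
        return *major >= 11;
    }
    return false;
}
```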

To setup the compilation, create a folder outside of the code directory (e.g. ../build/) and from there call the following example script:

```shell
cmake ../SIMULATeQCD/ \
    -DARCHITECTURE="70" \
    -DUSE_GPU_AWARE_MPI=ON \
    -DUSE_GPU_P2P=ON
```

Here, it is assumed that your source code folder is called SIMULATeQCD. Do NOT compile your code in the source code folder!

You can set the path to CUDA with the cmake parameter `-DCUDA_TOOLKIT_ROOT_DIR:PATH=<path to CUDA>`.

-DARCHITECTURE sets the GPU architecture (i.e. compute capability version without the decimal point). For example "60" for Pascal and "70" for Volta.
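To illustrate the "compute capability without the decimal point" rule, the hypothetical helpers below turn a compute capability into the value for `-DARCHITECTURE`. The major and minor numbers could, for example, come from the CUDA runtime's `cudaGetDeviceProperties` (fields `cudaDeviceProp::major` and `cudaDeviceProp::minor`); that call is left out so the sketch compiles without CUDA.

```cpp
#include <cassert>
#include <string>

// Compute capability major.minor -> ARCHITECTURE value, e.g. 7.0 -> "70".
// Assumes a single-digit minor version.
std::string architectureFlag(int major, int minor) {
    assert(major > 0 && minor >= 0 && minor <= 9);
    return std::to_string(major) + std::to_string(minor);
}

// Same conversion for a string such as "8.6": drop the decimal point.
std::string architectureFlag(const std::string& capability) {
    std::string digits;
    for (char c : capability) {
        if (c != '.') digits += c;
    }
    return digits;
}
```

For a Volta GPU (compute capability 7.0) this gives "70", and for Pascal (6.0) it gives "60", matching the values above.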

Inside the build folder, you can now use make to compile your executables, e.g.

```shell
make NameOfExecutable
```

To speed up compilation, add the option -j to build in parallel. Without a number, -j places no limit on the number of parallel jobs; to cap it, specify the number explicitly, for example -j 4, or -j $(nproc) to use one job per available CPU thread.
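One way to choose an explicit job count is one job per hardware thread. The hypothetical C++ helpers below show that choice with `std::thread::hardware_concurrency()`, which may return 0 when the count cannot be determined (hence the fallback to 1), and assemble the corresponding make command line.

```cpp
#include <cassert>
#include <string>
#include <thread>

// One make job per hardware thread; hardware_concurrency() may return 0
// when the number cannot be determined, in which case fall back to 1.
unsigned defaultMakeJobs() {
    const unsigned n = std::thread::hardware_concurrency();
    return n == 0 ? 1u : n;
}

// Command line such as "make -j 4 rhmc"; jobs must be positive.
std::string makeCommand(const std::string& target, unsigned jobs) {
    assert(jobs > 0);
    return "make -j " + std::to_string(jobs) + " " + target;
}
```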

Popular production-ready executables are:

```shell
# generate HISQ configurations
rhmc               # Example Parameter-file: parameter/applications/rhmc.param

# generate quenched gauge configurations using heat bath (HB) and over-relaxation (OR)
GenerateQuenched   # Example Parameter-file: parameter/applications/GenerateQuenched.param

# apply Wilson/Zeuthen flow and measure various observables
gradientFlow       # Example Parameter-file: parameter/applications/gradientFlow.param

# gauge fixing
gaugeFixing        # Example Parameter-file: parameter/applications/gaugeFixing.param
```

In the documentation you will find more information on how to execute these programs.

Example: plaquette computation (see the full code example).
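For reference, the link path passed to `getLinkPath` in the functor below (forward in mu, forward in nu, backward in mu, backward in nu) is the standard plaquette. Writing $U_\mu(x)$ for the link leaving site $x$ in direction $\mu$, and assuming `tr_d` returns the real part of the trace, each call of the functor computes

$$
U_{\mu\nu}(x) = U_\mu(x)\,U_\nu(x+\hat\mu)\,U_\mu^\dagger(x+\hat\nu)\,U_\nu^\dagger(x),
\qquad
\mathrm{result}(x) = \sum_{\nu=1}^{3}\sum_{\mu=0}^{\nu-1} \mathrm{Re}\,\mathrm{tr}\,U_{\mu\nu}(x).
$$

The double sum covers the six planes with $\mu < \nu$, so on a configuration where every link is the $3\times 3$ identity each site contributes $6 \cdot 3 = 18$.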

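```cpp
// Functor that, for a given lattice site, sums the traces of the six
// plaquettes (planes mu < nu) attached to that site.
template<class floatT, bool onDevice, size_t HaloDepth>
struct CalcPlaq {
  gaugeAccessor<floatT> gaugeAccessor;
  CalcPlaq(Gaugefield<floatT,onDevice,HaloDepth> &gauge) : gaugeAccessor(gauge.getAccessor()){}

  // __device__ __host__: the same functor can run on the GPU or the CPU.
  __device__ __host__ floatT operator()(gSite site) {
    floatT result = 0;
    for (int nu = 1; nu < 4; nu++) {
      for (int mu = 0; mu < nu; mu++) {
        // Link product along +mu, +nu, -mu, -nu starting at `site`, traced with tr_d.
        result += tr_d(gaugeAccessor.template getLinkPath<All, HaloDepth>(site, mu, nu, Back(mu), Back(nu)));
      }
    }
    return result;
  }
};

// (... main ...)

// Evaluate the functor on every bulk site; the template arguments must match
// CalcPlaq's three parameters <floatT, onDevice, HaloDepth>.
latticeContainer.template iterateOverBulk<All, HaloDepth>(CalcPlaq<floatT, onDevice, HaloDepth>(gauge));
```

Please check out the documentation to learn how to use SIMULATeQCD.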
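To see the functor-plus-iteration pattern of `CalcPlaq` without a GPU, here is a self-contained serial sketch. It is not SIMULATeQCD code: `ToyGauge`, `ToyCalcPlaq` and `iterateOverAllSites` are hypothetical names, the links are U(1) phases (complex numbers) instead of SU(3) matrices, and a plain loop over sites stands in for `iterateOverBulk`. The per-site functor mirrors `CalcPlaq`: it walks +mu, +nu, -mu, -nu and takes the real part of the (here 1x1) trace.

```cpp
#include <array>
#include <cassert>
#include <complex>
#include <cstddef>
#include <vector>

using Link = std::complex<double>;

// Hypothetical 4D lattice of extent L^4 with periodic boundaries and U(1) links.
struct ToyGauge {
    int L;
    std::vector<Link> links;  // links[site * 4 + mu]

    // Cold start: every link is the identity, i.e. 1.
    explicit ToyGauge(int extent)
        : L(extent), links(static_cast<std::size_t>(extent) * extent * extent * extent * 4, Link(1.0, 0.0)) {}

    int volume() const { return L * L * L * L; }

    Link& link(int site, int mu) { return links[static_cast<std::size_t>(site) * 4 + mu]; }
    const Link& link(int site, int mu) const { return links[static_cast<std::size_t>(site) * 4 + mu]; }

    // Site one step forward in direction mu (x0 is the fastest index), wrapping around.
    int shift(int site, int mu) const {
        assert(mu >= 0 && mu < 4);
        std::array<int, 4> c{};
        int rest = site;
        for (int d = 0; d < 4; ++d) { c[d] = rest % L; rest /= L; }
        c[mu] = (c[mu] + 1) % L;
        return ((c[3] * L + c[2]) * L + c[1]) * L + c[0];
    }
};

// Per-site functor mirroring CalcPlaq: sum of Re tr U_{mu nu}(x) over the six planes mu < nu.
struct ToyCalcPlaq {
    const ToyGauge& gauge;

    double operator()(int site) const {
        double result = 0.0;
        for (int nu = 1; nu < 4; nu++) {
            for (int mu = 0; mu < nu; mu++) {
                const int xPlusMu = gauge.shift(site, mu);
                const int xPlusNu = gauge.shift(site, nu);
                // Path +mu, +nu, -mu, -nu; going backwards along a link uses its conjugate.
                const Link plaq = gauge.link(site, mu) * gauge.link(xPlusMu, nu) *
                                  std::conj(gauge.link(xPlusNu, mu)) * std::conj(gauge.link(site, nu));
                result += plaq.real();  // the trace of a 1x1 matrix is its single entry
            }
        }
        return result;
    }
};

// Serial stand-in for iterateOverBulk: evaluate the functor on every site.
template <class Functor>
std::vector<double> iterateOverAllSites(int volume, const Functor& f) {
    std::vector<double> out(static_cast<std::size_t>(volume));
    for (int site = 0; site < volume; ++site) out[static_cast<std::size_t>(site)] = f(site);
    return out;
}

// Sum of the per-site results (the reduction step).
double plaquetteSum(const ToyGauge& gauge) {
    double sum = 0.0;
    for (double v : iterateOverAllSites(gauge.volume(), ToyCalcPlaq{gauge})) sum += v;
    return sum;
}
```

On a cold configuration every plaquette equals 1, so `plaquetteSum` returns 6 times the number of sites (96 on a 2^4 lattice); setting one link to -1 flips the sign of the six plaquettes that contain it, giving 84. Keeping the per-site work in a small functor is the same separation `CalcPlaq` uses, where the `__device__ __host__` annotation lets one functor be compiled for both CPU and GPU.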

Open an issue if...

- you have trouble compiling or running the code.

- you have questions on how to implement your own routine.

- you have found a bug.

- you have a feature request.

If none of the above cases apply, you may also send an email to lukas.mazur(at)uni-paderborn(dot)de.

L. Mazur, S. Ali, L. Altenkort, D. Bollweg, D. A. Clarke, H. Dick, J. Goswami, O. Kaczmarek, R. Larsen, M. Neumann, M. Rodekamp, H. Sandmeyer, C. Schmidt, P. Scior, H.-T. Shu, G. Curell

If you are using this code in your research please cite:

- L. Mazur, Topological aspects in lattice QCD, Ph.D. thesis, Bielefeld University (2021), https://doi.org/10.4119/unibi/2956493

- L. Altenkort, D. Bollweg, D. A. Clarke, O. Kaczmarek, L. Mazur, C. Schmidt, P. Scior, H.-T. Shu, HotQCD on Multi-GPU Systems, PoS LATTICE2021, Bielefeld University (2021), https://arxiv.org/abs/2111.10354

- We acknowledge support by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) through the CRC-TR 211 'Strong-interaction matter under extreme conditions' – project number 315477589 – TRR 211.

- This work was partly performed in the framework of the PUNCH4NFDI consortium supported by DFG fund "NFDI 39/1", Germany.

- This work is also supported by the U.S. Department of Energy, Office of Science, through the Scientific Discovery through Advanced Computing (SciDAC) award "Computing the Properties of Matter with Leadership Computing Resources".

- We would also like to acknowledge enlightening technical discussions with the ILDG team, in particular H. Simma.