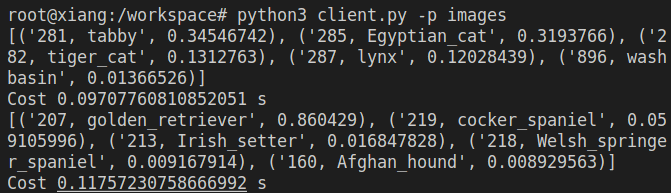

We use a PyTorch ResNet18 model, converted to ONNX, to run inference on images. Clients communicate with the server over WebSocket.
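An ImageNet-trained ResNet18 produces one raw score (logit) per class, 1000 in total. The sketch below shows one common way to turn those scores into readable predictions. It assumes the ONNX model returns unnormalized logits. `softmax` and `top_k` are hypothetical helper names and are not functions from this repository.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: Sequence[float], k: int = 5) -> List[Tuple[int, float]]:
    """Return (class_index, probability) pairs for the k most likely classes."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]


if __name__ == "__main__":
    # Toy 4-class logits; a real ResNet18 output would have 1000 entries.
    print(top_k([0.1, 2.0, -1.0, 0.5], k=2))
```

Subtracting the maximum logit leaves the result unchanged mathematically. It also keeps `math.exp` from overflowing on large scores.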

Requirements:

- nvidia-driver
- WSL
- Docker Desktop
- nginx: WSL and the local Windows host have different IP addresses. nginx is needed as a proxy so that the service running in WSL can be reached through the Windows IP.

Install nvidia-driver, nvidia-docker, and Docker before building and running the Docker container.
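Before building, you may want to confirm that the required command-line tools are on your `PATH`. The sketch below is a minimal pre-flight check. It assumes `nvidia-smi` (installed with the NVIDIA driver) and `docker` are the commands worth checking. `find_tools` is a hypothetical helper name.

```python
import shutil
from typing import Dict, Iterable


def find_tools(tools: Iterable[str]) -> Dict[str, bool]:
    """Map each command name to whether it is found on PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


if __name__ == "__main__":
    # nvidia-smi ships with the NVIDIA driver; docker comes with Docker (Desktop).
    status = find_tools(["nvidia-smi", "docker"])
    for tool, found in status.items():
        print(f"{tool}: {'found' if found else 'MISSING'}")
```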


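Add your user to the `docker` group:

```bash
sudo groupadd docker
sudo usermod -aG docker $USER
# Makes the Docker socket readable and writable by every user on the machine.
sudo chmod 777 /var/run/docker.sock
```

Log out and back in (or run `newgrp docker`) for the group change to take effect.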
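After running the group commands, you can verify that your user is now listed in the `docker` group. The sketch below reads the system group database through Python's standard `grp` and `pwd` modules, which are Unix-only. Because it reads `/etc/group`, it reports the new membership even before you log in again. `user_in_group` is a hypothetical helper name.

```python
import getpass
import grp
import pwd


def user_in_group(group_name: str, username: str) -> bool:
    """True if `username` is a listed member of `group_name` or has it as primary group."""
    try:
        group = grp.getgrnam(group_name)
    except KeyError:
        return False  # the group does not exist
    if username in group.gr_mem:
        return True
    try:
        return pwd.getpwnam(username).pw_gid == group.gr_gid
    except KeyError:
        return False  # the user does not exist


if __name__ == "__main__":
    user = getpass.getuser()
    print(f"{user} in docker group:", user_in_group("docker", user))
```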

Build the image:

```bash
sudo chmod u+x ./docker/*.sh
sudo ./docker/build.sh
```

Run the container:

```bash
sudo chmod u+x ./docker/*.sh
sudo ./docker/run.sh
```

Start the server:

```bash
gunicorn -b 0.0.0.0:5000 --workers 1 --threads 100 webapi:app
```

- To use a different port, change `0.0.0.0:5000` in the command above.
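As an alternative to editing the command line, gunicorn can read its settings from a Python config file. By default it looks for `./gunicorn.conf.py`. The sketch below reproduces the command's settings and reads the port from an environment variable. It is a sketch under assumptions: the `PORT` variable name is hypothetical and not something this project defines.

```python
# gunicorn.conf.py -- gunicorn loads this file from the working directory by default.
import os

# PORT is a hypothetical environment variable chosen for this sketch.
port = int(os.environ.get("PORT", "5000"))

bind = f"0.0.0.0:{port}"  # same as -b 0.0.0.0:5000
workers = 1               # same as --workers 1
threads = 100             # same as --threads 100; more than 1 selects the gthread worker
```

With this file in place, `PORT=8000 gunicorn webapi:app` would listen on port 8000. Options given on the command line still take precedence over values in the config file.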

Run a client:

```bash
python3 client/client_ws_100pics.py -p <folder/files>
python3 client/client_ws_batch_pickle.py -p <folder/files> --batch 8
```

- `-p`: the path of the images to run inference on. You can pass either a folder path or a single image path.
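The `-p` option accepts either a folder or a single file, and the batch client groups images before sending them. The sketch below shows one way a client could implement that behavior. It is an illustration, not the repository's client code. The accepted extensions, the `(file name, raw bytes)` pickle payload, and the helper names `collect_images`, `batches`, and `encode_batch` are all hypothetical.

```python
import pickle
from pathlib import Path
from typing import Iterator, List

# Hypothetical set of file extensions treated as images.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def collect_images(path: str) -> List[Path]:
    """Accept a folder (all images inside it, sorted) or a single file path."""
    p = Path(path)
    if p.is_dir():
        return sorted(f for f in p.iterdir() if f.suffix.lower() in IMAGE_EXTS)
    if p.is_file():
        return [p]
    raise FileNotFoundError(path)


def batches(items: List[Path], size: int) -> Iterator[List[Path]]:
    """Yield consecutive chunks of at most `size` items; the last may be shorter."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def encode_batch(paths: List[Path]) -> bytes:
    """Serialize one batch as a pickled list of (file name, raw bytes) pairs."""
    return pickle.dumps([(p.name, p.read_bytes()) for p in paths])
```

With websocket-client, each encoded batch could then be sent as a binary frame with `ws.send_binary(...)`. Unpickling data from untrusted clients can execute arbitrary code, so a pickle-based protocol is only appropriate between trusted endpoints.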

- If an error occurs in the WebSocket `create_connection` function, reinstall the WebSocket packages:

```bash
pip uninstall websocket
pip uninstall websocket-client
pip install websocket
pip install websocket-client
```
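The `websocket-client` package provides a module named `websocket` that exposes `create_connection`. A different package installed under the same module name can presumably shadow it, which would explain the error. The sketch below is a small diagnostic you could run before and after reinstalling. `module_has` is a hypothetical helper name.

```python
import importlib


def module_has(module_name: str, attr: str) -> bool:
    """True if `module_name` can be imported and exposes `attr`."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return False
    return hasattr(module, attr)


if __name__ == "__main__":
    if module_has("websocket", "create_connection"):
        print("websocket.create_connection is available")
    else:
        print("create_connection not found: reinstall the WebSocket packages")
```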

- flask-sock