Great Expectations is an open source tool that data teams use to validate, profile, and document data to ensure the integrity of their pipelines.

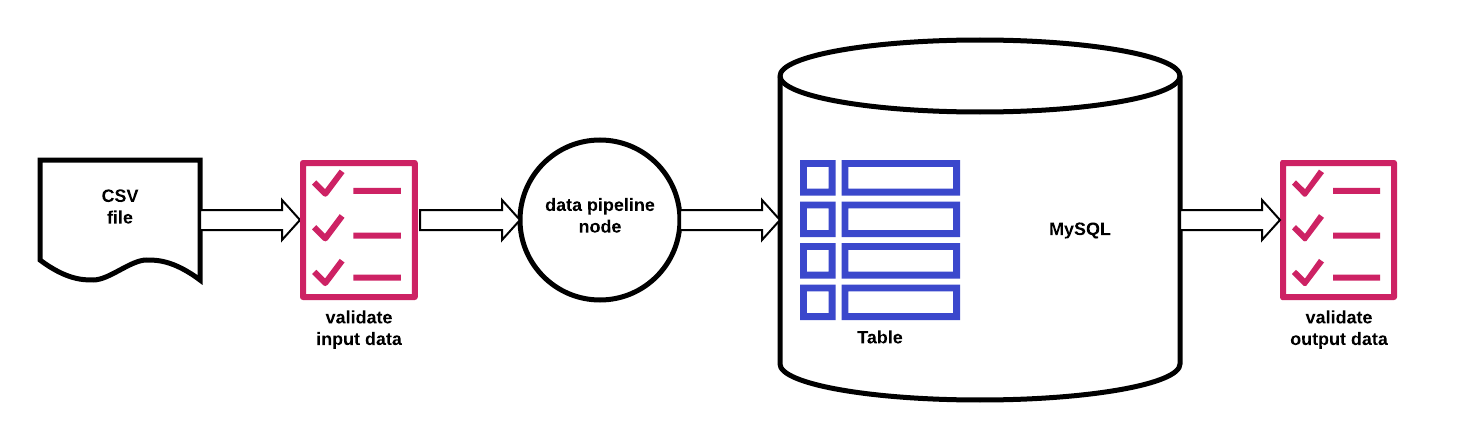

This repo demonstrates a common pattern of deploying Great Expectations in a data pipeline.

The pipeline loads an NPI CSV file into a table in a MySQL database.

The NPI (National Provider Identifier) dataset contains publicly available information about all healthcare providers in the US. The dataset is maintained by the Centers for Medicare & Medicaid Services, which publishes periodic updates as CSV files.

In a real deployment, this pipeline would be one node in a larger pipeline that transforms the loaded data into some data product. The example demonstrates how you can use Great Expectations to protect such a node against unexpected input data and to test the node's output before downstream nodes use it.
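The idea of guarding a node on both sides can be sketched in plain Python. This is a minimal illustration only, not the Great Expectations API: `guarded_node`, `run_checks`, the two check functions, and the sample rows are all hypothetical names introduced here.

```python
from typing import Callable, Iterable, List

Row = dict
Check = Callable[[List[Row]], bool]


class ValidationError(Exception):
    """Raised when a batch of rows fails one of its checks."""


def run_checks(rows: List[Row], checks: Iterable[Check], stage: str) -> None:
    # Stop the node at the first failing check and name the stage that failed.
    for check in checks:
        if not check(rows):
            raise ValidationError(f"{stage} validation failed: {check.__name__}")


def guarded_node(rows, transform, input_checks, output_checks):
    run_checks(rows, input_checks, "input")      # protect the node from bad input
    result = transform(rows)                     # the node's real work (a stand-in for the load)
    run_checks(result, output_checks, "output")  # test the output before downstream use
    return result


# Hypothetical checks and data, for illustration only.
def has_npi_column(rows):
    return all("NPI" in row for row in rows)


def npi_is_not_empty(rows):
    return all(row["NPI"] for row in rows)


good = [{"NPI": "1234567890"}, {"NPI": "1098765432"}]
bad = [{"provider": "missing id"}]

loaded = guarded_node(good, lambda rows: list(rows), [has_npi_column], [npi_is_not_empty])

try:
    guarded_node(bad, lambda rows: list(rows), [has_npi_column], [npi_is_not_empty])
    bad_outcome = "passed"
except ValidationError as exc:
    bad_outcome = str(exc)
```

In this sketch the bad batch is rejected at the input stage, so the transform never runs on it.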

Video: TBD

This example shows how to use Great Expectations' Validation Operators to:

- validate data (both a CSV file and a database table)

- save the results of validation and render them into HTML

- send a Slack notification when validation happens
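In this repo the notification is handled by a Validation Operator, driven by configuration. To show roughly what such a notification amounts to, here is a minimal sketch that builds a Slack incoming-webhook request with the standard library. It is not Great Expectations code; `build_slack_request`, the message wording, and the placeholder URL are assumptions made for illustration, and the request is built but not sent.

```python
import json
import urllib.request


def build_slack_request(webhook_url: str, suite_name: str, success: bool) -> urllib.request.Request:
    # Slack incoming webhooks accept a JSON body with a "text" field.
    status = "succeeded" if success else "FAILED"
    payload = {"text": f"Validation of `{suite_name}` {status}."}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL: a real webhook URL comes from your Slack workspace.
request = build_slack_request(
    "https://hooks.slack.com/services/PLACEHOLDER", "npi_import_raw", success=False
)
message = json.loads(request.data)["text"]

# To actually send the notification:
# urllib.request.urlopen(request)
```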

The example does not walk you through the process of bringing Great Expectations into an existing pipeline project or the process of creating expectations.

These topics are covered in tutorials and videos here: https://docs.greatexpectations.io/en/latest/getting_started.html

To run the example you need:

- A Python environment (Great Expectations supports both Python 2 and 3, but this example was tested only on Python 3)

- A MySQL server

To set up and run the example:

- Create a fresh Python virtual environment (recommended)

- Install Great Expectations in this environment

- Clone this repo and `cd` into it

- Create the file `great_expectations/uncommitted/config_variables.yml` with the following content:

      mysql_db:
        url: mysql://user:password@host/database  # TODO: replace with real credentials
      validation_notification_slack_webhook:  # TODO: Slack webhook URL for notifications

- Create the table in your MySQL database:

      python aux_scripts/create_table_from_npi_file.py npi_import_raw

- Build the local Data Docs website:

      great_expectations build-docs

  After each run, open `great_expectations/uncommitted/data_docs/local_site/index.html` in your web browser.

- Run the pipeline on a good file:

      ./pipeline/data_pipeline.sh npi_data/npi_files/npidata_pfile_20050523-20190908_0.csv npi_import_raw

  Validation will be successful.

- Run the pipeline on a good file again:

      ./pipeline/data_pipeline.sh npi_data/npi_files/npidata_pfile_20050523-20190908_0.csv npi_import_raw

  Validation will fail because we did not truncate the table, so the row count in the table is greater than the row count in the file.

- Run the pipeline on a "bad file":

      ./pipeline/data_pipeline.sh new_data/npidata_pfile_20050523-20191001_1.csv npi_import_raw

  The first validation step (validating the file) will fail.
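The reason the second run on a good file fails can be reproduced in a few lines. The sketch below is an illustration under stated assumptions, not the repo's pipeline: it uses an in-memory SQLite database in place of MySQL, a hypothetical two-row CSV, and hypothetical helpers `load_file` and `row_counts_match` that compare the file's row count with the table's.

```python
import csv
import io
import sqlite3

# Hypothetical two-row file standing in for an NPI CSV.
CSV_TEXT = "NPI,provider_name\n1234567890,Alice\n1098765432,Bob\n"


def load_file(conn, csv_text, truncate=False):
    """Append the file's rows to the table and return the file's row count."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if truncate:
        conn.execute("DELETE FROM npi_import_raw")
    conn.executemany(
        "INSERT INTO npi_import_raw (npi, provider_name) VALUES (?, ?)",
        [(r["NPI"], r["provider_name"]) for r in rows],
    )
    return len(rows)


def row_counts_match(conn, file_row_count):
    """Output check: the table must hold exactly as many rows as the file."""
    (table_rows,) = conn.execute("SELECT COUNT(*) FROM npi_import_raw").fetchone()
    return table_rows == file_row_count


conn = sqlite3.connect(":memory:")  # SQLite swapped in for MySQL in this sketch
conn.execute("CREATE TABLE npi_import_raw (npi TEXT, provider_name TEXT)")

first_run = row_counts_match(conn, load_file(conn, CSV_TEXT))    # 2 rows in file, 2 in table
second_run = row_counts_match(conn, load_file(conn, CSV_TEXT))   # 2 rows in file, 4 in table
after_truncate = row_counts_match(conn, load_file(conn, CSV_TEXT, truncate=True))
```

Here the first load passes the check, the second fails because rows were appended to a table that was not emptied, and truncating before loading makes the check pass again.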