DriftLens is an unsupervised drift detection framework for deep learning classifiers on unstructured data.

This repo contains the code for the DriftLens tool, a web application that allows users to run controlled drift experiments on pre-uploaded use cases or run drift detection on user-provided data.

The demo was presented in the following demo paper:

DriftLens: A Concept Drift Detection Tool (Greco et al., 2024, Proceedings of the 27th International Conference on Extending Database Technology EDBT)

The preliminary version of the DriftLens methodology was first proposed in the paper:

Drift Lens: Real-time unsupervised Concept Drift detection by evaluating per-label embedding distributions (Greco et al., 2021, International Conference on Data Mining Workshops (ICDMW))

If you want to learn more or use the DriftLens methodology, please visit the following repository

You can read more about the DriftLens methodology in this paper.

DriftLens is an unsupervised drift detection technique based on distribution distances within the embedding representations generated by deep learning models when working with unstructured data.

The technique is unsupervised and does not require the true labels in the new data stream. Due to its low complexity, it can perform drift detection in real time.

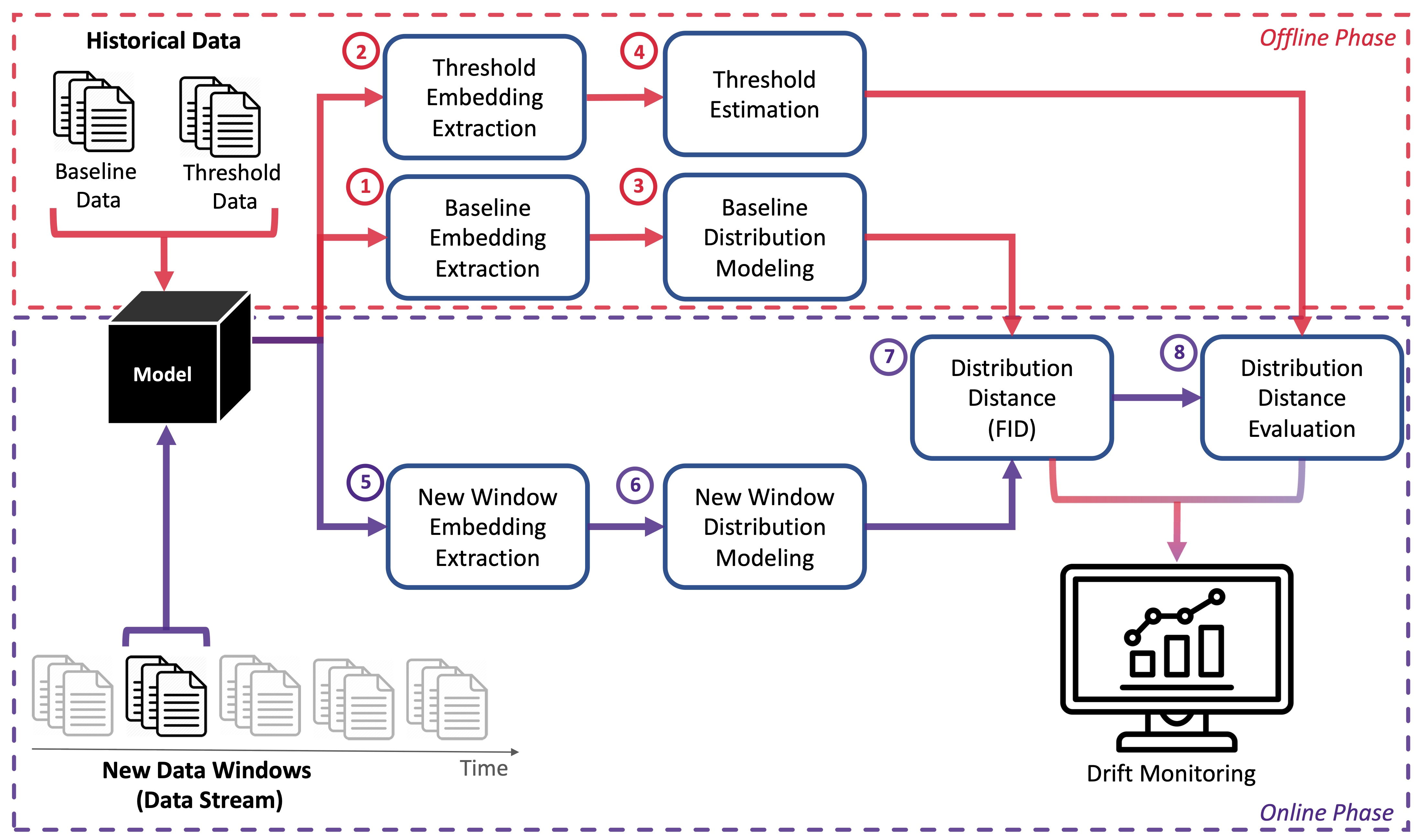

The methodology includes an offline and an online phase, as summarized in the following figure. The data modeling and the distribution distance computation are performed in the same way in both phases.

A given batch of data is modeled by estimating the multivariate normal distribution of the embedding vectors. Specifically, the distribution is represented by the embeddings’ mean vector and covariance matrix.

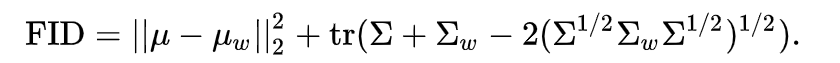

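The Fréchet Inception Distance (FID) score is used to compute the distance between two multivariate normal distributions.

The FID between two multivariate normal distributions with mean vectors $\mu_1, \mu_2$ and covariance matrices $\Sigma_1, \Sigma_2$ is computed as:

$$
FID = \lVert \mu_1 - \mu_2 \rVert_2^2 + \mathrm{Tr}\left(\Sigma_1 + \Sigma_2 - 2\,(\Sigma_1 \Sigma_2)^{1/2}\right)
$$

It is a real value in the range [0, ∞). The higher the score, the greater the distance and the more likely the drift.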
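As a rough illustration, the distance between two Gaussians can be sketched with NumPy as below. This is not the tool's own implementation: the function name `frechet_distance` and the synthetic data are assumptions made for the example. The sketch uses the standard closed form (squared distance between the means plus a trace term over the covariances) and computes the trace of the matrix square root from the eigenvalues of the covariance product.

```python
import numpy as np


def frechet_distance(mu_1, sigma_1, mu_2, sigma_2):
    """Frechet distance between N(mu_1, sigma_1) and N(mu_2, sigma_2).

    Hypothetical helper written for this example.
    """
    diff = mu_1 - mu_2
    # Tr((S1 S2)^(1/2)) equals the sum of the square roots of the eigenvalues
    # of S1 @ S2, which are real and non-negative for covariance matrices.
    eigvals = np.linalg.eigvals(sigma_1 @ sigma_2)
    # Drop tiny imaginary parts and negative values caused by round-off.
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma_1) + np.trace(sigma_2) - 2.0 * trace_sqrt)


# Synthetic "embeddings": a reference batch and a batch with shifted mean.
rng = np.random.default_rng(0)
baseline = rng.normal(0.0, 1.0, size=(2000, 8))
shifted = rng.normal(0.5, 1.0, size=(2000, 8))

mu_b, sigma_b = baseline.mean(axis=0), np.cov(baseline, rowvar=False)
mu_s, sigma_s = shifted.mean(axis=0), np.cov(shifted, rowvar=False)

same = frechet_distance(mu_b, sigma_b, mu_b, sigma_b)      # close to zero
drifted = frechet_distance(mu_b, sigma_b, mu_s, sigma_s)   # clearly larger
print(same, drifted)
```

Comparing a distribution with itself gives a value near zero, while the shifted batch gives a clearly larger score, which is the behavior the drift monitor relies on.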

In the offline phase, DriftLens estimates the distributions of a historical dataset, called baseline, which represents the concepts that the model has learned during training. It then estimates thresholds that discriminate between normal and abnormal (i.e., possible drift) distribution distances using a threshold dataset. Notice that the baseline and threshold datasets can be the same.

To this end, the baseline ① and the threshold ② data are first fed into the model to extract the embedding vectors and the predicted labels.

Then, the baseline dataset is used to model the distributions of the baseline ③ (i.e., computing the baseline embeddings’ mean vector and covariance matrix). Specifically, the baseline dataset is used to model the per-batch and per-label multivariate normal distributions.

- The per-batch distribution models the entire set of embedding vectors, independently of the class label. The mean and covariance are computed on the entire set of embeddings.

- For the per-label distributions, n normal distributions are estimated, where n is the number of labels the model was trained on. Each one is modeled by grouping the embeddings by predicted label and computing the mean and covariance of each label separately.
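The per-batch and per-label modeling described above can be sketched as follows. The function names `fit_gaussian` and `fit_per_batch_and_per_label` and the synthetic data are assumptions for illustration, not part of the DriftLens code base.

```python
import numpy as np


def fit_gaussian(E):
    """Return the mean vector and covariance matrix of the rows of E."""
    return E.mean(axis=0), np.cov(E, rowvar=False)


def fit_per_batch_and_per_label(E, y_pred, label_ids):
    """Model the whole batch and each predicted label as a Gaussian."""
    per_batch = fit_gaussian(E)
    per_label = {}
    for label in label_ids:
        E_label = E[y_pred == label]
        # A covariance estimate needs at least two samples.
        if len(E_label) >= 2:
            per_label[label] = fit_gaussian(E_label)
    return per_batch, per_label


# Synthetic embeddings (600 samples, 4 dimensions) with 3 predicted labels.
rng = np.random.default_rng(0)
E = rng.normal(size=(600, 4))
y_pred = np.arange(600) % 3

per_batch, per_label = fit_per_batch_and_per_label(E, y_pred, label_ids=[0, 1, 2])
print(per_batch[0].shape, per_batch[1].shape, sorted(per_label))
```

Labels with fewer than two samples are skipped here because no covariance can be estimated for them; how the tool handles that case is not stated in this document.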

To estimate the thresholds ④, a large number of windows are sampled from the threshold dataset. The distances are then calculated between the baseline and each window, and sorted in descending order. The maximum value represents the maximum distance of a set of samples considered without drift. This value is set as the threshold.
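A minimal sketch of this threshold estimation is shown below. It assumes that each window is drawn at random without replacement from the threshold dataset, which the text above does not specify. The names `estimate_threshold` and `mean_shift` are hypothetical, and `mean_shift` is only a simple stand-in for the distribution distance so that the example stays short.

```python
import numpy as np


def estimate_threshold(baseline_E, threshold_E, distance, window_size, n_windows, seed=0):
    """Sample windows from the threshold data and return the largest distance."""
    rng = np.random.default_rng(seed)
    distances = []
    for _ in range(n_windows):
        # Assumption: each window is a random sample without replacement.
        idx = rng.choice(len(threshold_E), size=window_size, replace=False)
        distances.append(distance(baseline_E, threshold_E[idx]))
    distances.sort(reverse=True)  # descending order, as described above
    return distances[0], distances


def mean_shift(a, b):
    """Stand-in distance: squared Euclidean distance between the mean vectors."""
    diff = a.mean(axis=0) - b.mean(axis=0)
    return float(diff @ diff)


rng = np.random.default_rng(1)
baseline_E = rng.normal(size=(1000, 4))
threshold_E = rng.normal(size=(1000, 4))

threshold, distances = estimate_threshold(
    baseline_E, threshold_E, mean_shift, window_size=200, n_windows=50
)
print(threshold)
```

The same procedure can be repeated on the samples of each predicted label to obtain per-label thresholds.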

In the online phase, DriftLens analyzes the new data stream in fixed-size windows. For each window, the process is similar to the offline phase.

- The embedding vectors and the predicted labels are produced by the model ⑤.

- The per-batch and per-label distributions are modeled by computing the mean and covariance of the embeddings over the entire window and, separately, over the samples predicted for each label ⑥.

- The per-batch and per-label distribution distances between the embeddings of the current window and the baseline are computed ⑦.

- Drift is predicted if the distribution distances exceed the threshold values for the current window ⑧.
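The four online steps can be sketched for a single window as follows. This is an illustrative sketch, not the tool's code: the helper names, the dictionary layout keyed by `"batch"` or a label id, and the threshold value of 0.5 are all assumptions (in practice the thresholds come from the offline phase). The per-batch and per-label flags are reported separately, since how they are combined into one decision is not stated here.

```python
import numpy as np


def fit_gaussian(E):
    """Mean vector and covariance matrix of the rows of E."""
    return E.mean(axis=0), np.cov(E, rowvar=False)


def frechet_distance(g_1, g_2):
    """Frechet distance between two Gaussians given as (mean, covariance)."""
    (mu_1, sigma_1), (mu_2, sigma_2) = g_1, g_2
    diff = mu_1 - mu_2
    eigvals = np.linalg.eigvals(sigma_1 @ sigma_2)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma_1) + np.trace(sigma_2) - 2.0 * trace_sqrt)


def check_window(E_w, y_w, baseline, thresholds):
    """Compute per-batch and per-label distances and compare them to thresholds."""
    distances = {"batch": frechet_distance(baseline["batch"], fit_gaussian(E_w))}
    for label in baseline:
        if label == "batch":
            continue
        E_label = E_w[y_w == label]
        if len(E_label) >= 2:  # two samples are needed for a covariance estimate
            distances[label] = frechet_distance(baseline[label], fit_gaussian(E_label))
    flags = {key: dist > thresholds[key] for key, dist in distances.items()}
    return distances, flags


rng = np.random.default_rng(0)


def sample(n, shift):
    """Synthetic embeddings and predicted labels standing in for model outputs."""
    return rng.normal(shift, 1.0, size=(n, 4)), np.arange(n) % 2


base_E, base_y = sample(2000, 0.0)
baseline = {"batch": fit_gaussian(base_E)}
for label in (0, 1):
    baseline[label] = fit_gaussian(base_E[base_y == label])
thresholds = {"batch": 0.5, 0: 0.5, 1: 0.5}  # placeholder values

_, clean_flags = check_window(*sample(500, 0.0), baseline, thresholds)
_, drift_flags = check_window(*sample(500, 1.0), baseline, thresholds)
print(clean_flags, drift_flags)
```

Calling `check_window` on each consecutive fixed-size window of the stream yields the series of distances that a drift monitor can plot over time.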

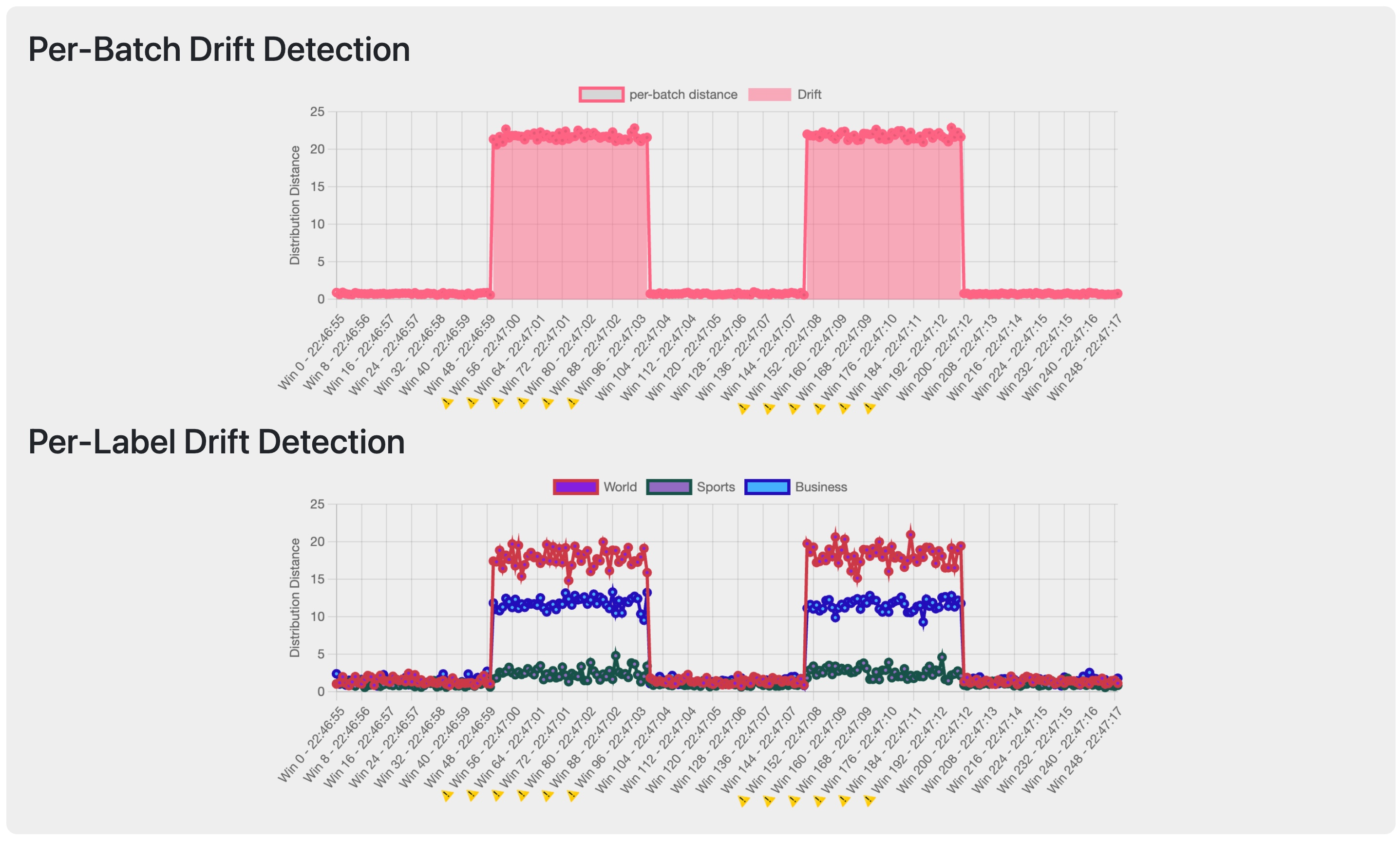

The process is repeated for each window. A drift monitor plots the per-batch and per-label distribution distances separately over time.

The tool is a web application implemented in Flask based on the DriftLens methodology.

Within the tool, you can perform two types of experiments:

- Run controlled drift experiments on pre-uploaded use cases (Page 1).

- Run drift detection on your own data (Page 2).

Both pages run the online phase of DriftLens and open the drift detection monitor (Page 3), which shows if, where, and how drift occurs in the controlled or user-provided data stream.

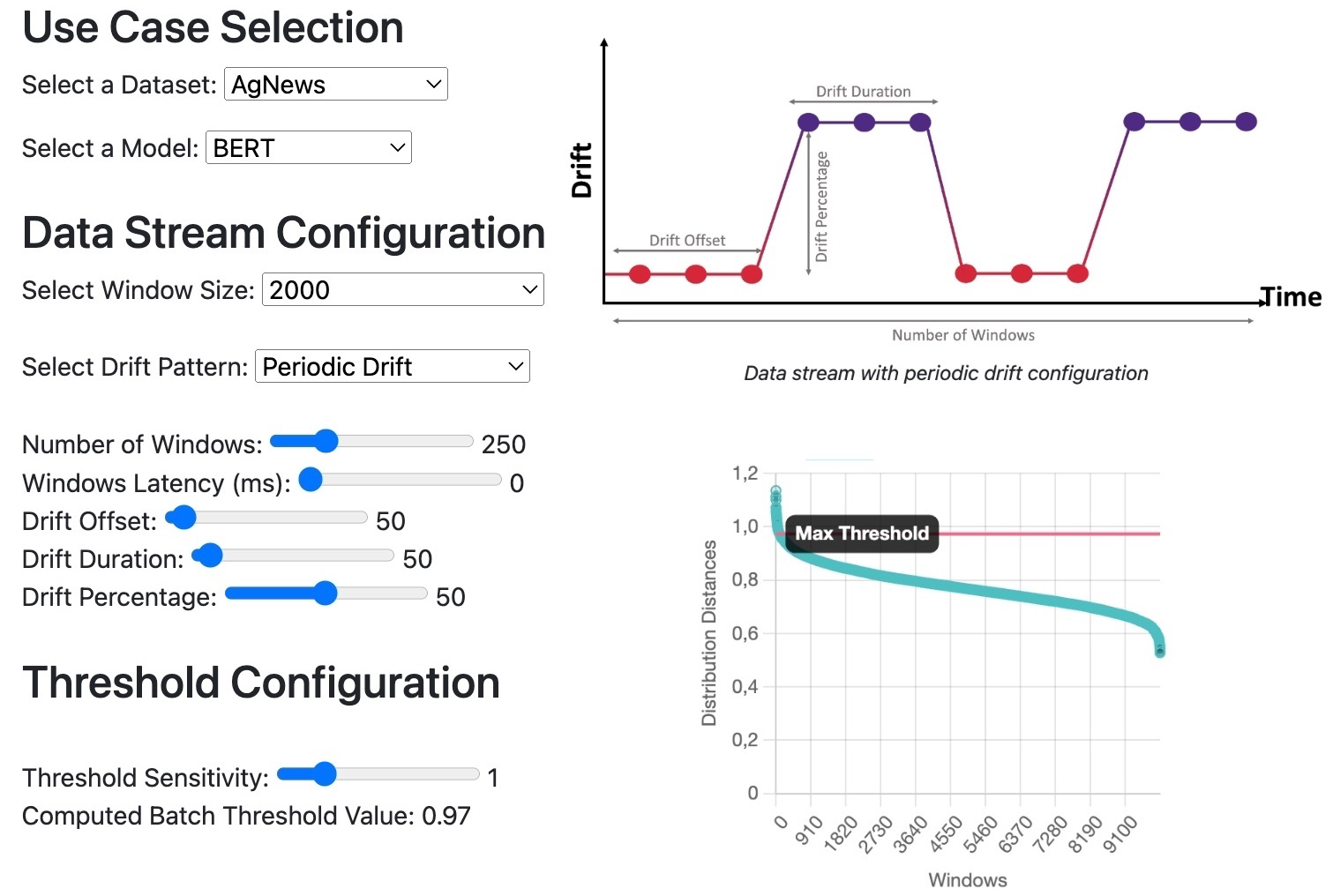

The first page allows you to configure a controlled drift experiment using DriftLens on a set of pre-uploaded use cases.

| Use Case | Dataset | Domain | Model | F1 | Description |
|---|---|---|---|---|---|
| 1.1 | Ag News | Text | BERT | 0.98 | Task: Topic Classification. Training Labels: World, Business, and Sport. Drift: Simulated with one new class label: Science/Tech |
| 1.2 | Ag News | Text | DistilBERT | 0.97 | Same as 1.1 |
| 1.3 | Ag News | Text | RoBERTa | 0.98 | Same as 1.1 |
| 2.1 | 20 Newsgroup | Text | BERT | 0.88 | Task: Topic Classification. Training Labels: Technology, Sale-Ads, Politics, Religion, Science. Drift: Simulated with one new class label: Recreation |
| 2.2 | 20 Newsgroup | Text | DistilBERT | 0.87 | Same as 2.1 |
| 2.3 | 20 Newsgroup | Text | RoBERTa | 0.88 | Same as 2.1 |
| 3.1 | Intel-Image | Computer Vision | VGG16 | 0.89 | Task: Image Classification. Training Labels: Forest, Glacier, Mountain, Building, Street. Drift: Simulated with one new class label: Sea |
| 3.2 | Intel-Image | Computer Vision | VisionTransformer | 0.90 | Same as 3.1 |
| 4.1 | STL | Computer Vision | VGG16 | 0.82 | Task: Image Classification. Training Labels: Airplane, Bird, Car, Cat, Deer, Dog, Horse, Monkey, Ship. Drift: Simulated with one new class label: Truck |
| 4.2 | STL | Computer Vision | VisionTransformer | 0.96 | Same as 4.1 |

For each use case, you can generate a data stream by setting two parameters: the number of windows and the window size. The generated data stream contains the chosen number of windows, each composed of as many samples as the window size.

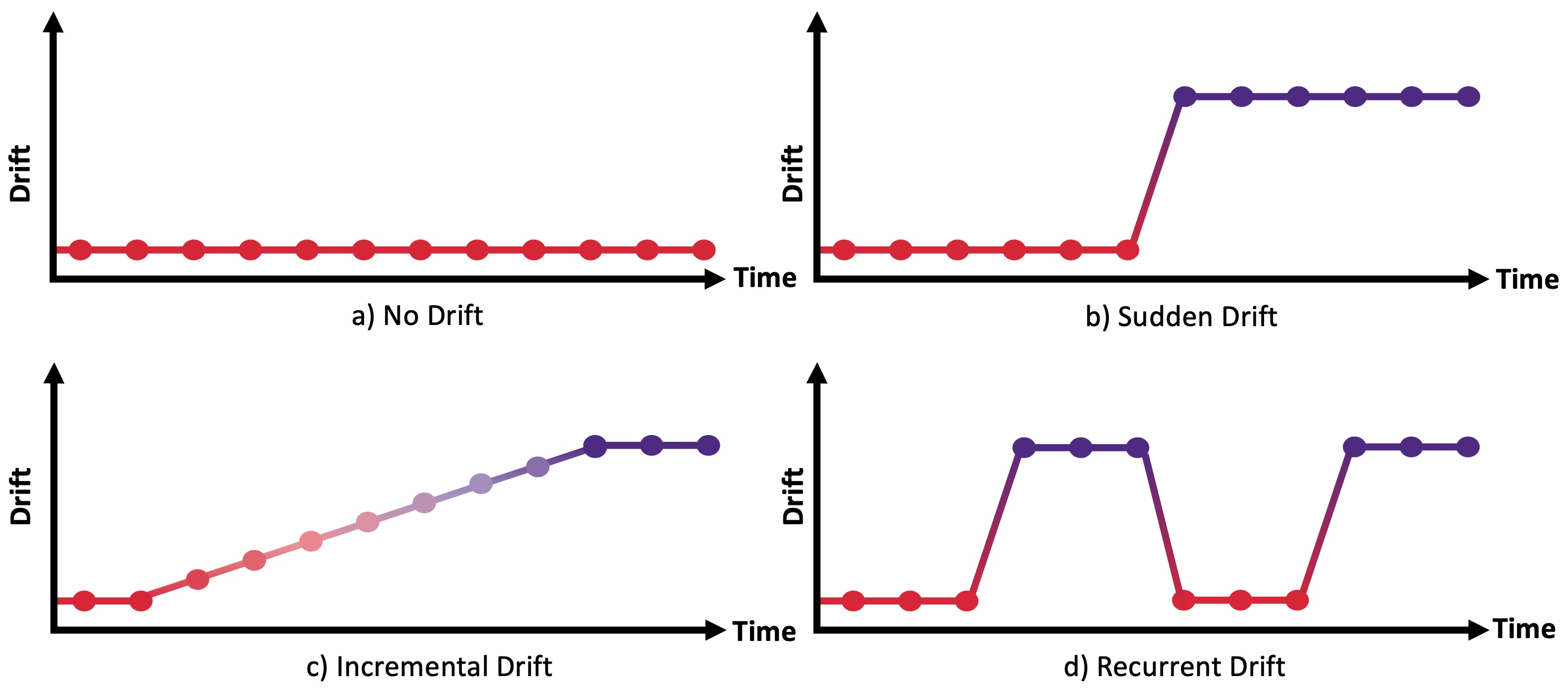

In the generation of the data stream, you can simulate four types of drift scenarios:

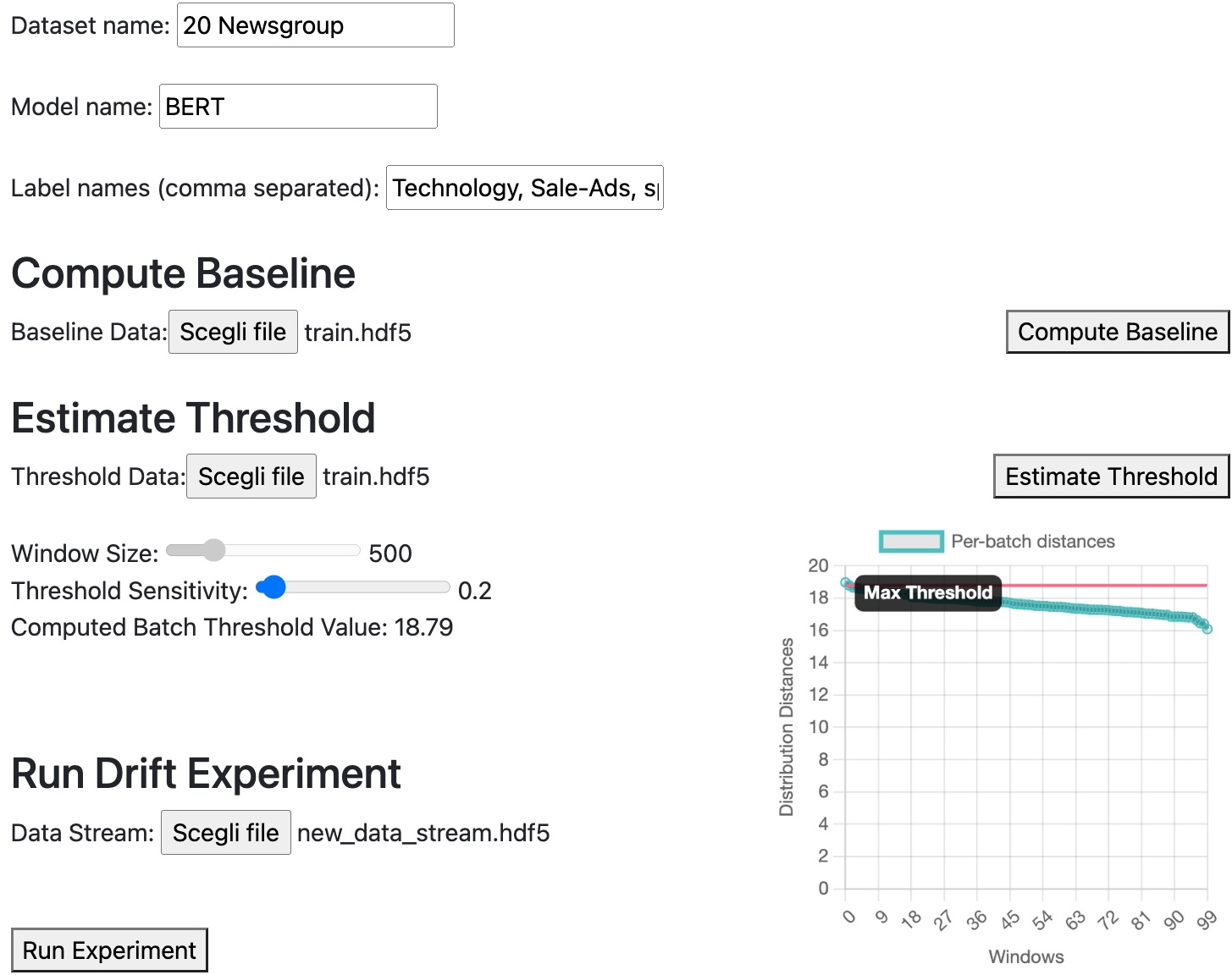

The second page allows you to experiment with drift detection on your data and models.

To this end, you should provide the embeddings and predicted labels for the baseline and threshold datasets (e.g., training and test set) to execute the offline phase of DriftLens. Finally, you should provide an ordered data stream on which to perform the drift detection.

You can read the correct format for your data here.

The tool processes each data window in real time using the DriftLens methodology. The drift monitor page shows two charts: the distribution distances for the entire window (per-batch) and the distances for each label separately (per-label). The charts are updated dynamically as DriftLens processes each window, until the end of the data stream.

You can use the drift detection monitor to understand:

- when drift occurs by looking at the windows in which drift was predicted;

- how drift occurs in terms of severity of the drift and patterns;

- where drift occurs by analyzing the labels most affected by drift.

To use the DriftLens tool locally, create and activate a new environment:

    conda create -n driftlens-demo-env python=3.8 anaconda
    conda activate driftlens-demo-env

Install the required packages:

    pip install -r requirements.txt

Download the use case data:

    ./download_data.sh

You can also download the zip file manually and replace the 'use_cases' folder in the 'static' folder with the one in the zip file. The zip file can be downloaded from here:

Start the app:

    python driftlens_app.py

The DriftLens app will run on localhost: http://127.0.0.1:5000

If you want to perform a drift detection on your own data, you need to provide the following files:

- baseline_embedding: the embedding of the baseline dataset (e.g., training set)

- threshold_embedding: the embedding of the threshold dataset (e.g., test set or training set)

- data_stream: the data stream to be used for drift detection

Each file should be in the HDF5 format and contain the following datasets (keys):

- "E": the embedding of the samples in your dataset, as a numpy array of shape (n_samples, embedding_dimension).

- "Y_predicted": the predicted label ids of the samples in your dataset, as a list of n_samples elements.
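As a hedged example, such a file could be written with `h5py` as sketched below. The keys `"E"` and `"Y_predicted"` come from the list above, but the helper name `save_embeddings`, the file name, the dtypes, and the choice to store both as top-level datasets are assumptions. Check the tool's data format description before relying on them.

```python
import os
import tempfile

import h5py
import numpy as np


def save_embeddings(path, E, Y_predicted):
    """Write embeddings and predicted label ids to an HDF5 file.

    Hypothetical helper: the dtypes and flat layout are assumptions.
    """
    E = np.asarray(E, dtype=np.float32)
    Y_predicted = np.asarray(Y_predicted, dtype=np.int64)
    if E.ndim != 2 or Y_predicted.shape != (E.shape[0],):
        raise ValueError("E must be (n_samples, dim) and Y_predicted must have n_samples entries")
    with h5py.File(path, "w") as f:
        f.create_dataset("E", data=E)
        f.create_dataset("Y_predicted", data=Y_predicted)


# Synthetic data standing in for real model outputs.
rng = np.random.default_rng(0)
E = rng.normal(size=(100, 768))
Y_predicted = [i % 3 for i in range(100)]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "baseline_embedding.hdf5")
    save_embeddings(path, E, Y_predicted)
    # Read the file back to check what was stored.
    with h5py.File(path, "r") as f:
        stored_E_shape = f["E"].shape
        stored_labels = f["Y_predicted"][:]

print(stored_E_shape, stored_labels[:5])
```

The same helper can be reused for the threshold dataset and the data stream, since all three files share the same two keys.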

If you use the DriftLens methodology or tool, please cite the following papers:

DriftLens Tool

    @inproceedings{greco2024driftlens,
      title={DriftLens: A Concept Drift Detection Tool},
      author={Greco, Salvatore and Vacchetti, Bartolomeo and Apiletti, Daniele and Cerquitelli, Tania and others},
      booktitle={Advances in Database Technology},
      volume={27},
      pages={806--809},
      year={2024},
      organization={Open proceedings}
    }

DriftLens Preliminary Methodology

    @INPROCEEDINGS{driftlens,
      author={Greco, Salvatore and Cerquitelli, Tania},
      booktitle={2021 International Conference on Data Mining Workshops (ICDMW)},
      title={Drift Lens: Real-time unsupervised Concept Drift detection by evaluating per-label embedding distributions},
      year={2021},
      volume={},
      number={},
      pages={341-349},
      doi={10.1109/ICDMW53433.2021.00049}
    }