This README is a literate programming document. When this document is loaded in Emacs with Org Mode, it can generate scripts and configuration for the documented steps.

For some interesting examples – including getting into complexities of chaining multiple code blocks, see Introduction to Literate Programming by Howard Abrams

This starts up or resuses a shell session named homelab-sh for interactive use. Some

setup in this document may use this session for stateful operations.

(switch-to-buffer (shell "homelab-sh"))

(switch-to-buffer "README.org")

(delete-other-windows )

(switch-to-buffer-other-window "homelab-sh")

(switch-to-buffer-other-window "README.org")- /r/homelab

- /r/selfhosted

- Home Lab Beginners guide - Hardware

Assure fortune and cowsay are installed

- Some additional cows

# Optionally specify a cow - whether or not in the safe list

mycow=$1

# cowfiles are in different paths on openSUSE, Debian and Ubuntu, so include them all

export COWPATH=/usr/share/cows:/usr/share/cowsay/cows:~/projects/homelab/cows:/usr/share/games/fortunes:/usr/share/games/fortunes-de

if [ -z ${mycow} ] ; then

IFS=',' read -r -a safe_cows <<< 'default,default,default,default,bud-frogs,duck,elephant,three-eyes,tux,rancher,rancher-trademarked,rancher-k3os,rancher-k3os-trademarked,kitten,robotfindskitten,owl,hellokitty,pony-smaller,unipony-smaller,moofasa,skeleton,www'

mycow=${safe_cows[$((RANDOM % ${#safe_cows[@]}))]}

fi

# Choose fortune cookie files based on selected cow

case ${mycow} in

rancher*|tux|chameleon)

db='linux computers debian science startrek'

;;

owl|satanic|eyes)

db='cookie definitions steven-wright deep-thoughts zippy mitch-hedberg'

;;

*)

db=

esac

cowcmd='cowsay'

if [[ $((RANDOM % 2)) == 0 ]]; then

cowcmd='cowthink'

fi

if [[ $(command -v fortune 2>/dev/null) && $(command -v cowsay 2>/dev/null) ]] ; then

IFS=',' read -r -a cowopts <<< "b,g,p,s,t,w,y,d"

f=$(fortune -c -e ${db})

# split source and content when using -c parameter

cookiefile=$(echo $f | cut -d'%' -f1)

fcontent=$(echo $f | cut -d'%' -f2-)

echo $fcontent | ${cowcmd} -f ${mycow} -${cowopts[$((RANDOM % ${#cowopts[@]}))]}

echo ${cookiefile}

echo

fiIt’s harder to pass multiple paths to fortune (at least on Debian), so links to

additional fortune files may be advised.

sudo ln -s ~/projects/homelab/fortunes/* /usr/share/games/fortunes/strfile -c % mitch-hedberg mitch-hedberg.datif [ $# -lt 1 ]

then

cat << HELP

$0 -- list all tags for a Docker image on a remote registry.

EXAMPLE:

- list all tags for ubuntu:

$0 ubuntu

- list all php tags containing apache:

$0 php apache

HELP

exit 0

fi

namespace=$(echo "${1}" | cut -s -d'/' -f1)

image=$(echo "${1}" | cut -s -d'/' -f2)

if [ -z "${namespace}" ]; then

namespace="library"

image="${1}"

fi

tags=$(curl --silent "https://registry.hub.docker.com/v2/repositories/${namespace}/${image}/tags?page_size=1000" | jq -r '.results[].name' | sort --version-sort)

if [ -n "$2" ] ; then

tags=$(echo ${tags} | grep "$2")

fi

echo "${tags}"- pass in URL to video that can be processed by

yt-dlp- N.B. we start with assumptions that may be relevant only to YouTube URLs

- Detect whether the video has sections/chapters

- Initiate transcription of single video or video chapters using

whisper - Format the transcript into paragraphs with the

wtpsplitlibrary - Generate a Markdown formatted transcript with video title and section titles

- Sign off and call it a day

- path of downloaded “parent”

.mp3audio file- this matches

'%(title)s/%(title)s.%(ext)s'fromyt-dlp

- this matches

TODO: GET RID OF THIS SHELL SCRIPT - it is deprecated in favor of the python script

WHISPER_MODEL=large-v3

SSH_HOST=aziriphale # null for hosted on current machine

SSH_USER=${USER}

WHISPER_CMD=

function transcribe_audio_file() {

# Send audio to whisper to produce transcription

# $1 is audio file path - NOTE: path is shell-escaped already

xaudiopath=$1

xaudiodir=$(dirname ${xaudiopath})

echo " Audio extraction of ${xaudiopath}"

echo " command will be rsync -av ${xaudiopath} ${SSH_USER}@${SSH_HOST}:${xaudiopath}"

if [ -n "${SSH_HOST}" ]; then

# use rsync to create new directories on target system

rsync -av ${xaudiopath} ${SSH_USER}@${SSH_HOST}:/tmp/transcribedir/

WHISPER_CMD="ssh ${SSH_USER}@${SSH_HOST} "

fi

WHISPER_CMD="${WHISPER_CMD} /home/${SSH_USER}/.local/bin/whisper --task transcribe --model ${WHISPER_MODEL} --word_timestamps True --output_format all --output_dir /tmp/transcribedir /tmp/transcribedir/$(basename ${xaudiopath})"

echo " ${WHISPER_CMD}"

${WHISPER_CMD}

return

}

function format_transcript() {

# $1 is a plain text file

# $2 is optional section title

# this function writes a markdown file with sentence and paragraph formatting

# output of script must be captured in the lovely global var concept that bash provides us

FORMAT_CMD="~/.local/python-venvs/wtpsplit/bin/python ~/gort.py ${1}"

formatted_transcript=$(ssh ${SSH_USER}@${SSH_HOST} ${FORMAT_CMD})

return

}

videourl="$1"

echo "Executing yt-dlp"

audiopath=$(yt-dlp \

-f 'bestaudio' \

--write-thumbnail \

--convert-thumbnails png \

--embed-metadata \

--check-formats \

--no-mtime \

--write-description \

--write-info-json \

--restrict-filenames \

--extract-audio \

--audio-format mp3 \

"${videourl}" \

--paths home:/tmp/ \

-o '%(title)s/%(title)s.%(ext)s' \

--split-chapters \

--write-info-json \

--print after_move:filepath \

-o "chapter:%(title)s/[%(section_number)02d]-%(section_title)s.%(ext)s")

safepath=$(printf '%q' "${audiopath}")

infofile=$(ls $(dirname ${audiopath})/*.info.json|head -1)

numchapters=$(jq -r '.chapters | length' ${infofile})

if [[ ${numchapters} == 0 ]] ; then

echo "there ain't no chapters in this one"

echo "TODO: transcribe unchaptered video file ${audiopath}"

transcribe_audio_file "${safepath}"

else

indexmax=$((${numchapters} - 1))

echo "there are ${numchapters} chapters in this video"

for indexnum in $(seq 0 ${indexmax}); do

chapnum=$((${indexnum} + 1))

chaptitle=$(jq -r ".chapters[${indexnum}] | .title" ${infofile})

chapfile=$(ls $(dirname ${safepath})/\[$(printf '%02d' ${chapnum})\]*mp3|head -1)

# chapfile=$(printf '%q' "${chapfile}")

echo "Chapter ${chapnum}: ${chaptitle} - file ${chapfile}"

echo "Transcribing... ${chapfile}"

transcribe_audio_file ${chapfile}

format_transcript /tmp/transcribedir/$(basename ${chapfile} | sed 's,mp3$,txt,')

echo "## ${chaptitle}" >> /tmp/gort.md

cat "${formatted_transcript}" >> /tmp/gort.md

done

fiThis came almost entirely from a gpt-4o session asking to transform all functionality from the bash script into python

- TODO: trim trailing whitespace from formatted transcript

+

#!/usr/bin/env python3

import os

import subprocess

import json

import argparse

# TODO: add exception handling and logging overall (replace print with logging)

# TODO: check for non-zero exit status for all SSH and RSYNC

# TODO: check for non-zero exit status for shell command

def clean_whitespace(text):

# Split the text into individual lines

lines = text.splitlines()

# Strip trailing whitespace from each line

stripped_lines = [line.rstrip() for line in lines]

# Rejoin the lines

return '\n'.join(stripped_lines)

def run_command(command):

process = subprocess.Popen(command, stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=True)

output, error = process.communicate()

return output.decode('utf-8'), error.decode('utf-8')

def transcribe_audio_file(audio_path, ssh_host, ssh_user, whisper_model):

print(f"Transcribing {audio_path}")

if ssh_host:

command = f"rsync -av {audio_path} {ssh_user}@{ssh_host}:/tmp/transcribedir/"

run_command(command)

command = f"ssh {ssh_user}@{ssh_host} /home/{ssh_user}/.local/bin/whisper --task transcribe --model {whisper_model} --word_timestamps True --output_format all --output_dir /tmp/transcribedir /tmp/transcribedir/{os.path.basename(audio_path)}"

else:

command = f"/home/{ssh_user}/.local/bin/whisper --task transcribe --model {whisper_model} --word_timestamps True --output_format all --output_dir /tmp/transcribedir {audio_path}"

output, error = run_command(command)

print(output)

print(error)

def format_transcript(transcript_path, ssh_host, ssh_user):

command = f"ssh {ssh_user}@{ssh_host} ~/.local/python-venvs/wtpsplit/bin/python ~/gort.py {transcript_path}"

output, error = run_command(command)

return clean_whitespace(output)

def main(video_url, whisper_model, ssh_host, ssh_user):

command = f"""yt-dlp -f 'bestaudio' --write-thumbnail --convert-thumbnails png --embed-metadata --check-formats --no-mtime --write-description --write-info-json --restrict-filenames --extract-audio --audio-format mp3 '{video_url}' --paths home:~/YouTube/ -o '%(channel)s/%(title)s/%(title)s.%(ext)s' --print after_move:filepath --split-chapters -o 'chapter:%(channel)s/%(title)s/[%(section_number)02d]-%(section_title)s.%(ext)s'"""

output, error = run_command(command)

audio_path = output.strip()

print(f"Audio path: {audio_path}")

info_file = next(f for f in os.listdir(os.path.dirname(audio_path)) if f.endswith('.info.json'))

with open(os.path.join(os.path.dirname(audio_path), info_file), 'r') as f:

video_info = json.load(f)

num_chapters = len(video_info.get('chapters', []))

video_title = video_info.get('title', 'Video') # Use 'Video' as a fallback

markdown_path = os.path.join(os.path.dirname(audio_path), os.path.basename(audio_path).removesuffix('.mp3') + '.org')

if num_chapters == 0:

print("No chapters found in the video")

transcribe_audio_file(audio_path, ssh_host, ssh_user, whisper_model)

transcript_path = os.path.join('/tmp/transcribedir', os.path.splitext(os.path.basename(audio_path))[0] + '.txt')

formatted_transcript = format_transcript(transcript_path, ssh_host, ssh_user)

with open(markdown_path, 'w') as f:

f.write(f"#+title: {video_title}\n\n")

# f.write(f"* {video_title}\n")

f.write(formatted_transcript)

else:

print(f"Found {num_chapters} chapters in the video")

with open(markdown_path, 'w') as f:

f.write(f"#+title: {video_title}\n\n")

# f.write(f"* {video_title}\n")

for index, chapter in enumerate(video_info['chapters'], start=1):

chap_title = chapter['title']

print("Debug: markdown path is " + markdown_path)

chap_file = next(f for f in os.listdir(os.path.dirname(audio_path)) if f.startswith(f"[{index:02d}]") and f.endswith('.mp3'))

print(f"Chapter {index}: {chap_title} - file {chap_file}")

transcribe_audio_file(os.path.join(os.path.dirname(audio_path), chap_file), ssh_host, ssh_user, whisper_model)

transcript_path = os.path.join('/tmp/transcribedir', os.path.splitext(chap_file)[0] + '.txt')

formatted_transcript = format_transcript(transcript_path, ssh_host, ssh_user)

with open(markdown_path, 'a') as f:

f.write(f"\n* {chap_title}\n")

f.write(formatted_transcript)

# TODO: validate args: non-empty URL, valid model names, accessible SSH host

if __name__ == '__main__':

parser = argparse.ArgumentParser(description='Transcribe YouTube videos using Whisper AI')

parser.add_argument('video_url', help='URL of the YouTube video to transcribe')

parser.add_argument('--model', default='large-v3', help='Whisper AI model to use for transcription')

parser.add_argument('--ssh-host', help='SSH host to run the transcription on')

parser.add_argument('--ssh-user', default=os.environ['USER'], help='SSH username')

args = parser.parse_args()

# hard-coded section

ssh_host = 'aziriphale'

ssh_user = 'gregj'

main(args.video_url, args.model, args.ssh_host, args.ssh_user)/tmp/yt-audio.sh 'https://www.youtube.com/watch?v=eoFlbna9-cY'/tmp/yt-audio.sh 'https://www.youtube.com/watch?v=nbSRBs0EMlE'cd /mnt/arch # or where you are preparing the chroot dir

mount -t proc /proc proc/

mount --rbind /sys sys/

mount --rbind /dev dev/TODO: update how-to for Synology DSM

Instructions for setting up on Debian

sudo apt install squidAdd caching for large objects! Put this in /etc/squid/conf.d/gregs-cache.conf

# http_port 3128 transparent

http_access allow all

# ref https://superuser.com/a/972702/74209

# we want to cache large objects

maximum_object_size 6 GB

cache_dir ufs /var/spool/squid 30720 16 256

cache_mem 256 MB

maximum_object_size_in_memory 512 KB

cache_replacement_policy heap LFUDA

range_offset_limit -1

quick_abort_min -1 KB

in /etc/apt/apt.conf.d/proxy.conf

Acquire::http::Proxy "http://172.16.17.5:3128/";

Acquire::https::Proxy "http://172.16.17.5:3128/";

Change the following in /etc/sysconfig/proxy

PROXY_ENABLED="yes"

HTTP_PROXY="http://172.16.17.5:3128/"

HTTPS_PROXY="http://172.16.17.5:3128/"

NO_PROXY="localhost,127.0.0.1,172.16.17.0/24,.magichome"

When setting up a Kubernetes RKE cluster, the same Docker image gets pulled on separate connections to each of the nodes. A pull-through Docker registry would solve the problem, acting as a caching server for Docker images.

However, Docker’s built-in support will only work with images in the primary Docker registry.

docker-registry-proxy works with multiple registries.

This is a proxy that also defaults to 3128 (already used by Squid) - so I’m forwarding to port 6128

docker run -d --rm --name docker_registry_proxy -it \

-p 0.0.0.0:6128:3128 \

-v /data/docker_mirror_cache:/docker_mirror_cache \

-v /data/docker_mirror_certs:/ca \

-e REGISTRIES="k8s.gcr.io gcr.io quay.io" \

-e AUTH_REGISTRIES="auth.docker.io:gregoryg:NLCsEKtk6cNeE5 quay.io:gregoryg:AJYgeUXbfjiRFNPiyM5Wrc+NiEBkIPe1lpjkp2erB6xaETMZowuaU6qLEkbFB7h+Rr4ExAoRrstcpLSt4c3zJtEJM/+mLQ3GCaQ9OeQ1Plc=" \

rpardini/docker-registry-proxy:latest

# tiangolo/docker-registry-proxy:latest

# -e REGISTRIES="k8s.gcr.io gcr.io quay.io your.own.registry another.public.registry" \

# -e AUTH_REGISTRIES="auth.docker.io:dockerhub_username:dockerhub_password your.own.registry:username:password" \Create file /etc/systemd/system/docker.service.d/http-proxy.conf

sudo mkdir -p /etc/systemd/system/docker.service.d[Service]

Environment="HTTP_PROXY=http://172.16.17.5:6128/"

Environment="HTTPS_PROXY=http://172.16.17.5:6128/"

Environment="NO_PROXY=localhost,127.0.0.1,docker-registry.example.com,.corp,quay.io"

Get the CA certificate from the proxy and make it a trusted root. The directory for the certificate differs on OpenSUSE and Ubuntu

if [ -d "/etc/pki/trust/anchors" ] ; then

certdir=/etc/pki/trust/anchors

else

certdir=/usr/share/ca-certificates

fi

curl http://172.16.17.5:6128/ca.crt | sudo tee ${certdir}/docker_registry_proxy.crt

echo "docker_registry_proxy.crt" | sudo tee -a /etc/ca-certificates.conf

sudo update-ca-certificates --freshReload and restart

sudo systemctl daemon-reload

sudo systemctl restart docker- Clear

dockerdof everything not currently running:docker system prune -a -f beware. - Pull something, like

docker pull ubuntu:20.04 - Watch the caching proxy logs on Lab-Server1

docker logs docker_registry_proxy --followThen do, for example, docker pull k8s.gcr.io/kube-proxy-amd64:v1.10.4 and watch the logs on the caching proxy, it should list a lot of MISSes.

Then, clean again, and pull again. You should see HITs! Success.

Do the same for docker pull ubuntu and rejoice.

Test your own registry caching and authentication the same way; you don’t need docker login, or .docker/config.json anymore.

- kubernetes/01-k8s-distribution/rancher-rke/

- Have a look at the two canonical starter configs from Rancher:

- My specific homelab config

- Once you have the config to your liking, run

# rke up rke up --config cluster.yaml --ssh-agent-auth KUBECONFIG=kube_config_cluster.yaml kubectl get nodes

helm install rancher rancher-latest/rancher \

--namespace cattle-system \

--create-namespace \

--set hostname=rancher.example.com- To get the

cattle-cluster-agentDeployment to resolve myrancher.example.comserver URL, I had to add the following toDeployment.spec.template.spechostAliases: - hostnames: - rancher.example.com ip: 172.16.17.14

- SKIP THIS for RKE - Canal is already installed and configured

- At the end of this step you should see all nodes reporting

readystatuskubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

- There are some options for getting a Web UI overview of either a single cluster or multiple clusters. These will usually offer the ability to display resource usage, view and edit running resources, and create new resources. Some allow higher level options like setting workloads to run on multiple clusters, deploying secrets and config maps across clusters, etc.

- A great choice for this is Rancher (not RKE or K3s, which are Kubernetes distributions

offered by Rancher Labs). All you have to do to get started is to follow the guide at

Rancher Docs: Manual Quick Start. The TL;DR is here.

docker run --name rancher -d --restart=unless-stopped -p 0.0.0.0:80:80 -p 0.0.0.0:443:443 rancher/rancher - Run this on any server you wish that can be seen by your cluster. It can also be run on one of your cluster nodes, of course.

- I’m putting this step ahead of higher-level networking or any new objects that might create persistent volume claims

- OSS project created by Rancher Labs

kubectl apply -f https://raw.githubusercontent.com/longhorn/longhorn/master/deploy/longhorn.yaml - If you want to create easy access to the Longhorn UI, change the

longhorn-frontendservice to either NodePort or LoadBalancer. If the latter, you will need to implement a load balancer solution such as MetalLB (see below)

- Add annotation to the desired StorageClass resource

annotations: storageclass.kubernetes.io/is-default-class: "true"

- Check with

kubectl get sc - Note that you can also install Longhorn using the Rancher UI if you are using that: Rancher -> Apps -> Launch -> Longhorn

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: longhorn-prometheus-servicemonitor

namespace: cattle-monitoring-system

labels:

name: longhorn-prometheus-servicemonitor

spec:

selector:

matchLabels:

app: longhorn-manager

namespaceSelector:

matchNames:

- longhorn-system

endpoints:

- port: manager- First step, let’s make it possible to create

LoadBalancerresources - On our bare metal cluster, we’ll use MetalLB - be sure to check releases to get the right URL

- TODO: Investigate reserving host network IPs

# use new namespace metallb-system kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml # kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.11.0/manifests/namespace.yaml # kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.11.0/manifests/metallb.yaml # On first install only # kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"

- Give MetalLB a pool of IPs

- Here I’m using a pool from the primary home network

apiVersion: metallb.io/v1beta1 kind: IPAddressPool metadata: name: homelab-pool namespace: metallb-system spec: addresses: - 172.16.17.230-172.16.17.250

apiVersion: metallb.io/v1beta1 kind: L2Advertisement metadata: name: example namespace: metallb-system - The older way to do it via a ConfigMap

apiVersion: v1 kind: ConfigMap metadata: namespace: metallb-system name: config data: config: | address-pools: - name: default protocol: layer2 addresses: - 172.16.17.230-172.16.17.250

- Here I’m using a pool from the primary home network

- First have a

values.yamlfile readyconfigInline: address-pools: - name: default protocol: layer2 addresses: - 172.16.17.230-172.16.17.250

- Now use Helm to do the thang

helm repo add metallb https://metallb.github.io/metallb helm install --namespace metallb-system --create-namespace metallb metallb/metallb -f /tmp/metallb-values.yaml

- Install the Helm 3.x client from Helm releases

- That’s all there is to do! Installing a Helm chart will put required resources on the server

- Install the KUDO client from Kudo releases

- This is a

kubectlplugin; the binary is namedkubectl-kudo. It can be invoked as-is, but is meant to be used in conjunction withkubectl. Place it in the Path and test it withkubectl kudo version - Install server components with

kubectl kudo init

- We will do this with the mature Helm chart

- Change the root password below

# Create the namespace we will use kubectl create ns sunshine helm install mysql stable/mysql \ -n sunshine \ --set mysqlRootPassword=adminpass,persistence.storageClass=longhorn,persistence.size=20Gi - Note that the Longhorn UI should show a 20Gi volume.

- To use the

mysqlCLI or other client, figure out whether you want to forward the port, use a NodePort or create a load balancer

- For this we will use KUDO, which offers a mature, purely declarative operator

- Zookeeper first

kubectl kudo install zookeeper --instance=zk - Wait until all Zookeeper pods in your chosen namespace are ready, then

kubectl kudo install kafka \ --instance=kafka \ -p ZOOKEEPER_URI=zk-zookeeper-0.zk-hs:2181,zk-zookeeper-1.zk-hs:2181,zk-zookeeper-2.zk-hs:2181

rke remove --config cluster.yaml --ssh-agent-authsudo apt purge `dpkg -l | grep pf9|cut -d' ' -f3`

sudo rm -rf /var/opt/pf9/ /opt/pf9/ /var/log/pf9/ /var/log/podssudo rm -rf /var/lib/longhorn

sudo rm -rf /etc/cni/net.d/# Add config to dnsmasq used by kube-dns

apiVersion: v1

kind: ConfigMap

metadata:

name: kube-dns

namespace: kube-system

data:

myhosts: |

172.16.17.5 gorto gorto.magichomespec:

template:

spec:

volumes:

- name: extra-hosts

configMap:

name: kube-dns

volumeMounts:

- name: extra-hosts

mountPath: /etc/hosts.d

args:

- --hostsdir=/etc/hosts.d- State “DONE” from [2020-02-23 Sun 12:10]

- A volume that handles persistent storage using a PersistentVolumeClaim will survive Pod restarts. This is true of Konvoy’s default storage class on any cloud platform, and is true of persistent storage providers such as Portworx and Mayadata.

- Define a PersistentVolumeClaim using the

awsebscsiprovisionerstorage classapiVersion: v1 kind: PersistentVolumeClaim metadata: labels: app: hello-world name: hello-pvc spec: accessModes: - ReadWriteOnce resources: requests: storage: 10Gi storageClassName: awsebscsiprovisioner volumeMode: Filesystem

- Create a PVC using the above manifest. List the resulting PVC resource and see

that it is created and in a

Pendingstate:kubectl create -f manifests/hello-pvc.yaml kubectl get pvc -o wide

- Create a PVC using the above manifest. List the resulting PVC resource and see

that it is created and in a

- Define a Pod that makes use of the PVC

apiVersion: v1 kind: Pod metadata: creationTimestamp: null labels: app: myhello name: myhello spec: containers: - image: nginxdemos/hello name: myhello resources: {} volumeMounts: - name: myhellovol mountPath: /data dnsPolicy: ClusterFirst restartPolicy: Never volumes: - name: myhellovol persistentVolumeClaim: claimName: hello-pvc status: {}

- Create the Pod, then list both the pod and the PersistentVolume that was created

from the PVC.

kubectl create -f manifests/myhello-pod.yaml until [ $(kubectl get pods myhello -o jsonpath='{.status.phase}') == 'Running' ]; do sleep 1; done kubectl get pod,pv,pvc

- Create a file on the mounted volume, delete the pod, recreate the pod and verify

that the file is still there

kubectl exec myhello -- sh -c "touch /data/persistent.flag && ls /data/" kubectl delete pod myhello && kubectl create -f manifests/myhello-pod.yaml until [ $(kubectl get pods myhello -o jsonpath='{.status.phase}') == 'Running' ]; do sleep 1; done kubectl exec myhello -- sh -c "ls /data/"

- Create the Pod, then list both the pod and the PersistentVolume that was created

from the PVC.

- State “DONE” from [2020-02-23 Sun 12:10]

- This would be a volume used by each pod, and valid for the life of the individual

Pod. One reason to have this would be for multiple containers in the pod to indicate

readiness and “liveness”. For this reason, the example will be a multi-container pod

with an Init container writing a file to indicate readiness, and a container that

periodically writes status for a liveness probe.

apiVersion: apps/v1 kind: Deployment metadata: creationTimestamp: null labels: app: multivol name: multivol spec: replicas: 3 selector: matchLabels: app: multivol strategy: {} template: metadata: creationTimestamp: null labels: app: multivol spec: initContainers: - name: init1 image: busybox command: ["sh", "-c", "touch /status/running"] volumeMounts: - name: statusdir mountPath: /status containers: - name: nginx image: nginx resources: {} readinessProbe: exec: command: ["sh", "-c", "ls /opt/status/running && true"] volumeMounts: - name: statusdir mountPath: /opt/status volumes: - name: statusdir emptyDir: {} status: {}

- Create, then describe the deployment. Note the same volume is deployed at

different mount points in each container

kubectl create -f manifests/multivol-deployment.yaml until [ $(kubectl get pods -l app=multivol -o jsonpath='{.items[*].status.phase}' | grep 'Running' -o | wc -l) -eq 3 ]; do sleep 1; done kubectl describe deployment multivol | grep Mounts: -A 4

- Create, then describe the deployment. Note the same volume is deployed at

different mount points in each container

- State “MAYBE” from “STARTED” [2020-02-24 Mon 08:36]

- State “STARTED” from [2020-02-23 Sun 12:10]

- This is a matter of mounting the volume as ReadWriteMany. The underlying file system must support sharing across multiple nodes. Examples of this type of file system include NFS and cloud implementations such as AWS EFS.

- Create an EFS file system in the AWS Console or CLI

- Konvoy comes pre-installed with Helm and Tiller. Install the EFS Provisioner using

a Helm chart. You will need the EFS file system ID and the AWS region it’s in. Use

the below as a guide

helm install --name efs-provisioner \ --namespace default \ --set efsProvisioner.efsFileSystemId=fs-d7a62e7d \ --set efsProvisioner.awsRegion=us-west-2 \ stable/efs-provisioner

- We will define a deployment with 3 replicas. Each pod will mount the same persistent

volume. As before, the pods will mount a volume based on a PersistentVolumeClaim.

apiVersion: v1 kind: PersistentVolumeClaim metadata: labels: app: diskshare name: diskshare-pvc spec: accessModes: - ReadWriteMany resources: requests: storage: 6Ki storageClassName: aws-efs volumeMode: Filesystem

apiVersion: apps/v1 kind: Deployment metadata: creationTimestamp: null labels: app: diskshare name: diskshare spec: replicas: 3 selector: matchLabels: app: diskshare strategy: {} template: metadata: creationTimestamp: null labels: app: diskshare spec: containers: - name: nginx image: nginx command: ["sh", "-c", "echo 'Wondrous Disk Content at WDC!' > /usr/share/nginx/html/index.html"] resources: {} volumeMounts: - name: sharevol mountPath: /usr/share/nginx/html volumes: - name: sharevol persistentVolumeClaim: claimName: diskshare-pvc status: {}

- Create PVC and Deployment, verify all pods share the disk

- CSI Volume Expansion (k8s.io)

- Resizing in-use volumes can only be done on specific storage classes that support dynamic resizing. It is effected by editing the PersistentVolumeClaim object.

docker run superseb/ranchercheck https://rancher.hypecyclist.org:8443# on all nodes - get rid of ALL docker containers -- too general if anything else may be running

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'docker stop `docker ps -aq`'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'docker rm `docker ps -aq`'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh '# remove CNI and Longhorn remnants'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo rm -rfv /var/lib/longhorn/* /data/longhorn/* /etc/cni/* /var/lib/kubelet /etc/rancher /var/lib/rancher /etc/kubernetes'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh '# iptables'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo iptables -F -t nat'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo iptables -X -t nat'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo iptables -F -t mangle'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo iptables -X -t mangle'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo iptables -F'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo iptables -X'

WCOLL=~/projects/homelab/pdsh/all-nodes.txt pdsh -R ssh 'sudo systemctl restart docker'

As of OpenSSH server 9.x, there is a change needed to make SSH work with k3s

in /etc/ssh/sshd_config make sure you have the following settings

PubkeyAuthentication yes

PubkeyAcceptedKeyTypes=+ssh-rsa

Working directory (from base of the homelab directory)

- ref Get started with Kubernetes network policy

This requires running pods with color labels (blue, red) and namespace labels*

kubectl create namespace k8snetpol

kubectl -n k8snetpol run blue --image=nginx --labels app=blue,color=blue

kubectl -n k8snetpol run red --image=nginx --labels app=red,color=redIn this first example, inoming traffic to pods with label color=blue are allowed only

if they come from a pod with color=red on port 80

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

name: allow-same-namespace

namespace: k8snetpol

spec:

podSelector:

matchLabels:

color: blue

ingress:

- from:

- podSelector:

matchLabels:

color: red

ports:

- port: 80kubectl apply -f k8s-red-is-cool-for-blue-netpol.yamlFollowing along with Calico for Kubernetes networking: the basics & examples

One nodejs app deployment that has access to redis. A php deployment that has no

access to redis

1058 k create deploy redis –image=redis 1059 kx 1060 k -n default get all 1061 k scale deployment redis –replicas=3 1062 k get all 1063 k get pods -o wide 1064 k get pods -o wide -w 1065 k create deploy redis –image=readytalk/nodejs 1066 k create deploy nodejs –image=readytalk/nodejs 1067 k get all 1068 k get ev 1069 k get all 1070 docker search php 1071 k create deploy phpmyadmin –image=phpmyadmin/phpmyadmin 1072 k get all 1073 k get deployment –show-labels 1074 pwd 1075 history

Created using

kubectl create deploy redis --image=readytalk/nodejs -o yaml --dry-run=clientkubectl expose deployment redis --port=6379 --target-port=6379 -o yaml --dry-run=client

apiVersion: apps/v1

kind: Deployment

metadata:

creationTimestamp: null

labels:

app: redis

name: redis

spec:

replicas: 1

selector:

matchLabels:

app: redis

strategy: {}

template:

metadata:

creationTimestamp: null

labels:

app: redis

spec:

containers:

- image: readytalk/nodejs

name: nodejs

resources: {}

status: {}

---

apiVersion: v1

kind: Service

metadata:

creationTimestamp: null

labels:

app: redis

name: redis

spec:

ports:

- port: 6379

protocol: TCP

targetPort: 6379

selector:

app: redis

status:

loadBalancer: {}Created using

kubectl create deploy nodejs --image=readytalk/nodejs -o yaml --dry-run=clientapiVersion: apps/v1 kind: Deployment metadata: creationTimestamp: null labels: app: nodejs name: nodejs spec: replicas: 1 selector: matchLabels: app: nodejs strategy: {} template: metadata: creationTimestamp: null labels: app: nodejs spec: containers: - image: readytalk/nodejs name: nodejs resources: {} status: {}

Created using

kubectl create deploy nodejs-hello --image=heroku/nodejs-hello-world -o yaml --dry-run=clientapiVersion: apps/v1 kind: Deployment metadata: creationTimestamp: null labels: app: nodejs-hello name: nodejs-hello spec: replicas: 1 selector: matchLabels: app: nodejs-hello strategy: {} template: metadata: creationTimestamp: null labels: app: nodejs-hello spec: containers: - image: heroku/nodejs-hello-world name: nodejs-hello-world - image: gregoryg/sh-net-utils name: utils command: ["sleep"] args: ["1d"]

Created using

kubectl create deploy phpmyadmin --image=phpmyadmin/phpmyadmin -o yaml --dry-run=clientkubectl expose deploy phpmyadmin --port=80 --target-port=80 -o yaml --dry-run=clientapiVersion: apps/v1 kind: Deployment metadata: creationTimestamp: null labels: app: phpmyadmin name: phpmyadmin spec: replicas: 1 selector: matchLabels: app: phpmyadmin strategy: {} template: metadata: creationTimestamp: null labels: app: phpmyadmin spec: containers: - image: phpmyadmin/phpmyadmin name: phpmyadmin resources: {} status: {} --- apiVersion: v1 kind: Service metadata: creationTimestamp: null labels: app: phpmyadmin name: phpmyadmin spec: ports: - port: 80 protocol: TCP targetPort: 80 selector: app: phpmyadmin status: loadBalancer: {}

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

name: allow-redis-nodejs

spec:

podSelector:

matchLabels:

service: redis

ingress:

- from:

- podSelector:

matchLabels:

service: nodejs

ports:

- protocol: TCP

port: 6379Ref: illuminatio: the Kubernetes Network Policy Validator - inovex Blog

pip3 install illuminatiokubectl create deployment web --image=nginx

kubectl expose deployment web --port=80 --target-port=80apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: web-deny-all

spec:

podSelector:

matchLabels:

app: web

ingress: []kubectl apply -f illuminatio-example-deny-all-netpol.yamlilluminatio runGitHub - kubevious/kubevious: Kubevious - application centric Kubernetes UI a…

kubectl create namespace kubevious

helm repo add kubevious https://helm.kubevious.io

helm upgrade --atomic -i kubevious kubevious/kubevious --version 0.7.26 -n kubevious

kubectl port-forward $(kubectl get pods -n kubevious -l "app.kubernetes.io/component=kubevious-ui" -o jsonpath="{.items[0].metadata.name}") 8080:80 -n kubevious- ref: GitHub - PhilipSchmid/k8s-home-lab: Setup for a K8s home lab running on a sin…

nfs-commonmust be installed on all k8s nodesnfs: server: 172.16.17.5 path: /volume3/ISOs storageClass: create: true defaultClass: false name: nfs accessModes: ReadWriteMany

helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/ helm repo update helm upgrade -i --create-namespace --atomic nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \ --version 4.0.14 \ --namespace nfs-subdir-provisioner \ -f /tmp/nfs-values.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: data-k8s-pv

spec:

storageClassName: "" # ignore default storage class

capacity:

storage: 1Gi

accessModes:

- ReadWriteMany

persistentVolumeReclaimPolicy: Retain

nfs:

path: /data/data-files/k8s

server: glados.magichome

readOnly: falseapiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: data-k8s-pv

spec:

storageClassName: "" # ignore default storage class

accessModes:

- ReadWriteMany

resources:

requests:

storage: 1GiapiVersion: v1

kind: Pod

metadata:

name: nginx-nfs-pod

labels:

name: nginx-nfs-pod

spec:

containers:

- name: nginx-nfs-pod

image: fedora/nginx

ports:

- name: web

containerPort: 80

volumeMounts:

- name: nfsvol

mountPath: /usr/share/nginx/html

securityContext:

supplementalGroups: [1000]

# privileged: false

volumes:

- name: nfsvol

persistentVolumeClaim:

claimName: data-k8s-pvapiVersion: v1

kind: Pod

metadata:

name: busybox-nfs-pod

labels:

name: busybox-nfs-pod

spec:

containers:

- name: busybox-nfs-pod

image: busybox

command: ["sleep", "60000"]

volumeMounts:

- name: nfsvol-2

mountPath: /usr/share/busybox

readOnly: false

securityContext:

supplementalGroups: [1000]

# privileged: false

volumes:

- name: nfsvol-2

persistentVolumeClaim:

claimName: data-k8s-pvhelm repo add jetstack https://charts.jetstack.io

helm repo update

helm upgrade -i --create-namespace --atomic cert-manager jetstack/cert-manager \

--namespace cert-manager \

--set installCRDs=true \

--version v1.6.1- Examples of working parent-authenticated URLs

- Rancher Server

- Managed Cluster

- Grafana dashboard

- Longhorn UI

- Could it be this

L/M Rancher URL local vs managed cluster ID API namespace services URL to service etc M https://rancher.hypecyclist.org:8443 null or k8s/clusters null or c-r5dj9 api/v1 longhorn-system ‘services’ http:longhorn-frontend:80 proxy M https://rancher.hypecyclist.org:8443 k8s/clusters c-r5dj9 api/v1 cattle-monitoring-system ‘services’ http:rancher-monitoring-grafana:80 proxy/+orgId=1 L https://rancher.hypecyclist.org:8443 null null api/v1 cattle-monitoring-system ‘services’ http:rancher-monitoring-grafana:80 proxy/+orgId=1

set env vars rancher_access and rancher_secret to the Access Key and Secret Key

values from the Rancher UI API Keys

For now, do this manually in the homelab-sh session

read -p "Password: " rancher_access

read -p "Password: " rancher_secret

export rancher_access rancher_secretcurl -s -k \

-u "${rancher_access}:${rancher_secret}" \

-X GET \

-H 'Accept: application/json' \

-H 'Content-Type: application/json' \

'https://rancher.hypecyclist.org:8443/v3/clusters/' > /tmp/rancher-clusters.jsoncat /tmp/rancher-clusters.json | \

jq -r '.data[] | "\(.name)\t\(.id)"'cat /tmp/rancher-clusters.json | \

jq -r '.data[] | select (.name == "goozilla") | {"name": .name, "id": .id, "links": .links}'{ “name”: “goozilla”, “id”: “c-vb78v”, “links”: { “apiServices”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/apiservices”, “clusterAlertGroups”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clusteralertgroups”, “clusterAlertRules”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clusteralertrules”, “clusterAlerts”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clusteralerts”, “clusterCatalogs”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clustercatalogs”, “clusterLoggings”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clusterloggings”, “clusterMonitorGraphs”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clustermonitorgraphs”, “clusterRegistrationTokens”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clusterregistrationtokens”, “clusterRoleTemplateBindings”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clusterroletemplatebindings”, “clusterScans”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/clusterscans”, “etcdBackups”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/etcdbackups”, “namespaces”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/namespaces”, “nodePools”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/nodepools”, “nodes”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/nodes”, “notifiers”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/notifiers”, “persistentVolumes”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/persistentvolumes”, “projects”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/projects”, “remove”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v”, “self”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v”, “shell”: “wss://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v?shell=true”, “storageClasses”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/storageclasses”, “subscribe”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/subscribe”, “templates”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/templates”, “tokens”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v/tokens”, “update”: “https://rancher.hypecyclist.org:8443/v3/clusters/c-vb78v” } }

Using a tiling window manager such as EXWM, you don’t have the window dressing of graphical utilities to set default apps. Here are some steps to setting those things at a lower level

sudo update-alternatives --config /usr/bin/x-www-browser x-www-browser /usr/bin/microsoft-edge 10In ~/.config/mimeapps.list

[Default Applications]

x-scheme-handler/http=microsoft-edge.desktopxdg-settings set default-web-browser microsoft-edge.desktopWhy is this so hard? Let’s make it easier

- ref: Let’s Encrypt for Private Networks - Without The Sarcasm

- dnsapi · acmesh-official/acme.sh Wiki · GitHub

- I use Cloudflare, so this seems the best option

- Cloudflare’s

origin-ca-issuer - The following steps worked [2022-05-31 Tue]

- On the Rancher cluster

- Install cert-manager

helm upgrade -i --create-namespace --atomic cert-manager jetstack/cert-manager \ --namespace cert-manager \ --set installCRDs=true \ --version v1.7.0- Install Rancher with cert-manager as ingress

helm install rancher rancher-latest/rancher \ --namespace cattle-system \ --create-namespace \ --set hostname=ixnay.hombre.com \ --set ingress.tls.source=letsEncrypt \ --set letsEncrypt.email=ixnay.hombre@poodle.com

- Chrome may need cookies cleared, restart or whatever - try in incognito tab if you get “certificate is valid, but site is unsafe”

- On the Rancher cluster

The key is to set an option in the snd-hda-intel module. power_save=0 means never

go into power saving mode

- On Ubuntu 20.4

in

/etc/modprobe.d/alsa-base.conf# GJG stop the buzzing from headphone jack options snd-hda-intel power_save=0 power_save_controller=N - On openSUSE Tumbleweed

in

/etc/modprobe.d/42-power-audio.confoptions snd_hda_intel power_save=0 power_save_controller=0

The problem: USB devices may be set to suspend after being idle

The fix: in /etc/pulse/default.pa comment out the line reading load-module

module-suspend-on-idle

Test using pacmd list-sources

Oh it can be quite the trick finding a working URL

sudo apt -y install cups cups-bsd cups-filters

sudo lpinfo -v -l

system-config-printer# Find the correct printer name

sudo cat /etc/cups/printers.conf

# Set pages on printer to print in reverse order

sudo lpadmin -p HP-Officejet-Pro-8030 -o outputorder-default=reversesudo apt install --reinstall cups cups-filters-core-drivers cups-filtersAnd then REBOOT - not kidding

Still searching for what various apps are looking at when doing ‘follow system theme’

The gsettings command affects Open File dialog and … was noch?

Also look at gtk-theme

gsettings set org.gnome.desktop.interface color-scheme 'prefer-dark'

gsettings get org.gnome.desktop.interface gtk-theme

gsettings set org.gnome.desktop.interface gtk-theme 'Adwaita-dark'gsettings set org.gnome.desktop.interface color-scheme 'prefer-light'

gsettings get org.gnome.desktop.interface gtk-theme

gsettings set org.gnome.desktop.interface gtk-theme 'Adwaita'- Ref: Ubuntu 16 and touchscreen scrolling in FireFox - Ask Ubuntu

- Change config param in Firefox from 2 to 1 about:config param: dom.w3c_touch_events.enabled=1

- Add env var to (believe it or not)

/etc/security/pam_env.confMOZ_USE_XINPUT2 DEFAULT=1

It may be necessary to create a Longhorn Volume/PVC named data-rabbit-rabbitmq-0

helm install rabbit bitnami/rabbitmq \

--set persistence.storageClass=longhorn \

--namespace rabbit \

--set metrics.enabled=true \

--set metrics.serviceMonitor.enabled=truePainful, but necessary for now ref: What commands (exactly) should replace the deprecated apt-key? - Ask Ubuntu ref: apt-key Is Deprecated. How To Add OpenPGP Repository Signing Keys Without It …

- Set up the key url and file name, and open a shell in

/tmp

(cd "/tmp")

(shell "gg-tmp-sh")(read-string "URL for signing key: ")- Download the key: Org-babel note: specify the org variables only on this first session definition to avoid being re-prompted for URL

export keyfile=$(basename ${keyurl})

curl -s -L -O ${keyurl}- Verify that the filetype is

PGP public key block Public-Key (old)

file /tmp/${keyfile}- If your key is in a different format, convert it by importing it into a temp keyring,

then exporting it again

Here we go through the steps regardless because it doesn’t hurt to “convert” in any case

gpg --no-default-keyring --keyring ./temp-keyring.gpg --import ${keyfile}

gpg --no-default-keyring --keyring ./temp-keyring.gpg --export --output ${keyfile}_keyring.gpg

rm temp-keyring.gpg- Now that you have your converted key, do not add it to apt’s trusted keystore by

copying it into

/etc/apt/trusted.gpg.d/. Instead, put it somewhere like/usr/local/share/keyrings/. (You’ll need to create that keyrings directory first.)sudo mkdir -p /usr/local/share/keyrings/ sudo mv -iv ${keyfile}_keyring.gpg /usr/local/share/keyrings/ - At this point, nothing has changed and apt doesn’t know the key exists. The last step

is to modify the specific

.listfile for the repository to tell apt where to find the key for that specific repo.Edit the file

/etc/apt/sources.list.d/<example>.list, and in between deb and the url, add[signed-by=/usr/local/share/keyrings/<your-keyfile-name>.gpg]Now apt will accept that key’s signature for all packages in that repo and only that repo.

e appear in dired when I type

C-; - its stunning usefulness is allowing you a shortcut to enter any of several

thousand emojis.

Only works if it doesn’t remove programs you want that depend on it

sudo apt purge ibus23.2.2 Second and maybe best choice - keep Ibus but change settings to rid yourself of the emoji key

Test this afterward to make sure nothing in ibus is stealing keys you want

Do it via gui: ibus-settings, emoji tab

Do it via command line:

gsettings set org.freedesktop.ibus.panel.emoji hotkey "@as []"Zoom packages this as a dependency - which is quite idiotic.

If there is no software you care about that depends on ibus, you can simply do

sudo apt purge ibus

Otherwise, plead with package maintainers to remove the dependency.

In the case of Zoom that approach has so far proven fruitless, so remove the dependency

in the .deb package as explained here:

hashman.ca :: Repack Zoom .debs to remove the `ibus` dependency

scratch=$(mktemp -d)

# Extract package contents

dpkg -x ~/Downloads/zoom_amd64.deb $scratch

# Extract package control information

dpkg -e ~/Downloads/zoom_amd64.deb $scratch/DEBIAN

# Remove the ibus dependency

sed -i -E 's/(ibus, |, ibus)//' $scratch/DEBIAN/control

# Rebuild the .deb

dpkg -b $scratch patched_zoom_amd64.debhelm repo add bitnami https://charts.bitnami.com/bitnami

helm repo update

helm -n mariadb install mariadb bitnami/mariadb \

--set auth.rootPassword=${mariadbRootPass} \

--set auth.username=${mariadbUser},auth.password=${mariadbUserPass} \

--set primary.persistence.storageClass=longhorn,primary.persistence.size=82Gi \

--set primary.service.type=NodePort,primary.service.nodePorts.mysql=30306 \

--set metrics.enabled=false,metrics.serviceMonitor.enabled=false \

--create-namespacehelm repo add spark-operator https://googlecloudplatform.github.io/spark-on-k8s-operator

helm install my-release spark-operator/spark-operator --namespace spark-operator --create-namespace

helm install sparky spark-operator/spark-operator \

--namespace sparky \

--create-namespaceManage my virtual environments

mkdir -p ~/.local/python-venvs/sudo apt -y install python3-venv

cd ~/.local/python-venvs/

python3 -m venv jupyter

source ~/.local/python-venvs/jupyter/bin/activateUse the Emacs python-venv package (ref my Emacs setup in Python Mode Setup ≫ github.com

M-x pyvenv-create M-x pyvenv-workon

pip-search is a given for all environments

configparser

findspark

google-auth

google-auth-httplib2

google-search-results

jupyter

jupyterlab

pandas

pip-search

pyspark

pystardog

beautifulsoup4

bs4

cachetools

certifi

charset-normalizer

google

google-api-core

google-api-python-client

google-auth

google-auth-httplib2

google-auth-oauthlib

google-pasta

googleAPI

googleapis-common-protos

httplib2

idna

markdown-it-py

mdurl

oauth2client

oauthlib

pip

pip-search

protobuf

pyasn1

pyasn1-modules

Pygments

pyparsing

requests

requests-oauthlib

rich

rsa

setuptools

six

soupsieve

uritemplate

urllib3As opposed to myspark

databricks-cli

databricks-connect

pip-search

This environment can grow huge quickly and will merit careful management: uninstall outdated methods & APIs for example

streamlit

python-dotenv

faiss-cpu

langchain

openai

pip-search

- Installing the R kernel in Jupyter Lab

sudo apt -y install libffi-dev libssl-dev libxml2-dev libxslt1-dev libjpeg-dev zlib1g-dev libcurl4-nss-devinstall.packages('devtools') devtools::install_github("IRkernel/IRkernel") IRkernel::installspec()

In addition to jupyter and/or jupyterlab

pip install pypandoc pyspark findspark pyTigerGraphimport findspark

findspark.init()

import pyspark

import os

os.environ['PYSPARK_SUBMIT_ARGS'] = '--jars /opt/spark/user-jars/tg-jdbc-driver-1.2.jar pyspark-shell'

from pyspark.sql import SparkSession

from pyspark.sql.types import StructType, StructField

from pyspark.sql.types import StringType, IntegerType

spark = SparkSession.builder.appName("TigerGraphAnalysis").getOrCreate()jdbcDF = spark.read \

.format("jdbc") \

.option("driver", "com.tigergraph.jdbc.Driver") \

.option("url", "jdbc:tg:http://protomolecule.magichome:32176") \

.option("user", "tigergraph") \

.option("password", "Tigergraph") \

.option("graph", "Northwind") \

.option("dbtable", "vertex Orders") \

.option("limit", "10") \

.option("debug", "0") \

.load()

jdbcDF.show()helm upgrade -i neo4j . -f values.yaml \

--set core.service.type=LoadBalancer \

--set imageTag: "community" \

--set core.persistentVolume.size=20Gi \

--set core.persistentVolume.storageClass=longhorn \

--set readReplica.persistentVolume.size=20Gi \

--set readReplica.persistentVolume.storageClass=longhorn \

--namespace neo4j \

--create-namespaceQuite interesting - a simple running of a jar file gives a Web UI

- ref Quick Start

+

helm upgrade -i stardog . -f values.yaml \

--set image.tag="7.9.0-java11-preview" \

--set persistence.storageClass="longhorn",persistence.size="50Gi" \

--set securityContext.enabled=true \

--set securityContext.runAsUser=1000,securityContext.runAsGroup=1000,securityContext.fsGroup=1000 \

--set zookeeper.persistence.storageClass=longhorn- Install LSP server ref: GitHub - stardog-union/stardog-language-servers: Language Servers for Stardog…

npm install -g sparql-language-server - Set

sparql-default-base-urlto http://<server>:5820/<database>/query - Update LSP mode to use language server

(add-to-list 'lsp-language-id-configuration '(sparql-mode . ".sparql"))

- Load

ob-sparqlif not already included inorg-babel-load-languages(load-library "ob-sparql")

- Test using music database

SELECT ?s ?song WHERE { ?s :sings ?song . } LIMIT 5

- Detailed Instructions

- Download the TigerGraph Enterprise Free Edition for Linux: https://dl.tigergraph.com/download.html

- Direct download for 3.1.6

- 3.1.6 on erebor in

archive/Linux/software - On Ubuntu 18.04, install these packages prior to running Tigergraph install

sudo apt -y install net-tools ntp iptables-persistent - The

iptables-persistentpackage will prompt during install, preventing a scripted installation viapdsh

Run this standalone on the “m1” pod

export LICENSE=""

export HA=1

if [[ ! -f /home/tigergraph/tigergraph/data/installation_flag ]] && [[ $(ls -A /home/tigergraph/tigergraph/data/|grep -v lost|tail -1) ]]; then

echo 'found lagacy data, skip installation'

else

touch /home/tigergraph/tigergraph/data/installation_flag;

export PATH=/home/tigergraph/tigergraph/app/cmd:$PATH;

cp /tmp/init_tg_cfg /tmp/tg_cfg;

sed -i 's/\=/\: /g' /tmp/tg_cfg;

echo >> /tmp/tg_cfg;

jq -j '.System | "System.AppRoot: ",.AppRoot' ~/.tg.cfg >> /tmp/tg_cfg;

echo >> /tmp/tg_cfg;

if [[ -z "$LICENSE" ]]; then

jq -j '.System | "System.License: ",.License' ~/.tg.cfg >> /tmp/tg_cfg;

else

echo "System.License: ${LICENSE}" >> /tmp/tg_cfg;

fi;

gadmin config init -i /tmp/tg_cfg --file /tmp/tg.cfg --ha ${HA};

cp --remove-destination /tmp/tg.cfg ~/.tg.cfg;

gadmin init cluster -y --skip-stop;

rm /home/tigergraph/tigergraph/data/installation_flag;

fi- State “DONE” from “STARTED” [2021-06-27 Sun 15:45]

- State “STARTED” from “TODO” [2021-06-19 Sat 18:56]

CREATED: [2021-06-19 Sat 18:55]

- Generate startup script - very large image (~3GB)

docker run -d \ -p 14022:22 \ -p 3306:3306 \ -p 9000:9000 \ -p 14240:14240 \ --name tigergraph_server \ --ulimit nofile=1000000:1000000 \ -v ~/data:/home/tigergraph/mydata \ tigergraphbootcamp/tigergraph-image:latest - If docker instance was stopped, just run

docker start tigergraph_server

In computing, a graph database is a database that uses graph structures for semantic queries with nodes (AKA vertices), edges, and attributes to represent and store data

- Nodes / Vertices

- A vertex represents anything: a company, person, product etc

- Edges

- lines that connect vertices

- Attributes

- associated with vertices

- Hops

- distance between 2 vertices - the number of edges traversed

- Directionality (?)

- A single graph may have multiple vertex types (i.e. Person and Store), and multiple edge types (i.e. Friendship and Buys From)

- Friendship goes both ways - we call this an undirected edge

- Buys From would be a directed edge

- Graph visualizations typically show an arrowhead on the edges between vertices

+

+

- relational

- acid compliant

- great for transactions

- good for analytics

- standard SQL

- nosql

- unstructured - or … less structured

- limited ACID compliance

- identifying relationships is hard

- graph

- relationships

- AWS Neptune, Neo4j, Tigergraph

- no standard query language has emerged

- not well suited to traditional analytics

- speed

- native graph storage

- data compression

- MPP

- efficient distributed computation

- Scale-out +

- Deep-link analytics

- Queries can traverse 10 or more hops

- Graph Query Language

- GraphSQL or GSQL

- Multigraph

- multiple groups can share the same master database

- Visual Interface

- Developer, Cloud, Enterprise

- Run or start up the script generated under Pre-requisites section

sudo systemctl start docker # ~/bin/start-tigergraph.sh docker run tigergraph_server

- We will use GSQL to

- define a graph schema

- create a graph

- load data into the graph

- run graph queries

- define a graph schema: vertices and edges

- for data files to be loaded: drop them into

~/dataprovided by thedocker runvolume mount

ssh-add ~/.ssh/tigergraph_rsa(call-process-shell-command "ssh -o StrictHostKeyChecking=no -p 30022 tigergraph@protomolecule id")

(cd "/ssh:tigergraph@protomolecule:#30022:")

(shell "tigergraph-sh")time gadmin start allgsql

CREATE VERTEX person (PRIMARY_ID id INT, first_name STRING, last_name STRING, age INT, email STRING, gender STRING, phone STRING)CREATE UNDIRECTED EDGE friendship (FROM person, TO person, friendship_date DATETIME)CREATE GRAPH friends (person, friendship)- Shortcuts to create empty graph or a graph using all global edges and vertices

and edges

CREATE GRAPH GraphName() CREATE GRAPH GraphName(*)

USE GRAPH friends- When we create a graph, we are techincally creating a “local graph” TigerGraph already has one graph named Global. It can be leveraged by other graphs

- To exit the scope of your graph:

USE GRAPH global

CREATE LOADING JOB friends_data FOR GRAPH friends { DEFINE FILENAME people = "/home/tigergraph/mydata/people.csv"; DEFINE FILENAME friendship = "/home/tigergraph/mydata/friendship.csv"; LOAD people TO VERTEX person VALUES ($"id", $"first_name", $"last_name", $"age", $"email", $"gender", $"phone") USING header="true", separator=","; LOAD friendship TO EDGE friendship VALUES ($"from_id", $"to_id", $"friendship_date") USING header="true", separator=",";}- Pro tip: you can map using position or field name: ($1,

$2…) instead of ($ ”id”, $”first_name”…)

run loading job friends_data- take note of job id

show loading status friends.friends_data.file.m1.1624199308621

- default data storage path is

/tigergraph/data/gstore - when in doubt use

gstatusgraphto check the graph data storage path

DROPdrop vertex <vertex_name> drop edge <edge_name> drop job <job_name> drop graph <graph_name> -- delete all vertices/edges, jobs, queries and data! drop all

+

- interactive and saved queries

select * FROM person-(friendship)->person WHERE from_id == 456 CREATE QUERY close_friends(VERTEX <person>p) FOR GRAPH friends{Start = {p}; Result = SELECT tgt FROM Start:src -(friendship:e) -> person:tgt; PRINT Result;} INSTALL QUERY close_friends RUN QUERY close_friends(456)

- running saved queries using API

curl -X GET http://localhost:9000/query/friends/close_friends?p=456 - Built-in queries - available using the API

# --List all information about a specific vertex # curl -X GET "http://server:port/graph/<graph_name>/vertices/<vertex_name>/<vertex_id>" # --List all vertices originating from a specific vertex, traversing a specific edge type # curl -X GET "http://server:port/graph/<graph_name>/edges/<source> <vertex_name>/<source> <vertex_id>/<edge_name>/" # --List TigerGraph version information # curl -X GET "http://server:port/version" curl -s -X GET "http://localhost:9000/graph/friends/vertices/person/123" | jq -r '.' curl -s -X GET "http://localhost:9000/graph/friends/vertices/person/496"|jq -r '.'

- GSQL: return specific vertex information

SELECT * FROM person where email =="gmaslen3e@fastcompany.com"

- return multiple edges meeting conditions

- list all friendship edge connected to Gabriella

SELECT friendship_date FROM person-(friendship)->person WHERE from_id == 123

- list all friendship edge connected to Gabriella

- Named query syntax

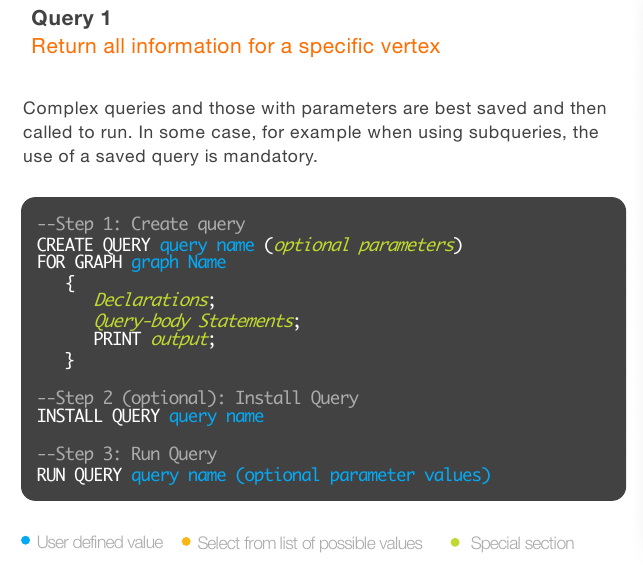

- Query 1: Return all info for a specific vertex

CREATE QUERY personal_info (STRING email) FOR GRAPH friends { all_people = {person.*}; info = SELECT s FROM all_people:s WHERE s.email==email; PRINT info; } INSTALL QUERY personal_info RUN QUERY personal_info("apeekeb6@chronoengine.com") RUN QUERY personal_info("gmaslen3e@fastcompany.com") - Query 2: list all women aged 20-30 who are friends with person X

CREATE QUERY female_20s_friends (VERTEX <person> p) FOR GRAPH friends { start = {p}; result = SELECT tgt FROM start:src -(friendship:e)- person:tgt WHERE tgt.gender == "Female" AND tgt.age >= 20 AND tgt.age <= 30; PRINT result;} INSTALL QUERY female_20s_friends RUN QUERY female_20s_friends(123)

- http://localhost:14240/

- create a professional social network (more complex graph than previous)

- Subtle differences in terminology

GraphStudio GSQL Create Graph CREATE GRAPH Design Schema CREATE VERTEX / CREATE EDGE Map Data to Graph CREATE LOADING JOB Load Data RUN LOADING JOB - Do all the following steps

- Start TigerGraph

- Open GraphStudio at http://localhost:14240/

- Create a Graph a. Click Global View, then Create a graph. Set the name to LinkedUp

- Create Vertices (Local)

- Vertex type name:

Account- Primary id: id

- Primary id type: INT

- Color: #FF6D00

- Icon: person

- Attributes (attribute type is STRING unless indicated otherwise)

- FirstName

- LastName

- Gender

- JobTitle

- Salary (DOUBLE)

- Recruitable (BOOL)

- Vertex type name:

Company- Primary id: id

- Primary id type: INT

- Color: #C1D82F

- Icon: company

- Attributes (attribute type is STRING unless indicated otherwise)

- name

- Vertex type name:

City- Primary id: id

- Primary id type: INT

- Color: #F8B717

- Icon: upload and use city icon

- Attributes (attribute type is STRING unless indicated otherwise)

- Name

- Vertex type name:

State- Primary id: id

- Primary id type: INT

- Color: #FF3E02

- Icon: upload and use state icon

- Attributes (attribute type is STRING unless indicated otherwise)

- name

- Vertex type name:

Industry- Primary id: id

- Primary id type: INT

- Color: #6871FF

- Icon: upload and use industry icon

- Attributes (attribute type is STRING unless indicated otherwise)

- Name

- Vertex type name:

- Create Edges (local)

- Edge type name:

connected_to- From -> To: Account -> Account

- Directed: No

- Color: #FF6D00

- Edge type name:

works_in- From -> To: Account -> Company

- Directed: Yes

- Color: #C1D82F

- Edge type name:

in_industry- From -> To: Company -> Industry

- Directed: Yes

- Color: #6871FF

- Edge type name:

located_in- From -> To: Company -> City

- Directed: Yes

- Color: #F8B717

- Edge type name:

is_in- From -> To: City -> State

- Directed: Yes

- Color: #FF3E02

- Edge type name:

- Download and save CSV files from this video.

- Into your

datafolder. You may create a subfolder namedLinkedUpto keep your files organized.

- Into your

- Map Data to Graph

- In GraphStudio, select the

Map Data to Graphlink from the menu. - Click Add data file, browse to your LinkedUp folder and upload all 10 files.

- Select the

account.csvfile from theFiles on Serversection, and check theHas Headercheckbox, then clickAdd. - Click

map data file to vertex or edge, then select theaccount.csvfile icon, and then theaccountvertex. This will result in the mapping pane being displayed on the right.- Map the fields by clicking the field name in the source table (which is the CSV file), then selecting the field to map in the target (which is a vertex or edge).

- Repeat the file mapping process for the remaining 9 vertices and edges

- Click

publish data mapping.

- In GraphStudio, select the

- Load Data

- In GraphStudio, select

Load Datafrom the menu. - Click the

Start/Resume Loadingbutton. - Click

Confirmto start loading - Wait until all files have the

FINISHEDbadge.

- In GraphStudio, select

transcript: graph/tigergraph/udemy/documents/GraphStudio-exploregraph.org

- THE QUERY

// 1. Return all companies to which I am connected through my direct neighbours. Only include companies in city New York City and Industry Aerospace. // Vertex to test with: 291 CREATE QUERY company_network(VERTEX <account> p) FOR GRAPH linkedup { //Define the Start point as the person in the parameter Start = {p}; // Get all the contacts to said person contact_list= SELECT tgt_p FROM Start:src - (connected_to) - account:tgt_p ; // Get all the companies in which contacts work contacts_companies= SELECT c FROM contact_list:src - (works_in) -> company:c; // Get all the companies with location in New York companies_new_york= SELECT src FROM contacts_companies:src - (located_in) - city:c WHERE c.city_name=="New York"; // Get all the companies in Aerospace Industry companies_new_york_industry = SELECT src FROM companies_new_york:src - (in_industry) - industry:i WHERE i.industry_name=="Aerospace"; //Return all the companies PRINT companies_new_york_industry; }

- Question: which companies are operating in the Aerospace industry, have an offfice based in New York and employ a contact of (person)?

+

- Identify the most influential member in a group and the community around them

account

CREATE VERTEX account (

PRIMARY_ID account_id INT,

user_name STRING,

first_name STRING,

last_name STRING,

email STRING,

gender STRING,

age INT)

WITH PRIMARY_ID_AS_ATTRIBUTE = "true"

hobby

CREATE VERTEX hobby (

PRIMARY_ID hobby_id INT,

description STRING)

WITH PRIMARY_ID_AS_ATTRIBUTE = "true"

CREATE DIRECTED EDGE follows (FROM account, TO account)

CREATE DIRECTED EDGE referred_by (FROM account, TO account, referral_date DATETIME)

CREATE DIRECTED EDGE interested_in (FROM account, TO hobby)

influencers

CREATE GRAPH influencers(account, hobby, follows, referred_by, interested_in)

USE GRAPH influencers

BEGIN

CREATE LOADING JOB load_influencers FOR GRAPH influencers {

DEFINE FILENAME person_data="/home/tigergraph/mydata/insta-follow/users.csv";

DEFINE FILENAME followers_data="/home/tigergraph/mydata/insta-follow/followers.csv";

DEFINE FILENAME referred_data="/home/tigergraph/mydata/insta-follow/referrals.csv";

DEFINE FILENAME interests="/home/tigergraph/mydata/insta-follow/interests.csv";

DEFINE FILENAME people_interests="/home/tigergraph/mydata/insta-follow/people_interests.csv";

LOAD person_data TO VERTEX account VALUES ($"id", $"user_name",

$"first_name", $"last_name", $"email", $"gender",$"age")

USING header="true", separator=",";

LOAD interests TO VERTEX hobby VALUES ($"id", $"interest")

USING header="true", separator=",";

LOAD followers_data TO EDGE follows VALUES ($"from_id", $"to_id")

USING header="true", separator=",";

LOAD referred_data TO EDGE referred_by VALUES ($"from_id", $"to_id",

$"referral_date")

USING header="true", separator=",";

LOAD people_interests TO EDGE interested_in VALUES ($"id", $"int_id")

USING header="true", separator=",";

}

END

USE GRAPH influencers

RUN LOADING JOB load_influencers

- Sample queries for this module

graph/tigergraph/udemy/documents/InstaFollow+Sample+Queries.sql

- Search a graph for all occurences of a

CREATE VERTEX customer (

PRIMARY_ID customer_id INT,

first_name STRING,

last_name STRING,

user_name STRING,

email STRING,

gender STRING,

date_of_birth DATETIME)

WITH primary_id_as_attribute="true"

CREATE VERTEX product (

PRIMARY_ID product_id INT,

product_name STRING,

product_category STRING)

WITH primary_id_as_attribute="true"

CREATE DIRECTED EDGE has_purchased (FROM customer, TO product, purchase_date DATETIME)CREATE GRAPH ecommerce( customer, product, has_purchased)USE GRAPH ecommerce

BEGIN

CREATE LOADING JOB load_ecommerce FOR GRAPH ecommerce {

DEFINE FILENAME customer_data = "/home/tigergraph/mydata/ecommerce/customer.csv";

DEFINE FILENAME product_data = "/home/tigergraph/mydata/ecommerce/product.csv";

DEFINE FILENAME purchase_data = "/home/tigergraph/mydata/ecommerce/purchases/";

LOAD customer_data TO VERTEX customer

VALUES (

$"customer_id",

$"first_name",

$"last_name",

$"user_name",

$"email",

$"gender",

$"date_of_birth")

USING header="true", separator=",";

LOAD product_data TO VERTEX product

VALUES (

$"product_id",

$"product_name",

$"product_category")

USING header="true", separator=",";

LOAD purchase_data TO EDGE has_purchased

VALUES (

$"customer_id",

$"product_id",

$"purchase_date")

USING header="true", separator=",";

}

ENDUSE GRAPH ecommerce

RUN LOADING JOB load_ecommerce- GraphStudio was used in the course

- Query 1: Return Customers Adhering to Given Pattern

// Products: 1,2,3,4,5,6

use graph ecommerce /* 1. Return Customers Adhering to Given Pattern */ /* Products: 1,2,3,4,5,6 */ CREATE QUERY find_customers_with_pattern(Vertex<product>p_1, Vertex<product>p_2, Vertex<product>p_3, Vertex<product> p_4, Vertex<product> p_5, Vertex<product>p_6) FOR GRAPH ecommerce SYNTAX v2 { /* Define the Start point as all customers in the Shop */ Start = {customer.*}; /* Get all the customers exhibiting the pattern */ customers_with_pattern= SELECT src FROM Start:src - (has_purchased>:h) - product:p, Start:src - (has_purchased>:h2) - product:p2, Start:src - (has_purchased>:h3) - product:p3, Start:src - (has_purchased>:h4) - product:p4, Start:src - (has_purchased>:h5) - product:p5, Start:src - (has_purchased>:h6) - product:p6 WHERE h.purchase_date< h2.purchase_date AND h2.purchase_date< h3.purchase_date AND h3.purchase_date< h4.purchase_date AND h4.purchase_date < h5.purchase_date AND h5.purchase_date< h6.purchase_date AND p==p_1 AND p2==p_2 AND p3==p_3 AND p4==p_4 AND p5==p_5 AND p6==p_6; /* Return all the customers */ PRINT customers_with_pattern; }

- Query 2: Return customers adhering to start of given pattern

use graph ecommerce /* 2. Return Customers Adhering to Start of Given Pattern */ /* Products: 1,2,3 */ /* End_Pattern: 4,5,6 */ CREATE QUERY find_customers_with_first_3_pattern(Vertex<product>p_1, Vertex<product>p_2, Vertex<product>p_3, SET<int> end_pattern) FOR GRAPH ecommerce SYNTAX v2 { SetAccum<Vertex<customer>> @@customers_with_pattern_b; SetAccum<Vertex<customer>> @@customers_with_pattern_e; SetAccum<Vertex<customer>> @@final_list; /* Define the Start point as all customers in the Shop */ Start = {customer.*}; /* Get all the customers exhibiting the beginning pattern */ customers_with_pattern_b= SELECT src FROM Start:src - (has_purchased>:h) - product:p, Start:src - (has_purchased>:h2) - product:p2, Start:src - (has_purchased>:h3) - product:p3 WHERE h.purchase_date< h2.purchase_date AND h2.purchase_date< h3.purchase_date AND p==p_1 AND p2==p_2 AND p3==p_3 ACCUM @@customers_with_pattern_b+=src ; /* Get all the customers exhibiting the end of the pattern */ customers_with_pattern_e= SELECT src FROM Start:src - (has_purchased>:h) - product:p WHERE p.product_id in end_pattern ACCUM @@customers_with_pattern_e+=src ; /* Get all the customers with the beginning of the pattern but no product on the end of the pattern */ @@final_list=@@customers_with_pattern_b MINUS @@customers_with_pattern_e; /* Return all the customers */ PRINT @@final_list; }

- Traverse a graph via three or more hops and then analyze the data encountered in that traversal

CREATE VERTEX user_account (

PRIMARY_ID account_id INT,

user_name STRING,

member_since DATETIME,

last_login DATETIME)

WITH primary_id_as_attribute="true"

CREATE VERTEX movie (

PRIMARY_ID movie_id INT,

title_type STRING,

primary_title STRING,

original_title STRING,

is_adult BOOL,

release_year INT,

runtime_minutes INT,

genres SET<STRING>)

WITH primary_id_as_attribute="true"

CREATE VERTEX cluster (PRIMARY_ID cluster_id INT, description STRING) WITH primary_id_as_attribute="true"

CREATE DIRECTED EDGE watched (FROM user_account, TO movie)

CREATE DIRECTED EDGE recommended_to (FROM movie, TO user_account)

CREATE DIRECTED EDGE belongs_to (FROM user_account, TO cluster)CREATE GRAPH streaming (user_account, movie, cluster, watched, recommended_to, belongs_to)USE GRAPH streaming

BEGIN

CREATE LOADING JOB load_streaming FOR GRAPH streaming {

DEFINE FILENAME user_data="/home/tigergraph/mydata/streaming/user.csv";

DEFINE FILENAME movie_data="/home/tigergraph/mydata/streaming/movies.csv";

DEFINE FILENAME clusters_data="/home/tigergraph/mydata/streaming/clusters.csv";

DEFINE FILENAME watched="/home/tigergraph/mydata/streaming/movies_watched/";

DEFINE FILENAME recommended_to="/home/tigergraph/mydata/streaming/movies_recommended/";

DEFINE FILENAME belongs_to="/home/tigergraph/mydata/streaming/users_clusters/";

LOAD user_data TO VERTEX user_account

VALUES (

$"id",

$"user_name",

$"member_since",

$"last_login")

USING header="true", separator=",";

LOAD movie_data TO VERTEX movie

VALUES (

$"id",

$"title_type",

$"primary_title",

$"original_title",

$"is_adult",

$"release_year",

$"runtime_minutes",

SPLIT($"genres","|"))

USING header="true", separator=",";

LOAD clusters_data TO VERTEX cluster

VALUES (

$"id",

$"cluster")

USING header="true", separator=",";

LOAD watched TO EDGE watched

VALUES (

$"user_id",

$"movie_id")

USING header="true", separator=",";

LOAD recommended_to TO EDGE recommended_to

VALUES (

$"movie_id",

$"user_id")

USING header="true",separator=",";

LOAD belongs_to TO EDGE belongs_to

VALUES (

$"user_id",

$"cluster_id")

USING header="true", separator=",";

}

ENDuse graph streaming

run loading job load_streaming+

- 95%

- Best global defaults Tangle to ~/.logseq/config/config.edn

;; This global config file is used by all graphs.

;; Your graph's logseq/config.edn overrides config keys in this file

;; except for maps which are merged.

;; As an example of merging, the following global and local configs:

;; {:shortcuts {:ui/toggle-theme "t z"}}

;; {:shortcuts {:ui/toggle-brackets "t b"}}

;;

;; would result in the final config:

;; {:shortcuts {:ui/toggle-theme "t z"

;; :ui/toggle-brackets "t b"}}

{

;; Currently, we support either "Markdown" or "Org".

:preferred-format "Org"

;; Preferred workflow style.

;; Value is either ":now" for NOW/LATER style,

;; or ":todo" for TODO/DOING style.

:preferred-workflow :todo

:journal/page-title-format "yyyy-MM-dd EEE"

;; ignore #+keyword: for parsing page references in orgmode

:ignored-page-references-keywords #{"author" "startup"}

:rich-property-values? true

;; Enable all your properties to have corresponding pages

:property-pages/enabled? false

;; Set this to true will convert

;; `[[Grant Ideas]]` to `[[file:./grant_ideas.org][Grant Ideas]]` for org-mode

;; For more, see https://github.com/logseq/logseq/issues/672

:org-mode/insert-file-link? true

:shortcuts {:editor/open-file-in-default-app "ctrl+o"}

}When you just can’t pry data from a client.

from faker_music import MusicProvider

# fake = Faker()

fake.add_provider(MusicProvider)

fake.music_genre()require 'csv'

require 'faker'

AEROSPACE_TERMS = [

"aeroacoustics", "aerobraking", "aeroelsticity", "aerospace architecture", "aerospace bearing",

"aerospace materials", "flight control systems", "signal acquisition", "antimatter rocket",

"arcject rocket", "astrodynamics", "beam powered propulsion", "bi-elliptic transfer",

"booster", "cabin pressurization", "centrifugal compressor", "collimated beam",

"compressor map", "constant speed drive", "cylinder stress", "digital datcom",

"dual mode propulsion rocket", "electrostatic ion thruster", "expander cycle",

"field emission electric propulsion", "helicopter flight control systems",

"aircraft flight control systems", "gas generator cycle", "gps", "gravitational slingshot",

"hall effect thruster", "instrument landing system", "interplanetary transport network",

"jet engine", "lander", "legrangian mechanics", "lithobraking", "magsail", "membrane mirror",

"monopropellant rocket", "multistage rocket", "orbit phasing", "orbital station keeping",

"pogo oscillation", "radar", "railgun", "remote manipulator system", "reaction control system",

"resistojet rocket", "reusable launch system", "satellite", "scramjet", "skyhook", "solar panel",

"solar thermal rocket", "space activity suit", "space elevator", "space fountain", "space plane",