Numpy & PyTorch vectorized implementation of various differential entropy estimators for ML & DL.

Information theory-inspired machine learning methods are gaining increasing interest, with estimation of the entropy as well as mutual information of random variables serving as their cornerstone.

To estimate the Shannon entropy of a discrete random variable, given its probability distribution, one can simply apply the definition of Shannon entropy

But when it comes to estimate differential entropy,

In such cases, we could make an assumption of the unknown distribution and expect the assumed distribution to have a closed-form expression for its entropy calculation, e.g., multivariate Gaussian.

Kernel Density Estimation(KDE) is one of commonly used methods to approximate probability density of a distribution, However, while a single kernel may have a closed-form expression for its entropy, the mixture of these kernels typically does not.

This project offers several entropy estimators for these mixture distributions, (mainly focus on mixture of Gaussian and mixture of uniform), implemented with both Numpy and PyTorch.

Most of the estimators are differentiable, making them suitable for optimization purposes.

Switch to your desired virtual/conda env with python >= 3.8:

- To install with numpy dependency only:

pip install mixentropy

- To install with both numpy and pytorch dependency:

pip install mixentropy[pth]

- (Optional) In order to run experiments, you may also need:

pip install matplotlib scipy

c: Weights of each components, which should sum up to one;mu: Positions of each component.- For Gaussian, mu indicates mean of each component;

- For uniform, mu indicates center position of each component.

sigma: "Spread" of each component.- For Gaussian, sigma is a stack of variance (or covariance matrix for multivariate Gaussian) of each component,

- For uniform, sigma is a stack of diagonal matrices, with each diagonal entry indicates interval length of a component on that dimension.

elem: component type, could be"gauss"or"uniform", for Gaussian and uniform respectively.

Some estimator may take extra arguments:

- Monte Carlo estimator needs

n_samplesto indicate how many datapoints will be sampled to estimate entropy. - Pairwise distance estimator needs

distto indicate which distance function to use (could be"kl"or"chernoff").

import numpy as np

import torch

from mixentropy.np.monte_carlo_estimator import estimate as h_mc_np

from mixentropy.np.kde_estimator import estimate as h_kde_np

from mixentropy.np.elk_estimator import estimate as h_elk_np

from mixentropy.np.pairwise_dist_estimator import estimate as h_pairwise_np

from mixentropy.pth.monte_carlo_estimator import estimate as h_mc_pt

from mixentropy.pth.kde_estimator import estimate as h_kde_pt

from mixentropy.pth.elk_estimator import estimate as h_elk_pt

from mixentropy.pth.pairwise_dist_estimator import estimate as h_pairwise_pt

# generate some random fake data

k = 100 # number of components

d = 10 # number of dimensions of each component

b = 4 # batch size

c = np.ones(k) / k # [k,], weights for k components

mu = np.random.normal(0., 1, size=(k, d)) # [k, d], n components, each has d dimensions

sigma = np.stack([np.eye(d)] * k) # [k, d, d], k components, each has a d*d covariance matrix

# estimate entropy of mixture of gaussian (numpy version)

ent_mc_np = h_mc_np(c, mu, sigma, n_samples=3000, elem='gauss')

ent_kde_g_np = h_kde_np(c, mu, sigma, elem='gauss')

ent_elk_g_np = h_elk_np(c, mu, sigma, elem='gauss')

ent_pd_kl_g_np = h_pairwise_np(c, mu, sigma, elem='gauss', dist='kl')

ent_pd_cf_g_np = h_pairwise_np(c, mu, sigma, elem='gauss', dist='chernoff')

# torch version to estimate on mixture of uniform

# notice that a batch axis is expanded to the left for all input

c_t = torch.tensor(np.stack([c] * b)) # [n,] -> [b, n]

mu_t = torch.tensor(np.stack([mu] * b)) # [k, d] -> [b, k, d]

sigma_t = torch.tensor(np.stack([sigma] * b)) # [k, d, d] -> [b, k, d, d]

ent_mc_u_pt = h_mc_pt(c_t, mu_t, sigma_t, n_samples=30000, elem='uniform')

ent_kde_u_pt = h_kde_pt(c_t, mu_t, sigma_t, elem='uniform')

ent_elk_u_pt = h_elk_pt(c_t, mu_t, sigma_t, elem='uniform')

ent_pd_kl_u_pt = h_pairwise_pt(c_t, mu_t, sigma_t, elem='uniform', dist='kl')

ent_pd_cf_u_pt = h_pairwise_pt(c_t, mu_t, sigma_t, elem='uniform', dist='chernoff')LogDet estimators take datapoints as input directly.

import numpy as np

import torch

from mixentropy.np.logdet_estimator import logdet_entropy as ld_np, logdet_joint_entropy as ldj_np

from mixentropy.pth.logdet_estimator import logdet_entropy as ld_pt, logdet_joint_entropy as ldj_pt

# make some random fake data

d = 10 # number of dimensions

k = 3000 # number of datapoints

b = 4 # batch size

beta = 1. # hyperparameter

mu1 = np.random.normal(0, 1, size=d) # [d,]

mu2 = np.random.normal(0, 1, size=d) # [d,]

sigma1 = (sigma1 := np.random.normal(0, 1, size=(d, d))) @ sigma1.T # [d, d]

sigma2 = (sigma2 := np.random.normal(0, 1, size=(d, d))) @ sigma2.T # [d, d]

x1 = np.random.multivariate_normal(mean=mu1, cov=sigma1, size=k) # [k, d]

x2 = np.random.multivariate_normal(mean=mu2, cov=sigma2, size=k) # [k, d]

x1x2 = np.stack([x1, x2]) # [2, k, d]

# estimate entropy with LogDet estimator (Numpy version)

ent_logdet1_np = ld_np(x1, beta)

ent_logdet2_np = ld_np(x2, beta)

joint_ent_logdet_np = ldj_np(x1x2, beta) # joint entropy of x1 and x2

# Pytorch version

x1_t = torch.tensor(np.stack([x1] * b)) # [b, k, d]

x2_t = torch.tensor(np.stack([x2] * b)) # [b, k, d]

x1x2_t = torch.tensor(np.stack([x1x2] * b)) # [b, 2, k, d]

ent_logdet1_pt = ld_pt(x1_t, beta)

ent_logdet2_pt = ld_pt(x2_t, beta)

joint_ent_logdet_pt = ldj_pt(x1x2_t, beta)NOTE: All estimators in Pytorch implementation take inputs with an extra batch dimension on the left.

NOTE: For simplicity and performance, estimators DO NOT validate theri input, e.g., check if

sigmais positive semi-definite or ifcsum up to one.

| Estimators | Distributions Supported | Differentiable |

|---|---|---|

| MonteCarlo | Mixture of Gaussian Mixture of Uniform |

❌ ❌ |

| KDE | Mixture of Gaussian Mixture of Uniform |

✅ ❌ |

| ELK | Mixture of Gaussian Mixture of Uniform |

✅ ✅ |

| PairwiseDist (KL) | Mixture of Gaussian Mixture of Uniform |

✅ ✅ |

| PairwiseDist (Bhat) | Mixture of Gaussian Mixture of Uniform |

✅ ✅ |

| LogDet | Multivariate Gaussian or Any other |

✅ ✅ |

Differential entropy is defined[4] as:

Given parameters of the mixture and a bunch of datapoints sampled from that mixture, one can compute the the log probability of each datapoint

PDF of Kernel Densiti Estimation (KDE)[5] is defined as:

where:

-

$n$ is the number of elements. -

$h$ is the bandwidth. -

$d$ is number of dimension/variate. -

$K$ is kernel function.

KDE entropy estimator[1] using the probabilities of components' mean

For

where:

-

$p_j(\cdot)$ is the pdf of$j_{th}$ element. -

$\mu_i$ is the mean of$i_{th}$ element.

From Jensen's Inequality:

we have:

where:

is a speicial case of probability product kernel[2] when

Thus we derived this entropy lower bound

When kernels

where:

When kernels

where:

-

$V_i$ is the volume of the hyper rectangle of$p_i$ -

$V_{i\cap j}$ is the volume of overlapping part between$p_i$ and$p_j$

Estimate entropy based on pairwise distance/divergence between components of the mixture[3].

With different distance function

where:

-

$X$ is a$d$ dimensional continuous random variable. -

$C$ is a discrete random variable, each$c_i$ indicates the weight of$i_{th}$ element, thus we have$c_i \geq 0, \sum_{i}^{n}c_i=1$ . -

$H(X|C)=\sum_i^n c_iH(p_i)$ , that is the weighted average of all components' entropy. -

$D(\cdot || \cdot)$ is the distance function.

When use Kullback-Leibler (KL) divergence[10] as the distance function, estimation is an upper bound on the entropy.

When use Bhattacharyya distance[9] as the ditance function, estimation is a lower bound on the entropy.

Logarithm Determinant (LogDet) Entropy Estimator[6]

Shannon differential entropy of multivariate Gaussian distribution can be derived as[7]:

Since:

where

We have:

To estimate mutual information between the input

In order to bypass deterministic issue, others purposed to add independent Gaussian noise

The covariance matrix of random variable

To eliminate artificial bias introduced by added noise, the original covariance matrix

- where

$X=[x_1, x_2,\cdots,x_n]$ represents$n$ samples of the random variable to be estimated, each sample$x$ in$X$ have$d$ dimensions, i.e.$X\in\mathbb{R}^{n\times d}$ .

To estimate joint entropy of

where each entry

It is easy to see that the size of covariance matrix

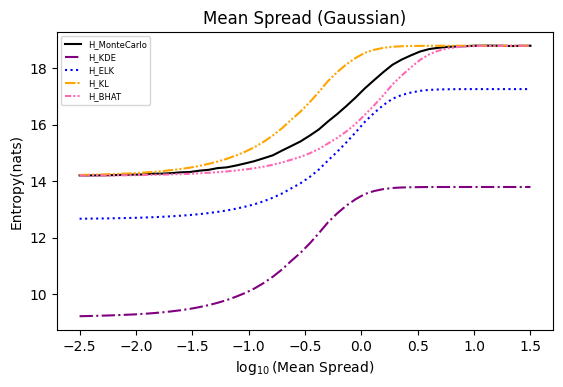

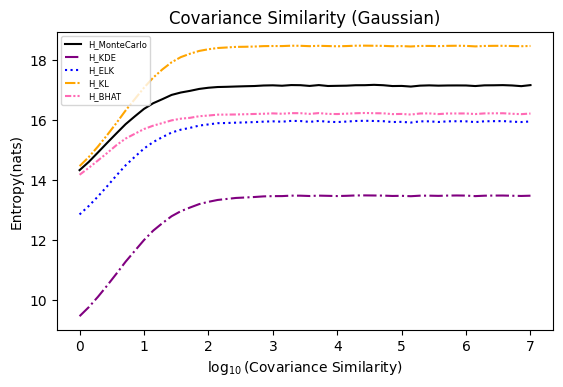

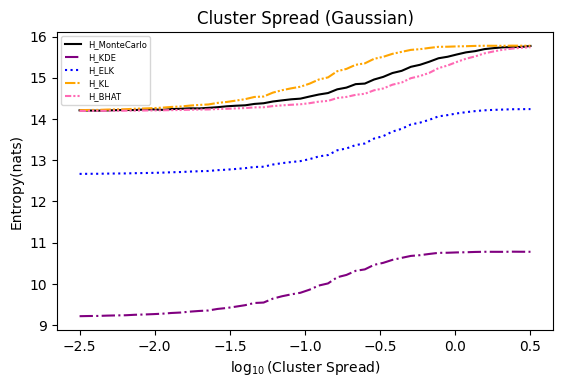

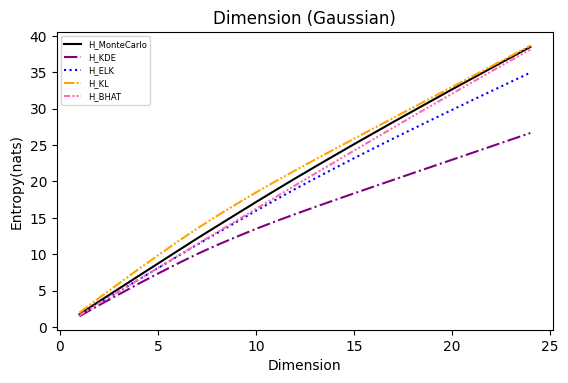

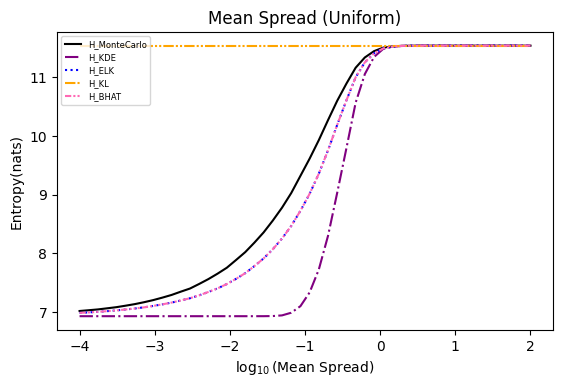

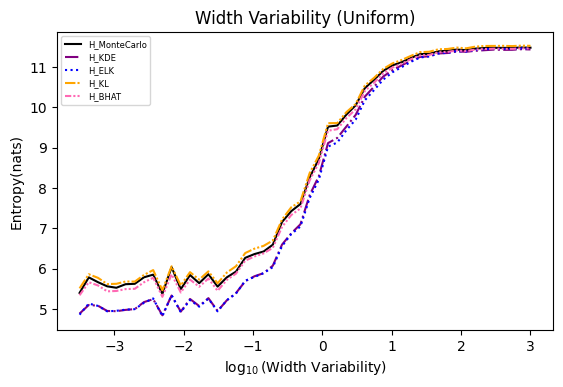

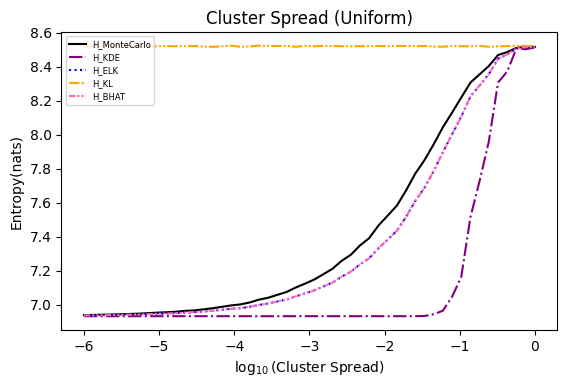

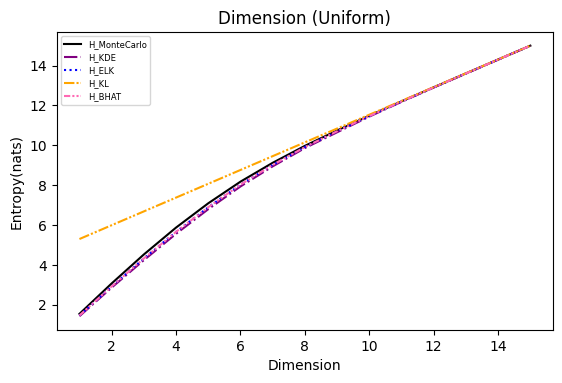

Numerical experiments are performed as described in [3], and following are the results.

NOTE: It is noted in [3] that the x axis of these experiments represents Natural logarithm of the hyperparameter

$\ln(\cdot)$ , while in the experiments conducted here, the result plot aligns with those presented in the paper using$\log_{10}(\cdot)$ .Also, please refer to [8] for the official implementation in Golang.

[1] Joe, H. (1989). Estimation of entropy and other functionals of a multivariate density. Annals of the Institute of Statistical Mathematics, 41, 683-697.

[2] Jebara, T., Kondor, R., & Howard, A. (2004). Probability product kernels. The Journal of Machine Learning Research, 5, 819-844.

[3] Kolchinsky, A., & Tracey, B. D. (2017). Estimating mixture entropy with pairwise distances. Entropy, 19(7), 361.

[4] https://en.wikipedia.org/wiki/Differential_entropy

[5] https://en.wikipedia.org/wiki/Kernel_density_estimation

[6] Zhouyin, Z., & Liu, D. (2021). Understanding neural networks with logarithm determinant entropy estimator. arXiv preprint arXiv:2105.03705.

[7] Cover, T. M. (1999). Elements of information theory. John Wiley & Sons.

[8] https://github.com/btracey/mixent/tree/master

[9] https://en.wikipedia.org/wiki/Bhattacharyya_distance

[10] https://en.wikipedia.org/wiki/Kullback%E2%80%93Leibler_divergence