The practical part of the course "Algorithmische Bioinformatik" (Algorithmic Bioinformatics) at the Freie Universität Berlin.

- Introduction

- Tools we used

- Day1: Quality Control with FastQC

- Day2: Mapping Workflow

- Day3: Variant Calling

- Day4: SNV Pathological Analysis

- Additional Information

- Supervisors

- Contributors

In this practice we've learned how to ...

- BWA (Burrows-Wheeler Aligner)

- SAMtools

- BEDTools

- IGV (Integrative Genomics Viewer)

Since the FastQC was already installed on the server, we could run it using the following command, applying the fastq function on 2 input files, in particularly the parts of them with fast.gz extension:

/group/albi-praktikum2023/software/fastqc -o /group/albi-praktikum2023/analysis/gruppe_3/aufgabe01 --noextract -f fastq /group/albi-praktikum2023/data/1000-Genome-Project/gruppe3/* .fastq.gzSince the reference genome was already indexed, we could start align the sequencing reads to the reference genome using BWA MEM. The following command was used to align small subset of reads:

/group/albi-praktikum2023/software/bwa-mem2 mem -t 50 /group/albi-praktikum2023/data/NCBI-reference/GRCh38.fna /group/albi-praktikum2023/analysis/gruppe_3/aufgabe02/test_gruppe3_1.fastq.gz > /group/albi-praktikum2023/analysis/gruppe_3/aufgabe02/test_output.samConvert the SAM file to BAM, sort, and index the aligned reads:

samtools view -S -b aligned_reads.sam > aligned_reads.bam

samtools sort aligned_reads.bam -o aligned_reads_sorted.bam

samtools index aligned_reads_sorted.bamThus, the filestructure of the folder should look like this:

hrad04@compute04:/group/albi-praktikum2023/analysis/gruppe_3/aufgabe02$ ls -a

. .. test_gruppe3_1.fastq.gz test_output.bam test_output.sam test_output_sorted.bam test_output_sorted.bam.baiAssess the quality of the mapping using SAMtools:

samtools flagstat -o json aligned_reads_sorted.bamThis was the output of the command:

{

"QC-passed reads": {

"total": 733319951,

"primary": 727401006,

"secondary": 0,

"supplementary": 5918945,

"duplicates": 0,

"primary duplicates": 0,

"mapped": 731726121,

"mapped %": 99.78,

"primary mapped": 725807176,

"primary mapped %": 99.78,

"paired in sequencing": 727401006,

"read1": 363700503,

"read2": 363700503,

"properly paired": 709068384,

"properly paired %": 97.48,

"with itself and mate mapped": 724443610,

"singletons": 1363566,

"singletons %": 0.19,

"with mate mapped to a different chr": 11264042,

"with mate mapped to a different chr (mapQ >= 5)": 5927328

},

"QC-failed reads": {

"total": 0,

"primary": 0,

"secondary": 0,

"supplementary": 0,

"duplicates": 0,

"primary duplicates": 0,

"mapped": 0,

"mapped %": null,

"primary mapped": 0,

"primary mapped %": null,

"paired in sequencing": 0,

"read1": 0,

"read2": 0,

"properly paired": 0,

"properly paired %": null,

"with itself and mate mapped": 0,

"singletons": 0,

"singletons %": null,

"with mate mapped to a different chr": 0,

"with mate mapped to a different chr (mapQ >= 5)": 0

}

}- Launch IGV.

- Import your sorted and indexed BAM file (

aligned_reads_sorted.bam).

What we've seen in IGV:

We solved this task in two different ways:

samtools depth -a aligned_reads_sorted.bam | awk '{cov[$1]+=$3; count[$1]++} END {for (chr in cov) print chr, cov[chr]/count[chr]}' > coverage_per_chromosome.txtbedtools genomecov -ibam aligned_reads_sorted.bam -g reference_genome.fasta.fai > genome_coverage.txtThe results can be seen in the files mapping/samtools_cov_per_chr.txt and mapping/bedtools_cov_per_chr.txt.

The task was to call variants from the aligned reads (the .bam file).

Before calling variants, we had to add read groups (RG) infromation to the .bam file. We used the AddOrReplaceReadGroups from the GATK Picard tools:

/group/albi-praktikum2023/software/picard.jar AddOrReplaceReadGroups \

I=/group/albi-praktikum2023/analysis/gruppe_3/gruppe3.bam \

O=/group/albi-praktikum2023/analysis/gruppe_3/aufgabe03/pickard_output.bam \

RGID=4 \

RGLB=lib1 \

RGPL=illumina \

RGPU=unit1 \

RGSM=20To harness the power of parallel computing, we split the .bam file into smaller files, each containing reads from a single chromosome.

For this purpose we wrote following c skript, which was based on the followig command:

samtools view -b input.bam chrN > chrN.bam#include <pthread.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

// Adjust these values based on your actual requirements

#define NUM_THREADS 24 // Example: Human chromosomes 1-22, X, and Y

char *chromosomes[] = {"chr1", "chr2", "chr3", "chr4", "chr5", "chr6", "chr7", "chr8", "chr9", "chr10",

"chr11", "chr12", "chr13", "chr14", "chr15", "chr16", "chr17", "chr18", "chr19",

"chr20", "chr21", "chr22", "chrX", "chrY"}; // List of chromosomes

typedef struct {

int thread_id;

char chromosome[10];

} thread_data;

void *splitBamFile(void *threadarg) {

thread_data *my_data;

my_data = (thread_data *) threadarg;

int tid = my_data->thread_id;

char *chr = my_data->chromosome;

printf("Thread %d splitting BAM for %s\n", tid, chr);

char command[256];

sprintf(command, "/group/albi-praktikum2023/software/samtools view -b /group/albi-praktikum2023/analysis/gruppe_3/aufgabe03/pickard_output.bam %s > %s.bam", chr, chr);

system(command);

printf("Thread %d finished splitting BAM for %s\n", tid, chr);

pthread_exit(NULL);

}

int main () {

pthread_t threads[NUM_THREADS];

thread_data thread_data_array[NUM_THREADS];

int rc;

long t;

for(t = 0; t < NUM_THREADS; t++) {

printf("In main: creating thread %ld for %s\n", t, chromosomes[t]);

thread_data_array[t].thread_id = t;

strcpy(thread_data_array[t].chromosome, chromosomes[t]);

rc = pthread_create(&threads[t], NULL, splitBamFile, (void *)&thread_data_array[t]);

if (rc) {

printf("ERROR; return code from pthread_create() is %d\n", rc);

exit(-1);

}

}

// Wait for all threads to complete

for(t = 0; t < NUM_THREADS; t++) {

pthread_join(threads[t], NULL);

}

printf("Main: BAM splitting completed. Exiting.\n");

pthread_exit(NULL);

}We used the GATK to perform Variant Calling. The following command were crusial for this task:

MarkDuplicatesto mark duplicates in the.bamfile.

gatk MarkDuplicates \

-I chrN.bam \

-O chrN_marked_duplicates.bam \

-M chrN_marked_dup_metrics.txtHaplotypeCallerto call variants.

gatk HaplotypeCaller \

-R reference.fasta \

-I chrN_marked_duplicates.bam \

-O chrN_raw_variants.vcfVariantFiltrationto filter the variants.

gatk VariantFiltration \

-R reference.fasta \

-V chrN_raw_variants.vcf \

-O chrN_filtered_snps.vcf \

--filter-expression "QD < 2.0 || FS > 60.0 || MQ < 40.0" \

--filter-name "SNP_FILTER"Also, we had to index each .bam file, which was done using the following command:

samtools index %s_marked.bamSimilarly to previous step, we wrote a c skript to run these commands in parallel:

#include <pthread.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#define NUM_THREADS 24 // This should match the number of chromosomes or tasks

typedef struct {

int thread_id;

char chromosome[24]; // Adjust size as needed

} thread_data;

void *processChromosome(void *threadarg) {

thread_data *my_data;

my_data = (thread_data *) threadarg;

int tid = my_data->thread_id;

char *chr = my_data->chromosome;

printf("Thread %d processing chromosome %s\n", tid, chr);

// Construct command strings (simplified here)

char markDupCmd[256];

sprintf(markDupCmd, "/group/albi-praktikum2023/software/gatk MarkDuplicates -I %s.bam -O %s_marked.bam -M %s_metrics.txt", chr, chr, chr);

char indexBamFileCmd[256];

sprintf(indexBamFileCmd, "samtools index %s_marked.bam", chr);

char callVarCmd[256];

sprintf(callVarCmd, "/group/albi-praktikum2023/software/gatk HaplotypeCaller -R /group/albi-praktikum2023/data/NCBI-reference/GRCh38.fna -I %s_marked.bam -O %s_raw.vcf", chr, chr);

char filterSNPsCmd[256];

sprintf(filterSNPsCmd, "/group/albi-praktikum2023/software/gatk VariantFiltration -R /group/albi-praktikum2023/data/NCBI-reference/GRCh38.fna -V %s_raw.vcf -O %s_filtered.vcf --filter-expression \"QD < 2.0 || FS > 60.0 || MQ < 40.0\" --filter-name \"SNP_FILTER\"", chr, chr);

// Execute commands

system(markDupCmd);

system(indexBamFileCmd);

system(callVarCmd);

system(filterSNPsCmd);

printf("Thread %d finished processing chromosome %s\n", tid, chr);

pthread_exit(NULL);

}

int main () {

pthread_t threads[NUM_THREADS];

thread_data thread_data_array[NUM_THREADS];

int rc;

long t;

char *chromosomes[] = {"chr1", "chr2", "chr3", "chr4", "chr5", "chr6", "chr7", "chr8", "chr9", "chr10", "chr11", "chr12", "chr13", "chr14", "chr15", "chr16", "chr17", "chr18", "chr19", "chr20", "chr21", "chr22", "chrX", "chrY"};

for(t = 0; t < NUM_THREADS; t++) {

printf("In main: creating thread %ld\n", t);

thread_data_array[t].thread_id = t;

strcpy(thread_data_array[t].chromosome, chromosomes[t]);

rc = pthread_create(&threads[t], NULL, processChromosome, (void *)&thread_data_array[t]);

if (rc) {

printf("ERROR; return code from pthread_create() is %d\n", rc);

exit(-1);

}

}

// Wait for all threads to complete

for(t = 0; t < NUM_THREADS; t++) {

pthread_join(threads[t], NULL);

}

printf("Main: program completed. Exiting.\n");

pthread_exit(NULL);

}After calling variants for each chromosome, we merged the resulting VCF files into a single VCF file using the MergeVcfs tool from the GATK:

gatk MergeVcfs \

-I chr1_filtered_snps.vcf \

-I chr2_filtered_snps.vcf \

... \

-O merged_filtered_snps.vcfFinally, we received the merged VCF file merged_filtered_snps.vcf, which was used for further analysis.

We had to perform in 5 steps on this stage.

- Checking what our file contains:

- Chromosome, f.e. chr1

- Position, f.e. 21896830

- ID, f.e. rs140261216

- Reference allele, f.e. CTG

- Alternate allele(s) f.e. CACAC,TATACACAC,TCACACA,TCACACACA,TCACACACACA

- Quality score (QUAL) f.e. .

- Filter status (FILTER) f.e. .

- Info fields (INFO) f.e. RS=139323070;RSPOS=6831944;dbSNPBuildID=134;SSR=0;SAO=0;VP=0x050000080005040036000100;GENEINFO=CAMTA1:23261;WGT=1;VC=SNV;INT;ASP;VLD;KGPhase1;KGPhase3;CAF=0.999,0.0009984;COMMON=1;TOPMED=0.99681447502548419,0.00318552497451580

- Interpret INFO field

- RS (Reference SNP ID) - a unique identifier in the dbSNP database

- RSPOS (Reference SNP Position) - the genomic position of the variant

- RV (Reference allele(s) and Variation allele(s)) - this field is unspecified and can vary in this context. It could specify the reference allele and the variant allele or other relevant information

- dbSNPBuildID - the version number of the dbSNP database in which this variant is included

- SSR (SubSNP rsID) - an internal reference number for the variant in the dbSNP database

- SAO (Variant Allele Origin) - a value indicating whether the variant allele was observed in the reference sequence (SAO=0) or not (SAO=1)

- VP (Variant properties) - a bitflag encoding various information about the variant

- GENEINFO - information about the gene affected by the variant

- WGT (Weight) - a weighting factor for the variant, usually 1

- VC (Variant Class) - the class of the variant

- ASP (Allele-specific properties) - information about the allele of the variant

- VLD (Variant Locus-specific database) - information about whether the variant is present in a specific locus-specific database.

- G5 (Allele frequency group) - information about the frequency of the variant allele in different population groups.

- KGPhase1 and KGPhase3 - information about the frequency of the variant allele in the 1000 Genome Phase 1 and Phase 3 databases, respectively.

- CAF (Combined Allele Frequency) - the combined allele frequency of the variant allele in different populations.

- COMMON - a flag indicating whether the variant is common in the population (COMMON=1) or not (COMMON=0).

- TOPMED - the allele frequency of the variant allele in the TOPMed database.

- Testing if the file contains only SNP (SNP)

zcat /group/albi-praktikum2023/data/dbSNP/dbSNP.vcf.gz | grep -v '^#' | awk '{if ($8 !~ /VT=SNP/ && $8 !~ /VC=SNV/) print}' | cut -f8zcat - command to decompress the file with .gz format on-the-fly grep -v '^#' - filters out header lines (lines starting with #) awk - program used to process and format text lines if ($8 !~ /VT=SNP/ && $8 !~ /VC=SNV/) - if-condition to find variants types and classes, which are not SNP or SNV print - if statemenet is true, makes output cut -f8 - extracts the eighth field (INFO) from each line of input

In output, f.e, were: RS=200339231;RSPOS=4325724;dbSNPBuildID=137;SSR=0;SAO=0;VP=0x05000000000504003e000200;WGT=1;VC=DIV;ASP;VLD;KGPhase1;KGPhase3;CAF=0.9846,0.003594;COMMON=1;TOPMED=0.99357320336391437,0.00642679663608562

So VC=DIV, it means file contains not only SNP.

- FIltering file dbSNP.vcf.gz and creating new file dbSNPfiltered.vcf, which contains only SNP(SNV)

zcat /group/albi-praktikum2023/data/dbSNP/dbSNP.vcf.gz | grep -v '^#' | awk -F '\t' '$8 ~ /VT=SNP/ || $8 ~ /VC=SNV/' > /group/albi-praktikum2023/analysis/gruppe_3/aufgabe03/dbSNPfiltered.vcf-F '\t' - this option sets the field separator to a tab character (\t). This is used to split each line into fields based on tabs '$8 ~ /VT=SNP/ || $8 ~ /VC=SNV/' - This condition checks if the eighth field ($8) contains the string "VT=SNP" OR "VC=SNV"

- Testing new created file, if it contains only SNP(SNV)

cat /group/albi-praktikum2023/analysis/gruppe_3/aufgabe03/dbSNPfiltered.vcf | grep -v '^#' | awk '{if ($8 !~ /VT=SNP/ && $8 !~ /VC=SNV/) print}' | cut -f8Ouput contained no strings, which means new file consist only of SNP

We tried to determine the sex of an individual by analyzing the ratio of heterozygous single nucleotide polymorphisms (SNPs) to total SNPs on the X chromosome.

- Setting the Path to the VCF File First, specify the path to the VCF (Variant Call Format) file containing SNP data for the X chromosome.

vcf_path="/group/albi-praktikum2023/analysis/gruppe_3/aufgabe03/chrX_filtered.vcf"- The following command counts the number of heterozygous SNPs present on the X chromosome.

heterozygous_snps=$(/group/albi-praktikum2023/software/bcftools view -H $vcf_path | awk '{if($10 ~ "0/1" || $10 ~ "1/0") print $0}' | wc -l)- Then we count the total number of SNPs present on the X chromosome.

total_snps=$(/group/albi-praktikum2023/software/bcftools view -H $vcf_path | wc -l)- The ratio is calculated as follows:

ratio=$(echo "scale=2; $heterozygous_snps / $total_snps" | bc)- Analysis Result: Based on the results of the analysis, with 87,296 heterozygous SNPs and a total of 156,862 SNPs on the X chromosome, the ratio is 0.55. This relatively high ratio suggests that the individual is likely female.

We compared the VCF files of different individuals to identify common and unique variants. We used the bcftools to perform this task.

Here is the bash script we used to compare the VCF files of different individuals:

#!/bin/bash

# Directories

input_vcf_dir="/group/albi-praktikum2023/SNPs"

output_dir_base="/group/albi-praktikum2023/analysis/gruppe_3/aufgabe04"

# Ensure the base output directory exists

mkdir -p "$output_dir_base"

# Initial count of unique SNPs in Individual 3

initial_unique=$(bcftools view -H "${input_vcf_dir}/gruppe3.vcf" | wc -l)

echo "Initial unique SNPs in Individual 3: $initial_unique"

# Define the list of groups to compare against (excluding group 3 itself if listed)

groups=(1 2 4 5 6 7 8 9 10 11 12)

for i in "${groups[@]}"; do

# Specific output directory for this comparison

output_dir="${output_dir_base}/unique_to_3_vs_${i}"

mkdir -p "$output_dir"

# Find unique SNPs for Individual 3 compared to Individual i

bcftools isec -c none -C "${input_vcf_dir}/gruppe3.vcf.gz" "${input_vcf_dir}/gruppe${i}.vcf.gz" -Oz -p "$output_dir"

# Count the number of unique SNPs for this comparison

# Assuming the unique SNPs are in the 0000.vcf.gz file created by bcftools isec

if [[ -f "${output_dir}/0000.vcf.gz" ]]; then

unique_snps=$(zcat "${output_dir}/0000.vcf.gz" | grep -v "^#" | wc -l)

else

unique_snps=0

fi

echo "Unique SNPs after including gruppe $i: $unique_snps"

decrease=$(echo "scale=2; 100 * ($initial_unique - $unique_snps) / $initial_unique" | bc)

echo "Decrease in unique SNPs: ${decrease}%"

doneThe result of the comparison we visualized in this logs:

Initial unique SNPs in Individual 3: 4129811

Unique SNPs after including gruppe 1: 902076

Decrease in unique SNPs: 78.15%

Unique SNPs after including gruppe 2: 1498732

Decrease in unique SNPs: 63.70%

Unique SNPs after including gruppe 4: 1750020

Decrease in unique SNPs: 57.62%

Unique SNPs after including gruppe 5: 1777832

Decrease in unique SNPs: 56.95%

Unique SNPs after including gruppe 6: 1767605

Decrease in unique SNPs: 57.19%

Unique SNPs after including gruppe 7: 1682734

Decrease in unique SNPs: 59.25%

Unique SNPs after including gruppe 8: 1673782

Decrease in unique SNPs: 59.47%

Unique SNPs after including gruppe 9: 1659713

Decrease in unique SNPs: 59.81%

Unique SNPs after including gruppe 10: 1525790

Decrease in unique SNPs: 63.05%

Unique SNPs after including gruppe 11: 1525718

Decrease in unique SNPs: 63.05%

Unique SNPs after including gruppe 12: 1598071

Decrease in unique SNPs: 61.30%

-----

Unique SNPs after including all groups: 226015

Decrease is: 94.52%The results show that the number of unique SNPs in Individual 3 decreases significantly when compared to other individuals. This suggests that Individual 3 shares a large number of SNPs with other individuals.

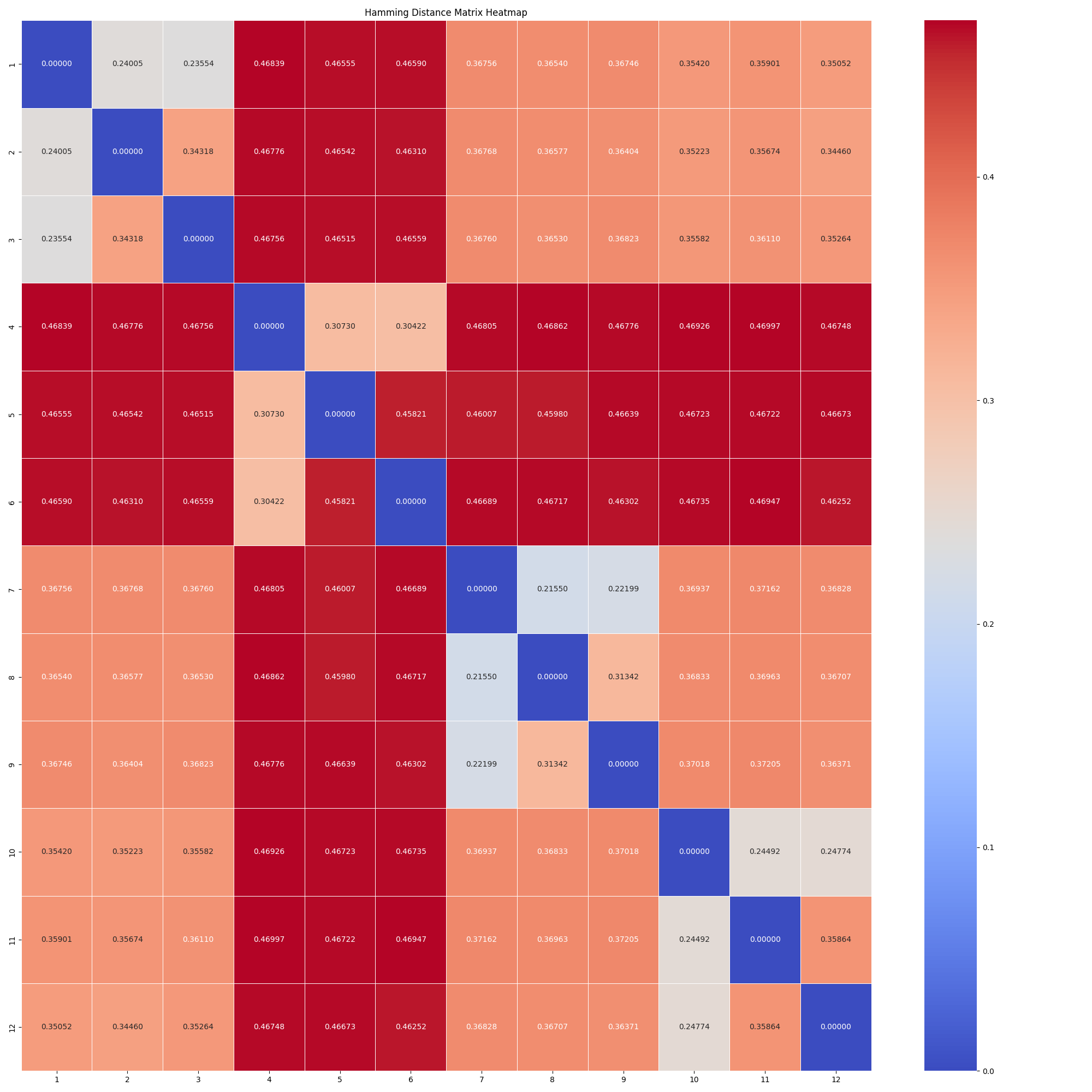

Next we computed the hamming distance between the VCF files of different individuals. The hamming distance is a measure of the number of positions at which the corresponding elements in two sequences are different. The script for this task can be found in vcf_comparison/compare_all_2_all.py.

The following heatmap shows the hamming distance between the VCF files of different individuals:

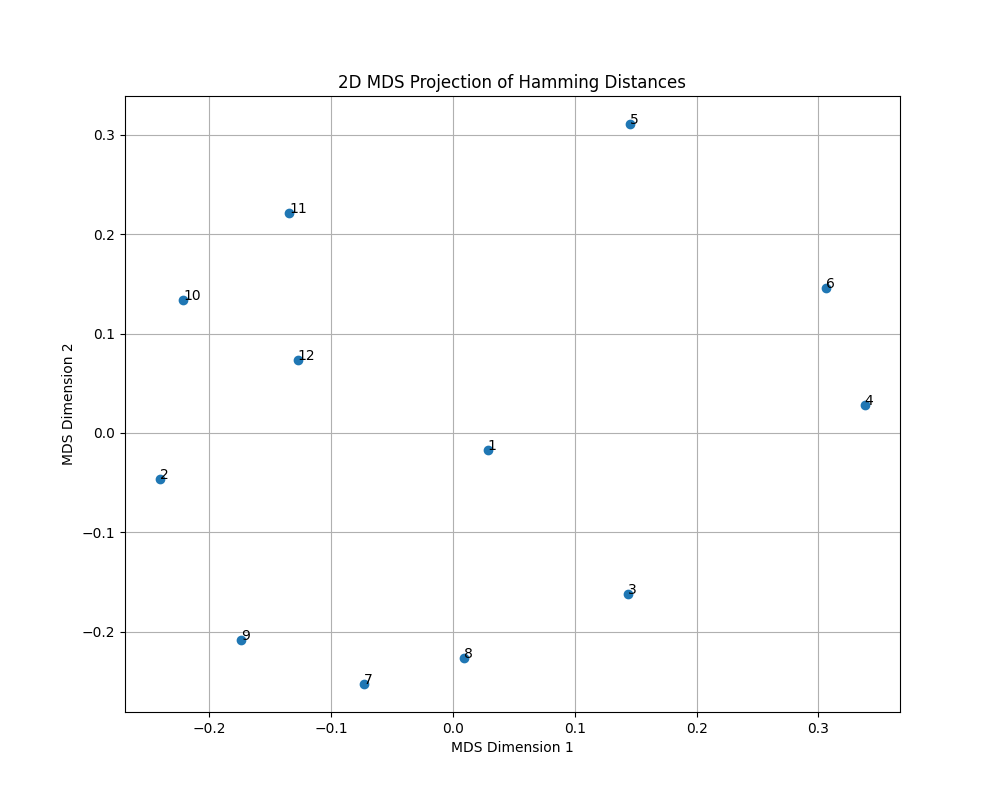

For further visualization, we used MDS (Multidimensional Scaling) to visualize the genetic distance between the individuals. The script for this task can be found in vcf_comparison/visualize_distance_matrix.py.

The following plot shows the genetic distance between the individuals:

As we can see from the heatmap and the MDS plot, the individuals are clustered based on their genetic distance. This suggests that the genetic distance between the individuals can be used to identify patterns and relationships between them. As we primarly worked on Individual 3, we can see that it is genetically closer to Individual 4, 5 and 6.

TODO

N/A

- Svenja Mehringer

- Dzmitry Hramyka

- Sofya Shorzhina

- Sofiia Ilnytska

- Maksim Danilchyk