Experiments on AdamW as described in Fixing Weight Decay Regularization in Adam, which analyzed the Adam implementations in current frameworks and pointed out a bug in how they handle weight decay. The authors proposed AdamW to fix this bug.

In the paper mentioned above, the authors show that L2 regularization and weight decay are not equivalent for Adam.

Most current implementations of Adam nevertheless take the L2 approach.

Note that if you do not use weight decay (weight_decay == 0), this bug does not affect you.
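As a rough sketch of the difference, the two update rules can be written as below. The notation is an assumption chosen to mirror the variable names in this repo's code rather than the paper: $\theta$ is a parameter, $\lambda$ is weight_decay, $\alpha_t$ is step_size (the learning rate with bias correction folded in), and $m_t$, $v_t$ are exp_avg and exp_avg_sq, the moving averages of $g_t$ and $g_t^2$.

```latex
% L2 approach: the penalty is added to the gradient, so it also flows
% through m_t and v_t and is rescaled by the adaptive denominator.
g_t = \nabla f(\theta_{t-1}) + \lambda\,\theta_{t-1}, \qquad
\theta_t = \theta_{t-1} - \alpha_t\,\frac{m_t}{\sqrt{v_t} + \epsilon}

% Weight decay approach: the gradient is left untouched and the decay
% term is applied directly in the parameter update.
g_t = \nabla f(\theta_{t-1}), \qquad
\theta_t = \theta_{t-1} - \alpha_t\left(\frac{m_t}{\sqrt{v_t} + \epsilon} + \lambda\,\theta_{t-1}\right)
```

With $\lambda = 0$ the two rules are identical, which is why the bug only matters when weight decay is actually used.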

for simple classification

$ jupyter notebook

# then open adam_adamW_simple.ipynb

for cifar10

$ cd cifar

$ python main.py

for auto encoder

$ cd autoencoder

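$ python main.py

I delete the L2 update and replace it with the weight decay update:

p.data.addcdiv_(exp_avg, denom, value=-step_size) # L2 approach

# -> weight decay approach: the decay term is added to the update itself, not to the gradient

p.data.add_(torch.mul(p.data, group['weight_decay']).addcdiv_(exp_avg, denom, value=1), alpha=-step_size)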
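To make the change above easier to follow in isolation, here is a minimal scalar sketch in plain Python (no PyTorch). It is not the code from this repo: the function `adam_step`, the helper `new_state`, and the `decoupled` flag are hypothetical names introduced for illustration, and the default hyperparameters are assumed to be the usual Adam defaults.

```python
import math


def new_state():
    """Fresh optimizer state for a single scalar parameter."""
    return {"step": 0, "exp_avg": 0.0, "exp_avg_sq": 0.0}


def adam_step(p, grad, state, lr=1e-3, betas=(0.9, 0.999), eps=1e-8,
              weight_decay=0.0, decoupled=False):
    """One Adam step on a scalar parameter p.

    decoupled=False -> L2 approach (penalty added to the gradient)
    decoupled=True  -> weight decay approach (decay added to the update)
    """
    beta1, beta2 = betas
    state["step"] += 1

    if weight_decay != 0 and not decoupled:
        # L2 approach: the penalty enters the gradient and therefore
        # also the moving averages below.
        grad = grad + weight_decay * p

    state["exp_avg"] = beta1 * state["exp_avg"] + (1 - beta1) * grad
    state["exp_avg_sq"] = beta2 * state["exp_avg_sq"] + (1 - beta2) * grad * grad
    denom = math.sqrt(state["exp_avg_sq"]) + eps

    bias_correction1 = 1 - beta1 ** state["step"]
    bias_correction2 = 1 - beta2 ** state["step"]
    step_size = lr * math.sqrt(bias_correction2) / bias_correction1

    update = state["exp_avg"] / denom
    if decoupled:
        # Weight decay approach: decay is applied next to the Adam update.
        update += weight_decay * p

    return p - step_size * update


# Same parameter and gradient, two ways of applying weight_decay = 0.1
p_l2 = adam_step(1.0, 0.5, new_state(), weight_decay=0.1, decoupled=False)
p_wd = adam_step(1.0, 0.5, new_state(), weight_decay=0.1, decoupled=True)
print(p_l2, p_wd)
```

The two results differ as soon as weight_decay is non-zero, and they coincide when weight_decay is 0.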

All the results are under weight_decay = 0.1

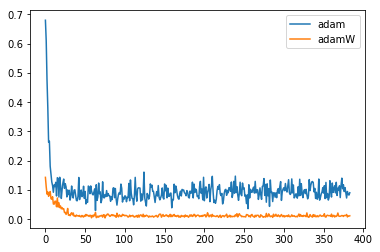

- simple classification problem

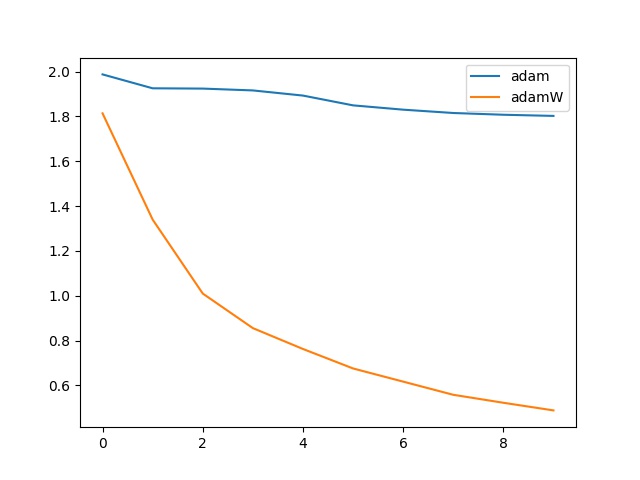

- cifar10 on VGG19

lr = 0.001

Initially the lr was set to 0.1. With that setting, the model trained with Adam did not converge, while the one trained with AdamW did. This suggests that the hyperparameter configurations that work for Adam and for AdamW can differ.

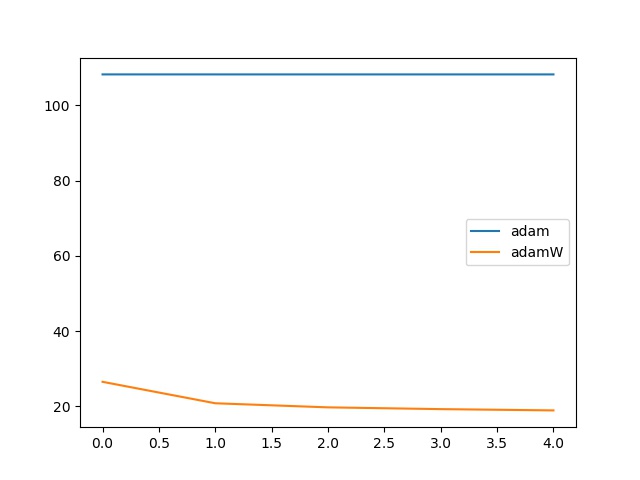

- Auto encoder on the MNIST dataset, encoding to a 2D vector

The simple classification is from ref

The cifar10 baseline is from pytorch-cifar