This repository contains the code (in Torch) for the paper:

Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization

Xun Huang,

Serge Belongie

arXiv 2017

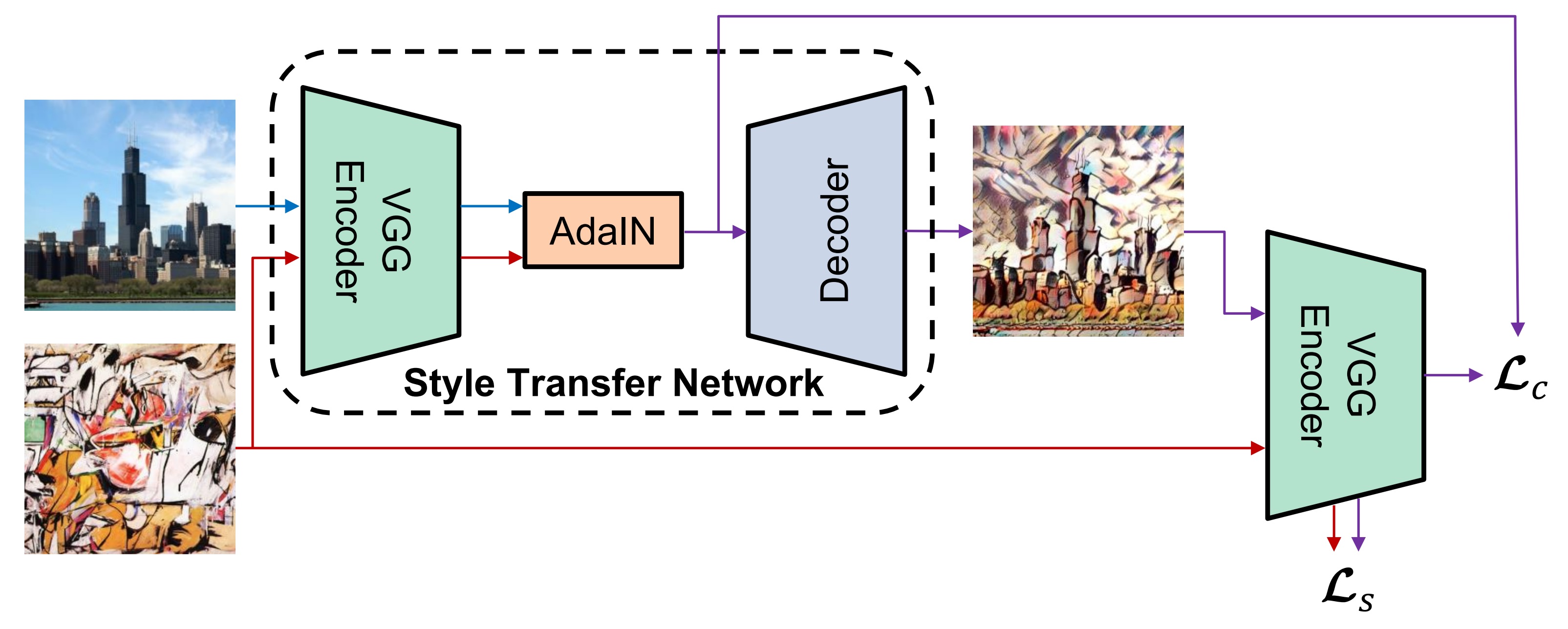

This paper proposes the first real-time style transfer algorithm that can transfer arbitrary new styles, in contrast to methods limited to a single style or a fixed set of 32 styles. Our algorithm runs at 15 FPS with 512x512 images on a Pascal Titan X. This is a speedup of around 720x over the original algorithm of Gatys et al., without sacrificing any flexibility. We accomplish this with a novel adaptive instance normalization (AdaIN) layer, which is similar to instance normalization but with affine parameters adaptively computed from the feature representations of an arbitrary style image.
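As described in the paper, AdaIN aligns the channel-wise mean and standard deviation of the content features to those of the style features: AdaIN(x, y) = σ(y) · (x − μ(x)) / σ(x) + μ(y). The following is a minimal Python sketch of that formula on a single feature channel, not the Torch code in this repository. The function names and the small `eps` term are assumptions made for illustration.

```python
import math

def mean_std(values, eps=1e-5):
    """Return the mean and standard deviation of one feature channel.

    eps is a small constant (an assumption) that avoids division by zero.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var + eps)

def adain_channel(content, style):
    """Align the mean and std of a content channel to those of a style channel."""
    c_mean, c_std = mean_std(content)
    s_mean, s_std = mean_std(style)
    # Normalize the content channel, then rescale and shift with the style statistics.
    return [s_std * (v - c_mean) / c_std + s_mean for v in content]

content_channel = [0.0, 1.0, 2.0, 3.0]
style_channel = [10.0, 10.0, 14.0, 14.0]
stylized = adain_channel(content_channel, style_channel)
```

After the call, `stylized` keeps the relative ordering of the content values but has approximately the mean and standard deviation of `style_channel`.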

Optionally:

- CUDA and cuDNN

- cunn

- torch.cudnn

Video Stylization dependencies:

bash models/download_models.sh

This command will download a pre-trained decoder as well as a modified VGG-19 network. Our style transfer network consists of the first few layers of VGG, an AdaIN layer, and the provided decoder.

Use -content and -style to provide the respective path to the content and style image, for example:

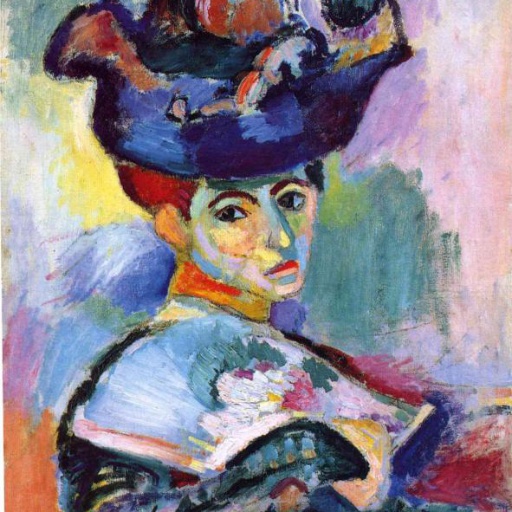

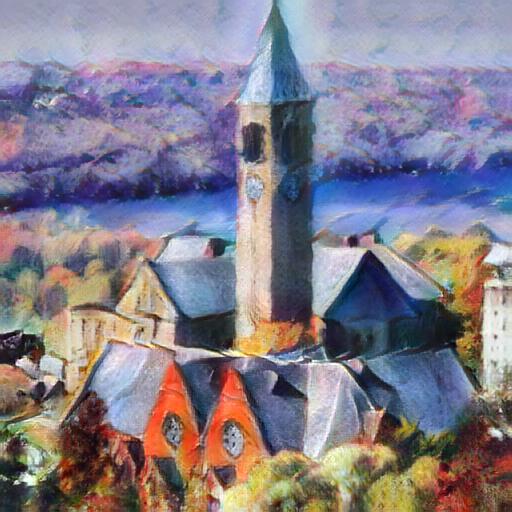

th test.lua -content input/content/cornell.jpg -style input/style/woman_with_hat_matisse.jpg

You can also run the code on directories of content and style images using -contentDir and -styleDir. It will save every possible combination of content and styles to the output directory.

th test.lua -contentDir input/content -styleDir input/style
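To make "every possible combination" concrete, here is a small Python sketch that enumerates the content/style pairs for two file lists. The output file naming used here is hypothetical and may differ from what test.lua actually writes.

```python
from itertools import product
from pathlib import PurePosixPath

def all_pairs(content_paths, style_paths):
    """List every (content, style) pair together with an illustrative output name."""
    pairs = []
    for content, style in product(content_paths, style_paths):
        # Hypothetical naming scheme: <content>_stylized_<style>.jpg
        name = f"{PurePosixPath(content).stem}_stylized_{PurePosixPath(style).stem}.jpg"
        pairs.append((content, style, name))
    return pairs

pairs = all_pairs(
    ["input/content/cornell.jpg", "input/content/chicago.jpg"],
    ["input/style/asheville.jpg", "input/style/brushstrokes.jpg"],
)
```

With M content images and N style images, the list contains M × N entries, so large directories can produce many output files.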

Some other options:

- -crop: Center crop both content and style images beforehand.

- -contentSize: New (minimum) size for the content image. The original size is kept if set to 0.

- -styleSize: New (minimum) size for the style image. The original size is kept if set to 0.
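The following Python sketch shows one plausible reading of "(minimum) size": the shorter side is scaled to the requested value while the aspect ratio is preserved, and 0 leaves the image untouched. This interpretation and the function name `scaled_size` are assumptions; check test.lua for the exact behavior.

```python
def scaled_size(width, height, min_side):
    """Return new (width, height) so that the shorter side equals min_side.

    A min_side of 0 keeps the original size, mirroring the option description.
    """
    if min_side == 0:
        return width, height
    scale = min_side / min(width, height)
    return round(width * scale), round(height * scale)

resized = scaled_size(1024, 768, 512)
unchanged = scaled_size(640, 480, 0)
```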

To see all available options, type:

th test.lua -help

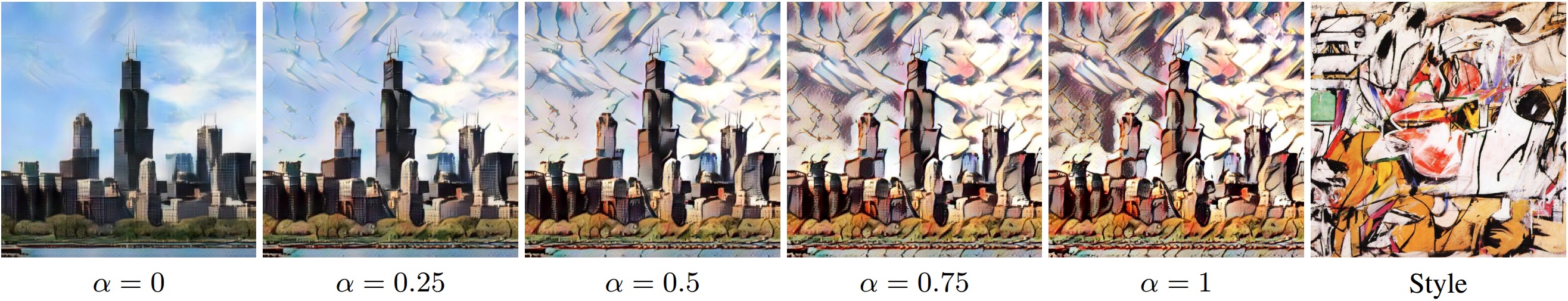

Use -alpha to adjust the degree of stylization. It should be a value between 0 and 1 (the default is 1). Example usage:

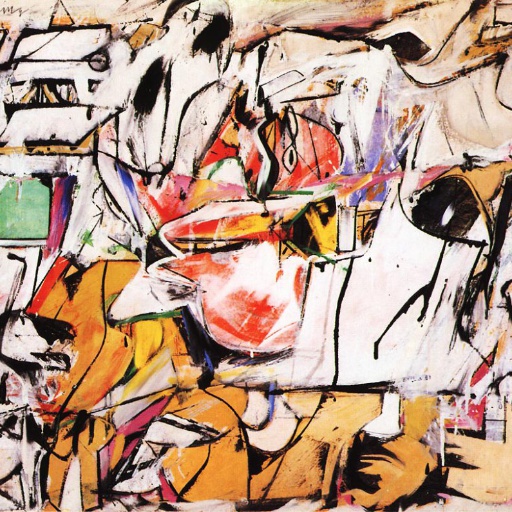

th test.lua -content input/content/chicago.jpg -style input/style/asheville.jpg -alpha 0.5 -crop
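In the paper, the content-style trade-off is obtained by interpolating between the content features and the AdaIN output before decoding: (1 − α) · f(c) + α · AdaIN(f(c), f(s)). A minimal Python sketch of that blend on flat feature lists follows. The function name and the list representation are assumptions for illustration only.

```python
def blend_features(content_feat, adain_feat, alpha):
    """Interpolate between content features (alpha=0) and AdaIN output (alpha=1)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [alpha * t + (1.0 - alpha) * c for c, t in zip(content_feat, adain_feat)]

content_feat = [0.0, 2.0]
adain_feat = [4.0, 6.0]
half = blend_features(content_feat, adain_feat, 0.5)
```

With `alpha` set to 0 the decoder would receive the unmodified content features, and with 1 it would receive the fully stylized features.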

By changing -alpha, you should be able to reproduce the following results.

Add -preserveColor to preserve the color of the content image. Example usage:

th test.lua -content input/content/newyork.jpg -style input/style/brushstrokes.jpg -contentSize 0 -styleSize 0 -preserveColor

It is possible to interpolate between several styles using -styleInterpWeights, which controls the relative weight of each style. Note that you also need to provide the same number of style images, separated by commas. Example usage:

th test.lua -content input/content/avril.jpg \
-style input/style/picasso_self_portrait.jpg,input/style/impronte_d_artista.jpg,input/style/trial.jpg,input/style/antimonocromatismo.jpg \
-styleInterpWeights 1,1,1,1 -crop

By changing -styleInterpWeights, you should be able to reproduce the style interpolation results shown in our paper.
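The paper describes style interpolation as a convex combination of the AdaIN outputs for each style. The Python sketch below normalizes the relative weights so they sum to 1 and then mixes the per-style features. The function name and the normalization step are assumptions consistent with the weights being "relative".

```python
def interpolate_styles(adain_feats, weights):
    """Combine per-style AdaIN features using normalized relative weights."""
    if len(adain_feats) != len(weights):
        raise ValueError("need exactly one weight per style")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    norm = [w / total for w in weights]
    length = len(adain_feats[0])
    return [
        sum(w * feat[i] for w, feat in zip(norm, adain_feats))
        for i in range(length)
    ]

feats = [[0.0, 4.0], [8.0, 4.0]]            # toy AdaIN outputs for two styles
equal_mix = interpolate_styles(feats, [1, 1])
biased_mix = interpolate_styles(feats, [1, 3])
```

Under this reading, weights of 1,1,1,1 give each of four styles an equal share, and 1,0,0,0 would reduce to the first style alone.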

Use -mask to provide the path to a binary foreground mask. You can transfer the foreground and background of the content image to different styles. Note that you also need to provide two style images separated by a comma: the first one is applied to the foreground and the second one to the background. Example usage:

th test.lua -content input/content/blonde_girl.jpg -style input/style/woman_in_peasant_dress_cropped.jpg,input/style/mondrian_cropped.jpg \
-mask input/mask/mask.png -contentSize 0 -styleSize 0
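One way to picture the masked transfer is to apply AdaIN separately to the foreground and background regions of a content feature channel, each with its own style statistics. The Python sketch below does this on a flat list with a 0/1 mask. Computing the content statistics per region is an assumption, and all names are illustrative rather than taken from test.lua.

```python
import math

def _stats(values, eps=1e-5):
    """Mean and standard deviation of a list (eps avoids division by zero)."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(var + eps)

def masked_adain(content, mask, fg_style, bg_style):
    """Apply AdaIN with fg_style where mask == 1 and bg_style where mask == 0."""
    out = list(content)
    for flag, style in ((1, fg_style), (0, bg_style)):
        idx = [i for i, m in enumerate(mask) if m == flag]
        if not idx:
            continue  # region is empty, nothing to stylize
        c_mean, c_std = _stats([content[i] for i in idx])
        s_mean, s_std = _stats(style)
        for i in idx:
            out[i] = s_std * (content[i] - c_mean) / c_std + s_mean
    return out

result = masked_adain(
    content=[0.0, 1.0, 2.0, 3.0],
    mask=[1, 1, 0, 0],
    fg_style=[10.0, 12.0],
    bg_style=[-5.0, -3.0],
)
```

In this toy example the first two values take on the foreground style statistics and the last two take on the background style statistics.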

Currently, video stylization performs style transfer on a frame-by-frame basis. Style features are stored so that the style image is processed only once. This gives a speedup of about 1.2-1.4x.

Note: This speedup does not apply if the -preserveColor option is used.
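The caching idea can be sketched in Python as follows: the style image is encoded once, and the resulting features are reused for every frame. The `encode` and `transfer` callables are hypothetical stand-ins for the encoder and the AdaIN-plus-decoder step, not functions from testVid.lua.

```python
def stylize_frames(frames, style_image, encode, transfer):
    """Stylize every frame while encoding the style image only once."""
    style_feat = encode(style_image)  # computed once, reused for all frames
    return [transfer(encode(frame), style_feat) for frame in frames]

# Toy stand-ins (hypothetical): the encoder records its calls, transfer pairs its inputs.
encode_calls = []

def toy_encode(image):
    encode_calls.append(image)
    return f"feat({image})"

def toy_transfer(content_feat, style_feat):
    return (content_feat, style_feat)

outputs = stylize_frames(["frame1", "frame2", "frame3"], "style", toy_encode, toy_transfer)
```

Here the encoder runs once on the style image and once per frame, instead of twice per frame. Presumably the saving is lost with -preserveColor because the style input then depends on each frame's colors, though that is an inference rather than something stated above.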

Future work:

- Retrain network to incorporate motion information

- Add audio support

I will work on improvements in this repo and merge them back here once a major update is ready.

bash styVid.sh input.mp4 style-dir-path

This generates one mp4 file for each image present in style-dir-path. Other video formats are also supported. Next, follow the instructions given by the prompt.

To change other parameters such as alpha, edit line 53 of styVid.sh:

th testVid.lua -contentDir videoprocessing/${filename} -style ${styleimage} -outputDir videoprocessing/${filename}-${stylename}

bash styVid.sh input/videos/cutBunny.mp4 input/styleexample

This will first create two folders, videos and videoprocessing. It will then generate three mp4 files in the videos folder: cutBunny-stylized-mondrian.mp4, cutBunny-stylized-woman_with_hat_matisse.mp4 and cutBunny-fix.mp4. I have included these files in the examples/videoutput folder for reference.

The individual frames and the stylized output are stored in the videoprocessing folder.

An example video with some results is available on YouTube.

Stylizing a 10-second video at 480p resolution takes about 2 minutes on a Titan X Maxwell GPU (12GB).

Coming soon.

If you find this code useful for your research, please cite the paper:

@article{huang2017adain,
  title={Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization},
  author={Huang, Xun and Belongie, Serge},
  journal={arXiv preprint arXiv:1703.06868},
  year={2017}
}

This project is inspired by many existing style transfer methods and their open-source implementations, such as:

- Image Style Transfer Using Convolutional Neural Networks, Gatys et al. [code (by Johnson)]

- Perceptual Losses for Real-Time Style Transfer and Super-Resolution, Johnson et al. [code]

- Texture Networks: Feed-forward Synthesis of Textures and Stylized Images, Ulyanov et al. [code]

- Improved Texture Networks: Maximizing Quality and Diversity in Feed-forward Stylization and Texture Synthesis, Ulyanov et al. [code]

- A Learned Representation For Artistic Style, Dumoulin et al. [code]

- Fast Patch-based Style Transfer of Arbitrary Style, Chen and Schmidt [code]

- Controlling Perceptual Factors in Neural Style Transfer, Gatys et al. [code]

- Artistic style transfer for videos, Ruder et al. [code]

If you have any questions or suggestions about the code or the paper, feel free to reach me (xh258@cornell.edu).

For any suggestions or questions about video stylization, reach me at sairao1996@gmail.com.