Python 3.6+ environment
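If you are unsure which interpreter you have, a quick check can confirm the 3.6+ requirement before you start. This is a minimal sketch; `python_version_ok` is a hypothetical helper, not part of this repository. It uses `%` formatting so it still runs on older interpreters.

```python
import sys

# Minimum interpreter version required by this README.
MIN_VERSION = (3, 6)


def python_version_ok(version_info=sys.version_info, minimum=MIN_VERSION):
    """Return True if (major, minor) of version_info is at least `minimum`."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    if python_version_ok():
        print("Python %d.%d is supported." % tuple(sys.version_info[:2]))
    else:
        sys.exit("Python 3.6+ is required, found %d.%d." % tuple(sys.version_info[:2]))
```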

Step 1: Install OpenAI Baselines System Packages (per the OpenAI Baselines installation instructions)

On Ubuntu:

sudo apt-get update && sudo apt-get install cmake libopenmpi-dev python3-dev zlib1g-dev

Installation of system packages on Mac requires Homebrew. With Homebrew installed, run the following:

brew install cmake openmpi

Clone the repository to folder /DQN-DDPG_Stock_Trading:

git clone https://github.com/hust512/DQN-DDPG_Stock_Trading.git

cd DQN-DDPG_Stock_Trading

Under folder /DQN-DDPG_Stock_Trading, create a virtual environment:

pip install virtualenv

Virtualenvs are essentially folders that contain a copy of the Python executable and all installed Python packages. Create a virtualenv called venv under folder /DQN-DDPG_Stock_Trading/venv:

virtualenv -p python3 venv

To activate the virtualenv:

source venv/bin/activate
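To confirm the virtualenv is actually active, so that the pip installs below go into venv rather than the system Python, you can compare the interpreter's prefixes. A minimal sketch; `in_virtualenv` is a hypothetical helper name. It covers both the standard `venv` / virtualenv 20+ layout (`sys.prefix` differs from `sys.base_prefix`) and legacy virtualenv releases, which set `sys.real_prefix`.

```python
import sys


def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Python 2 has no sys.base_prefix, so fall back to sys.prefix there.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    # Legacy virtualenv (< 20) sets sys.real_prefix; venv-style envs change sys.prefix.
    return hasattr(sys, "real_prefix") or sys.prefix != base_prefix


if __name__ == "__main__":
    print("virtualenv active:", in_virtualenv(), "| prefix:", sys.prefix)
```

After `source venv/bin/activate`, running this with `python` should report `True` and a prefix ending in `/venv`.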

The baselines master branch supports TensorFlow versions 1.4 to 1.14. For TensorFlow 2.0 support, use the tf2 branch instead. Refer to the TensorFlow installation guide for more details.
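Once TensorFlow is installed, you can check that its version falls in the supported 1.4 to 1.14 range. This is a sketch; `tf_version_supported` is a hypothetical helper, and it only compares the major.minor part of the version string.

```python
import re

# Range supported by the baselines master branch, as stated above.
MIN_TF = (1, 4)
MAX_TF = (1, 14)


def tf_version_supported(version):
    """Return True if a version string such as '1.14.0' lies within [1.4, 1.14]."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError("unrecognised version string: %r" % version)
    major_minor = (int(match.group(1)), int(match.group(2)))
    return MIN_TF <= major_minor <= MAX_TF
```

Usage inside the activated venv: `import tensorflow as tf; print(tf_version_supported(tf.__version__))`. A `False` result suggests switching to the tf2 branch or reinstalling `tensorflow==1.14`.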

- Install the gym and TensorFlow packages:

pip install gym
pip install gym[atari]
pip install tensorflow==1.14

- Other packages that might be missing:

pip install filelock
pip install matplotlib
pip install pandas
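To find out which of these packages are actually missing before running anything, you can ask the import system whether each module can be found, without importing it. A minimal sketch; `missing_modules` is a hypothetical helper, and the list uses import names, which can differ from pip names (for example, pip's `opencv-python` is imported as `cv2`).

```python
import importlib.util

# Import names of the packages installed above.
REQUIRED_MODULES = ["gym", "tensorflow", "filelock", "matplotlib", "pandas"]


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("Missing:", ", ".join(missing) if missing else "none")
```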

- Clone the baselines repository into folder DQN-DDPG_Stock_Trading/baselines:

git clone https://github.com/openai/baselines.git
cd baselines

- Install the baselines package:

pip install -e .

Run all unit tests in baselines:

pip install pytest

pytest

A result like '94 passed, 49 skipped, 72 warnings in 355.29s' is expected. If any tests fail, check the OpenAI Baselines GitHub issues or Stack Overflow for fixes.

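To load and play a saved PPO2 Pong model (this assumes a model has already been saved to ~/models/pong_20M_ppo2):

python -m baselines.run --alg=ppo2 --env=PongNoFrameskip-v4 --num_timesteps=1e4 --load_path=~/models/pong_20M_ppo2 --play

A mean reward per episode of around 20 is expected.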

Register the RLStock-v0 environment in folder /DQN-DDPG_Stock_Trading/venv:

- From DQN-DDPG_Stock_Trading/gym/envs/__init__.py, copy the following:

register(
    id='RLStock-v0',
    entry_point='gym.envs.rlstock:StockEnv',
)

register(
    id='RLTestStock-v0',
    entry_point='gym.envs.rlstock:StockTestEnv',
)

into the venv gym environment:

/DQN-DDPG_Stock_Trading/venv/lib/python3.6/site-packages/gym/envs/__init__.py

- Copy folder DQN-DDPG_Stock_Trading/gym/envs/rlstock into the venv gym environment folder:

/DQN-DDPG_Stock_Trading/venv/lib/python3.6/site-packages/gym/envs

- Open

/DQN-DDPG_Stock_Trading/venv/lib/python3.6/site-packages/gym/envs/rlstock/rlstock_env.py

/DQN-DDPG_Stock_Trading/venv/lib/python3.6/site-packages/gym/envs/rlstock/rlstock_testenv.py

and change the import data path in these two files (cd into the rlstock folder and run pwd to check the folder path).

(The python3.6 part of these paths is the Python version used to create the virtualenv; adjust it if yours differs.)
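The copy-and-register steps above can also be scripted, which avoids hard-coding the `python3.6` site-packages path. This is a sketch under assumptions: `find_gym_envs_dir` and `install_rlstock` are hypothetical helpers, and the script relies on the installed `gym/envs/__init__.py` already importing `register` (as older gym releases do). It does not edit the data paths in `rlstock_env.py` and `rlstock_testenv.py`; that step stays manual.

```python
import importlib.util
import shutil
from pathlib import Path

# Registration calls copied from DQN-DDPG_Stock_Trading/gym/envs/__init__.py.
REGISTRATION = """

register(
    id='RLStock-v0',
    entry_point='gym.envs.rlstock:StockEnv',
)

register(
    id='RLTestStock-v0',
    entry_point='gym.envs.rlstock:StockTestEnv',
)
"""


def find_gym_envs_dir():
    """Locate the installed gym/envs folder without importing gym, or return None."""
    spec = importlib.util.find_spec("gym")
    if spec is None or spec.origin is None:
        return None
    # spec.origin points at gym/__init__.py for a regular package.
    return Path(spec.origin).parent / "envs"


def install_rlstock(repo_root, gym_envs_dir):
    """Copy gym/envs/rlstock into gym_envs_dir and append the register() calls.

    Running it twice is harmless: an existing rlstock folder is left alone and
    the registration is only appended if 'RLStock-v0' is not already present.
    """
    repo_root, gym_envs_dir = Path(repo_root), Path(gym_envs_dir)
    target = gym_envs_dir / "rlstock"
    if not target.exists():
        shutil.copytree(str(repo_root / "gym" / "envs" / "rlstock"), str(target))

    init_py = gym_envs_dir / "__init__.py"
    text = init_py.read_text()
    if "RLStock-v0" not in text:
        init_py.write_text(text + REGISTRATION)
```

Usage, with the venv active and from /DQN-DDPG_Stock_Trading: `install_rlstock(".", find_gym_envs_dir())`. Checking for an existing registration keeps the edit idempotent, so rerunning the setup does not register the same ids twice.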

Replace

/DQN-DDPG_Stock_Trading/baselines/baselines/run.py

with

/DQN-DDPG_Stock_Trading/run.py

Go to folder

/DQN-DDPG_Stock_Trading/

Activate the virtual environment

source venv/bin/activate

Go to the baselines folder

/DQN-DDPG_Stock_Trading/baselines

To train the model, run:

python -m baselines.run --alg=ddpg --env=RLStock-v0 --network=mlp --num_timesteps=1e4

To see the testing/trading result, run:

python -m baselines.run --alg=ddpg --env=RLStock-v0 --network=mlp --num_timesteps=2e4 --play

The result images are saved under folder /DQN-DDPG_Stock_Trading/baselines.
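To pick out the plots from the latest run among other files in that folder, you can list image files by modification time. A minimal sketch; `result_images` is a hypothetical helper, and it assumes the plots are written as `.png` or `.jpg` files.

```python
from pathlib import Path

# Assumption: result plots are saved as .png or .jpg files.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def result_images(folder):
    """Return image files directly inside `folder`, most recently modified first."""
    images = [p for p in Path(folder).iterdir()
              if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES]
    return sorted(images, key=lambda p: p.stat().st_mtime, reverse=True)


if __name__ == "__main__":
    # Run from /DQN-DDPG_Stock_Trading/baselines, where the images are written.
    for image in result_images("."):
        print(image.name)
```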

(You can tune the num_timesteps hyperparameter to train the model better. Note that if this number is too high, the model may overfit; if it is too low, the model may underfit.)
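One way to explore this trade-off is to run the same command over several num_timesteps values and compare the trading results. This is a sketch: `sweep_commands` is a hypothetical helper and the grid values are arbitrary illustrations, not the authors' settings. Since the result images appear to land in the same folder each time, you may want to move them aside between runs.

```python
import shlex

# Hypothetical grid of num_timesteps values; adjust it to your data and budget.
TIMESTEP_GRID = ["5e3", "1e4", "2e4", "5e4"]


def sweep_commands(grid=TIMESTEP_GRID, env="RLStock-v0", play=False):
    """Build one baselines.run DDPG command (as an argument list) per num_timesteps value."""
    commands = []
    for steps in grid:
        args = ["python", "-m", "baselines.run", "--alg=ddpg", "--env=" + env,
                "--network=mlp", "--num_timesteps=" + steps]
        if play:
            args.append("--play")
        commands.append(args)
    return commands


if __name__ == "__main__":
    # Print shell-ready commands; each list could also be passed to subprocess.run.
    for args in sweep_commands(play=True):
        print(" ".join(shlex.quote(a) for a in args))
```

Keeping each command as an argument list rather than a single string means it can be handed to `subprocess.run(args, cwd=...)` without shell quoting issues.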

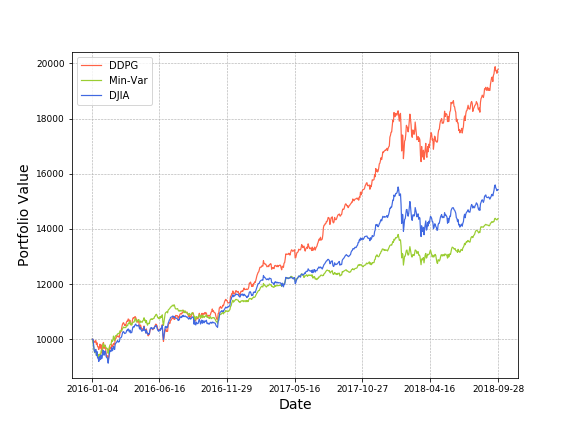

Compare to our result:

pip3 install opencv-python

pip3 install lockfile

pip3 install -U numpy

pip3 install mujoco-py==0.5.7

Xiong, Z., Liu, X.Y., Zhong, S., Yang, H. and Walid, A., 2018. Practical deep reinforcement learning approach for stock trading. NeurIPS 2018 AI in Finance Workshop.