Reproduction code for the ACM MM 2023 paper "Improving Rumor Detection by Class-based Adversarial Domain Adaptation"

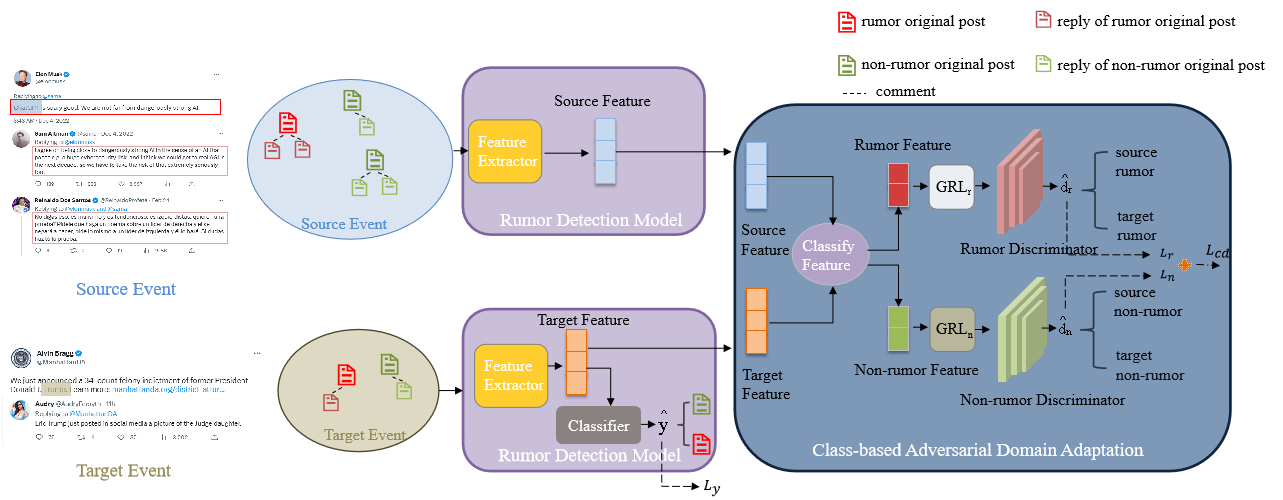

The paper "Improving Rumor Detection by Class-based Adversarial Domain Adaptation" has two components: the rumor detection model and the class-based adversarial domain adaptation module.

In our code, these are implemented as three modules: the feature extractor, the label classifier and the domain classifier (see CADA.py).

To evaluate the model's cross-domain detection ability, we use the Twitter dataset as the source domain and the Twitter-COVID19 dataset as the target domain. Both can be downloaded from NAACL-2022 ACLR. Unzip the Twitter and Twitter-COVID19 datasets into ./data/in-domain and ./data/out-of-domain respectively. The final directory structure should look like this:

├── project_root/
│   ├── data/
│   │   ├── in-domain/
│   │   │   ├── Twitter/
│   │   │   ├── Twittergraph/
│   │   ├── out-of-domain/
│   │   │   ├── Twitter/
│   │   │   ├── Twittergraph/
│   ├── ...
│   ├── README.md
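Before training, it can help to confirm that the folders are in place. The following is a minimal sketch using only the standard library; the helper name `missing_data_dirs` is hypothetical and not part of this repository, and it only checks the directory names in the tree above, not their contents.

```python
from pathlib import Path

# Expected sub-directories under ./data, taken from the directory tree above.
EXPECTED_DIRS = [
    "in-domain/Twitter",
    "in-domain/Twittergraph",
    "out-of-domain/Twitter",
    "out-of-domain/Twittergraph",
]


def missing_data_dirs(project_root="."):
    """Return the expected data directories that do not exist (hypothetical helper)."""
    data_root = Path(project_root) / "data"
    return [d for d in EXPECTED_DIRS if not (data_root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_data_dirs()
    if missing:
        print("Missing directories under ./data:", ", ".join(missing))
    else:
        print("Data layout looks complete.")
```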

In this reproduction, we design CADA as a standalone object that takes the feature extractor, label classifier and domain classifier as parameters, so each of the three components can be substituted with any module (e.g. BERT, GCN, MLP). The original paper builds these three components on GACL and BERT; for convenience of implementation, we use BiGCN instead.
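The sketch below illustrates this plug-in design in PyTorch. It is an assumption about the general shape of such a wrapper, not a copy of CADA.py: the class name `CADASketch`, its argument names and the small MLP stand-ins are hypothetical, and in this repository the feature extractor would be a BiGCN encoder. The gradient reversal that makes the domain branch adversarial is left out to keep the composition visible.

```python
import torch
from torch import nn


class CADASketch(nn.Module):
    """Hypothetical wrapper: any three nn.Modules with compatible shapes can be plugged in."""

    def __init__(self, feature_extractor, label_classifier, domain_classifier):
        super().__init__()
        self.feature_extractor = feature_extractor
        self.label_classifier = label_classifier
        self.domain_classifier = domain_classifier

    def forward(self, x):
        features = self.feature_extractor(x)
        # Both heads read the same shared features.
        label_logits = self.label_classifier(features)
        domain_logits = self.domain_classifier(features)
        return label_logits, domain_logits


# Stand-in components (MLPs) for illustration; all sizes are arbitrary.
model = CADASketch(
    feature_extractor=nn.Sequential(nn.Linear(16, 32), nn.ReLU()),
    label_classifier=nn.Linear(32, 2),   # 2 label classes assumed for illustration
    domain_classifier=nn.Linear(32, 2),  # source vs. target domain
)
label_logits, domain_logits = model(torch.randn(4, 16))
```

Swapping BiGCN for BERT or an MLP then only changes the modules passed to the constructor, not the wrapper itself.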

In the paper reproduced by this repository, the loss in the second round of training is defined as

L = label_loss - domain_loss

However, minimising this loss maximises the domain loss with respect to the domain discriminator's own parameters, so the discriminator is trained to get worse rather than better. Therefore, we follow the definition in the original domain-adversarial training paper (DANN) and set the loss function as:

L = label_loss + domain_loss
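A one-parameter toy example makes the sign issue concrete. In the standard DANN setup the gradient reversal layer sits between the feature extractor and the domain discriminator, so the discriminator's own weights receive the un-reversed gradient of L. The scalar `w` and the quadratic `domain_loss` below are hypothetical stand-ins, not the model's real discriminator; the point is only that a descent step on `label_loss - domain_loss` raises the domain loss, while a step on `label_loss + domain_loss` lowers it.

```python
def domain_loss(w):
    # Toy stand-in: the discriminator is best (lowest loss) at w = 2.
    return (w - 2.0) ** 2


def domain_loss_grad(w):
    return 2.0 * (w - 2.0)


def step(w, sign, lr=0.1):
    """One gradient-descent step on L = label_loss + sign * domain_loss w.r.t. w.

    label_loss does not depend on the discriminator weight w,
    so only the domain term contributes to dL/dw.
    """
    return w - lr * sign * domain_loss_grad(w)


w0 = 0.0
w_minus = step(w0, sign=-1)  # L = label_loss - domain_loss
w_plus = step(w0, sign=+1)   # L = label_loss + domain_loss
print(domain_loss(w0), domain_loss(w_minus), domain_loss(w_plus))
```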

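The Gradient Reversal Layer (GRL) controls the gradient flow: it is the identity in the forward pass and flips the sign of the gradient in the backward pass. As a result, the domain discriminator is optimised normally, while the feature extractor is trained adversarially against it.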
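A gradient reversal layer is commonly written as a custom `torch.autograd.Function` that returns its input unchanged in the forward pass and multiplies the incoming gradient by `-lambd` in the backward pass. The sketch below shows that standard construction; the names `GradReverse`, `grad_reverse` and `lambd` are our own and may not match the implementation in this repository.

```python
import torch
from torch.autograd import Function


class GradReverse(Function):
    """Identity on the forward pass; scales the gradient by -lambd on the backward pass."""

    @staticmethod
    def forward(ctx, x, lambd):
        ctx.lambd = lambd
        return x.view_as(x)

    @staticmethod
    def backward(ctx, grad_output):
        # One gradient per forward input: reversed gradient for x, None for lambd.
        return grad_output.neg() * ctx.lambd, None


def grad_reverse(x, lambd=1.0):
    return GradReverse.apply(x, lambd)


# Gradient check: d(sum(x))/dx is +1 everywhere, so after reversal it should be -1.
x = torch.ones(3, requires_grad=True)
grad_reverse(x).sum().backward()
print(x.grad)  # tensor([-1., -1., -1.])
```

In a training step, the domain classifier would then be applied to `grad_reverse(features)` and the two losses simply added, `loss = label_loss + domain_loss`, so that the sign flip happens only on the path into the feature extractor.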

The code requires the following packages:

- numpy
- pytorch
- torch_geometric
- torch_scatter
- sklearn (scikit-learn)
- random (Python standard library)

The full list of package versions used on my machine is given in environment.yml.
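A quick way to check that the packages listed above are importable is to look up their import names (PyTorch imports as `torch`, scikit-learn as `sklearn`). This is a hypothetical helper, not part of the repository, and it does not check versions; environment.yml remains the reference for those.

```python
import importlib.util

# Import names for the packages listed above.
REQUIRED_MODULES = ["numpy", "torch", "torch_geometric", "torch_scatter", "sklearn", "random"]


def check_modules(names=REQUIRED_MODULES):
    """Map each module name to True if it can be found on the current Python path."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name:16s} {'ok' if found else 'MISSING'}")
```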

To run the code:

`python main.py`