Tensorflow implementation of AttGAN - AttGAN: Facial Attribute Editing by Only Changing What You Want

-

Other AttGAN implementations

- AttGAN-PyTorch by Yu-Jing Lin

-

Closely related works

- An excellent work built upon our code - STGAN (CVPR 2019) by Ming Liu

- Changing-the-Memorability (CVPR 2019 MBCCV Workshop) by acecreamu

- Fashion-AttGAN (CVPR 2019 FSS-USAD Workshop) by Qing Ping

-

An unofficial demo video of AttGAN by 王一凡

-

See results.md for more results, we try higher resolution and more attributes (all 40 attributes!!!) here

-

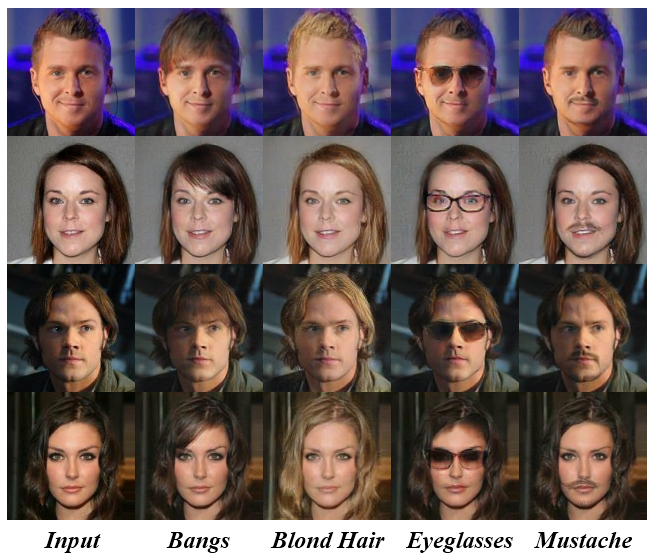

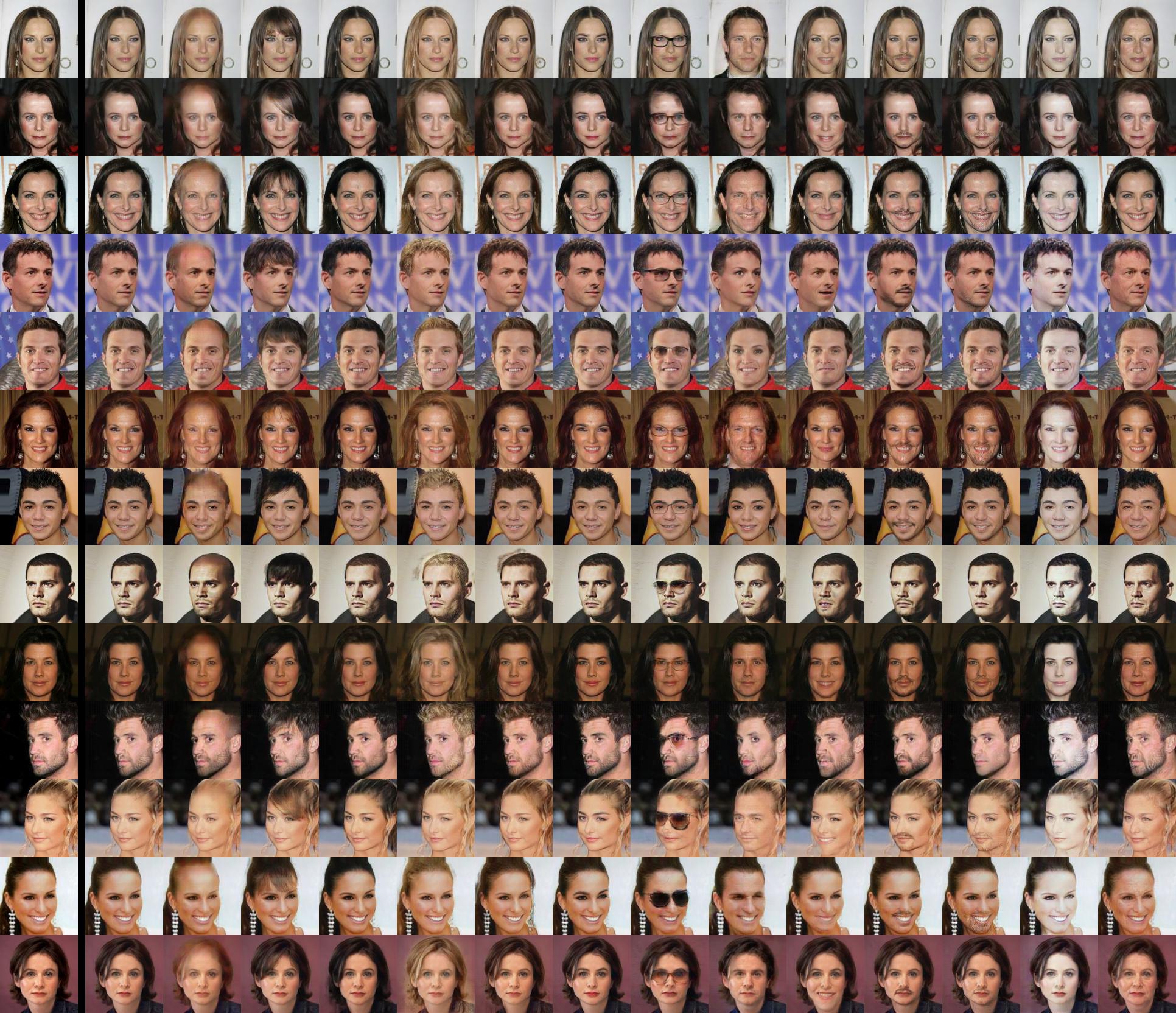

Inverting 13 attributes respectively

from left to right: Input, Reconstruction, Bald, Bangs, Black_Hair, Blond_Hair, Brown_Hair, Bushy_Eyebrows, Eyeglasses, Male, Mouth_Slightly_Open, Mustache, No_Beard, Pale_Skin, Young

-

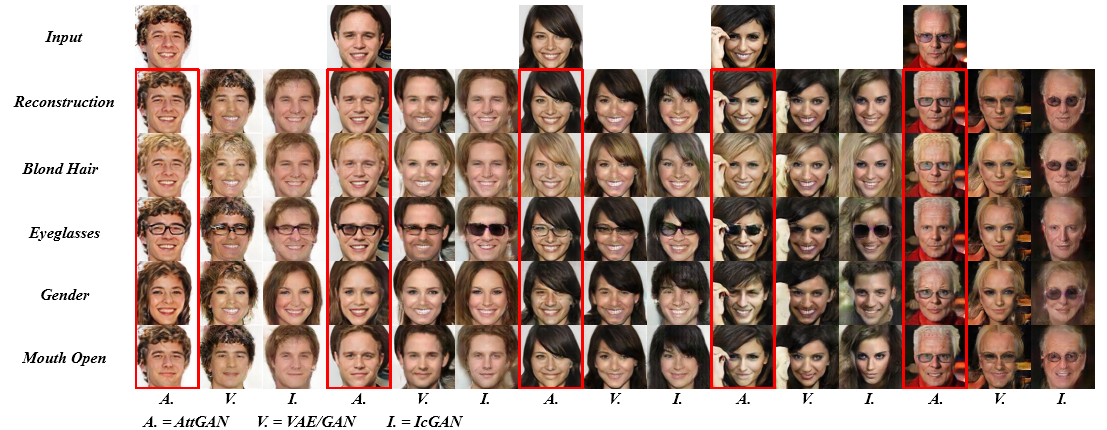

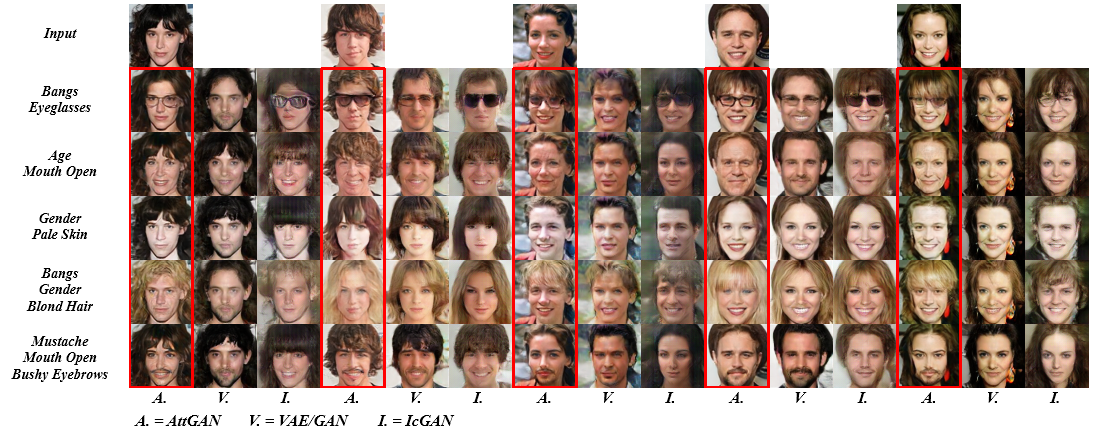

Comparisons with VAE/GAN and IcGAN on inverting single attribute

-

Comparisons with VAE/GAN and IcGAN on simultaneously inverting multiple attributes

-

Prerequisites

- Tensorflow 1.7 or 1.8

- Python 2.7 or 3.6

-

Dataset

-

Celeba dataset

- Images should be placed in ./data/img_align_celeba/*.jpg

- Attribute labels should be placed in ./data/list_attr_celeba.txt

- the above links might be inaccessible, the alternatives are

- img_align_celeba.zip

- list_attr_celeba.txt

-

HD-Celeba (optional)

- the images of img_align_celeba.zip are low resolution and uncropped, higher resolution and cropped images are available here

- the high quality data should be placed in ./data/img_crop_celeba/*.jpg

-

-

Well-trained models: download the models you need and unzip the files to ./output/ as below,

output ├── 128_shortcut1_inject1_none └── 384_shortcut1_inject1_none_hd -

Examples of training

-

see examples.md for more examples

-

training

-

for 128x128 images

CUDA_VISIBLE_DEVICES=0 \ python train.py \ --img_size 128 \ --shortcut_layers 1 \ --inject_layers 1 \ --experiment_name 128_shortcut1_inject1_none

-

for 384x384 images

CUDA_VISIBLE_DEVICES=0 \ python train.py \ --img_size 384 \ --enc_dim 48 \ --dec_dim 48 \ --dis_dim 48 \ --dis_fc_dim 512 \ --shortcut_layers 1 \ --inject_layers 1 \ --n_sample 24 \ --experiment_name 384_shortcut1_inject1_none

-

for 384x384 HD images (need HD-Celeba)

CUDA_VISIBLE_DEVICES=0 \ python train.py \ --img_size 384 \ --enc_dim 48 \ --dec_dim 48 \ --dis_dim 48 \ --dis_fc_dim 512 \ --shortcut_layers 1 \ --inject_layers 1 \ --n_sample 24 \ --use_cropped_img \ --experiment_name 384_shortcut1_inject1_none_hd

-

-

tensorboard for loss visualization

CUDA_VISIBLE_DEVICES='' \ tensorboard \ --logdir ./output/128_shortcut1_inject1_none/summaries \ --port 6006

-

-

Example of testing single attribute

CUDA_VISIBLE_DEVICES=0 \ python test.py \ --experiment_name 128_shortcut1_inject1_none \ --test_int 1.0

-

Example of testing multiple attributes

CUDA_VISIBLE_DEVICES=0 \ python test_multi.py \ --experiment_name 128_shortcut1_inject1_none \ --test_atts Pale_Skin Male \ --test_ints 0.5 0.5

-

Example of attribute intensity control

CUDA_VISIBLE_DEVICES=0 \ python test_slide.py \ --experiment_name 128_shortcut1_inject1_none \ --test_att Male \ --test_int_min -1.0 \ --test_int_max 1.0 \ --n_slide 10

If you find AttGAN useful in your research work, please consider citing:

@article{he2017attgan,

title={Attgan: Facial Attribute Editing by Only Changing What You Want},

author={He, Zhenliang and Zuo, Wangmeng and Kan, Meina and Shan, Shiguang and Chen, Xilin},

journal={arXiv preprint arXiv:1711.10678},

year={2017}

}