This is the repository for the implementation of "Contained Neural Style Transfer for Decorated Logo Generation" by G. Atarsaikhan, B. K. Iwana and S. Uchida.

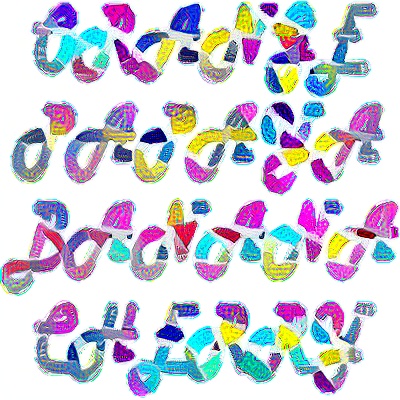

We propose a "distance transform loss" that is added to the existing loss functions of neural style transfer. The distance transform loss is the difference between the distance transform images of the content image and the generated image.
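A minimal NumPy/SciPy sketch of the idea, for illustration only. It assumes grayscale images in [0, 1] with a dark logo on a light background, binarizes them with a hypothetical `threshold`, computes each image's Euclidean distance transform with `scipy.ndimage.distance_transform_edt`, and takes the mean squared difference. The function names are hypothetical, and this is not the repository's TensorFlow implementation: thresholding is not differentiable, so this version cannot be used as-is for gradient-based optimization.

```python
import numpy as np
from scipy import ndimage


def distance_transform(gray, threshold=0.5):
    """Distance from each background pixel to the nearest foreground (logo) pixel.

    Assumption: `gray` is in [0, 1] with a dark shape on a light background.
    """
    background = gray > threshold  # True for background, False for the shape
    # distance_transform_edt measures distance to the nearest False (shape) pixel.
    return ndimage.distance_transform_edt(background)


def distance_transform_loss(content_gray, generated_gray, threshold=0.5):
    """Hypothetical loss: mean squared difference of the two distance transform images."""
    d_content = distance_transform(content_gray, threshold)
    d_generated = distance_transform(generated_gray, threshold)
    return float(np.mean((d_content - d_generated) ** 2))
```

Identical images give a loss of 0, and the loss grows as the shape in the generated image moves away from the shape in the content image.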

The distance transform loss is added to the neural style transfer network alongside the content and style losses.
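Assuming the three terms are combined as a weighted sum, the overall objective can be sketched as below. The weights `alpha`, `beta` and `gamma` and their defaults come from the parameter list of `StyleTransfer.py`; reading `gamma` as the weight of the distance transform loss is an assumption based on its description as the constraint strength, and `total_loss` is a hypothetical name.

```python
def total_loss(content_loss, style_loss, dt_loss, alpha=0.001, beta=0.8, gamma=0.001):
    """Assumed form of the objective: weighted sum of content, style and
    distance transform losses. Defaults mirror the documented alpha/beta/gamma."""
    return alpha * content_loss + beta * style_loss + gamma * dt_loss
```

Raising `gamma` relative to `beta` makes the optimization favor keeping the content shape over matching the style.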

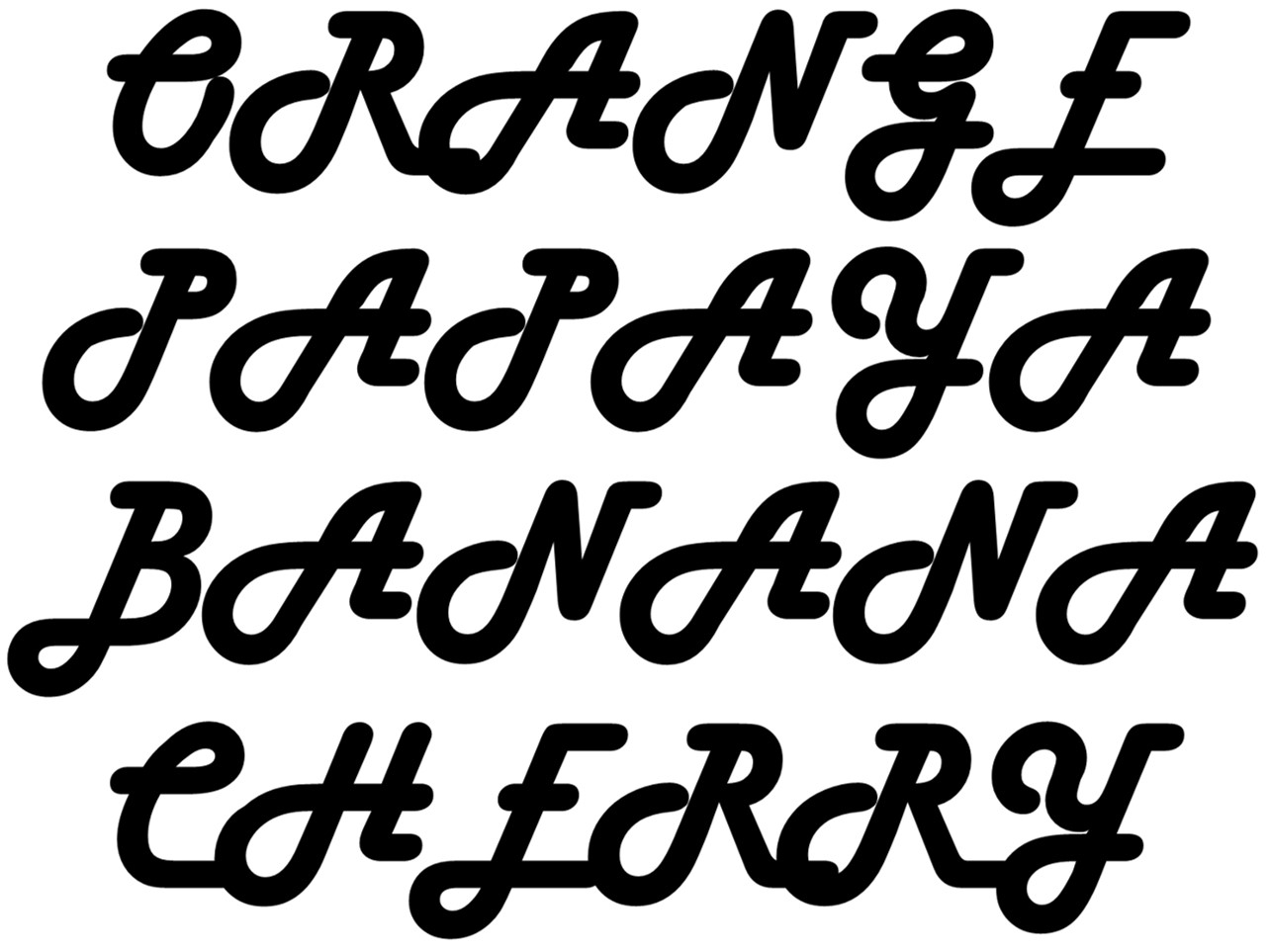

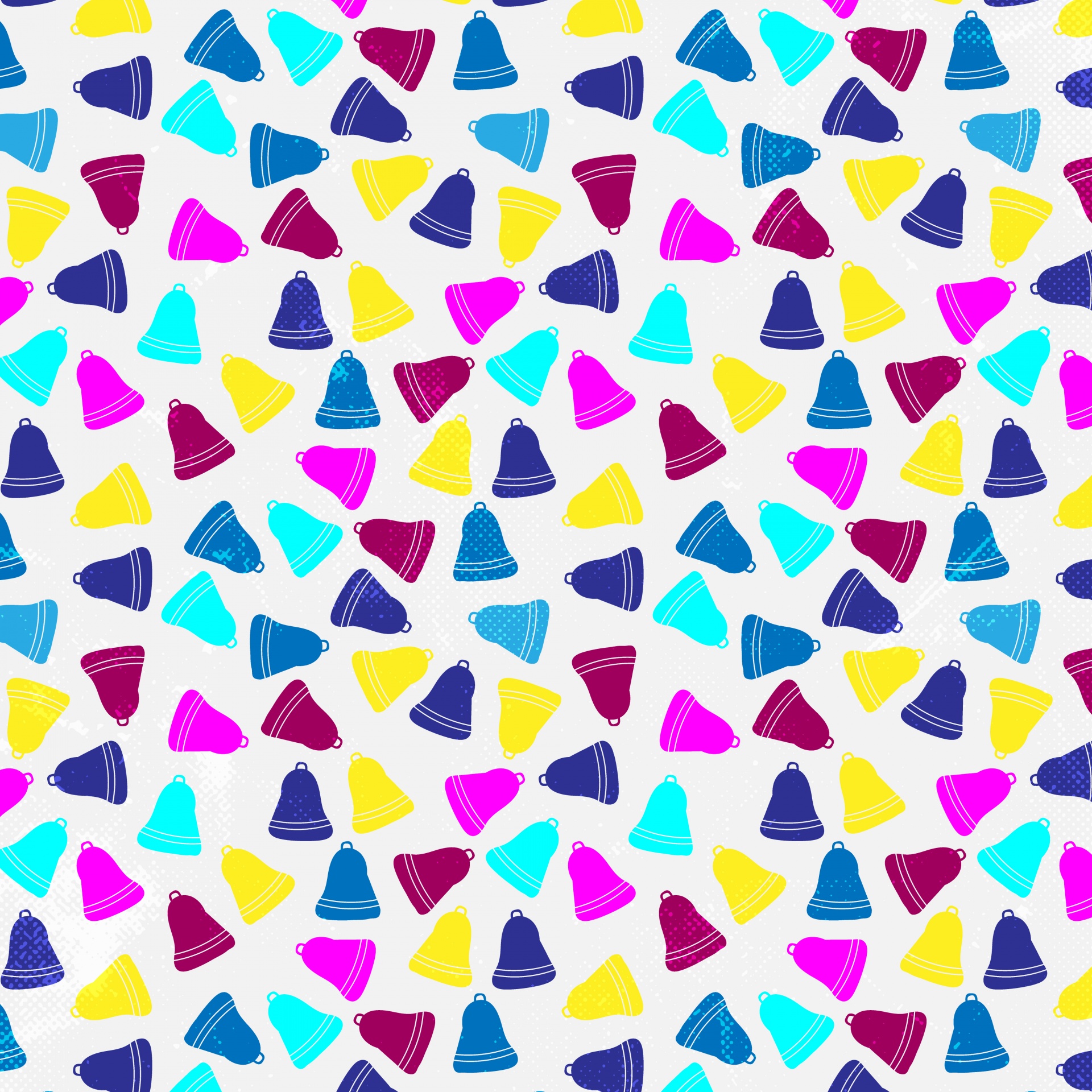

| Content image | Style image | Generated image |
|---|---|---|

Requirements:

- Python >= 3.5
- TensorFlow >= 1.8
- NumPy & SciPy
- OpenCV >= 3.x (cv2)
- Matplotlib

Download the pre-trained weights for the VGG network from here (~500MB) and place the file in the main folder.

Run the script with a content image and a style image:

```
python StyleTransfer.py -CONTENT_IMAGE <path_to_content_image> -STYLE_IMAGE <path_to_style_image>
```

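Default parameters, each of which can be overridden from the command line:

```python
alpha = 0.001               # Content loss weight; higher values put more emphasis on content. Override with -alpha
beta = 0.8                  # Style loss weight; higher values put more emphasis on style. Override with -beta
gamma = 0.001               # Constraint weight; higher values give a stronger constraint. Override with -gamma
EPOCH = 5000                # Number of epochs to run. Override with -epoch
IMAGE_WIDTH = 400           # Image width, which determines the image size. Override with -width
w1 = w2 = w3 = w4 = w5 = 1  # Weights of the style layers to use. Override with -w1 ~ -w5
```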
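The five style-layer weights `w1` to `w5` scale per-layer style losses. The sketch below uses the standard Gram-matrix style loss of Gatys et al. on NumPy feature maps of shape (height, width, channels); the normalization, the feature layout and the function names are assumptions, and the repository's TensorFlow code may differ.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix (channels x channels) of a feature map shaped (height, width, channels)."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    return flat.T @ flat


def layer_style_loss(gen_features, style_features):
    """Gatys-style loss for one layer: squared Gram difference scaled by 1 / (4 N^2 M^2)."""
    h, w, c = gen_features.shape
    n, m = c, h * w  # N = number of channels, M = number of spatial positions
    diff = gram_matrix(gen_features) - gram_matrix(style_features)
    return float(np.sum(diff ** 2) / (4.0 * n ** 2 * m ** 2))


def style_loss(gen_layers, style_layers, weights=(1, 1, 1, 1, 1)):
    """Weighted sum of per-layer losses; `weights` plays the role of w1..w5."""
    return sum(wt * layer_style_loss(g, s)
               for wt, g, s in zip(weights, gen_layers, style_layers))
```

With `weights=(1, 1, 1, 1, 1)`, matching the default `w1` to `w5`, all five layers contribute equally; setting a weight to 0 removes that layer's contribution.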

G. Atarsaikhan, B. K. Iwana and S. Uchida, "Contained Neural Style Transfer for Decorated Logo Generation," in Proceedings of the 13th IAPR International Workshop on Document Analysis Systems, 2018.

```
@inproceedings{contained_nst_2018,
  title={Contained Neural Style Transfer for Decorated Logo Generation},
  author={Atarsaikhan, Gantugs and Iwana, Brian Kenji and Uchida, Seiichi},
  booktitle={13th IAPR International Workshop on Document Analysis Systems},
  year={2018}
}
```