This code provides various benchmark (and legacy) tasks for evaluating quality of visual representations learned by various self-supervision approaches. This code corresponds to our work on Scaling and Benchmarking Self-Supervised Visual Representation Learning. The code is written in Python and uses Caffe2 frontend as available in PyTorch 1.0. We hope that this benchmark release will provided a consistent evaluation strategy that will allow measuring the progress in self-supervision easily.

The goal of fair_self_supervision_benchmark is to standardize the methodology for evaluating quality of visual representations learned by various self-supervision approaches. Further, it provides evaluation on a variety of tasks as follows:

Benchmark tasks: The benchmark tasks are based on principle: a good representation (1) transfers to many different tasks, and, (2) transfers with limited supervision and limited fine-tuning. The tasks are as follows.

- Image Classification

- Low-Shot Image Classification

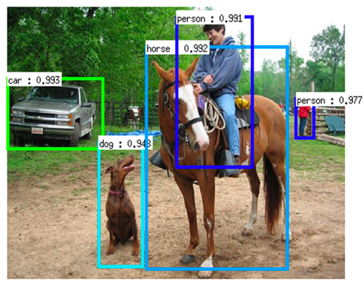

- Object Detection on VOC07 and VOC07+12 with frozen backbone for detectors:

These Benchmark tasks use the network architectures:

Legacy tasks: We also classify some commonly used evaluation tasks as legacy tasks for reasons mentioned in Section 7 of paper. The tasks are as follows:

- ImageNet-1K classification task

- VOC07 full finetuning

- Object Detection on VOC07 and VOC07+12 with full tuning for detectors:

fair_self_supervision_benchmark is CC-NC 4.0 International licensed, as found in the LICENSE file.

If you use fair_self_supervision_benchmark in your research or wish to refer to the baseline results published in the paper, please use the following BibTeX entry.

@article{goyal2019scaling,

title={Scaling and Benchmarking Self-Supervised Visual Representation Learning},

author={Goyal, Priya and Mahajan, Dhruv and Gupta, Abhinav and Misra, Ishan},

journal={arXiv preprint arXiv:1905.01235},

year={2019}

}

Please find installation instructions in INSTALL.md.

After installation, please see GETTING_STARTED.md for how to run various benchmark tasks.

We provide models used in our paper in the Model Zoo.

- Scaling and Benchmarking Self-Supervised Visual Representation Learning. Priya Goyal, Dhruv Mahajan, Abhinav Gupta, Ishan Misra. Tech report, arXiv, May 2019.