A real-world demo of a Deep Learning project, including preprocessing, taken from development to production using coding, ops, and Machine Learning best practices. In production, the model is served through a real-time REST API.

Competences

- Code / Debug

- Unit tests, Test-Driven Development principles

- Clean Code, Dependency Inversion, Functional Programming

- Build & Publish packages

- Git

- Linux Bash

- REST API

- Docker

- Kubernetes

Tools

- PyCharm (https://www.jetbrains.com/pycharm/)

- Python, pip, Pipenv, Conda

- Git, GitHub & GitHub Actions

- AzureML (https://azure.microsoft.com/fr-fr/free/students?WT.mc_id=DOP-MVP-5003370)

- Docker

- Kubernetes, with OpenShift or Azure

The name of our company: MLOps

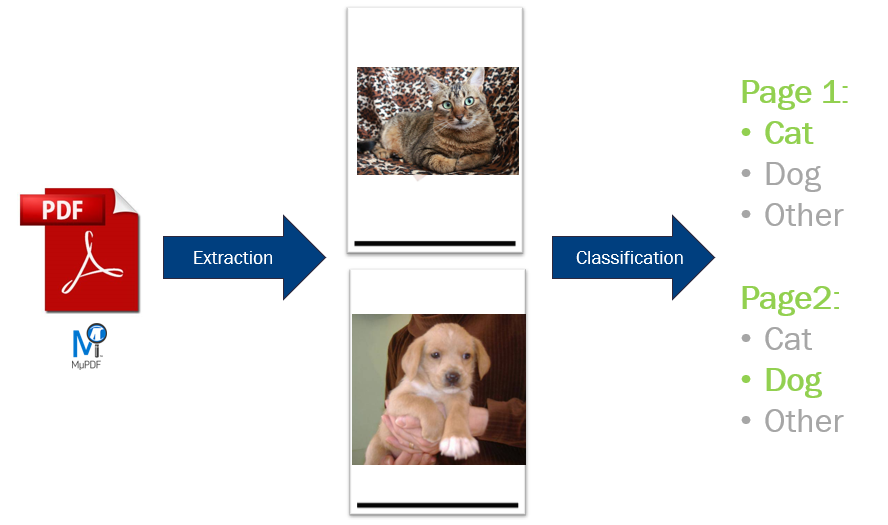

Today, a team of indexers receives 10,000 PDF files a day, each containing a cat, a dog, or something else. They must open every PDF manually to classify it.

We are going to automate this process: a machine learning model will classify the PDF files, and we will expose it as a real-time REST API.
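To make the target concrete, here is a minimal sketch of such an endpoint using only the Python standard library. Everything named here is an assumption for illustration: the `/classify` route, the `classify_pdf` placeholder (which always answers `"other"` instead of calling a real model), and the JSON response shape are not taken from the project itself.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# The three classes described above.
LABELS = ("cat", "dog", "other")


def classify_pdf(pdf_bytes: bytes) -> str:
    """Hypothetical placeholder for the trained model: always answers "other"."""
    # A real implementation would extract the images from the PDF
    # and run the model on them.
    return "other"


def build_response(pdf_bytes: bytes) -> tuple[int, bytes]:
    """Turn an uploaded PDF into an (HTTP status, JSON body) pair."""
    if not pdf_bytes:
        return 400, json.dumps({"error": "empty request body"}).encode("utf-8")
    return 200, json.dumps({"label": classify_pdf(pdf_bytes)}).encode("utf-8")


class ClassifyHandler(BaseHTTPRequestHandler):
    """Answers POST /classify with the predicted label as JSON."""

    def do_POST(self):
        if self.path != "/classify":
            self.send_error(404, "Unknown route")
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = build_response(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host: str = "127.0.0.1", port: int = 8000) -> HTTPServer:
    """Build the server; call serve_forever() on the result to start it."""
    return HTTPServer((host, port), ClassifyHandler)
```

Calling `make_server().serve_forever()` starts the sketch locally; a client then sends the raw PDF bytes in a POST request to `/classify`. Keeping `build_response` separate from the HTTP handler lets the classification logic be unit tested without opening a socket.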

MLOps is involved in the entire life cycle of an AI project. It covers everything that allows your AI project to go into production and then stay there.

No MLOps practices, no production.

The deliverable of a project is not the AI model itself; it is the way the AI model is generated.

The model must be reproducible.

The deliverable is:

- Versioned data

- Versioned code
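One way to picture this pairing is a small manifest that records exactly which data and which code produced a model. The sketch below is an illustration only, not the project's actual tooling: `dataset_fingerprint`, `training_manifest`, and the manifest keys are hypothetical names, and the code version is assumed to be a string such as a Git commit hash supplied by the caller.

```python
import hashlib
from pathlib import Path


def dataset_fingerprint(data_dir: str) -> str:
    """Return a SHA-256 digest covering every file name and file content."""
    root = Path(data_dir)
    digest = hashlib.sha256()
    files = (p for p in root.rglob("*") if p.is_file())
    # Sort by relative path so the digest does not depend on listing order.
    for path in sorted(files, key=lambda p: p.relative_to(root).as_posix()):
        digest.update(path.relative_to(root).as_posix().encode("utf-8"))
        digest.update(b"\0")
        digest.update(path.read_bytes())
    return digest.hexdigest()


def training_manifest(data_dir: str, code_version: str) -> dict:
    """Pair the data fingerprint with the code version used for training."""
    return {
        "data_sha256": dataset_fingerprint(data_dir),
        "code_version": code_version,  # assumed to be e.g. a Git commit hash
    }
```

Two training runs with the same manifest start from the same inputs, which is the precondition for regenerating the same model. Any change to a data file changes `data_sha256`.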

We will use the following project structure. We will work together in a single Git mono-repository. This makes it possible to retrain a model and deploy it to production with at most one manual action.

Technology choices have a huge impact on your development cost. You cannot use a hundred libraries and expect good results in a short time: the project would be a nightmare to maintain and debug.

Enterprises use a Technology Radar to manage and maintain their technology choices: https://github.com/axa-group/radar