Wuhan Textile University, Wuhan City, Hubei Province, China

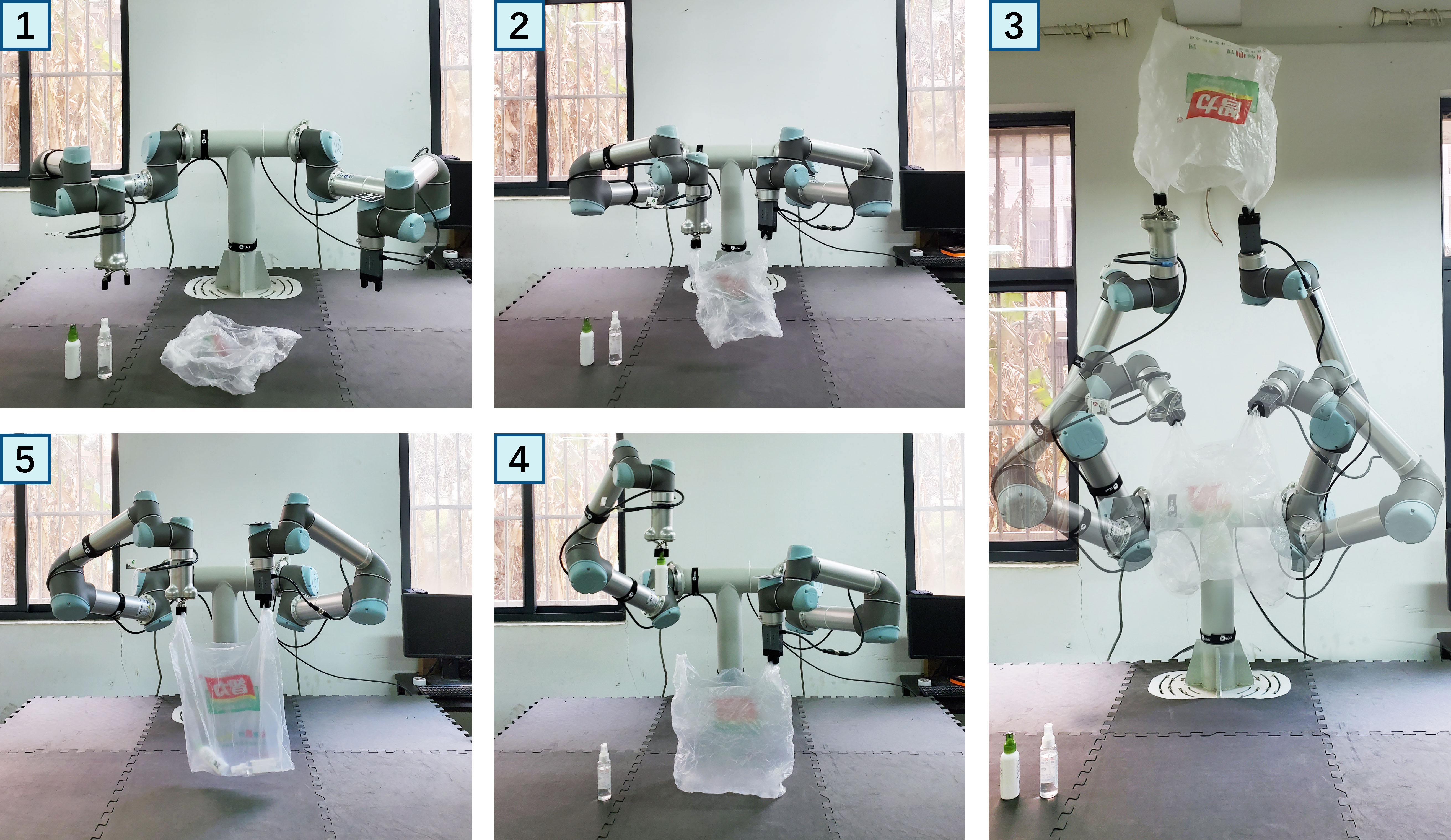

This repository contains code for training and evaluating ShakingBot in both simulation and real-world settings on a dual-UR5 robot arm setup. It targets Ubuntu 18.04 and has been tested on machines with an Nvidia GeForce RTX 2080 Ti GPU.

- 1 Data Collection
  - 1.1 Explanation
  - 1.2 Installation
  - 1.3 Show Datasets
- 2 Get Datasets
- 3 Network Training

Kinect V2-based data collection code:

- The `get_rgb_depth` folder is used to capture the raw Kinect V2 data. The RGB image size is 1920×1080 and the depth image size is 512×424.

- Because the RGB and depth images have different resolutions, they need to be aligned. The `colorized_depth` folder is used to align the images.

- The `final_datasets` folder is used to process the images to the target resolution and generate the .png and .npy files.

- The `all_tools` folder includes the hardware kit that lets MATLAB connect to the Kinect V2.
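The last processing step (bringing frames to a target resolution and storing them as .npy) can be illustrated in Python. This is a minimal sketch under stated assumptions, not the code in `final_datasets`: the helper `resize_nearest`, the 256×256 target size, and the file name `depth_0000.npy` are all hypothetical, and random data stands in for a real aligned Kinect V2 depth frame.

```python
import os
import tempfile

import numpy as np


def resize_nearest(image: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Resize an H x W (x C) array with nearest-neighbour style sampling."""
    in_h, in_w = image.shape[:2]
    # Map every output row/column back to a source row/column (floor mapping).
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    # Broadcasting the two index arrays yields an out_h x out_w (x C) result.
    return image[rows[:, None], cols]


# Stand-in for an aligned depth frame at the Kinect V2 depth resolution.
depth = np.random.rand(424, 512).astype(np.float32)

# 256 x 256 is a hypothetical target size chosen only for this sketch.
target = resize_nearest(depth, 256, 256)

# Save to a temporary folder and read the array back.
out_dir = tempfile.mkdtemp()
npy_path = os.path.join(out_dir, "depth_0000.npy")  # hypothetical file name
np.save(npy_path, target)
restored = np.load(npy_path)
print(target.shape, restored.dtype)
```

The same helper works on an RGB array of shape (1080, 1920, 3). Writing the .png files would additionally need an image library such as Pillow or OpenCV, which is left out here to keep the sketch dependent on NumPy only.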

Requirements

- Ubuntu 18.04

- MATLAB R2020a

- Kinect V2

- Preprocess the images collected with the Kinect V2:

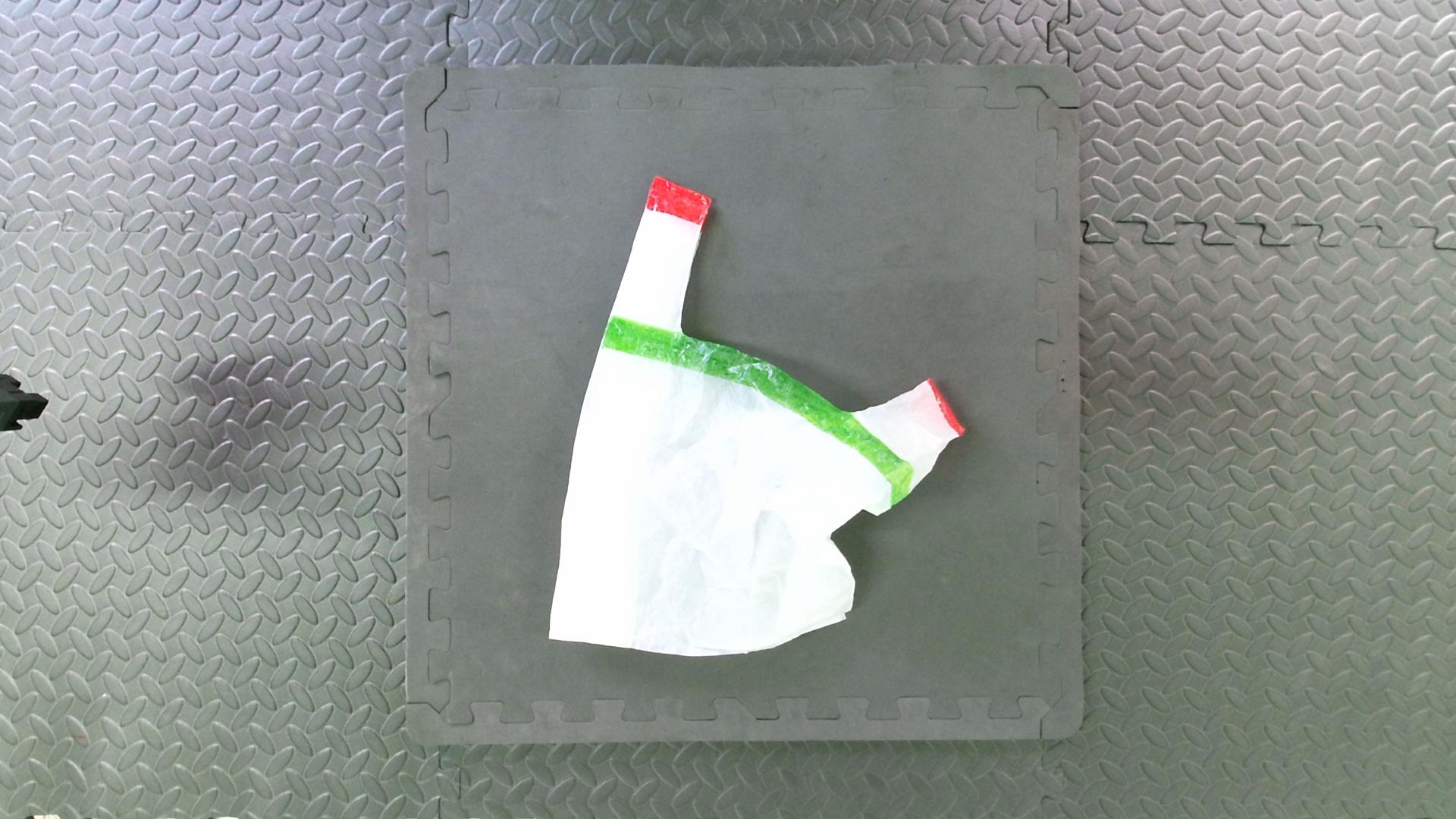

The paint color can be changed to suit the pattern color of the bag; the color shown here is the paint used on one of the bags.

`cd get_datasets && python get_rgb_npy.py`


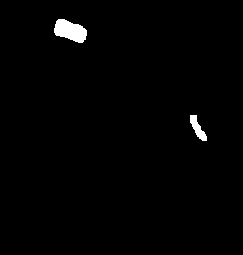

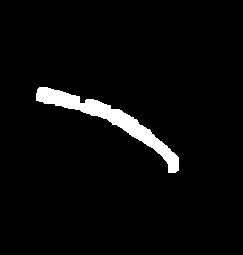

- Get the dataset labels:

`python color_dectect.py`
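Since the labels depend on the paint color, one common way to produce them is to threshold that color into a binary mask. The sketch below illustrates the idea only and is an assumption, not the contents of `color_dectect.py`: the function `color_mask` and the RGB bounds are hypothetical, and a tiny synthetic image stands in for a real frame.

```python
import numpy as np


def color_mask(rgb: np.ndarray, lower, upper) -> np.ndarray:
    """Return a uint8 mask: 1 where a pixel lies inside the RGB range, else 0."""
    lower = np.asarray(lower, dtype=np.uint8)
    upper = np.asarray(upper, dtype=np.uint8)
    # A pixel counts as painted only if all three channels are within bounds.
    inside = np.all((rgb >= lower) & (rgb <= upper), axis=-1)
    return inside.astype(np.uint8)


# Tiny synthetic image: one reddish "painted" pixel on a grey background.
image = np.full((2, 2, 3), 128, dtype=np.uint8)
image[0, 1] = (220, 30, 40)

# Hypothetical bounds for red paint; tune them to the paint color of the bag.
mask = color_mask(image, lower=(180, 0, 0), upper=(255, 80, 80))
print(mask)
```

For real images, thresholding in HSV space is often more robust to lighting than raw RGB bounds, so the ranges here should be treated as placeholders.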

- Make a new virtualenv or conda env. For example, if you're using conda envs, run this to make and then activate the environment:

`conda create -n shakingbot python=3.6 -y && conda activate shakingbot`

- Run `pip install -r requirements.txt` to install dependencies:

`cd network_training && pip install -r requirements.txt`

- In the repo's root, get the RGB images and depth maps.

- In the `configs` folder, modify `segmentation.json`.
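The config edit can be done by hand or scripted. The following is a minimal sketch of the scripted route using only the Python standard library. The helper `update_config` is hypothetical, and the keys `batch_size` and `data_dir` are placeholders invented for the demo; they are not the real fields of `configs/segmentation.json`, so check the actual file before adapting this.

```python
import json
import os
import tempfile


def update_config(path: str, **overrides) -> dict:
    """Load a JSON config, apply overrides, write it back, return the result."""
    with open(path, "r", encoding="utf-8") as f:
        config = json.load(f)
    config.update(overrides)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(config, f, indent=2)
    return config


# Demo on a throwaway file; the keys below are hypothetical placeholders,
# not the real fields of configs/segmentation.json.
demo_path = os.path.join(tempfile.mkdtemp(), "segmentation.json")
with open(demo_path, "w", encoding="utf-8") as f:
    json.dump({"batch_size": 4, "data_dir": "datasets"}, f)

updated = update_config(demo_path, batch_size=8)
print(updated)
```

Note that `dict.update` only replaces top-level keys; a nested config would need a recursive merge instead.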

- Train the Region Perception model:

`python train.py`

- In the repo's root, download the model weights.

- Then validate the model from scratch with:

`python visualize.py`

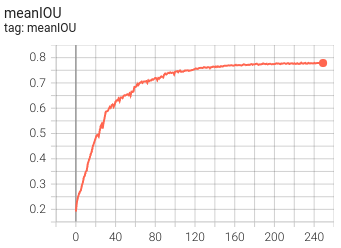

- Training details can be viewed with TensorBoard:

`cd network_training && tensorboard --logdir train_runs/`