Liuxin Qing* · Longteng Duan* · Guo Han* · Shi Chen*

(* Equal Contribution)

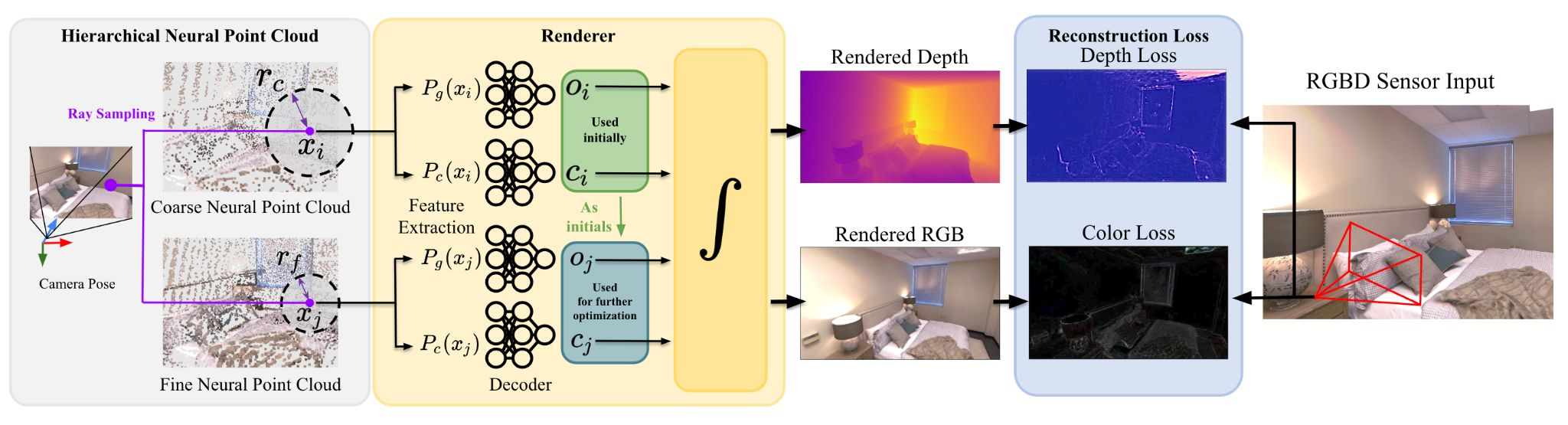

The pipeline of hierarchical Point-SLAM

- A coarse-to-fine hierarchical neural point cloud, with a different dynamic sampling radius at each level.

- In mapping: optimize the neural features in coarse and fine point clouds independently.

- In tracking: first optimize the camera pose using coarse-level features, then integrate fine-level features for further refinement.
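The coarse-to-fine tracking order described above can be pictured as a per-iteration schedule. The snippet below is only a minimal sketch: `tracking_schedule` and `coarse_ratio` are hypothetical names that do not come from this repository, and it assumes that the fine level is added on top of the coarse level once a fixed share of the iterations has been spent.

```python
from typing import List, Tuple


def tracking_schedule(total_iters: int, coarse_ratio: float = 0.5) -> List[Tuple[str, ...]]:
    """Return, for every tracking iteration, the feature levels that are active.

    Hypothetical helper for illustration only; the real logic lives in src/Tracker.py.
    """
    if total_iters < 0:
        raise ValueError("total_iters must be non-negative")
    if not 0.0 <= coarse_ratio <= 1.0:
        raise ValueError("coarse_ratio must lie in [0, 1]")

    # Assumption: the first share of iterations uses coarse features only.
    n_coarse = int(total_iters * coarse_ratio)
    coarse_only = [("coarse",)] * n_coarse
    # Assumption: the remaining iterations use coarse and fine features together.
    coarse_and_fine = [("coarse", "fine")] * (total_iters - n_coarse)
    return coarse_only + coarse_and_fine


schedule = tracking_schedule(total_iters=6)
for i, levels in enumerate(schedule):
    print(i, levels)
```

With six iterations and a ratio of 0.5, the first three iterations use only the coarse level and the last three use both levels.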

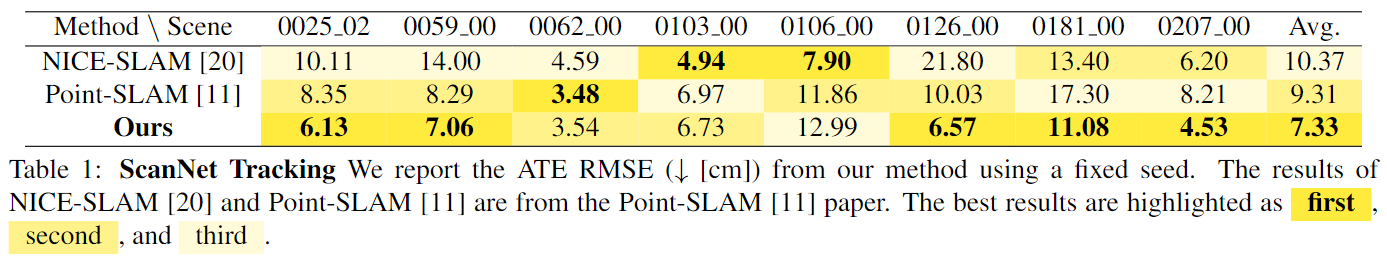

- Our results are shown below.

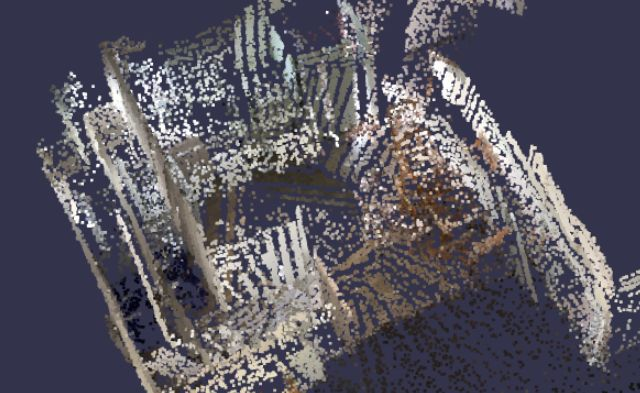

A comparison of the resulting neural point clouds, taken from ScanNet scene 0181 at frame 2438. Left: Point-SLAM. Right: Ours.


Here we provide an overview of the repository's structure. Brief comments are added for explanation.

Directory structure of this repository:

Hierarchical-Point-SLAM
│  env.yaml                 # environment settings
│  README.md
│  repro_demo.sh            # a demo shell file can be run on Euler cluster
│  run.py
│
├─configs                   # include all config files
│  │  point_slam.yaml       # point-slam general config
│  │
│  └─ScanNet
│          scannet.yaml     # point-slam config for scannet
│          scenexxxx.yaml   # scannet scene specific configs
│
├─documents
│      poster.pdf
│      report.pdf
│
├─imgs                      # images shown in readme
│      xxx.png
│
├─pretrained                # pretrained model for conv_onet
│      coarse.pt
│      color.pt
│      middle_fine.pt
│
└─src                       # include all core source files
    │  common.py
    │  config.py
    │  Mapper.py            # mapping process
    │  neural_point.py      # neural point cloud data class
    │  Point_SLAM.py
    │  Tracker.py           # tracker process
    │  __init__.py
    │
    ├─conv_onet
    │  │  config.py
    │  │  __init__.py
    │  │
    │  └─models
    │          decoder.py   # MLP decoder for color and geometry information decoding
    │          __init__.py
    │
    ├─tools
    │      cull_mesh.py
    │      eval_ate.py
    │      eval_recon.py
    │      get_mesh_tsdf_fusion.py
    │
    └─utils
            datasets.py
            Logger.py
            Renderer.py
            Visualizer.py

One can create an anaconda environment called point-slam.

conda env create -f env.yaml

conda activate point-slam

To evaluate the F-score, please install the evaluate_3d_reconstruction_lib library.

git clone https://github.com/tfy14esa/evaluate_3d_reconstruction_lib.git

cd evaluate_3d_reconstruction_lib

pip install .
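For readers unfamiliar with the metric, the F-score for 3D reconstruction is commonly defined as the harmonic mean of precision and recall at a distance threshold. The snippet below is a brute-force sketch of that definition in pure Python. It is not the API of evaluate_3d_reconstruction_lib; the function names and the threshold `tau` are assumptions made for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def _fraction_within(src: Sequence[Point], dst: Sequence[Point], tau: float) -> float:
    """Fraction of points in src whose nearest neighbour in dst is at most tau away."""
    hits = sum(1 for p in src if min(math.dist(p, q) for q in dst) <= tau)
    return hits / len(src)


def f_score(recon: Sequence[Point], gt: Sequence[Point], tau: float) -> float:
    """Harmonic mean of precision and recall at distance threshold tau.

    Illustrative brute-force version (O(N*M)); real tools use spatial indices.
    """
    precision = _fraction_within(recon, gt, tau)  # reconstructed points close to ground truth
    recall = _fraction_within(gt, recon, tau)     # ground-truth points covered by the reconstruction
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Toy point sets (made-up values, not results from this project).
recon_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
gt_points = [(0.0, 0.0, 0.01), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
score = f_score(recon_points, gt_points, tau=0.05)
print(f"F-score: {score:.3f}")
```

In this toy example two of three points match in each direction, so precision and recall are both 2/3 and the F-score is 2/3 as well.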

Comment out line 120 (open3d==0.16.0) in env.yaml before proceeding; Open3D is built from source in the steps below.

module load StdEnv mesa/18.3.6 cudnn/8.2.1.32 python_gpu/3.10.4 eth_proxy hdf5/1.10.1 gcc/8.2.0 openblas/0.3.15 nccl/2.11.4-1 cuda/11.3.1 pigz/2.4 cmake/3.25.0

cd $SCRATCH

git clone git@github.com:guo-han/Hierarchical-Point-SLAM.git

cd $SCRATCH/Hierarchical-Point-SLAM

conda env create -f env.yaml python=3.10.4

conda activate point-slam

cd $SCRATCH

mkdir ext_lib

cd ext_lib

git clone --recursive https://github.com/intel-isl/Open3D

cd Open3D

git checkout tags/v0.9.0

git describe --tags --abbrev=0

git submodule update --init --recursive

mkdir build

cd build

cmake -DENABLE_HEADLESS_RENDERING=ON -DBUILD_GUI=OFF -DBUILD_WEBRTC=OFF -DUSE_SYSTEM_GLEW=OFF -DUSE_SYSTEM_GLFW=OFF -DPYTHON_EXECUTABLE=/cluster/home/guohan/miniconda3/envs/point-slam/bin/python .. # Remember to change `-DPYTHON_EXECUTABLE` to the Python path of your own conda environment

make -j$(nproc)

make install-pip-package

cd ../..

git clone https://github.com/tfy14esa/evaluate_3d_reconstruction_lib.git

cd evaluate_3d_reconstruction_lib

pip install .

For more information, see the installation instructions of Open3D and evaluate_3d_reconstruction_lib.

Please follow the data downloading procedure on the ScanNet website, and extract color/depth frames from the .sens file using this code.

Directory structure of ScanNet:

DATAROOT is ./Datasets by default. If a sequence (sceneXXXX_XX) is stored in other places, please change the input_folder path in the config file or in the command line.

DATAROOT
└── scannet
    └── scans
        └── scene0000_00
            └── frames
                ├── color
                │   ├── 0.jpg
                │   ├── 1.jpg
                │   ├── ...
                │   └── ...
                ├── depth
                │   ├── 0.png
                │   ├── 1.png
                │   ├── ...
                │   └── ...
                ├── intrinsic
                └── pose
                    ├── 0.txt
                    ├── 1.txt
                    ├── ...
                    └── ...
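Before launching a run, it can help to confirm that an extracted sequence matches the layout above. The following is a small sketch using only the standard library. `missing_scene_parts` is a hypothetical helper that is not part of this repository, and it assumes one .jpg, one .png and one .txt per frame, as the tree suggests.

```python
from pathlib import Path
from typing import List


def missing_scene_parts(scene_dir: Path) -> List[str]:
    """Return a list of problems found in a ScanNet scene folder (empty if none)."""
    frames = scene_dir / "frames"
    problems: List[str] = []

    # Every entry shown in the directory tree must exist.
    for sub in ("color", "depth", "intrinsic", "pose"):
        if not (frames / sub).exists():
            problems.append(f"missing: {frames / sub}")

    def count(sub: str, pattern: str) -> int:
        folder = frames / sub
        return len(list(folder.glob(pattern))) if folder.is_dir() else 0

    # Assumption: color, depth and pose hold exactly one file per frame.
    counts = {
        "color": count("color", "*.jpg"),
        "depth": count("depth", "*.png"),
        "pose": count("pose", "*.txt"),
    }
    if len(set(counts.values())) != 1:
        problems.append(f"frame counts differ: {counts}")
    elif counts["color"] == 0:
        problems.append("no frames found")
    return problems


if __name__ == "__main__":
    # Default DATAROOT is ./Datasets; adjust the path to your own sequence.
    for problem in missing_scene_parts(Path("./Datasets/scannet/scans/scene0181_00")):
        print(problem)
```

An empty result means the folder structure and the per-frame file counts are consistent. It does not validate the image contents or the pose files themselves.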

We use scene 0181 of ScanNet as the demo. The demo scene is available at the following link:

scene0181_00

All configs can be found under the ./configs folder.

- Open the ./configs/ScanNet/scenexxxx.yaml files.
  - Check and modify the data/input_folder path in configuration files according to your dataset location.
- In the ./configs/point_slam.yaml file, set the wandb_dir variable to your own path.
  - Ensure that the specified path exists and is accessible.
- In the ./configs/ScanNet/scannet.yaml file, locate the variables:
  - pointcloud/radius_hierarchy/mid/radius_add_max
  - pointcloud/radius_hierarchy/mid/radius_add_min
- Adjust the values of these variables to experiment with different coarse level radius values.
- To change the number of iterations for mapping and tracking, refer to the variables:
  - tracking/iters
  - mapping/iters
- Note that these variables control the total number of iterations for both levels. The default ratio of iteration division between the two levels is 0.5. If you want to modify the mapping iteration ratio for the middle level specifically, check the variable mapping/mid_iter_ratio in ./configs/point_slam.yaml.
- Take into account that the settings specified in point_slam.yaml will be overwritten by those in scannet.yaml if they share the same key values.
  - Exercise caution when modifying variables to ensure that you are changing the intended ones.
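The override behaviour and the iteration split described above can be illustrated with plain dictionaries. This is a sketch under assumptions: `merge_configs` is a hypothetical helper (the actual loader is in src/config.py and may behave differently), the numeric values are made up, and `mid_iter_ratio` is assumed to be the share of mapping iterations given to the middle level.

```python
from copy import deepcopy
from typing import Any, Dict


def merge_configs(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge two configs; values in override win on shared keys."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are nested sections: merge them key by key.
            merged[key] = merge_configs(merged[key], value)
        else:
            # Leaf value (or type mismatch): the override replaces the base.
            merged[key] = deepcopy(value)
    return merged


# Stand-ins for point_slam.yaml and scannet.yaml (illustrative values only).
general = {"mapping": {"iters": 300, "mid_iter_ratio": 0.5}, "wandb_dir": "/path/to/wandb"}
dataset = {"mapping": {"iters": 150}}

cfg = merge_configs(general, dataset)

# Assumed split of the total mapping iterations between the two levels.
mid_iters = int(cfg["mapping"]["iters"] * cfg["mapping"]["mid_iter_ratio"])
fine_iters = cfg["mapping"]["iters"] - mid_iters
print(cfg["mapping"]["iters"], mid_iters, fine_iters)
```

Here the dataset-level value of mapping/iters replaces the general one, while mapping/mid_iter_ratio and wandb_dir are kept because the dataset config does not define them.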

Before running, make sure to read the Configs section and modify all necessary configs for your run.

To run on ScanNet, for example on scene0181, use the following command:

python run.py configs/ScanNet/scene0181.yaml

Please remember to modify --output, --error, source [conda env path], output_affix, etc. inside repro_demo.sh, as well as wandb_dir in configs/point_slam.yaml.

cd $SCRATCH/Hierarchical-Point-SLAM

sbatch repro_demo.sh # run the job, remember to check args before each run

This project was undertaken as part of the 2023FS 3DV course at ETH Zurich. We would like to express our sincere appreciation for the valuable guidance and support provided by our supervisor, Erik Sandström.