Update (August 16, 2017): TensorFlow implementation of Action Recognition using Visual Attention, which introduces an attention-based action recognition discriminator. Given a video as input, the model shifts its attention across the frames, labels each frame, and selects the most frequently occurring label as the final label of the video.
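The attention step can be pictured as soft attention in the style of Show, Attend and Tell. At each frame, every spatial location of the frame's CNN feature map receives a relevance score, a softmax turns the scores into weights, and the weighted average of the location features is passed on to the recurrent classifier. The sketch below is a minimal pure-Python illustration under assumed shapes (a list of per-location feature vectors plus precomputed scores). The function names are hypothetical, and this is not the repository's TensorFlow code; it assumes the repository follows the soft-attention scheme of the referenced papers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(features, scores):
    """Return (weights, context) for one frame.

    features: list of K feature vectors (one per spatial location), each of length D.
    scores:   list of K unnormalized relevance scores (in a real model these would
              come from the recurrent network's previous hidden state; here they
              are simply given).
    """
    weights = softmax(scores)
    dim = len(features[0])
    # Context vector: attention-weighted average of the location features.
    context = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    return weights, context


# Toy frame with K = 3 locations and D = 2 feature dimensions.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Equal scores give uniform weights (1/3 each), so the context is the mean feature.
weights, context = soft_attention(features, [0.0, 0.0, 0.0])
print(weights)
print(context)
```

Raising one location's score concentrates almost all of the weight on that location, which is what "shifting attention" means in this picture.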

Author's Theano implementation: https://github.com/kracwarlock/action-recognition-visual-attention

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention: https://arxiv.org/abs/1502.03044

Show, Attend and Tell's TensorFlow implementation: https://github.com/yunjey/show-attend-and-tell

Data used: http://users.eecs.northwestern.edu/~jwa368/my_data.html

First, clone this repository and download the video data used in the experiments of this project.

$ git clone https://github.com/Adopteruf/Action_Recognition_using_Visual_Attention

$ wget users.eecs.northwestern.edu/~jwa368/data/multiview_action_videos.tgz

Extracting the downloaded video data and copying it into '/data/image/' can be done manually. This code is written in Python 2.7 and requires TensorFlow. Several other packages and files also need to be installed. Running the commands below installs the necessary tools and downloads the VGGNet19 model into the 'data/' directory.

$ cd Action_Recognition_using_Visual_Attention

$ pip2 install -r Needed_Package.txt

$ sudo add-apt-repository ppa:kirillshkrogalev/ffmpeg-next

$ sudo apt-get update

$ sudo apt-get install ffmpeg

$ wget http://www.vlfeat.org/matconvnet/models/imagenet-vgg-verydeep-19.mat -P data/

The script pre-data.py breaks the downloaded videos into frame images and distributes them into three partitions, 'train/', 'val/', and 'test/', in the proportion 0.6:0.2:0.2.
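As a rough illustration of the 0.6:0.2:0.2 partition, the sketch below shuffles a list of video names with a fixed seed and cuts it into train/val/test lists. It assumes the split is made per video (so all frames of one video land in the same partition). Both that assumption and the hypothetical helper `split_videos` are illustrative and not taken from pre-data.py.

```python
import random


def split_videos(videos, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle video names and split them into train/val/test by the given ratios."""
    videos = list(videos)
    random.Random(seed).shuffle(videos)  # fixed seed keeps the split reproducible
    n_train = int(round(len(videos) * ratios[0]))
    n_val = int(round(len(videos) * ratios[1]))
    return {
        'train': videos[:n_train],
        'val': videos[n_train:n_train + n_val],
        'test': videos[n_train + n_val:],  # the remainder, roughly 20%
    }


parts = split_videos(['video_%02d.avi' % i for i in range(10)])
print({name: len(files) for name, files in parts.items()})
```

Splitting by video rather than by frame keeps near-identical consecutive frames of one video from appearing in both the training and the test partitions.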

$ python pre-data.py

Then, extract features from the prepared images for use in training.

$ python CNN.py

Run the command below to train the action recognition model.

$ python train.py

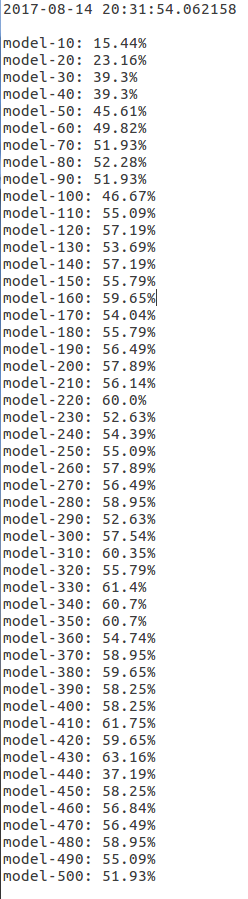

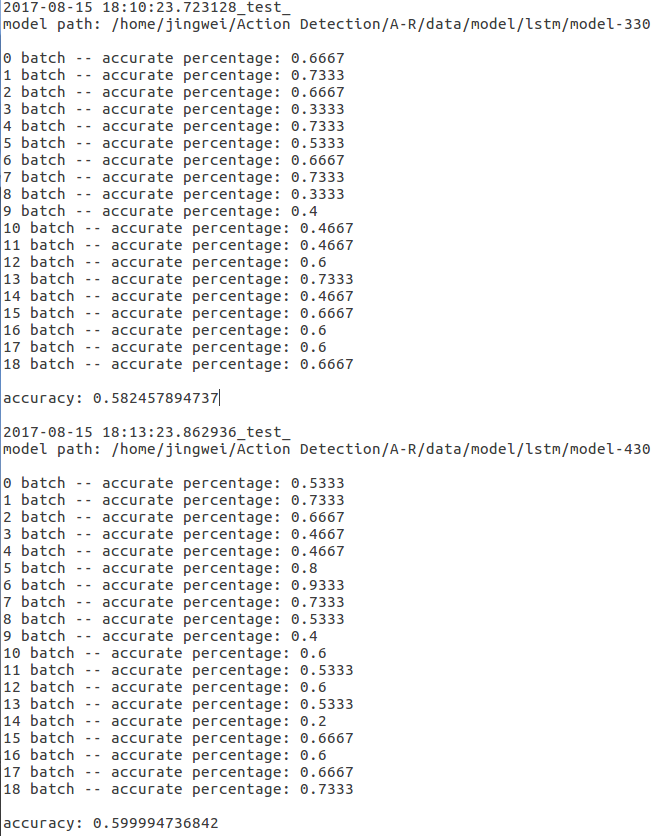

After this command is entered, the model is trained on the 'train' dataset, and every model saved during training is evaluated on the 'val' dataset. The user can then pick the best-performing model and evaluate it on the 'test' dataset.
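Picking a checkpoint amounts to computing validation accuracy for each saved model and keeping the best one. The sketch below assumes each checkpoint's predictions on the validation videos are already available as lists of label indices. The checkpoint names, the toy labels, and the helper `select_best_checkpoint` are all hypothetical.

```python
def accuracy(predicted, actual):
    """Fraction of videos whose predicted label matches the ground truth."""
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return float(correct) / len(actual)


def select_best_checkpoint(val_predictions, val_labels):
    """Return (checkpoint_name, accuracy) with the highest validation accuracy."""
    scores = {name: accuracy(preds, val_labels) for name, preds in val_predictions.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


val_labels = [2, 6, 6, 1]
val_predictions = {
    'model-1': [2, 1, 6, 1],  # 3 of 4 correct
    'model-2': [2, 6, 6, 1],  # 4 of 4 correct
    'model-3': [6, 6, 1, 1],  # 2 of 4 correct
}
print(select_best_checkpoint(val_predictions, val_labels))  # ('model-2', 1.0)
```

Only the selected checkpoint is then run on 'test', so the test accuracy is not inflated by the selection step.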

Here is one experimental test case.

'stand up': 2

'pick up with one hand': 6

The selected labels for the 17 frames are: 2 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6

The final label: pick up with one hand
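The final label is the most frequent per-frame label. The following minimal sketch applies that majority vote to the test case above using `collections.Counter`. The label dictionary holds only the two classes shown, and `video_label` is a hypothetical helper. Ties are resolved by whatever order `most_common` returns, which is an assumption and not necessarily what the repository does.

```python
from collections import Counter

# Label indices taken from the test case above.
LABEL_NAMES = {2: 'stand up', 6: 'pick up with one hand'}


def video_label(frame_labels):
    """Return the most frequent per-frame label (majority vote)."""
    label, _count = Counter(frame_labels).most_common(1)[0]
    return label


frame_labels = [2] + [6] * 16  # the 17 per-frame labels reported above
final = video_label(frame_labels)
print(LABEL_NAMES[final])  # pick up with one hand
```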

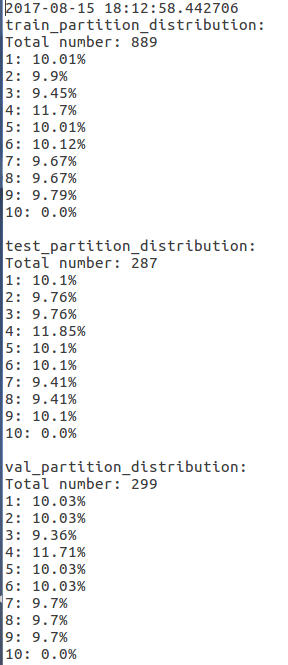

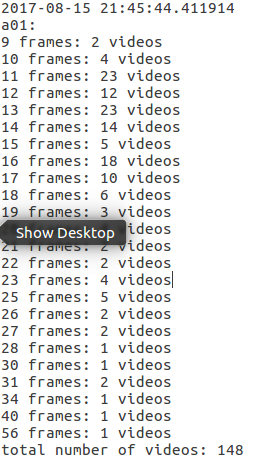

In addition, the scripts Data_Scanning.py and Image_Scanning.py can be used to measure the data distribution of the corresponding dataset. For example:

$ python Data_Scanning.py

$ python Image_Scanning.py
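A sketch of the kind of count such a scan can report: the number of image files per class in each partition. It assumes a hypothetical layout data/image/<partition>/<class>/<frame files> and .jpg/.png frames, either of which may differ from what pre-data.py actually writes. The helper `count_images` is not taken from Data_Scanning.py or Image_Scanning.py.

```python
import os


def count_images(root, partitions=('train', 'val', 'test'), exts=('.jpg', '.png')):
    """Count image files per class in each partition under root.

    Assumed (hypothetical) layout: root/<partition>/<class_name>/<image files>.
    Missing partitions are reported as empty dictionaries.
    """
    counts = {}
    for part in partitions:
        part_dir = os.path.join(root, part)
        counts[part] = {}
        if not os.path.isdir(part_dir):
            continue
        for class_name in sorted(os.listdir(part_dir)):
            class_dir = os.path.join(part_dir, class_name)
            if os.path.isdir(class_dir):
                # Count only files with an image extension.
                counts[part][class_name] = sum(
                    1 for f in os.listdir(class_dir) if f.lower().endswith(exts))
    return counts


if __name__ == '__main__':
    for part, per_class in sorted(count_images('data/image').items()):
        print('%s: %s' % (part, per_class))
```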