SSPNet: Scale and Spatial Priors Guided Generalizable and Interpretable Pedestrian Attribute Recognition

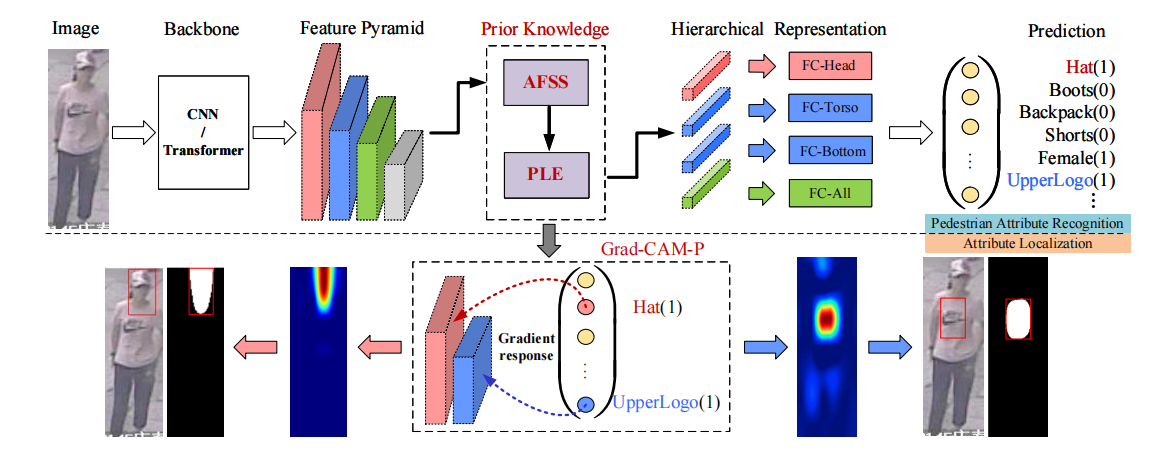

In this paper, we propose SSPNet to learn a generalizable and interpretable PAR model. Built on the multi-label classification framework, it consists mainly of the AFSS and PLE modules. The AFSS module selects feature maps of the proper scale for attributes of varying granularity, while the PLE module incorporates spatial prior knowledge of attributes into the model learning process. Put differently, AFSS identifies the most suitable feature-map scale, and PLE uses spatial priors to find the appropriate location of each attribute within the feature map.

- PA100K [Paper][Github]

- RAP: A Richly Annotated Dataset for Pedestrian Attribute Recognition

- PETA: Pedestrian Attribute Recognition At Far Distance [Paper][Project]

- UPAR [Paper][Project]

- Market [Paper]

- The experimental code is based on Strong Baseline [Github]; thanks to the authors for their work! The final performance of the SSPNet model depends on how Strong Baseline behaves in your local environment. If you run into serious bugs, it is recommended that you run this project's files on top of the original Strong Baseline.

- Directly using intermediate-layer features as P1, P2, and P3 will degrade model performance! If you need more feature maps like P1 and P2, make sure to apply the same up-sampling and smoothing operations. See _upsample_add() and smoothx() in resnet.py.

- A note on the human keypoint experiment: training is very slow, because each image has to be loaded together with its own human keypoint data. It is not recommended unless you are really interested. I'll be experimenting with more efficient use of human keypoints in my upcoming work, so please stay tuned.

- The code contains some experimental modules that are not mentioned in the paper; please delete or add them as needed.

- The code is not yet finished, so please give me some time to make it more complete.
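
To illustrate the note above about building P1, P2, and P3 with up-sampling and smoothing rather than taking intermediate features directly, here is a minimal sketch of a generic FPN-style top-down pathway. It is not the code from resnet.py: the class name `TopDownPyramid`, the channel widths, the bilinear interpolation mode, and the 3x3 smoothing convolutions are all assumptions chosen for illustration, and only the helper name `_upsample_add` is borrowed from the note.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TopDownPyramid(nn.Module):
    """Hypothetical FPN-style top-down pathway (illustrative, not the repo's resnet.py)."""

    def __init__(self, in_channels=(512, 1024, 2048), out_channels=256):
        super().__init__()
        # 1x1 lateral convs project every backbone stage to a common width.
        self.laterals = nn.ModuleList(
            [nn.Conv2d(c, out_channels, kernel_size=1) for c in in_channels]
        )
        # 3x3 smoothing convs, one per merged (non-coarsest) level.
        self.smooths = nn.ModuleList(
            [nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1)
             for _ in in_channels[:-1]]
        )

    @staticmethod
    def _upsample_add(top, lateral):
        # Resize the coarser map to the lateral map's spatial size, then sum.
        h, w = lateral.shape[-2:]
        up = F.interpolate(top, size=(h, w), mode="bilinear", align_corners=False)
        return up + lateral

    def forward(self, feats):
        # feats: backbone feature maps ordered fine -> coarse.
        laterals = [conv(f) for conv, f in zip(self.laterals, feats)]
        merged = [laterals[-1]]  # the coarsest level has nothing above it
        for lat in reversed(laterals[:-1]):
            merged.insert(0, self._upsample_add(merged[0], lat))
        # Smooth every merged level; the coarsest level is passed through as-is.
        smoothed = [s(m) for s, m in zip(self.smooths, merged[:-1])]
        return smoothed + [merged[-1]]
```

With three backbone maps at strides that halve the resolution each time, the module returns three maps with the same channel width and the original spatial sizes. Adding an extra pyramid level in this sketch means adding one more lateral convolution and one more smoothing convolution, so the new map goes through the same merge-then-smooth path as the others.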