DB-GPT is an experimental open-source project that uses locally deployed large language models to interact with your data and environment. When the models run locally, your data does not leave your environment, which keeps it private and avoids leakage to third parties.

The demo below runs on an RTX 4090 GPU.

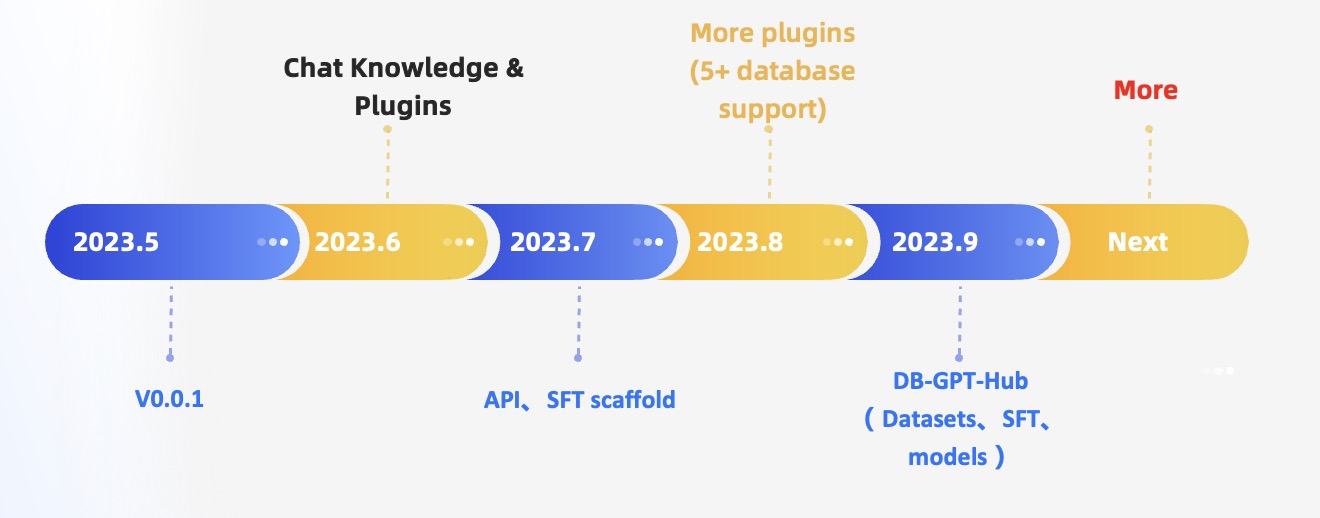

Currently, we have released multiple key features, which are listed below to demonstrate our current capabilities:

-

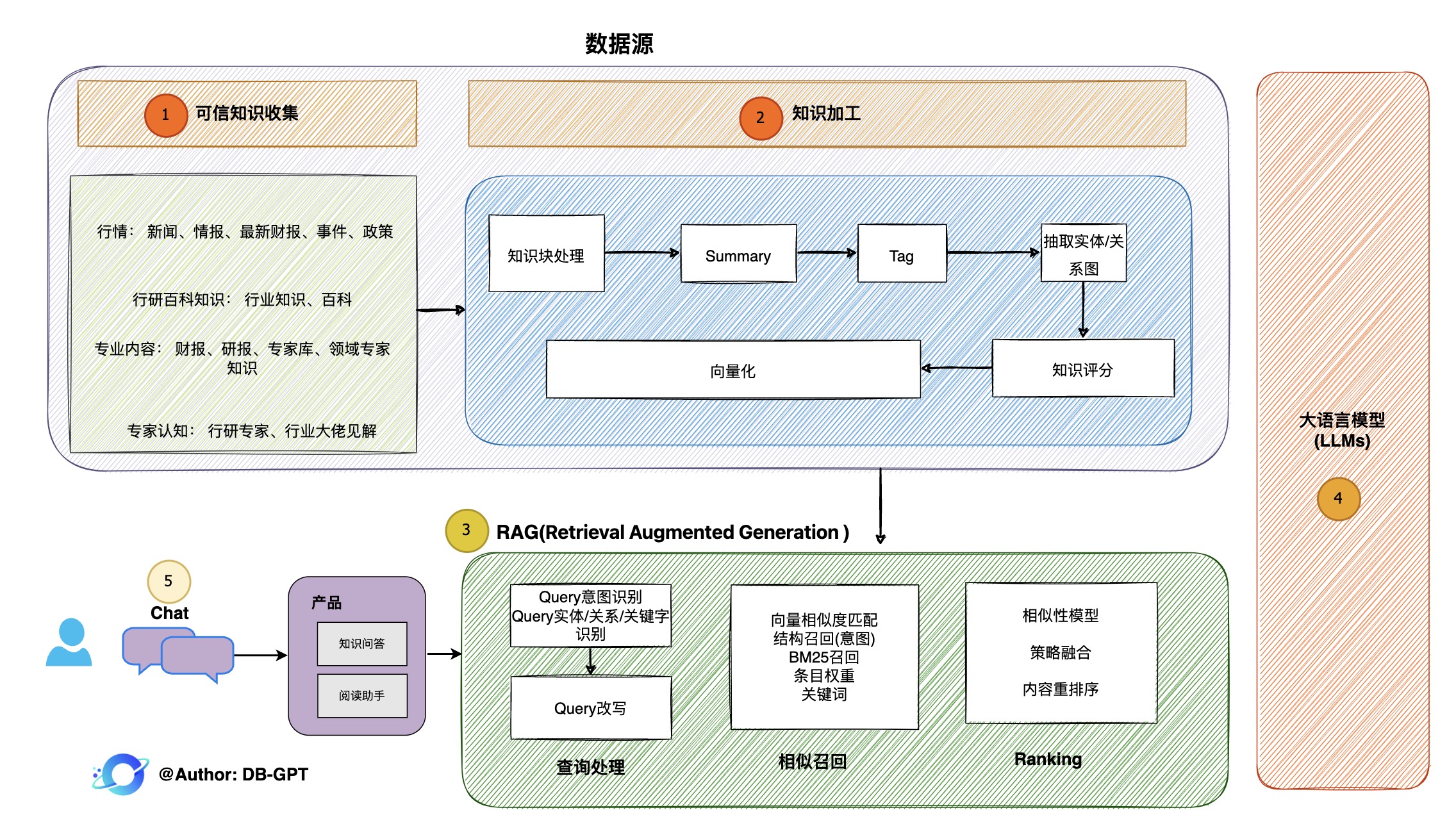

Private KBQA & data processing

The DB-GPT project offers a range of features to enhance knowledge base construction and enable efficient storage and retrieval of both structured and unstructured data. These include built-in support for uploading multiple file formats, the ability to integrate plug-ins for custom data extraction, and unified vector storage and retrieval capabilities for managing large volumes of information.

- Multiple data sources & visualization

  The DB-GPT project enables natural language interaction with various data sources, including Excel, databases, and data warehouses. Users can query and retrieve information from these sources conversationally and obtain insights. Additionally, DB-GPT supports the generation of analysis reports that summarize and interpret the data.

- Multi-Agents & Plugins

  DB-GPT supports custom plug-ins to perform tasks, natively supports the Auto-GPT plug-in model, and its agents follow the Agent Protocol standard.

- Fine-tuning Text2SQL

  An automated, lightweight fine-tuning framework built around large language models, Text2SQL datasets, and fine-tuning methods such as LoRA, QLoRA, and P-Tuning, making Text2SQL fine-tuning as convenient as an assembly line. See DB-GPT-Hub.

- Multi-LLM support

  DB-GPT supports dozens of large language models, both open-source models and API proxies, such as LLaMA/LLaMA2, Baichuan, ChatGLM, Wenxin, Tongyi, and Zhipu. Currently supported models include:

  - Vicuna
  - vicuna-13b-v1.5
  - LLaMA2
  - baichuan2-13b
  - baichuan-7B
  - chatglm-6b
  - chatglm2-6b
  - falcon-40b
  - internlm-chat-7b
  - Qwen-7B-Chat/Qwen-14B-Chat
  - RWKV-4-Raven
  - CAMEL-13B-Combined-Data
  - dolly-v2-12b
  - h2ogpt-gm-oasst1-en-2048-open-llama-7b
  - fastchat-t5-3b-v1.0
  - mpt-7b-chat
  - gpt4all-13b-snoozy
  - Nous-Hermes-13b
  - codet5p-6b
  - guanaco-33b-merged
  - WizardLM-13B-V1.0
  - WizardLM/WizardCoder-15B-V1.0
  - Llama2-Chinese-13b-Chat
  - OpenLLaMa OpenInstruct
  - And others

- Privacy and security

  Data privacy and security are protected through technologies such as privately deployed large models and desensitization of data sent to proxy models.

- Supported data sources

  | Data source | Supported | Notes |
  |---|---|---|
  | MySQL | Yes | |
  | PostgreSQL | Yes | |
  | Spark | Yes | |
  | DuckDB | Yes | |
  | SQLite | Yes | |
  | MSSQL | Yes | |
  | ClickHouse | Yes | |
  | Oracle | No | TODO |
  | Redis | No | TODO |
  | MongoDB | No | TODO |
  | HBase | No | TODO |
  | Doris | No | TODO |
  | DB2 | No | TODO |
  | Couchbase | No | TODO |
  | Elasticsearch | No | TODO |
  | OceanBase | No | TODO |
  | TiDB | No | TODO |
  | StarRocks | No | TODO |
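As a rough illustration of natural-language querying over one of the supported sources, the sketch below reads the schema of a SQLite database and folds it into a Text2SQL prompt. The function names and the prompt wording are hypothetical and are not DB-GPT's actual API or prompt template; only Python's standard `sqlite3` module is used.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> str:
    """Return the CREATE statements of all tables, one per line."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND sql IS NOT NULL ORDER BY name"
    ).fetchall()
    return "\n".join(row[0] for row in rows)


def build_prompt(conn: sqlite3.Connection, question: str) -> str:
    """Combine schema and question into a prompt (hypothetical format)."""
    return (
        "Given the following SQLite schema:\n"
        f"{describe_schema(conn)}\n"
        f"Write one SQL query that answers: {question}"
    )


# Example with an in-memory database and a single illustrative table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
prompt = build_prompt(conn, "What is the total order amount?")
print(prompt)
```

The resulting prompt would then be sent to whichever model is configured; that step is omitted here because it depends on the deployment.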

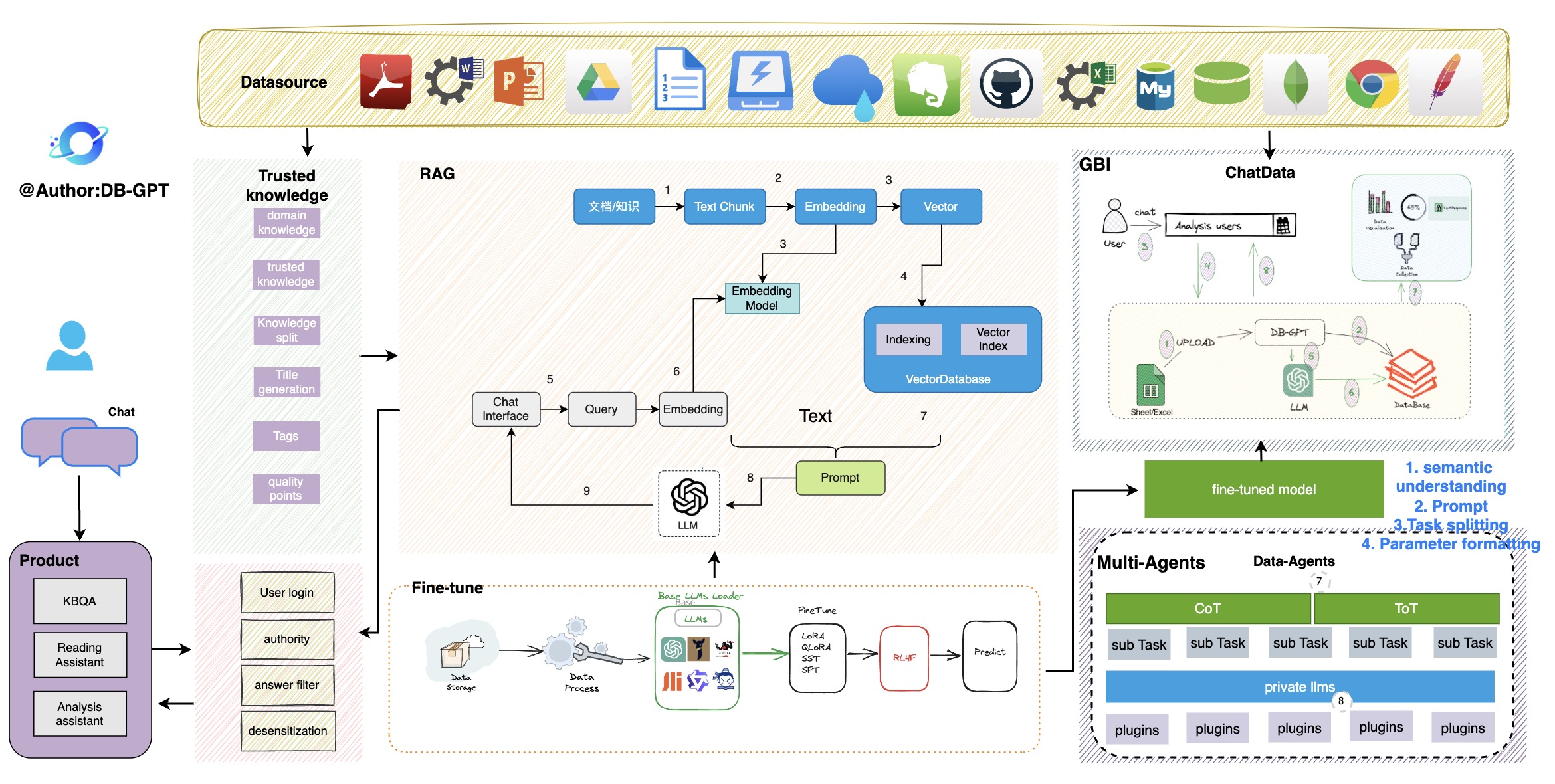

Is the architecture of the entire DB-GPT shown in the following figure:

The core capabilities mainly consist of the following parts:

- Multi-Models: Supports multiple LLMs, such as LLaMA/LLaMA2, CodeLLaMA, ChatGLM, QWen, and Vicuna, as well as proxy models such as ChatGPT, Baichuan, Tongyi, and Wenxin.

- Knowledge-Based QA: You can perform high-quality intelligent Q&A based on local documents such as PDF, Word, and Excel files, and other data.

- Embedding: Unified data vector storage and indexing. Data is embedded as vectors and stored in vector databases, providing content similarity search.

- Multi-Datasources: Used to connect different modules and data sources to achieve data flow and interaction.

- Multi-Agents: Provides Agent and plugin mechanisms, allowing users to customize and enhance the system's behavior.

- Privacy & Security: With locally deployed models, your data stays in your own environment, which keeps it private and avoids leakage to third parties.

- Text2SQL: We enhance Text-to-SQL performance by applying Supervised Fine-Tuning (SFT) on large language models.

- DB-GPT-Hub: Improves Text-to-SQL performance by applying Supervised Fine-Tuning (SFT) on large language models.

- DB-GPT-Plugins: Plugins for DB-GPT; Auto-GPT plugins can be run directly.

- DB-GPT-Web: Chat UI for DB-GPT.
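To make the embedding capability above more concrete, here is a minimal sketch of content similarity search: texts are turned into vectors, stored, and ranked by cosine similarity against a query. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and `VectorStore` is a hypothetical in-memory class, not one of the vector databases DB-GPT integrates with.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorStore:
    """Hypothetical in-memory store that ranks texts by cosine similarity."""

    def __init__(self) -> None:
        self._items = []  # list of (text, vector) pairs

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, top_k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(
            self._items, key=lambda item: cosine(q, item[1]), reverse=True
        )
        return [text for text, _ in ranked[:top_k]]


store = VectorStore()
store.add("DuckDB is an in-process analytical database")
store.add("LoRA is a parameter-efficient fine-tuning method")
best = store.search("which fine-tuning method is efficient")
print(best)
```

A production setup would replace `embed` with a learned embedding model and `VectorStore` with a vector database, but the store-then-rank flow is the same.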

In the .env configuration file, modify the LANGUAGE parameter to switch to different languages. The default is English (Chinese: zh, English: en, other languages to be added later).
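As an illustration of how such a setting could be read, the sketch below parses `.env`-style text and returns the `LANGUAGE` value, falling back to English. The `read_language` helper is hypothetical and is not DB-GPT's own configuration loader; only the parameter name `LANGUAGE` and the codes `en` and `zh` come from the description above.

```python
SUPPORTED_LANGUAGES = {"en", "zh"}  # the two codes named in the text above


def read_language(env_text: str, default: str = "en") -> str:
    """Return the LANGUAGE value from .env-style text, or the default."""
    for line in env_text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        if key.strip() == "LANGUAGE":
            value = value.strip().strip("'\"")
            # Unknown codes fall back to the default language.
            return value if value in SUPPORTED_LANGUAGES else default
    return default


example_env = "# DB-GPT settings\nLANGUAGE=zh\n"
language = read_language(example_env)
print(language)
```

In practice the text would come from the file itself, for example `read_language(open(".env").read())`.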

- Please run `black .` before submitting code. See the contributing guidelines for how to contribute.

- Multi Documents
  - Excel, CSV
  - Word
  - Text
  - MarkDown
  - Code
  - Images

- RAG

- Graph Database
  - Neo4j Graph
  - Nebula Graph

- Multi-Vector Database
  - Chroma
  - Milvus
  - Weaviate
  - PGVector
  - Elasticsearch
  - ClickHouse
  - Faiss

- Testing and Evaluation Capability Building
  - Knowledge QA datasets
  - Question collection [easy, medium, hard]
  - Scoring mechanism
  - Testing and evaluation using Excel + DB datasets

- Multi Datasource Support
  - MySQL
  - PostgreSQL
  - Spark
  - DuckDB
  - SQLite
  - MSSQL
  - ClickHouse
  - Oracle
  - Redis
  - MongoDB
  - HBase
  - Doris
  - DB2
  - Couchbase
  - Elasticsearch
  - OceanBase
  - TiDB
  - StarRocks

- Cluster Deployment

- Fastchat Support

- vLLM Support

- Cloud-native environment and support for Ray environment

- Service registry (e.g., Nacos)

- Compatibility with OpenAI's interfaces

- Expansion and optimization of embedding models

- multi-agents framework

- custom plugin development

- plugin market

- Integration with CoT

- Enrich plugin sample library

- Support for AutoGPT protocol

- Integration of multi-agents and visualization capabilities, defining new LLM+Vis standards

- debugging

- Observability

- cost & budgets

- Supported LLMs
  - LLaMA
  - LLaMA-2
  - BLOOM
  - BLOOMZ
  - Falcon
  - Baichuan
  - Baichuan2
  - InternLM
  - Qwen
  - XVERSE
  - ChatGLM2

- SFT Accuracy

  As of October 10, 2023, a 13-billion-parameter open-source model fine-tuned with this project surpasses GPT-4 in execution accuracy on the Spider evaluation dataset.

| Model | Execution Accuracy | Reference |
|---|---|---|
| GPT-4 | 0.762 | numbersstation-eval-res |
| ChatGPT | 0.728 | numbersstation-eval-res |
| CodeLlama-13b-Instruct-hf_lora | 0.789 | SFT-trained with this project using only the Spider training set; evaluated the same way as in this project, with LoRA SFT |
| CodeLlama-13b-Instruct-hf_qlora | 0.774 | SFT-trained with this project using only the Spider training set; evaluated the same way as in this project, with QLoRA (NF4, 4-bit) SFT |
| wizardcoder | 0.610 | text-to-sql-wizardcoder |
| CodeLlama-13b-Instruct-hf | 0.556 | Evaluated in this project with default parameters |
| llama2_13b_hf_lora_best | 0.744 | SFT-trained with this project using only the Spider training set; evaluated the same way as in this project |
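For readers unfamiliar with the metric, execution accuracy counts a predicted query as correct when running it returns the same rows as the reference (gold) query. The sketch below is a simplified illustration on an in-memory SQLite database; the table, the queries, and the helper names are made up for the example, and the official Spider evaluation handles details (such as row ordering and value matching) that this sketch ignores.

```python
import sqlite3
from collections import Counter


def run(conn, sql):
    """Execute a query; return rows as an order-insensitive multiset, or None on error."""
    try:
        return Counter(conn.execute(sql).fetchall())
    except sqlite3.Error:
        return None


def execution_accuracy(conn, pairs):
    """Fraction of (predicted, gold) SQL pairs whose result rows match."""
    correct = 0
    for predicted, gold in pairs:
        gold_rows = run(conn, gold)
        if gold_rows is not None and run(conn, predicted) == gold_rows:
            correct += 1
    return correct / len(pairs)


conn = sqlite3.connect(":memory:")
conn.executescript(
    "CREATE TABLE singer (name TEXT, age INTEGER);"
    "INSERT INTO singer VALUES ('Ann', 30), ('Bo', 41);"
)
pairs = [
    # Different SQL text, same result: counted as correct.
    ("SELECT name FROM singer WHERE age > 35", "SELECT name FROM singer WHERE age >= 36"),
    ("SELECT count(*) FROM singer", "SELECT count(*) FROM singer"),
    # Invalid column name: the query fails and is counted as wrong.
    ("SELECT nam FROM singer", "SELECT name FROM singer"),
    # Valid SQL but different rows: counted as wrong.
    ("SELECT name FROM singer", "SELECT age FROM singer"),
]
accuracy = execution_accuracy(conn, pairs)
print(accuracy)
```

Comparing results rather than SQL text is what lets two differently written but equivalent queries both count as correct.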

More information about Text2SQL fine-tuning

The MIT License (MIT)

We are working on building a community. If you have any ideas about how to build it, feel free to contact us.