MagFace: A Universal Representation for Face Recognition and Quality Assessment

in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, Oral presentation.

Paper: arXiv

A toy example: examples.ipynb

Poster: GoogleDrive, BaiduDrive, code: dt9e

Beamer: GoogleDrive, BaiduDrive, code: c16b

Presentation: TBD

NOTE: The original code was implemented in a private codebase and will not be released. This repo is an official but abridged version. See the TODO list for plans.

@inproceedings{meng2021magface,

title={MagFace: A universal representation for face recognition and quality assessment},

author={Meng, Qiang and Zhao, Shichao and Huang, Zhida and Zhou, Feng},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition},

year={2021}

}

| Parallel Method | Float Type | Backbone | Dataset | Split FC? | Model | Log File |
|---|---|---|---|---|---|---|
| DDP | fp32 | iResNet100 | MS1MV2 | Yes | GoogleDrive, BaiduDrive, code: wsw3 | Trained by original codes |
| DP | fp32 | iResNet50 | MS1MV2 | No | BaiduDrive, code: tvyv | BaiduDrive, code: hpbt |
| DDP | fp32 | iResNet50 | MS1MV2 | Yes | BaiduDrive, code: idkx | BaiduDrive, code: 66j1 |

Steps to calculate face qualities (examples.ipynb is a toy example).

- Extract features from faces with `inference/gen_feat.py`.

- Calculate feature magnitudes with `np.linalg.norm()`.
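As a minimal sketch of the magnitude step, assume the extracted features have already been loaded into a NumPy array of shape `(num_faces, feature_dim)`. Loading is not shown because it depends on the output format of `inference/gen_feat.py`; the array below is made-up data and the helper name `feature_magnitudes` is hypothetical.

```python
import numpy as np

def feature_magnitudes(features: np.ndarray) -> np.ndarray:
    """Return the L2 norm of each row of a (num_faces, feature_dim) array."""
    return np.linalg.norm(features, axis=1)

# Hypothetical features for three faces (4-D only to keep the example short).
features = np.array([
    [3.0, 4.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 0.5],
])
magnitudes = feature_magnitudes(features)

# In MagFace, a larger magnitude is read as a higher-quality face.
ranking = np.argsort(-magnitudes)  # indices from highest to lowest magnitude
```

Passing `axis=1` matters here: without it, `np.linalg.norm()` would return a single norm for the whole matrix rather than one value per face.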

- Install the requirements.

- Prepare a training list with the format `imgname 0 id 0` on each line, as indicated here. In the paper, we employ MS1MV2 as the training dataset, which can be downloaded from BaiduDrive or Dropbox. Use `rec2image.py` to extract images.

- Modify the parameters in `run/run.sh` and run it!
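The training list could be generated with a short script such as the sketch below. It assumes the extracted images are stored in one sub-directory per identity and that `id` is a zero-based integer label; both the directory layout and the helper name `build_training_list` are assumptions for illustration, not something this repo prescribes.

```python
import os
import tempfile
from typing import List

def build_training_list(image_root: str) -> List[str]:
    """Build lines of the form 'imgname 0 id 0' from an ID-per-folder layout."""
    lines = []
    # Assumption: each sub-directory of image_root holds one identity's images.
    identities = sorted(
        d for d in os.listdir(image_root)
        if os.path.isdir(os.path.join(image_root, d))
    )
    for label, identity in enumerate(identities):
        folder = os.path.join(image_root, identity)
        for name in sorted(os.listdir(folder)):
            lines.append(f"{os.path.join(folder, name)} 0 {label} 0")
    return lines

# Tiny demo on a throwaway directory with two made-up identities.
with tempfile.TemporaryDirectory() as root:
    demo = {"person_a": ["1.jpg", "2.jpg"], "person_b": ["1.jpg"]}
    for identity, files in demo.items():
        os.makedirs(os.path.join(root, identity))
        for f in files:
            open(os.path.join(root, identity, f), "w").close()
    demo_lines = build_training_list(root)
```

Writing `"\n".join(demo_lines)` to a text file would give a list in the `imgname 0 id 0` layout; check it against the linked format description before training.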

Note: Use PyTorch > 1.7 for this feature. The code is mainly based on Aibee's mpu (author: Kaiyu Yue; to be released in mid-April).

How to run:

- Update the NCCL info (can be found with the command `ifconfig`) and the port info in `train_dist.py`.

- Set the number of GPUs in here.

- [Optional. Not tested yet!] If training on multiple machines, modify the node number.

- [Optional. Not tested yet!] Enable fp16 training by setting `--fp16 1` in `run/run_dist.sh`.

- Run `run/run_dist.sh`.
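For the NCCL and port settings, the sketch below shows one way to pick values on a single machine. It uses only the Python standard library. `NCCL_SOCKET_IFNAME` is a standard NCCL environment variable, but how `train_dist.py` actually stores the interface and port is not shown here, so the variable names `port` and `dist_url` and the interface `eth0` are assumptions.

```python
import os
import socket

def find_free_port() -> int:
    """Ask the OS for a TCP port that is currently unused on this machine."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind(("", 0))  # port 0 lets the OS pick a free port
        return s.getsockname()[1]

# Hypothetical value: replace "eth0" with the interface name reported by ifconfig.
os.environ["NCCL_SOCKET_IFNAME"] = "eth0"

port = find_free_port()
dist_url = f"tcp://127.0.0.1:{port}"  # single-machine rendezvous address
```

The port is only known to be free at the moment of the check, so it is best chosen right before launching the training processes.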

Parallel training (Sec. 5.1 in ArcFace) can substantially speed up training and reduce GPU memory consumption. Here are some results.

| Parallel Method | Float Type | Backbone | GPU | Batch Size | FC Size | Split FC? | Avg. Throughput (images/sec) | Memory (MiB) |
|---|---|---|---|---|---|---|---|---|
| DP | FP32 | iResNet50 | v100 x 8 | 512 | 85742 | No | 1099.41 | 8681 |
| DDP | FP32 | iResNet50 | v100 x 8 | 512 | 85742 | Yes | 1687.71 | 8137 |
| DDP | FP16 | iResNet50 | v100 x 8 | 512 | 85742 | Yes | 3388.66 | 5629 |
| DP | FP32 | iResNet100 | v100 x 8 | 512 | 85742 | No | 612.40 | 11825 |
| DDP | FP32 | iResNet100 | v100 x 8 | 512 | 85742 | Yes | 1060.16 | 10777 |
| DDP | FP16 | iResNet100 | v100 x 8 | 512 | 85742 | Yes | 2013.90 | 7319 |
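To illustrate why splitting the FC layer can save memory, here is a generic NumPy sketch of a sharded softmax cross-entropy. It is not this repo's or mpu's implementation: the "devices" are simulated as list entries, the two "all-reduce" steps are plain NumPy reductions, and all sizes are made up. Each simulated device stores only its own block of the FC weights and logits, yet the resulting loss matches the unsplit computation.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, dim, num_classes, num_shards = 4, 8, 12, 3

features = rng.normal(size=(batch, dim))
weights = rng.normal(size=(dim, num_classes))  # full FC weight matrix
labels = rng.integers(0, num_classes, size=batch)

# Reference: softmax cross-entropy with the whole FC layer on one device.
logits = features @ weights
shifted = logits - logits.max(axis=1, keepdims=True)
full_loss = -(shifted[np.arange(batch), labels]
              - np.log(np.exp(shifted).sum(axis=1)))

# Split FC: each simulated device holds one column block of the weights.
shards = np.split(weights, num_shards, axis=1)
local_logits = [features @ w for w in shards]

# Simulated all-reduce 1: global max per sample, for numerical stability.
global_max = np.max([l.max(axis=1) for l in local_logits], axis=0)
# Simulated all-reduce 2: sum of exponentials over all shards.
sum_exp = np.sum([np.exp(l - global_max[:, None]).sum(axis=1)
                  for l in local_logits], axis=0)

# The target logit lives on exactly one shard per sample.
shard_size = num_classes // num_shards
target = np.array([
    local_logits[y // shard_size][i, y % shard_size]
    for i, y in enumerate(labels)
])
split_loss = -(target - global_max - np.log(sum_exp))
```

Only per-sample scalars (the maximum and the sum of exponentials) need to cross device boundaries in this scheme, while the full `batch x num_classes` logit matrix never has to exist on a single device.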

- PyTorch: FaceX-Zoo from JDAI.

TODO list:

- add toy examples and release models

- migrate basic codes from the private codebase

- add beamer (after the ddl for iccv2021)

- test the basic codes

- add presentation

- migrate parallel training

- release mpu (Kaiyu Yue, in April)

- test parallel training

- add evaluation codes for recognition

- add evaluation codes for quality assessment

- add fp16

- test fp16

- extend the idea to CosFace

20210331 test fp32 + parallel training and release a model/log

20210325.2 add codes for parallel training as well as fp16 training (not tested).

20210325 the basic training codes are tested! Please find the trained model and logs from the table in Model Zoo.

20210323 add requirements and beamer presentation; add debug logs.

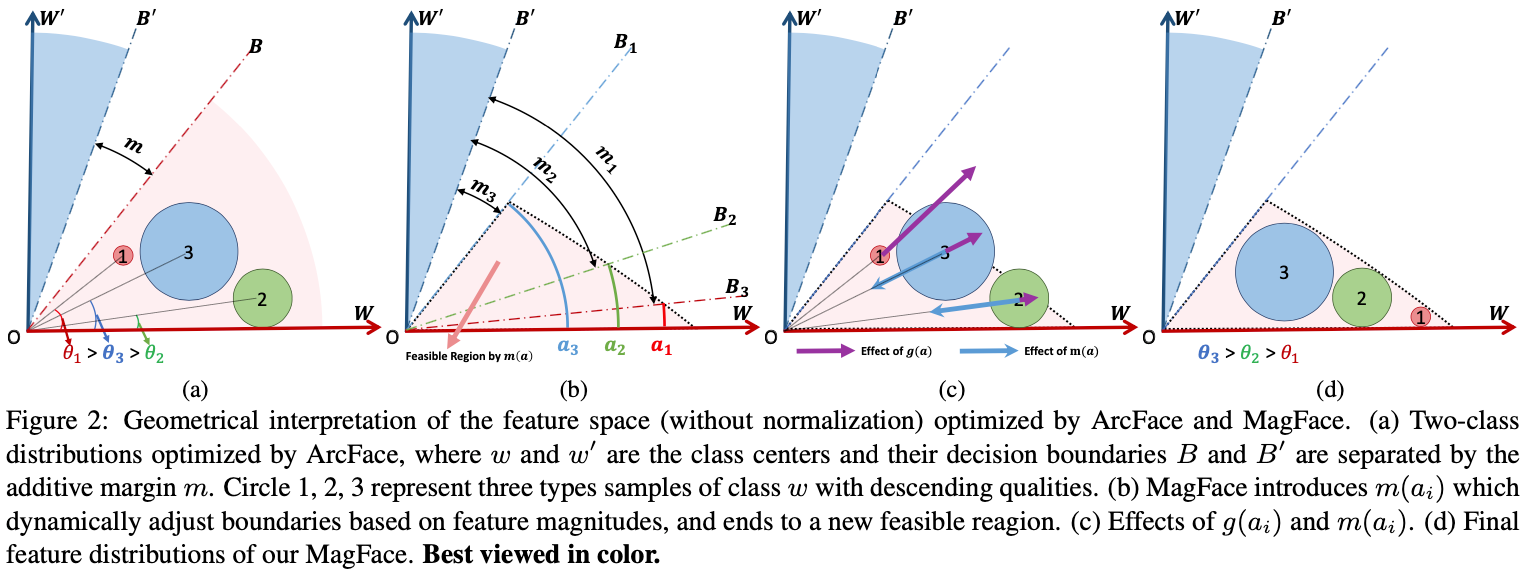

20210315 fix figure 2 and add gdrive link for checkpoint.

20210312 add the basic code (not tested yet).

20210312 add paper/poster/model and a toy example.

20210301 add ReadMe and license.