Learn Deep Learning The Hard Way.

It is a set of small projects on Deep Learning.

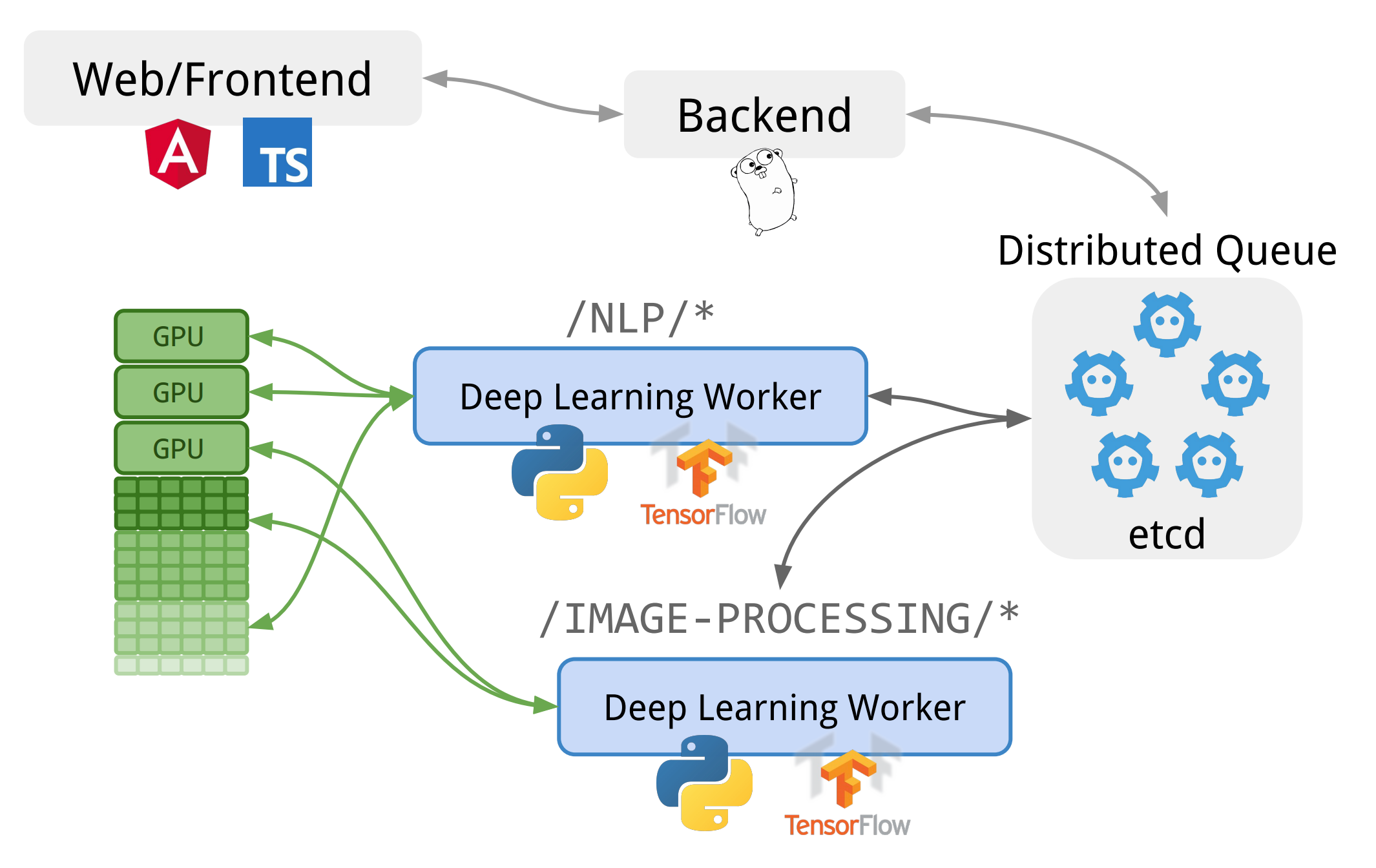

- `frontend` implements the user-facing UI and sends user requests to `backend/*`.
- `backend/web` schedules user requests on `pkg/etcd-queue`.
- `backend/worker` processes jobs from the queue and writes back the results.
- Data serialization from `frontend` to `backend/web` is defined in `backend/web.Request` and `frontend/app/request.service.Request`.
- Data serialization from `backend/web` to `frontend` is defined in `pkg/etcd-queue.Item` and `frontend/app/request.service.Item`.
- Data serialization between `backend/web` and `backend/worker` is defined in `pkg/etcd-queue.Item` and `backend/worker/worker.py`.
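The serialization boundaries above can be sketched as JSON round-trips. This is a minimal illustration under stated assumptions: the `Request` and `Item` classes and their field names (`data_type`, `url`, `key`, `value`, `progress`, `error`) are hypothetical stand-ins, not the real fields of `backend/web.Request` or `pkg/etcd-queue.Item`. Check those definitions for the actual schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Request:
    """Hypothetical request sent from the frontend to backend/web."""
    data_type: str  # assumed: which model to use, e.g. "cats"
    url: str        # assumed: the photo URL to classify


@dataclass
class Item:
    """Hypothetical queue item shared by backend/web and backend/worker."""
    key: str
    value: str          # JSON-encoded payload (the request, later the result)
    progress: int = 0   # assumed convention: 0..100
    error: str = ""


def encode_item(item: Item) -> str:
    """Serialize an item to JSON, e.g. before writing it to the queue."""
    return json.dumps(asdict(item))


def decode_item(raw: str) -> Item:
    """Deserialize JSON read from the queue back into an Item."""
    return Item(**json.loads(raw))


if __name__ == "__main__":
    req = Request(
        data_type="cats",
        url="https://static.pexels.com/photos/127028/pexels-photo-127028.jpeg",
    )
    item = Item(key="cats/1", value=json.dumps(asdict(req)))
    print(decode_item(encode_item(item)) == item)  # True
```

Keeping the payload as an opaque JSON string inside `value` lets the queue stay agnostic of each model's request format.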

Notes:

- Why is the queue service needed? To process concurrent user requests. The worker has limited resources, so requests are serialized into the queue.
- Why Go? To natively use embedded etcd.
- Why etcd? To use the etcd Watch API. `pkg/etcd-queue` uses Watch to stream updates to `backend/worker` and `frontend`. This minimizes TCP socket creation and slow TCP starts (e.g. streaming vs. polling).
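The queue-plus-watch pattern in these notes can be sketched in memory: a single worker drains requests one at a time, and each result is pushed to registered watchers instead of clients polling for it. This is a hypothetical stand-in for illustration; `WatchQueue`, `enqueue`, `watch`, and `run_worker` are invented names and do not reflect the API of `pkg/etcd-queue` or etcd's Watch.

```python
import queue
import threading
from collections import defaultdict
from typing import Callable, Dict, List


class WatchQueue:
    """Hypothetical in-memory analogue of a watchable job queue.

    Jobs are processed serially by one worker (limited resources), and
    every result is pushed to the watchers of its key (streaming), so
    clients never need to poll.
    """

    def __init__(self) -> None:
        self._jobs: "queue.Queue[tuple[str, str]]" = queue.Queue()
        self._watchers: Dict[str, List[Callable[[str, str], None]]] = defaultdict(list)
        self._lock = threading.Lock()

    def enqueue(self, key: str, payload: str) -> None:
        """Schedule a request; it waits until the worker is free."""
        self._jobs.put((key, payload))

    def watch(self, key: str, callback: Callable[[str, str], None]) -> None:
        """Register a callback that is pushed every update for `key`."""
        with self._lock:
            self._watchers[key].append(callback)

    def _notify(self, key: str, value: str) -> None:
        with self._lock:
            callbacks = list(self._watchers[key])
        for cb in callbacks:
            cb(key, value)

    def run_worker(self, process: Callable[[str], str], n_jobs: int) -> None:
        """Process n_jobs requests in FIFO order, pushing each result."""
        for _ in range(n_jobs):
            key, payload = self._jobs.get()
            self._notify(key, process(payload))
            self._jobs.task_done()


if __name__ == "__main__":
    q = WatchQueue()
    results = []
    q.watch("req-1", lambda k, v: results.append((k, v)))
    q.enqueue("req-1", "cat.jpeg")
    q.enqueue("req-2", "dog.jpeg")  # no watcher: its result is not delivered
    worker = threading.Thread(target=q.run_worker, args=(str.upper, 2))
    worker.start()
    worker.join()
    print(results)  # [('req-1', 'CAT.JPEG')]
```

A watcher registers once and then receives results as they happen. Polling would instead repeat a request, and possibly a new connection, until the result appears.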

This is a proof-of-concept. In production, I would use `tensorflow/serving` (TensorFlow Serving) to serve the pre-trained models and distributed etcd for higher availability.

To train the cats 5-layer deep neural network model:

```bash
DATASETS_DIR=./datasets \
CATS_PARAM_PATH=./datasets/parameters-cats.npy \
python3 -m unittest backend.worker.cats.model_test
```

This persists the trained model parameters on disk, where workers can load them later.
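Persisting parameters to a `.npy` file and loading them back in a worker can be sketched with NumPy. This is a minimal sketch under assumptions, not the repository's model code: the `W1..WL`/`b1..bL` dictionary layout, He initialization, ReLU hidden layers with a sigmoid output, and the helper names (`init_parameters`, `forward`, `save_parameters`, `load_parameters`, `default_param_path`) are all hypothetical. Only the `CATS_PARAM_PATH` variable and its default path come from the command above.

```python
import os
import tempfile

import numpy as np


def init_parameters(layer_dims, seed=1):
    """He-initialized weights W1..WL and zero biases b1..bL (assumed layout)."""
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_dims)):
        scale = np.sqrt(2.0 / layer_dims[l - 1])
        params[f"W{l}"] = rng.standard_normal((layer_dims[l], layer_dims[l - 1])) * scale
        params[f"b{l}"] = np.zeros((layer_dims[l], 1))
    return params


def forward(params, X):
    """ReLU hidden layers and a sigmoid output: P(cat) for each column of X."""
    L = len(params) // 2
    A = X
    for l in range(1, L):
        A = np.maximum(0, params[f"W{l}"] @ A + params[f"b{l}"])
    Z = params[f"W{L}"] @ A + params[f"b{L}"]
    return 1.0 / (1.0 + np.exp(-Z))


def save_parameters(params, path):
    """np.save stores the dict as a 0-d object array (pickled)."""
    np.save(path, params)


def load_parameters(path):
    """Object arrays need allow_pickle=True; .item() unwraps the dict."""
    return np.load(path, allow_pickle=True).item()


def default_param_path():
    """Read the path the training command exports, with its default."""
    return os.environ.get("CATS_PARAM_PATH", "./datasets/parameters-cats.npy")


if __name__ == "__main__":
    dims = [64, 20, 7, 5, 3, 1]  # hypothetical sizes: 5 weight layers
    params = init_parameters(dims)
    X = np.random.default_rng(0).standard_normal((64, 4))
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "parameters-cats.npy")
        save_parameters(params, path)
        restored = load_parameters(path)
    print(np.allclose(forward(params, X), forward(restored, X)))  # True
```

Because `allow_pickle=True` unpickles arbitrary objects, only load parameter files you produced yourself.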

To run the application (backend and web UI) locally at http://localhost:4200:

```bash
./scripts/docker/run-app.sh
./scripts/docker/run-worker-python3-cpu.sh

<<COMMENT
# to serve on port :80
./scripts/docker/run-reverse-proxy.sh
COMMENT
```

Open http://localhost:4200/cats and try other cat photos:

- https://static.pexels.com/photos/127028/pexels-photo-127028.jpeg

- https://static.pexels.com/photos/126407/pexels-photo-126407.jpeg

- https://static.pexels.com/photos/54632/cat-animal-eyes-grey-54632.jpeg

To update dependencies:

```bash
./scripts/dep/go.sh
./scripts/dep/frontend.sh
```

To update the `Dockerfile`:

```bash
# update 'container.yaml' and then
./scripts/docker/gen.sh
```

To build Docker container images:

```bash
./scripts/docker/build-app.sh
./scripts/docker/build-python3-cpu.sh
./scripts/docker/build-python3-gpu.sh
./scripts/docker/build-r.sh
./scripts/docker/build-reverse-proxy.sh
```

To run tests:

```bash
./scripts/tests/frontend.sh
./scripts/tests/go.sh

go install -v ./cmd/backend-web-server
DATASETS_DIR=./datasets \
CATS_PARAM_PATH=./datasets/parameters-cats.npy \
ETCD_EXEC=/opt/bin/etcd \
SERVER_EXEC=${GOPATH}/bin/backend-web-server \
./scripts/tests/python3.sh
```

To run tests in containers:

```bash
./scripts/docker/test-app.sh
./scripts/docker/test-python3-cpu.sh
```

To run IPython Notebook locally at http://localhost:8888/tree:

```bash
./scripts/docker/run-ipython-python3-cpu.sh
./scripts/docker/run-ipython-python3-gpu.sh
./scripts/docker/run-r.sh
```

To deploy dplearn and IPython Notebook on a Google Cloud Platform CPU or GPU instance:

```bash
GCP_KEY_PATH=/etc/gcp-key-dplearn.json ./scripts/gcp/ubuntu-python3-cpu.gcp.sh
GCP_KEY_PATH=/etc/gcp-key-dplearn.json ./scripts/gcp/ubuntu-python3-gpu.gcp.sh

# create a Google Cloud Platform Compute Engine VM with a start-up script
# to provision GPU, init system, reverse proxy, and others
# (see ./scripts/gcp/ubuntu-python3-gpu.ansible.sh for more detail)
```