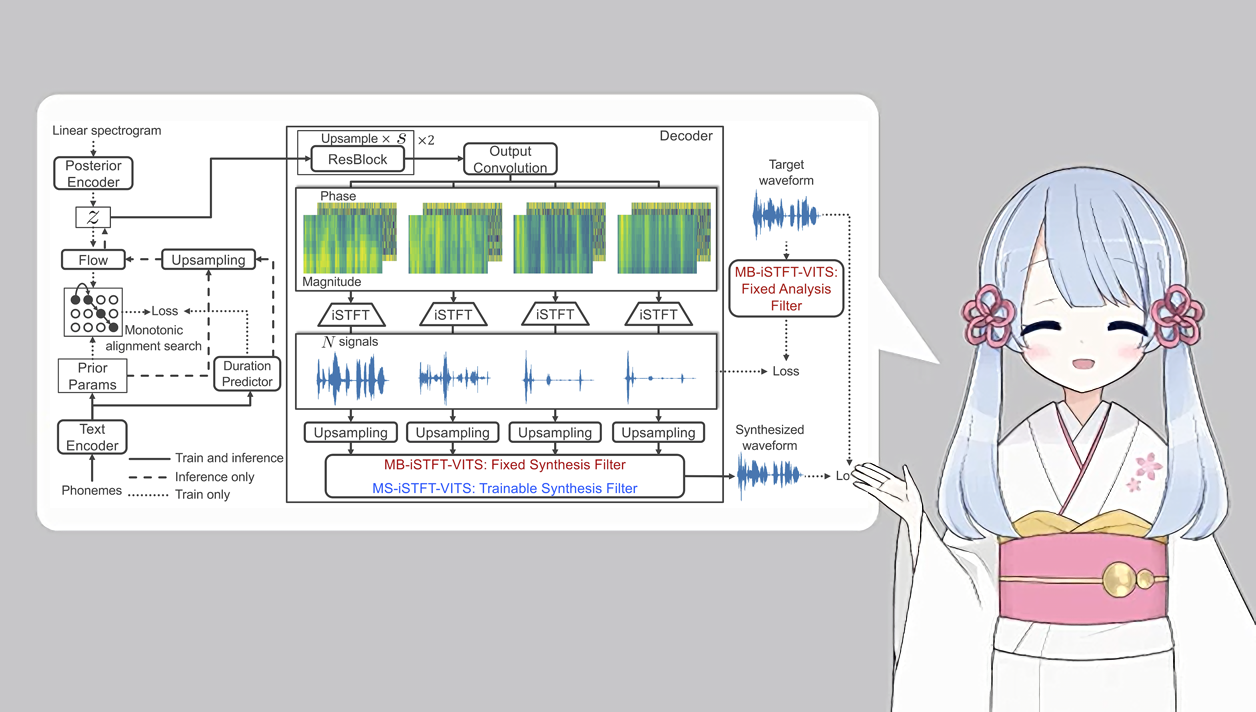

This is a multilingual implementation of MB-iSTFT-VITS that adds support for several languages. MB-iSTFT-VITS showed 4.1 times faster inference than the original VITS!

Preprocessed Japanese single-speaker training material is provided for the つくよみちゃんコーパス (Tsukuyomi-chan corpus). Download the corpus and place its 100 .wav files in ./tsukuyomi_raw.

- Currently Supported: Japanese / Korean

- Chinese, CJKE, and other languages will be supported soon!

Python >= 3.6 (Python 3.7 is recommended)

git clone https://github.com/misakiudon/MB-iSTFT-VITS-multilingual.git

cd MB-iSTFT-VITS-multilingual

pip install -r requirements.txt

You may need to install espeak first: apt-get install espeak

For a single-speaker dataset, set "n_speakers" to 0 in config.json and write each manifest line as:

path/to/XXX.wav|transcript

- Example

dataset/001.wav|こんにちは。
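Because a single-speaker manifest is plain text with one `path|transcript` pair per line, it is easy to generate from a script. The sketch below formats and parses such lines; `format_manifest` and `parse_manifest` are hypothetical helpers, not part of this repository, and rejecting `|` inside a field is an assumption that follows from `|` being the separator.

```python
from typing import List, Tuple


def format_manifest(entries: List[Tuple[str, str]]) -> str:
    """Render (wav_path, transcript) pairs as 'path|transcript' lines (hypothetical helper)."""
    lines = []
    for wav_path, text in entries:
        # '|' separates the fields, so it must not occur inside either field.
        if "|" in wav_path or "|" in text:
            raise ValueError(f"'|' is not allowed in manifest fields: {wav_path!r}")
        lines.append(f"{wav_path}|{text}")
    return "\n".join(lines) + "\n"


def parse_manifest(content: str) -> List[Tuple[str, str]]:
    """Parse single-speaker manifest text back into (wav_path, transcript) pairs."""
    pairs = []
    for line in content.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        wav_path, text = line.split("|", 1)
        pairs.append((wav_path, text))
    return pairs


if __name__ == "__main__":
    manifest = format_manifest([("dataset/001.wav", "こんにちは。")])
    print(parse_manifest(manifest))  # [('dataset/001.wav', 'こんにちは。')]
```

When saving the returned string to a filelist, open the file with `encoding="utf-8"` so Japanese or Korean transcripts are stored correctly on every platform.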

For a multi-speaker dataset, speaker IDs should start from 0, and each manifest line is written as:

path/to/XXX.wav|speaker id|transcript

- Example

dataset/001.wav|0|こんにちは。
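The multi-speaker format adds an integer speaker ID column. The sketch below parses such lines and checks that the IDs start from 0; additionally requiring them to be contiguous (0, 1, 2, ...) is a stricter assumption than the text states, based on upstream VITS where speaker IDs index an embedding table of size `n_speakers`. `parse_multispeaker` is a hypothetical helper.

```python
from typing import List, Tuple


def parse_multispeaker(content: str) -> Tuple[List[Tuple[str, int, str]], int]:
    """Parse 'path|speaker id|transcript' lines; return the rows and the speaker count."""
    rows = []
    for lineno, line in enumerate(content.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        parts = line.split("|", 2)
        if len(parts) != 3:
            raise ValueError(f"line {lineno}: expected 3 fields, got {len(parts)}")
        wav_path, sid, text = parts
        rows.append((wav_path, int(sid), text))  # int() rejects non-numeric IDs
    ids = sorted({sid for _, sid, _ in rows})
    # IDs must start from 0; this sketch also requires them to have no gaps.
    if ids and ids != list(range(len(ids))):
        raise ValueError(f"speaker ids must be 0..{len(ids) - 1}, got {ids}")
    return rows, len(ids)


if __name__ == "__main__":
    rows, n = parse_multispeaker("dataset/001.wav|0|こんにちは。\ndataset/002.wav|1|おはよう。\n")
    print(n)  # 2
```

Under the same upstream-VITS assumption, the returned count is the smallest value "n_speakers" can take for this manifest.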

Preprocessed Japanese manifests are provided as filelists/filelist_train2.txt.cleaned and filelists/filelist_val2.txt.cleaned.
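Preprocessing rewrites the transcript column of each manifest through the selected text cleaner. Assuming this repository keeps the behavior of upstream VITS's preprocess.py, it splits every line on `|`, cleans the field at `--text_index` (1 for `path|transcript`, 2 for `path|speaker id|transcript`), and writes the result to `<filelist>.cleaned`. The sketch below mimics that flow; `clean_manifest` is hypothetical, and `toy_cleaner` stands in for `japanese_cleaners`, whose real implementation is not shown here.

```python
from typing import Callable, List


def clean_manifest(lines: List[str], text_index: int,
                   cleaner: Callable[[str], str]) -> List[str]:
    """Run `cleaner` over the transcript field of each '|'-separated manifest line."""
    cleaned = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        fields = line.strip().split("|")
        # text_index 1 -> 'path|text', text_index 2 -> 'path|speaker id|text'
        fields[text_index] = cleaner(fields[text_index])
        cleaned.append("|".join(fields))
    return cleaned


def toy_cleaner(text: str) -> str:
    """Hypothetical stand-in for a real cleaner: lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


if __name__ == "__main__":
    print(clean_manifest(["dataset/001.wav|Hello   World"], 1, toy_cleaner))
    # ['dataset/001.wav|hello world']
    print(clean_manifest(["dataset/001.wav|0|Hello   World"], 2, toy_cleaner))
    # ['dataset/001.wav|0|hello world']
```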

# Single speaker

python preprocess.py --text_index 1 --filelists path/to/filelist_train.txt path/to/filelist_val.txt --text_cleaners 'japanese_cleaners'

# Multiple speakers

python preprocess.py --text_index 2 --filelists path/to/filelist_train.txt path/to/filelist_val.txt --text_cleaners 'japanese_cleaners'

If your speech files are not 22050 Hz, mono, 16-bit PCM, resample them first with convert_to_22050.py.
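To see which files actually need resampling, the sketch below uses only Python's standard-library `wave` module to list the .wav files in a directory that are not already 22050 Hz, mono, 16-bit PCM. It is not part of this repository; files that `wave` cannot parse (for example 32-bit float WAVs) are conservatively reported as needing conversion, and the directory path is a placeholder.

```python
import wave
from pathlib import Path
from typing import List

TARGET_RATE = 22050   # Hz
TARGET_CHANNELS = 1   # mono
TARGET_SAMPWIDTH = 2  # bytes per sample, i.e. 16-bit PCM


def needs_conversion(path: str) -> bool:
    """Return True unless the WAV file is already 22050 Hz, mono, 16-bit PCM."""
    try:
        with wave.open(path, "rb") as wav:
            return not (
                wav.getframerate() == TARGET_RATE
                and wav.getnchannels() == TARGET_CHANNELS
                and wav.getsampwidth() == TARGET_SAMPWIDTH
            )
    except wave.Error:
        # The wave module only reads PCM data; anything else
        # (e.g. float WAV) is reported as needing conversion.
        return True


def files_to_convert(wav_dir: str) -> List[str]:
    """List the .wav files in wav_dir that are not yet in the target format."""
    return sorted(str(p) for p in Path(wav_dir).glob("*.wav") if needs_conversion(str(p)))


if __name__ == "__main__":
    for name in files_to_convert("path/to/original_wav_dir/"):
        print(name)
```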

python convert_to_22050.py --in_path path/to/original_wav_dir/ --out_path path/to/output_wav_dir/

# Cython-version Monotonic Alignment Search
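As background on what this step compiles: Monotonic Alignment Search (MAS), introduced in Glow-TTS and reused by VITS, uses dynamic programming to pick the monotonic assignment of audio frames to text tokens with the highest total score. It runs on every training step, which is why a compiled version is used. The pure-Python sketch below illustrates the recursion on a small matrix; it is not the repository's Cython code, and the input layout `value[i][j]` (score of frame j under token i) is an assumption chosen for readability.

```python
import math
from typing import List


def monotonic_alignment_search(value: List[List[float]]) -> List[List[int]]:
    """Return a 0/1 path maximizing the summed value[i][j] over a monotonic alignment.

    Each frame j is assigned to exactly one token i, token indices never decrease,
    and the path runs from (0, 0) to (t_x - 1, t_y - 1).
    """
    t_x, t_y = len(value), len(value[0])
    if t_x > t_y:
        raise ValueError("need at least as many frames as tokens")
    q = [[-math.inf] * t_y for _ in range(t_x)]
    q[0][0] = value[0][0]
    for j in range(1, t_y):
        for i in range(min(j + 1, t_x)):
            stay = q[i][j - 1]                                # same token as previous frame
            advance = q[i - 1][j - 1] if i > 0 else -math.inf  # move on to the next token
            q[i][j] = value[i][j] + max(stay, advance)
    # Backtrack from the last token at the last frame.
    path = [[0] * t_y for _ in range(t_x)]
    i = t_x - 1
    for j in range(t_y - 1, -1, -1):
        path[i][j] = 1
        # Step back a token when forced (i == j) or when that predecessor scored higher.
        if i > 0 and (i == j or q[i][j - 1] < q[i - 1][j - 1]):
            i -= 1
    return path


if __name__ == "__main__":
    print(monotonic_alignment_search([[1, 1, 0], [0, 0, 5]]))  # [[1, 1, 0], [0, 0, 1]]
```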

cd monotonic_align

mkdir monotonic_align

python setup.py build_ext --inplace

Setting the JSON file in configs:

| Model | Settings in the JSON file (configs) | Sample configuration file |
|---|---|---|
| iSTFT-VITS | "istft_vits": true, "upsample_rates": [8,8] | ljs_istft_vits.json |
| MB-iSTFT-VITS | "subbands": 4, "mb_istft_vits": true, "upsample_rates": [4,4] | ljs_mb_istft_vits.json |
| MS-iSTFT-VITS | "subbands": 4, "ms_istft_vits": true, "upsample_rates": [4,4] | ljs_ms_istft_vits.json |
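Switching a config between the three variants only touches the keys listed in the table. The sketch below applies them to an already-loaded config dict; placing the keys under a "model" section and switching the two unused model flags off are assumptions modeled on the sample ljs_*.json files, and `apply_variant` is a hypothetical helper.

```python
import copy
from typing import Any, Dict

# Per-variant settings taken from the table; assumed to live under "model".
VARIANT_FLAGS: Dict[str, Dict[str, Any]] = {
    "iSTFT-VITS": {"istft_vits": True, "upsample_rates": [8, 8]},
    "MB-iSTFT-VITS": {"subbands": 4, "mb_istft_vits": True, "upsample_rates": [4, 4]},
    "MS-iSTFT-VITS": {"subbands": 4, "ms_istft_vits": True, "upsample_rates": [4, 4]},
}
MODEL_SWITCHES = ("istft_vits", "mb_istft_vits", "ms_istft_vits")


def apply_variant(config: Dict[str, Any], variant: str) -> Dict[str, Any]:
    """Return a copy of `config` with only the chosen variant's switch enabled."""
    new_config = copy.deepcopy(config)
    model = new_config.setdefault("model", {})
    for switch in MODEL_SWITCHES:
        model[switch] = False  # assumption: unused switches should be off
    model.update(copy.deepcopy(VARIANT_FLAGS[variant]))
    return new_config


if __name__ == "__main__":
    print(apply_variant({"model": {"istft_vits": True}}, "MB-iSTFT-VITS")["model"])
```

Returning a deep copy keeps the original config untouched, so the same base file can be turned into several variants.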

For a tutorial example, see configs/tsukuyomi_chan.json.

- If you have done preprocessing, set "cleaned_text" to true.

- Change "training_files" and "validation_files" to the paths of the preprocessed manifest files.

- Select the same "text_cleaners" you used in the preprocessing step.
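These settings can also be applied programmatically. The sketch below assumes the upstream VITS config layout, where "cleaned_text", "training_files", "validation_files", "text_cleaners" (a list) and "n_speakers" all sit under a "data" section; `set_data_fields` is a hypothetical helper, not part of this repository.

```python
import json
from typing import Any, Dict


def set_data_fields(config: Dict[str, Any], train_list: str, val_list: str,
                    cleaners: str, n_speakers: int = 0) -> Dict[str, Any]:
    """Point a config at preprocessed manifests (keys assumed to sit under "data")."""
    data = config.setdefault("data", {})
    data["cleaned_text"] = True         # manifests were already preprocessed
    data["training_files"] = train_list
    data["validation_files"] = val_list
    data["text_cleaners"] = [cleaners]  # same cleaner name as passed to preprocess.py
    data["n_speakers"] = n_speakers     # 0 for a single-speaker dataset
    return config


if __name__ == "__main__":
    cfg = set_data_fields({}, "filelists/filelist_train2.txt.cleaned",
                          "filelists/filelist_val2.txt.cleaned", "japanese_cleaners")
    print(json.dumps(cfg, ensure_ascii=False, indent=2))
```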

# Single speaker

python train_latest.py -c <config> -m <folder>

# Multiple speakers

python train_latest_ms.py -c <config> -m <folder>

To train MB-iSTFT-VITS on the Japanese tutorial corpus, run the following command. Training resumes automatically from the latest checkpoint.

python train_latest.py -c configs/tsukuyomi_chan.json -m tsukuyomi

After training, you can check the inference audio using inference.ipynb.
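Both automatic resumption and inference depend on finding the newest checkpoint. Assuming this fork keeps the upstream VITS conventions (checkpoints saved under ./logs/<folder>/ with generator files named G_<step>.pth), the sketch below picks the file with the highest step. The directory layout and file pattern are assumptions, not confirmed by this README, and `latest_checkpoint` is a hypothetical helper.

```python
import re
from pathlib import Path
from typing import Optional


def latest_checkpoint(model_dir: str, prefix: str = "G") -> Optional[Path]:
    """Return the '<prefix>_<step>.pth' file with the largest step, or None if absent."""
    pattern = re.compile(rf"^{re.escape(prefix)}_(\d+)\.pth$")
    best_step, best_path = -1, None
    for path in Path(model_dir).glob(f"{prefix}_*.pth"):
        match = pattern.match(path.name)
        # Compare steps numerically so that G_10000 beats G_9000.
        if match and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), path
    return best_path


if __name__ == "__main__":
    print(latest_checkpoint("logs/tsukuyomi"))
```

Sorting by the parsed step number rather than by file name avoids the lexicographic trap where G_9000.pth would sort after G_10000.pth.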