This repo reproduces, in PyTorch, the results for the continuous control domains presented in the paper "Learning Multi-Level Hierarchies with Hindsight" (ICLR 2019). The original TensorFlow repo is at https://github.com/andrew-j-levy/Hierarchical-Actor-Critc-HAC-. This repo is inspired by https://github.com/nikhilbarhate99/Hierarchical-Actor-Critic-HAC-PyTorch. The difference is that this repo uses the domains from the original paper (Ant-Four-Rooms, Ant-Reacher, UR5-Reacher, Inverted-Pendulum), whereas the other repo uses two simpler custom domains, one of which is also included here.

- Install

pip3 install -e .

- You will need MuJoCo to run

- Train

python3 run_hac.py --n_layers 2 --env hac-inverted-pendulum-v0 --retrain --timesteps 2000000 --seed 0 --group 2-level

- Test

python3 run_hac.py --n_layers 2 --env hac-inverted-pendulum-v0 --test --show --timesteps 2000000 --seed 0 --group 2-level

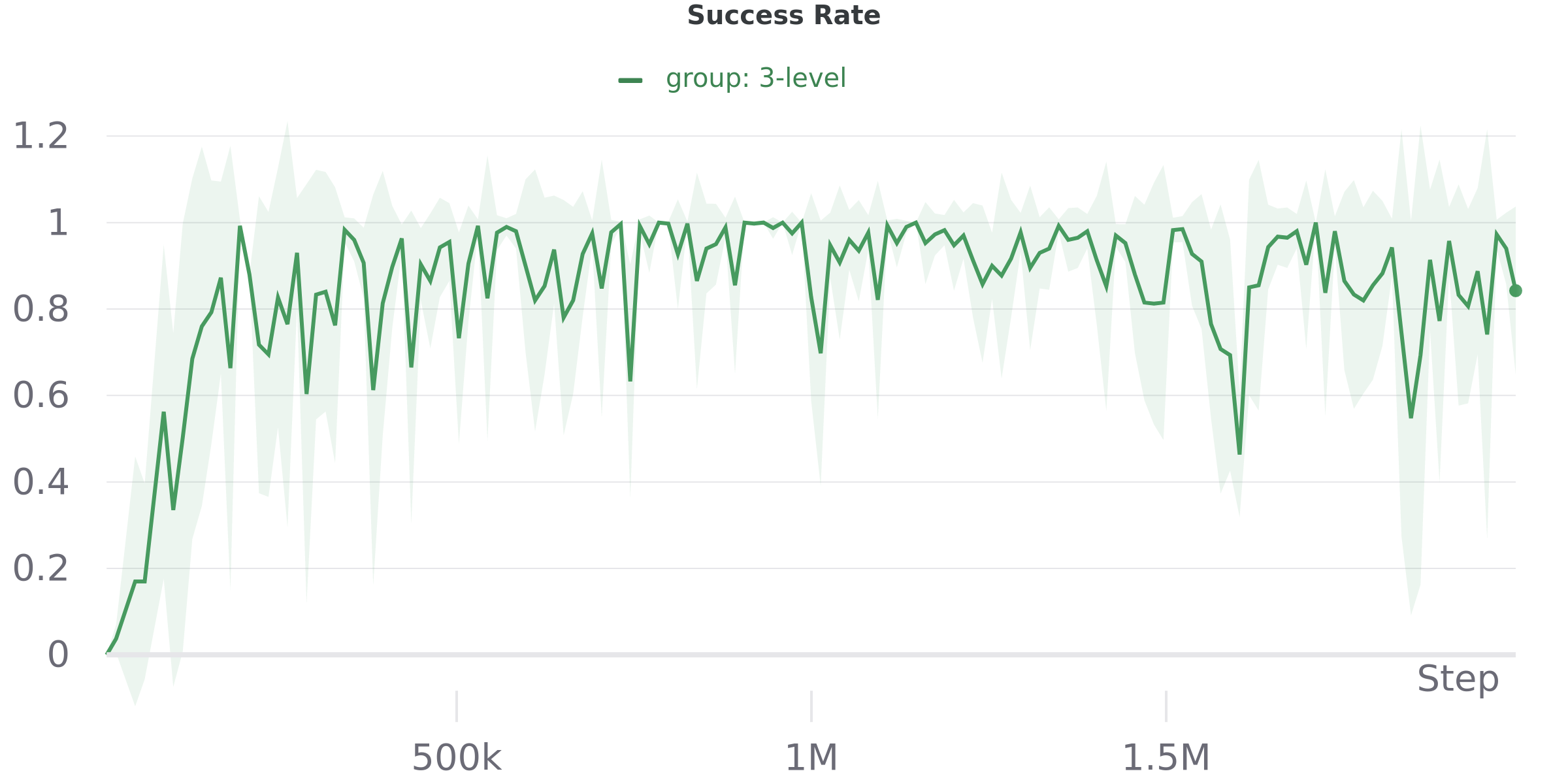

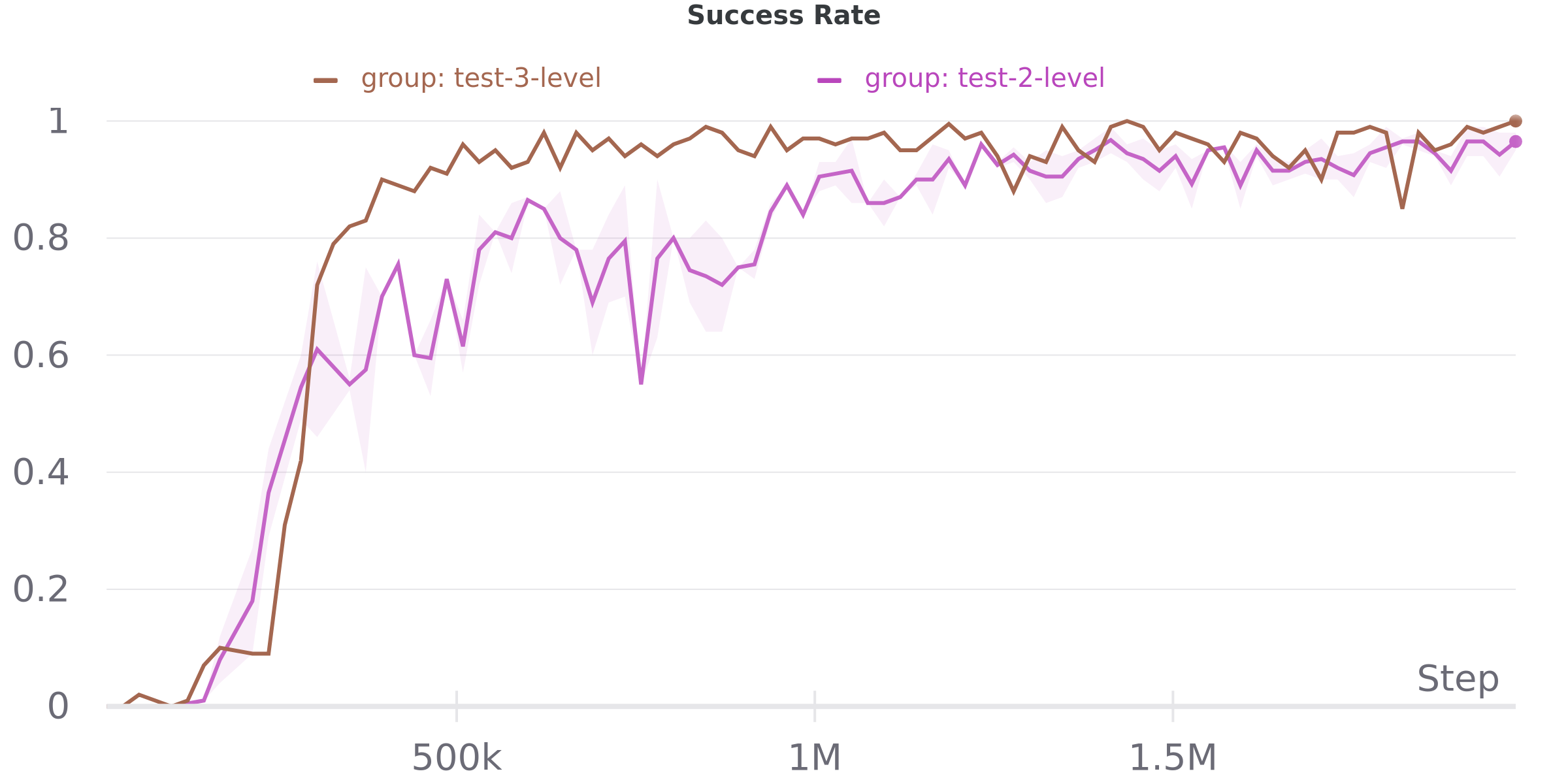

- Inverted-Pendulum (3 levels)

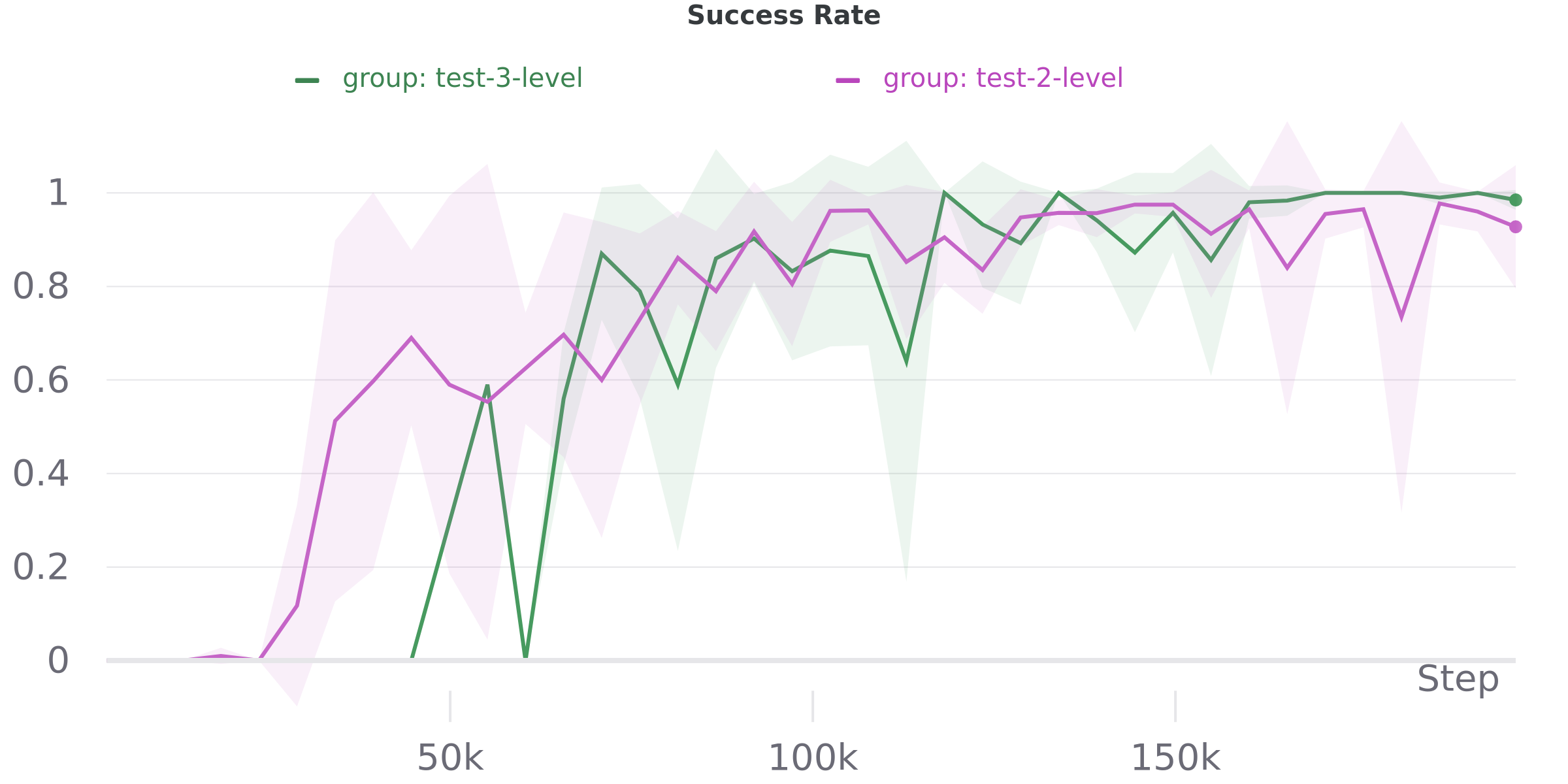

- Inverted-Pendulum

- Mountain-Car

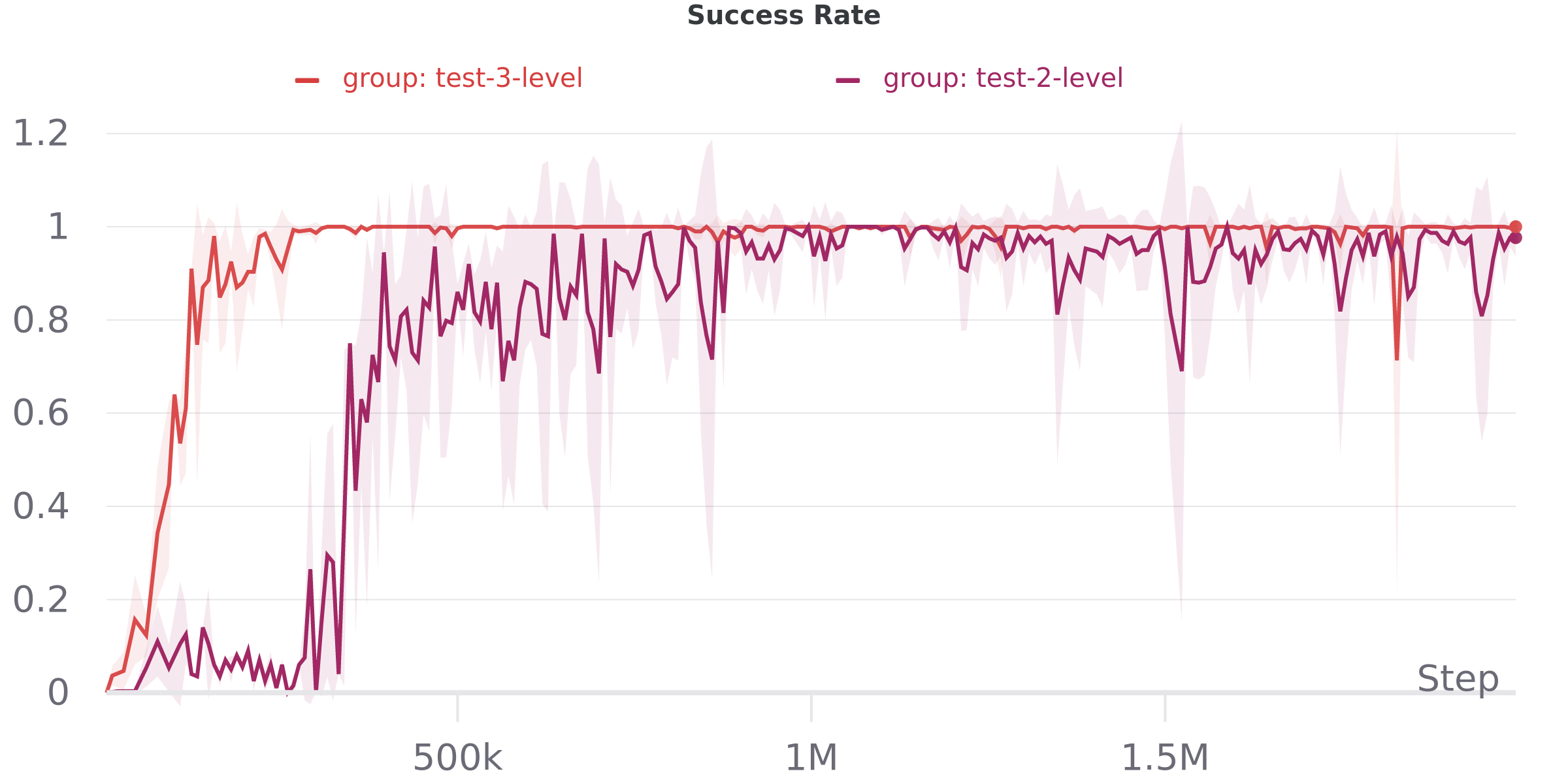

- UR5-Reacher

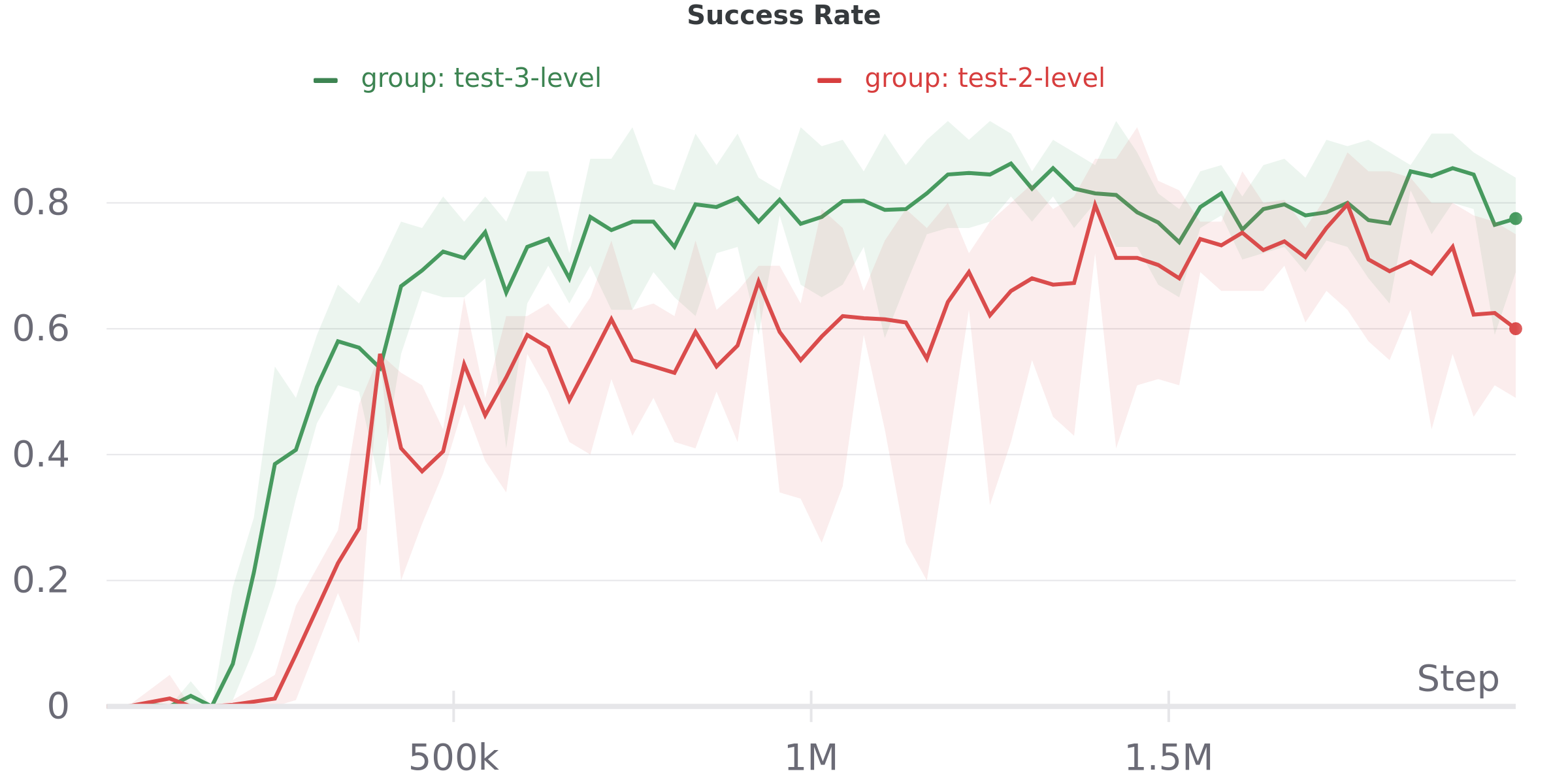

- Ant-Four-Rooms

- Ant-Reacher

To replay a saved policy, create the folder results/logs/hac/hac-ant-reacher-v0/2-levels and copy a saved policy into it, e.g., the whole 0/ folder from saved_policies/ant-reacher-2-levels. Then run the following command (the seed value must match the name of the copied folder):

python3 run_hac.py --n_layers 2 --env hac-ant-reacher-v0 --test --show --timesteps 2000000 --seed 0 --group 2-level
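The folder setup that precedes the replay command can also be scripted. The following is a minimal sketch, not part of this repo: the helper name `stage_saved_policy` and its parameters are hypothetical, and the directory layout (saved_policies/<policy name>/<seed>/ copied to results/logs/hac/<env>/<group folder>/<seed>/) is assumed from the description above.

```python
import shutil
from pathlib import Path


def stage_saved_policy(repo_root, policy_name, env_id, group_dir, seed):
    """Copy a saved policy folder into the results tree (hypothetical helper).

    Assumed layout, taken from the README text:
      source: <repo_root>/saved_policies/<policy_name>/<seed>/
      target: <repo_root>/results/logs/hac/<env_id>/<group_dir>/<seed>/
    """
    repo_root = Path(repo_root)
    src = repo_root / "saved_policies" / policy_name / str(seed)
    dst = repo_root / "results" / "logs" / "hac" / env_id / group_dir / str(seed)

    if not src.is_dir():
        raise FileNotFoundError(f"no saved policy folder at {src}")

    # Create results/logs/hac/<env_id>/<group_dir> if it does not exist yet.
    dst.parent.mkdir(parents=True, exist_ok=True)

    # Copy the whole seed folder; the target keeps the seed as its name.
    # dirs_exist_ok requires Python 3.8 or newer.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    return dst
```

For the Ant-Reacher example, this would be called from the repo root as `stage_saved_policy(".", "ant-reacher-2-levels", "hac-ant-reacher-v0", "2-levels", 0)` before running the replay command with `--seed 0`.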