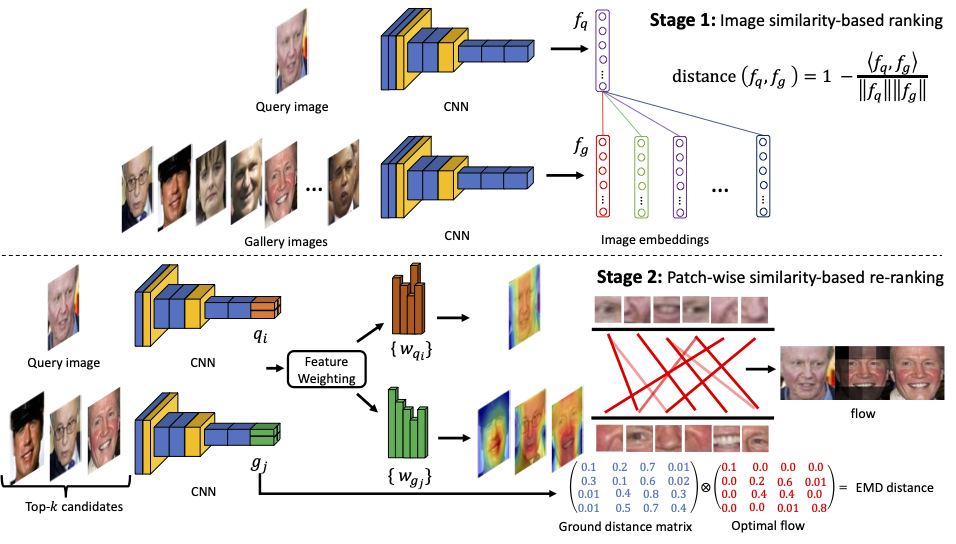

DeepFace-EMD: Re-ranking Using Patch-wise Earth Mover’s Distance Improves Out-Of-Distribution Face Identification

Official Implementation for the paper DeepFace-EMD: Re-ranking Using Patch-wise Earth Mover’s Distance Improves Out-Of-Distribution Face Identification (2021) by Hai Phan and Anh Nguyen.

If you use this software, please consider citing:

@article{hai2021deepface,
  title={DeepFace-EMD: Re-ranking Using Patch-wise Earth Mover’s Distance Improves Out-Of-Distribution Face Identification},
  author={Hai Phan and Anh Nguyen},
  journal={arXiv preprint arXiv:2112.04016},
  year={2021}
}

Python >= 3.5

PyTorch > 1.0

OpenCV >= 3.4.4

pip install tqdm

- Download LFW, out-of-distribution (OOD) LFW test sets, and pretrained models: Google Drive

- Create the following folders:

mkdir data

mkdir pretrained

- Extract the LFW datasets (e.g. lfw_crop_96x112.tar.gz) to data/

- Copy the models (e.g. resnet18_110.pth) to pretrained/
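The folder setup above can also be scripted. The following is a minimal sketch, assuming the archives and model files have already been downloaded to local paths; `prepare_workspace` is a hypothetical helper name and is not part of this repository.

```python
import shutil
import tarfile
from pathlib import Path


def prepare_workspace(root, dataset_archives=(), model_files=()):
    """Create data/ and pretrained/ under root, unpack archives, copy models.

    Hypothetical helper for illustration; the README itself uses mkdir
    plus manual extraction and copying.
    """
    root = Path(root)
    data_dir = root / "data"
    pretrained_dir = root / "pretrained"
    data_dir.mkdir(parents=True, exist_ok=True)
    pretrained_dir.mkdir(parents=True, exist_ok=True)

    for archive in dataset_archives:
        # tarfile.open detects gzip compression automatically.
        with tarfile.open(archive) as tar:
            tar.extractall(data_dir)

    for model in model_files:
        # Keep the original file name, e.g. resnet18_110.pth.
        shutil.copy2(model, pretrained_dir / Path(model).name)

    return data_dir, pretrained_dir
```

For example, `prepare_workspace(".", ["lfw_crop_96x112.tar.gz"], ["resnet18_110.pth"])` would unpack the archive into data/ and place the model in pretrained/, provided both files sit in the current directory.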

- Run testing on LFW images

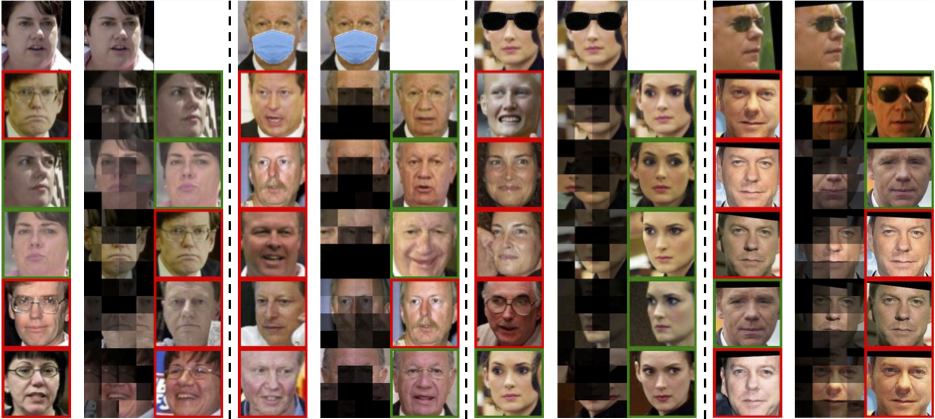

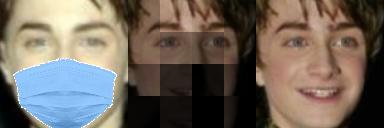

-mask, -sunglass, -crop: flags for using the corresponding OOD query images (i.e., faces with masks, faces with sunglasses, or randomly cropped images).

bash run_test.sh

- Run demo: The demo shows the top-5 images retrieved in stage 1 and stage 2 (including a flow visualization of EMD).

- -mask: image retrieval using a masked-face query image given a gallery of normal LFW images.

- -sunglass and -crop: similar to the setup of -mask.

- The results will be saved in the results/demo directory.

bash run_demo.sh

- Run retrieval using the full LFW gallery

- Set the argument args.data_folder to data in the .sh files.

- Make sure the lfw-align-128 and lfw-align-128-crop70 datasets are in the data/ directory (e.g. data/lfw-align-128-crop70) and the ArcFace [2] model resnet18_110.pth is in the pretrained/ directory (e.g. pretrained/resnet18_110.pth). Run the following commands to reproduce the Table 1 results in our paper.

- Arguments:

- Methods can be apc, uniform, or sc.

- -l: 4 or 8 for 4x4 and 8x8, respectively.

- -a: alpha parameter mentioned in the paper.

- Normal LFW with 1680 classes:

python test_face.py -method apc -fm arcface -d lfw_1680 -a -1 -data_folder data -l 4

- LFW-crop:

python test_face.py -method apc -fm arcface -d lfw -a 0.7 -data_folder data -l 4 -crop

- Note: The full LFW dataset has 5,749 people and a total of 13,233 images; however, only 1,680 people have two or more images (see LFW for details). In our normal LFW dataset, identical images are not considered in face identification. So, the difference between lfw and lfw_1680 is that the lfw setup uses the full LFW (including people with a single image), whereas the lfw_1680 setup uses only the 1,680 people who have two or more images.
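The distinction in the note can be made concrete with a small counting sketch. It assumes only that you have one identity label per image; `split_by_image_count` is a hypothetical name, and this is not the loader used by test_face.py.

```python
from collections import Counter


def split_by_image_count(labels, min_images=2):
    """Return per-identity image counts and the identities with >= min_images.

    labels: one identity name per image, e.g. read from an image list file.
    """
    counts = Counter(labels)
    multi_image_ids = {name for name, n in counts.items() if n >= min_images}
    return counts, multi_image_ids


# Toy example: three identities, one of which has a single image.
labels = ["A", "A", "B", "C", "C", "C"]
counts, multi = split_by_image_count(labels)
print(len(counts), "identities in total")          # analogous to the lfw setup
print(len(multi), "identities with >= 2 images")   # analogous to lfw_1680
```

Applied to the full LFW image list, the two numbers would correspond to the 5,749 and 1,680 figures quoted in the note.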

- For other OOD datasets, run the following commands:

- LFW-mask:

python test_face.py -method apc -fm arcface -d lfw -a 0.7 -data_folder data -l 4 -mask

- LFW-sunglass:

python test_face.py -method apc -fm arcface -d lfw -a 0.7 -data_folder data -l 4 -sunglass
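When running several of these configurations in a row, it can help to assemble the command lines programmatically. The sketch below only builds argument lists from the flags shown in this README; `build_test_command` is a hypothetical helper, and the defaults simply mirror the example commands.

```python
import shlex

OOD_FLAGS = ("mask", "sunglass", "crop")


def build_test_command(dataset="lfw", alpha=0.7, grid=4, ood_flag=None,
                       method="apc", face_model="arcface", data_folder="data"):
    """Build the argument list for one test_face.py run (hypothetical helper)."""
    cmd = ["python", "test_face.py",
           "-method", method,
           "-fm", face_model,
           "-d", dataset,
           "-a", str(alpha),
           "-data_folder", data_folder,
           "-l", str(grid)]
    if ood_flag is not None:
        if ood_flag not in OOD_FLAGS:
            raise ValueError("ood_flag must be one of %s" % (OOD_FLAGS,))
        cmd.append("-" + ood_flag)
    return cmd


# Print the normal LFW command followed by the three OOD variants.
print(shlex.join(build_test_command(dataset="lfw_1680", alpha=-1)))
for flag in OOD_FLAGS:
    print(shlex.join(build_test_command(ood_flag=flag)))
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root to launch the run.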

python visualize_faces.py -method [methods] -fm [face models] -model_path [model dir] -in1 [1st image] -in2 [2nd image] -weight [1/0: showing weight heatmaps]

The results are in results/flow and results/heatmap (if -weight flag is on).
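To give a rough idea of what a flow between two face images represents, here is a minimal NumPy sketch of an optimal-transport flow between two sets of patch embeddings. It is an illustration under assumptions, not the code in visualize_faces.py: patch weights are assumed uniform (the apc and sc weightings are not covered), the cost is one minus cosine similarity, and the EMD is approximated with entropy-regularized Sinkhorn iterations. The function names and default parameters are hypothetical.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row of x to (approximately) unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def patch_emd(patches_a, patches_b, reg=0.05, n_iters=200):
    """Approximate EMD and flow between two sets of patch embeddings.

    patches_a: (n, d) array, patches_b: (m, d) array.
    Returns (distance, flow), where flow[i, j] is the mass moved from
    patch i of the first image to patch j of the second.
    Assumption: uniform patch weights and a Sinkhorn approximation.
    """
    a_feat = l2_normalize(np.asarray(patches_a, dtype=float))
    b_feat = l2_normalize(np.asarray(patches_b, dtype=float))
    cost = 1.0 - a_feat @ b_feat.T          # cosine distance per patch pair

    n, m = cost.shape
    a = np.full(n, 1.0 / n)                 # uniform weights, first image
    b = np.full(m, 1.0 / m)                 # uniform weights, second image
    kernel = np.exp(-cost / reg)

    u = np.ones(n)
    for _ in range(n_iters):
        # Alternately rescale to match column and row marginals.
        v = b / (kernel.T @ u)
        u = a / (kernel @ v)

    flow = u[:, None] * kernel * v[None, :]
    distance = float((flow * cost).sum())
    return distance, flow


# Toy example: the second image contains the same patches in another order.
first = np.eye(4)
second = np.eye(4)[[2, 0, 3, 1]]
distance, flow = patch_emd(first, second)
best_match = flow.argmax(axis=1)            # strongest flow target per patch
```

In this toy case the distance is close to zero and `best_match` recovers the permutation, which is the kind of patch-to-patch correspondence a flow visualization draws.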

- Facial alignment. See align_face.py for details.

- Install face_alignment to extract landmarks.

pip install scikit-image

pip install face-alignment

- For face alignment with a size of 160x160 for ArcFace (128x128) and FaceNet (160x160), the reference points are as follows (see the function alignment in align_face.py).

ref_pts = [ [61.4356, 54.6963],[118.5318, 54.6963], [93.5252, 90.7366],[68.5493, 122.3655],[110.7299, 122.3641]]

crop_size = (160, 160)

- Create a folder containing all persons (one subfolder per person, named after the person) and put it in '/data'.

- Create a txt file for that folder with the format [image_path],[label] (see the lfw file for details).

- Modify the face loader: add your txt file in the function get_face_dataloader.
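The txt file from the steps above can be generated from the folder layout. This is a minimal sketch, assuming one subfolder per person and integer labels assigned in sorted folder order; the exact label convention should be checked against the lfw file in the repository, and `write_label_file` is a hypothetical helper.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def write_label_file(dataset_dir, out_path):
    """Write one "[image_path],[label]" line per image under dataset_dir.

    Assumption: each subfolder is one person, and the label is the index
    of that subfolder in sorted order.
    Returns (number of persons, number of images written).
    """
    dataset_dir = Path(dataset_dir)
    persons = sorted(p for p in dataset_dir.iterdir() if p.is_dir())

    lines = []
    for label, person_dir in enumerate(persons):
        for image in sorted(person_dir.iterdir()):
            # Skip anything that is not an image file.
            if image.suffix.lower() in IMAGE_EXTENSIONS:
                lines.append("%s,%d" % (image.as_posix(), label))

    Path(out_path).write_text("\n".join(lines) + ("\n" if lines else ""))
    return len(persons), len(lines)
```

Calling `write_label_file("data/my_faces", "data/my_faces.txt")` (both paths are placeholders) would produce a file that can then be registered in get_face_dataloader.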

MIT

- [1] W. Zhao, Y. Rao, Z. Wang, J. Lu, J. Zhou. Towards interpretable deep metric learning with structural matching, ICCV 2021. DIML

- [2] J. Deng, J. Guo, N. Xue, S. Zafeiriou. ArcFace: Additive angular margin loss for deep face recognition, CVPR 2019. Arcface Pytorch

- [3] H. Wang, Y. Wang, Z. Zhou, X. Ji, D. Gong, J. Zhou, Z. Li, W. Liu. CosFace: Large margin cosine loss for deep face recognition, CVPR 2018. CosFace Pytorch

- [4] F. Schroff, D. Kalenichenko, J. Philbin. FaceNet: A unified embedding for face recognition and clustering, CVPR 2015. FaceNet Pytorch

- [5] W. Liu, Y. Wen, Z. Yu, M. Li, B. Raj, L. Song. SphereFace: Deep hypersphere embedding for face recognition, CVPR 2017. sphereface, sphereface pytorch

- [6] C. Zhang, Y. Cai, G. Lin, C. Shen. DeepEMD: Differentiable earth mover’s distance for few-shot learning, CVPR 2020. paper