Build a fully integrated pipeline to train your machine learning models with Tensorflow and Kubernetes.

This repo will guide you through:

- setting up a local environment with python, pip and tensorflow

- packaging up your models as Docker containers

- creating and configuring a Kubernetes cluster

- deploying models in your cluster

- scaling your training with distributed Tensorflow

- serving your model

- tuning your model using hyperparameter optimisation

You should have the following tools installed:

- minikube

- kubectl

- ksonnet

- python 2.7

- pip

- sed

- an account on Docker Hub

- an account on GCP

- gcloud (the Google Cloud SDK command-line tool)

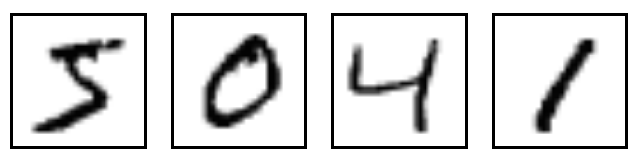

MNIST is a simple computer vision dataset. It consists of images of handwritten digits like these:

It also includes labels for each image, telling us which digit it is. For example, the labels for the above images are 5, 0, 4, and 1.

In this tutorial, you're going to train a model to look at images and predict what digits they are.

If you plan to train your model using distributed Tensorflow, keep the following in mind:

- you should use the Estimator API where possible.

- distributed Tensorflow works only with tf.estimator.train_and_evaluate. If you call the Estimator's train and evaluate methods separately, it won't work.

- you should save your model with export_savedmodel so that Tensorflow Serving can serve it.

- you should use tf.estimator.RunConfig to read the configuration from the environment. The Tensorflow operator in Kubeflow automatically populates the environment variables consumed by that class.
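tf.estimator.RunConfig picks up the cluster layout from the TF_CONFIG environment variable: a JSON document with a "cluster" map (task type to a list of host:port addresses) and a "task" entry naming the current process. The sketch below is a simplified, hypothetical illustration of that parsing in plain Python, not RunConfig's actual code, and the host names in the example are made up. In the training script itself you would just pass tf.estimator.RunConfig() to your Estimator and hand it to train_and_evaluate.

```python
import json
import os


def describe_task(env=None):
    """Return (task_type, task_index, cluster) as described by TF_CONFIG.

    Simplified, hypothetical illustration of what tf.estimator.RunConfig
    reads from the environment; not RunConfig's real implementation.
    """
    env = os.environ if env is None else env
    # TF_CONFIG holds JSON with a "cluster" map and a "task" entry.
    config = json.loads(env.get("TF_CONFIG") or "{}")
    cluster = config.get("cluster", {})
    task = config.get("task", {})
    # Without TF_CONFIG there is no cluster: the process trains on its own.
    return task.get("type"), task.get("index", 0), cluster


if __name__ == "__main__":
    # Made-up addresses, similar in shape to what the operator injects.
    example = {"TF_CONFIG": json.dumps({
        "cluster": {
            "master": ["mnist-master-0:2222"],
            "worker": ["mnist-worker-0:2222", "mnist-worker-1:2222"],
            "ps": ["mnist-ps-0:2222"],
        },
        "task": {"type": "worker", "index": 1},
    })}
    task_type, task_index, cluster = describe_task(example)
    print("%s %d of %d workers" % (task_type, task_index, len(cluster["worker"])))
```

Every replica runs the same image; only TF_CONFIG differs, which is how one training script can act as master, worker or parameter server.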

You can create a virtual environment for python with:

virtualenv --system-site-packages --python /usr/bin/python src

Please note that you may have to customise the path to your python binary.

You can activate the virtual environment with:

cd src

source bin/activate

You should install the dependencies with:

pip install -r requirements.txt

You can test that the script works as expected with:

python main.py

You can package your application in a Docker image with:

cd src

docker build -t learnk8s/mnist:1.0.0 .

Please note that you may want to tag the image with your Docker Hub username instead of learnk8s.

You can test the Docker image with:

docker run -ti learnk8s/mnist:1.0.0

You can upload the Docker image to the Docker Hub registry with:

docker push learnk8s/mnist:1.0.0

You can train your models in the cloud or locally.

You can create a local Kubernetes cluster with minikube:

minikube start --cpus 4 --memory 8096 --disk-size=40g

Once your cluster is ready, you can install Kubeflow.

You can create a ksonnet application and download the Kubeflow packages with:

ks init my-kubeflow

cd my-kubeflow

ks registry add kubeflow github.com/kubeflow/kubeflow/tree/v0.1.2/kubeflow

ks pkg install kubeflow/core@v0.1.2

ks pkg install kubeflow/tf-serving@v0.1.2

ks pkg install kubeflow/tf-job@v0.1.2

You can generate a component from a ksonnet prototype with:

ks generate core kubeflow-core --name=kubeflow-core

Create a separate namespace for Kubeflow:

kubectl create namespace kubeflow

Point the default ksonnet environment at the kubeflow namespace with:

ks env set default --namespace kubeflow

Deploy Kubeflow with:

ks apply default -c kubeflow-core

To use distributed Tensorflow, the Tensorflow master and the parameter servers have to share a filesystem.

You can create an NFS server with:

kubectl create -f kube/nfs-minikube.yaml

Make a note of the IP address of the NFS server's service with:

kubectl get svc nfs-server

In kube/pvc-minikube.yaml, replace nfs-server.default.svc.cluster.local with the IP address of the service.

The change is necessary since kube-dns is not configured correctly in the VM and the kubelet can't resolve the domain name.
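If you prefer not to edit the file by hand, the substitution can be scripted. This is a minimal sketch, assuming the hostname appears verbatim in kube/pvc-minikube.yaml; the function names, the sample manifest excerpt and the sample IP address are hypothetical.

```python
import re

# Hostname that kube/pvc-minikube.yaml refers to, as described above.
NFS_HOSTNAME = "nfs-server.default.svc.cluster.local"
IPV4 = re.compile(r"^\d{1,3}(\.\d{1,3}){3}$")


def pin_nfs_server(manifest, ip, hostname=NFS_HOSTNAME):
    """Return the manifest text with the NFS hostname replaced by ip."""
    # Reject obvious typos before they end up in the volume definition.
    if not IPV4.match(ip):
        raise ValueError("not an IPv4 address: %r" % ip)
    return manifest.replace(hostname, ip)


def pin_nfs_server_file(path, ip):
    """Rewrite the manifest at path in place, like sed -i would."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(pin_nfs_server(text, ip))


if __name__ == "__main__":
    # Hypothetical excerpt and IP; the real manifest may look different.
    sample = "nfs:\n  server: nfs-server.default.svc.cluster.local\n  path: /\n"
    print(pin_nfs_server(sample, "10.96.0.15"))
```

In practice you would call pin_nfs_server_file("kube/pvc-minikube.yaml", ip) with the CLUSTER-IP printed by kubectl get svc nfs-server.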

Create the volume with:

kubectl create -f kube/pvc-minikube.yaml

To train in the cloud instead, create a cluster on GKE with:

gcloud container clusters create distributed-tf --machine-type=n1-standard-8 --num-nodes=3

You can obtain the credentials for kubectl with:

gcloud container clusters get-credentials distributed-tf

Give yourself admin permissions so that you can install Kubeflow:

kubectl create clusterrolebinding default-admin --clusterrole=cluster-admin --user=$(gcloud config get-value account)

You can create a ksonnet application and download the Kubeflow packages with:

ks init my-kubeflow

cd my-kubeflow

ks registry add kubeflow github.com/kubeflow/kubeflow/tree/v0.1.2/kubeflow

ks pkg install kubeflow/core@v0.1.2

ks pkg install kubeflow/tf-serving@v0.1.2

ks pkg install kubeflow/tf-job@v0.1.2

You can generate a component from a ksonnet prototype with:

ks generate core kubeflow-core --name=kubeflow-core

Create a separate namespace for Kubeflow:

kubectl create namespace kubeflow

Point the default ksonnet environment at the kubeflow namespace with:

ks env set default --namespace kubeflow

Configure Kubeflow to run on Google Cloud Platform:

ks param set kubeflow-core cloud gcp

Deploy Kubeflow with:

ks apply default -c kubeflow-core

To use distributed Tensorflow, the Tensorflow master and the parameter servers have to share a filesystem.

Create a Google Compute Engine persistent disk:

gcloud compute disks create --size=10GB gce-nfs-disk

You can create an NFS server with:

kubectl create -f kube/nfs-gke.yaml

Create an NFS volume with:

kubectl create -f kube/pvc-gke.yaml

Whether your cluster runs on minikube or GKE, you can now submit a job to Kubernetes to run your Docker container with:

kubectl create -f kube/job.yaml

Please note that you may want to change the image in kube/job.yaml to the one you pushed to Docker Hub.

The job runs a single container and doesn't scale.

However, it is still more convenient than running it on your computer.
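For reference, a single-container Job is a short manifest. The sketch below builds a minimal batch/v1 Job as a Python dict and prints it as JSON, which kubectl create -f also accepts. It is an illustration under assumptions: the Job name, the backoffLimit value and the restart policy are arbitrary choices, and the repo's kube/job.yaml may differ.

```python
import json


def single_container_job(name, image):
    """Build a minimal batch/v1 Job that runs one training container."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Retry a failed training run up to three times.
            "backoffLimit": 3,
            "template": {
                "spec": {
                    "containers": [{"name": name, "image": image}],
                    # Jobs accept only Never or OnFailure here.
                    "restartPolicy": "Never",
                }
            },
        },
    }


if __name__ == "__main__":
    job = single_container_job("mnist", "learnk8s/mnist:1.0.0")
    print(json.dumps(job, indent=2))
```

Nothing in the spec asks for more than one container or describes how processes should cooperate, which is why this kind of job can't spread the training across the cluster.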

You can run a distributed Tensorflow job on your NFS filesystem with:

kubectl create -f kube/tfjob.yaml

The results are stored in the NFS volume.
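A TFJob differs from a plain Job in that it describes several replica types that share one volume. The sketch below assumes the kubeflow.org/v1alpha1 TFJob schema used by Kubeflow v0.1.x (a replicaSpecs list with MASTER, WORKER and PS entries, and a container named tensorflow). The claim name "nfs", the mount path /data and the replica counts are hypothetical, so compare the output with kube/tfjob.yaml before relying on it.

```python
import json


def replica(kind, count, image, claim):
    """One entry of spec.replicaSpecs for a v1alpha1 TFJob (assumed schema)."""
    return {
        "replicas": count,
        "tfReplicaType": kind,  # MASTER, WORKER or PS
        "template": {
            "spec": {
                "containers": [{
                    # The operator looks for a container named "tensorflow".
                    "name": "tensorflow",
                    "image": image,
                    # Every replica mounts the same NFS-backed claim.
                    "volumeMounts": [{"name": "shared", "mountPath": "/data"}],
                }],
                "volumes": [{
                    "name": "shared",
                    "persistentVolumeClaim": {"claimName": claim},
                }],
                "restartPolicy": "OnFailure",
            }
        },
    }


def tfjob(name, image, claim, workers=2, ps=1):
    """Build a TFJob with one master, some workers and some parameter servers."""
    return {
        "apiVersion": "kubeflow.org/v1alpha1",
        "kind": "TFJob",
        "metadata": {"name": name},
        "spec": {
            "replicaSpecs": [
                replica("MASTER", 1, image, claim),
                replica("WORKER", workers, image, claim),
                replica("PS", ps, image, claim),
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(tfjob("mnist", "learnk8s/mnist:1.0.0", "nfs"), indent=2))
```

Scaling the training then means changing the workers and ps counts, while the operator fills in TF_CONFIG for each replica.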

You can visualise the details of your distributed Tensorflow job with Tensorboard.

You can deploy Tensorboard with:

kubectl create -f kube/tensorboard.yaml

Retrieve the name of the Tensorboard Pod with:

kubectl get pods -l app=tensorboard

You can forward the traffic from the Pod on your cluster to your computer with:

kubectl port-forward tensorboard-XX-ID-XX 8080:6006

Replace tensorboard-XX-ID-XX with the name of the Pod. Please note that you should probably use an Ingress manifest to expose your service to the public permanently.

You can visit the dashboard at http://localhost:8080.
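Looking up the Pod name and copying it into the port-forward command can be automated. This is a minimal sketch, assuming kubectl is on your PATH and already pointed at the cluster; first_pod_name and port_forward_command are hypothetical helper names. It relies only on kubectl get pods -l ... -o json, whose output is a list with an "items" array.

```python
import json
import subprocess


def first_pod_name(pods_json):
    """Return the name of the first pod in `kubectl get pods -o json` output."""
    items = json.loads(pods_json).get("items", [])
    if not items:
        raise LookupError("no pods matched the selector")
    return items[0]["metadata"]["name"]


def port_forward_command(selector, local_port, remote_port):
    """Find a pod by label and build the matching port-forward command."""
    out = subprocess.check_output(
        ["kubectl", "get", "pods", "-l", selector, "-o", "json"])
    name = first_pod_name(out.decode("utf-8"))
    return ["kubectl", "port-forward", name, "%d:%d" % (local_port, remote_port)]
```

For example, subprocess.call(port_forward_command("app=tensorboard", 8080, 6006)) forwards Tensorboard, and the same call with "app=tf-serving" and 9000 works for Tensorflow Serving.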

You can serve your model with Tensorflow Serving.

You can create a Tensorflow Serving server with:

kubectl create -f kube/serving.yaml

Retrieve the name of the Tensorflow Serving Pod with:

kubectl get pods -l app=tf-serving

You can forward the traffic from the Tensorflow Serving Pod on your cluster to your computer with:

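kubectl port-forward tf-serving-XX-ID-XX 8080:9000

Replace tf-serving-XX-ID-XX with the name of the Pod. If the Tensorboard port-forward is still running, stop it first, since both use local port 8080. Please note that you should probably use an Ingress manifest to expose your service to the public permanently.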

You can query the model using the client:

cd src

python client.py --host localhost --port 8080 --image ../data/4.png --signature_name predict --model test

Please make sure your virtualenv is still active.

The model should recognise the digit 4.

The model can be tuned with the following parameters:

- the learning rate

- the number of hidden layers in the neural network

You could submit a set of jobs to investigate the different combinations of parameters.

The templated folder contains a tf-templated.yaml file with placeholders for the variables.

The run.sh script interpolates the values and submits the TFJobs to the cluster.
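The same idea can be sketched in Python: take every combination of learning rate and number of hidden layers and fill a template once per combination. This is a hypothetical stand-in for run.sh, not its actual logic; the placeholder names, the trimmed-down template and the --learning_rate and --hidden_layers flags are assumptions, so match them to templated/tf-templated.yaml and main.py.

```python
import itertools
from string import Template

# Hypothetical, trimmed-down template: the real tf-templated.yaml is a full
# TFJob and may use different placeholder names and training flags.
TEMPLATE = Template(
    "metadata:\n"
    "  name: $name\n"
    'args: ["--learning_rate=$learning_rate", "--hidden_layers=$hidden_layers"]\n'
)


def job_name(learning_rate, hidden_layers):
    """Build a resource name from lowercase letters, digits and dashes."""
    # Dots are replaced so the name stays valid for the Services TFJob creates.
    return "mnist-lr-%s-h%d" % (str(learning_rate).replace(".", "-"), hidden_layers)


def render_jobs(learning_rates, hidden_layers):
    """Return one rendered manifest per (learning rate, hidden layers) pair."""
    manifests = []
    for lr, layers in itertools.product(learning_rates, hidden_layers):
        manifests.append(TEMPLATE.substitute(
            name=job_name(lr, layers), learning_rate=lr, hidden_layers=layers))
    return manifests


if __name__ == "__main__":
    for manifest in render_jobs([0.01, 0.001], [1, 2]):
        print(manifest)
```

Each rendered manifest would be saved to a file or piped to kubectl create -f -. Giving every job a distinct name lets the runs appear side by side in Tensorboard.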

Before you run the jobs, make sure you have your Tensorboard running locally:

kubectl port-forward tensorboard-XX-ID-XX 8080:6006

You can launch the jobs with:

cd templated

./run.sh

You can follow the progress of the training in real time at http://localhost:8080.

You should probably expose services such as Tensorboard and Tensorflow Serving with an Ingress manifest rather than with kubectl port-forward.

The NFS volume is running on a single instance and isn't highly available. Having a single node for your storage may work if you run small workloads, but you should probably investigate Ceph, GlusterFS or rook.io as a way to manage distributed storage.

You should consider using Helm instead of crafting your own scripts to interpolate yaml files.