⏲️ Join the ZenML team on the MLOps Day

We are hosting an MLOps Day where we'll be building a vendor-agnostic MLOps pipeline from scratch.

Sign up here to join the entire ZenML team in showcasing the latest release, answering the community's questions, and live-coding vendor-agnostic MLOps features with the ZenML framework!

👀 What is ZenML?

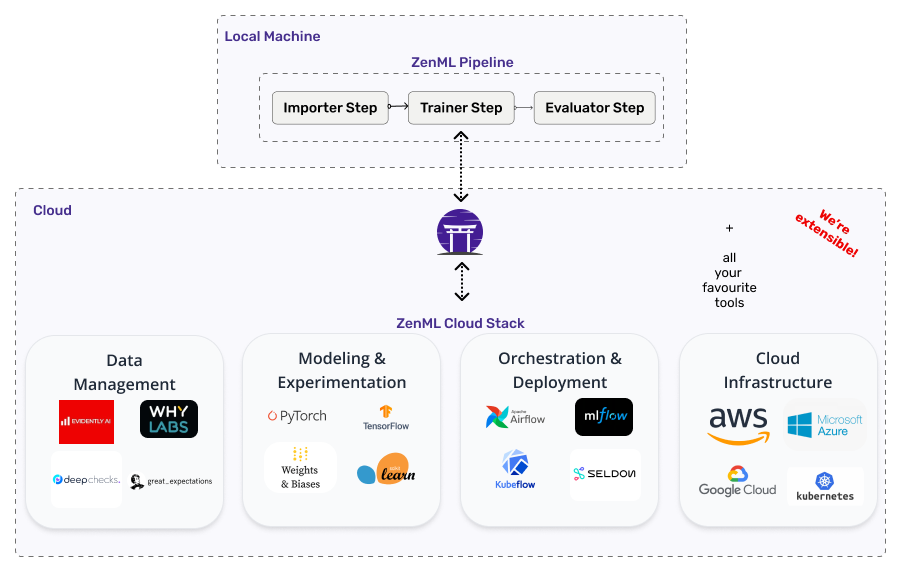

ZenML is an extensible, open-source MLOps framework for creating portable, production-ready MLOps pipelines. Built to enable collaboration among data scientists, ML Engineers, and MLOps Developers, it has a simple, flexible syntax, is cloud- and tool-agnostic, and has interfaces/abstractions that are thoughtfully designed for ML workflows.

At its core, ZenML pipelines execute ML-specific workflows from sourcing data to splitting, preprocessing, training, all the way to serving and monitoring ML models in production. There are many built-in features to support common ML development tasks. ZenML is not here to replace the great tools that solve these individual problems. Rather, it offers an extensible framework and a standard abstraction to write and build your workflows.

🎉 Version 0.8.1 out now! Check out the release notes here.

🤖 Why use ZenML?

ZenML pipelines are designed to be written early in the development lifecycle. Data scientists can explore their pipelines as they develop towards production, switching stacks from local to cloud deployments with ease. You can read more about why we started building ZenML on our blog. By using ZenML in the early stages of your project, you get the following benefits:

- Extensibility, so you can build out the framework to suit your specific needs

- Reproducibility of training and inference workflows

- A simple and clear way to represent the steps of your pipeline in code

- Batteries-included integrations: bring all your favorite tools together

- Easy switch between local and cloud stacks

- Painless deployment and configuration of infrastructure

📖 Learn More

| ZenML Resources | Description |
|---|---|
| 🧘♀️ ZenML 101 | New to ZenML? Here's everything you need to know! |
| ⚛️ Core Concepts | Some key terms and concepts we use. |
| 🗃 Functional API Guide | Build production ML pipelines with simple functions. |
| 🚀 New in v0.8.1 | New features, bug fixes. |
| 🗳 Vote for Features | Pick what we work on next! |
| 📓 Docs | Full documentation for creating your own ZenML pipelines. |
| 📒 API Reference | The detailed reference for ZenML's API. |
| 🍰 ZenBytes | A guided and in-depth tutorial on MLOps and ZenML. |
| 🗂️ ZenFiles | End-to-end projects using ZenML. |
| ⚽️ Examples | Learn best through examples where ZenML is used? We've got you covered. |
| 📬 Blog | Use cases of ZenML and technical deep dives on how we built it. |
| 🔈 Podcast | Conversations with leaders in ML, released every 2 weeks. |
| 📣 Newsletter | We build ZenML in public. Subscribe to learn how we work. |
| 💬 Join Slack | Need help with your specific use case? Say hi on Slack! |
| 🗺 Roadmap | See where ZenML is working to build new features. |
| 🙋♀️ Contribute | How to contribute to the ZenML project and code base. |

🎮 Features

1. 💪 Write local, run anywhere

You only need to write your core machine learning workflow code once, but you can run it anywhere. We decouple your code from the environment and infrastructure on which this code runs.

Switching from local experiments to cloud-based pipelines doesn't need to be complicated. ZenML supports running pipelines wherever you want, for example by using Kubeflow, one of our built-in integrations, or any orchestrator of your choice. Switching from your local stack to a cloud stack is easy to do with our CLI tool.
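To make the idea of decoupling workflow code from its execution environment concrete, here is a minimal sketch in plain Python. It does not use the ZenML API: the `LocalRunner` and `LoggingRunner` classes and the `workflow` list are hypothetical names invented for illustration, standing in for the role an orchestrator plays in a stack.

```python
from typing import Callable, List

# A "step" here is just a plain function that transforms a value.
Step = Callable[[float], float]


def double(x: float) -> float:
    return x * 2


def add_one(x: float) -> float:
    return x + 1


class LocalRunner:
    """Hypothetical runner: executes the steps in-process, in order."""

    def run(self, steps: List[Step], value: float) -> float:
        for step in steps:
            value = step(value)
        return value


class LoggingRunner(LocalRunner):
    """Hypothetical stand-in for another backend: same contract, extra bookkeeping."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def run(self, steps: List[Step], value: float) -> float:
        for step in steps:
            self.log.append(step.__name__)  # record which step ran
            value = step(value)
        return value


# The workflow is written once ...
workflow: List[Step] = [double, add_one]

# ... and the runner is chosen separately, for example from configuration.
runners = {"local": LocalRunner(), "logging": LoggingRunner()}
results = {name: runner.run(workflow, 3) for name, runner in runners.items()}
print(results)
```

Both runners produce the same result because the workflow never refers to the environment it runs in. That is the property that makes switching backends a configuration change rather than a code change.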

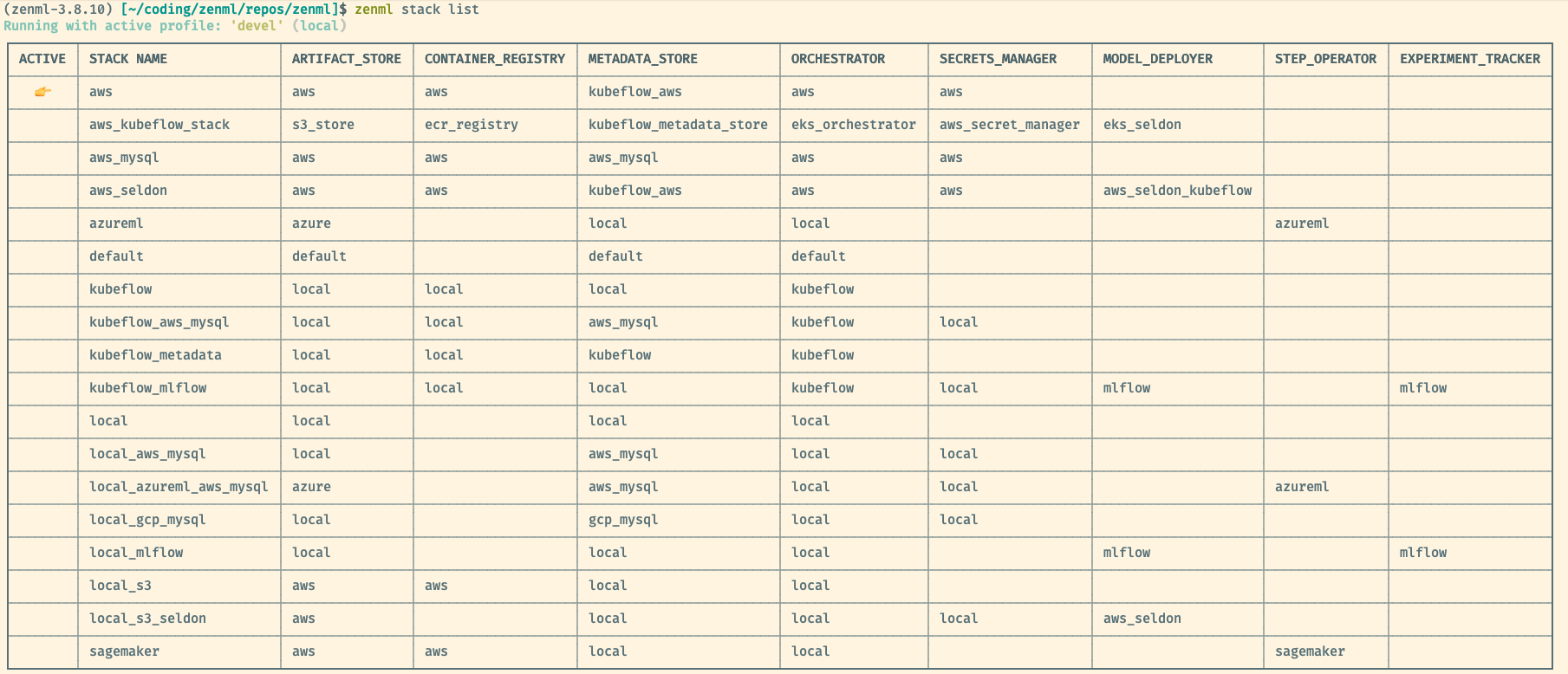

2. 🌈 All your MLOps stacks in one place

Once code is organized into a ZenML pipeline, you can supercharge your ML development with powerful integrations on multiple MLOps stacks. There are lots of moving parts for all the MLOps tooling and infrastructure you require for ML in production and ZenML aims to bring it all together under one roof.

We already support common use cases and integrations to standard ML tools via our stack components, from orchestrators like Airflow and Kubeflow to model deployment via MLflow or Seldon Core, to custom infrastructure for training your models in the cloud and so on. If you want to learn more about our integrations, check out our Examples to see how they work.

3. 🛠 Extensibility

ZenML's Stack Components are built to support most machine learning use cases. We offer a batteries-included initial installation that should serve many needs and workflows, but if you need a special kind of monitoring tool added, for example, or a different orchestrator to run your pipelines, ZenML is built as a framework making it easy to extend and build out whatever you need.

4. 🔍 Automated metadata tracking

ZenML tracks metadata for all the pipelines you run. This ensures that:

- Code is versioned

- Data is versioned

- Models are versioned

- Configurations are versioned

This also enables caching of the data that powers your pipelines, which helps you iterate quickly through ML experiments. (Read our blog post to learn more!)
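As a rough illustration of why tracking code, data and configuration enables caching, the sketch below keys a step's result on a hash of its compiled code and its input parameters. This is an assumption-laden toy, not how ZenML implements caching: `cached_step`, `cache_key` and the in-memory `_cache` are hypothetical names, and function bytecode is used only as a simple stand-in for code versioning.

```python
import functools
import hashlib
import json
from typing import Any, Callable, Dict, List

# In-memory stand-in for a metadata store (hypothetical).
_cache: Dict[str, Any] = {}
# Records every real execution so cache hits can be told apart from misses.
executions: List[str] = []


def cache_key(func: Callable[..., Any], params: Dict[str, Any]) -> str:
    """Hash the step's name, its bytecode and its JSON-serializable parameters."""
    digest = hashlib.sha256()
    digest.update(func.__qualname__.encode("utf-8"))
    digest.update(func.__code__.co_code)
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


def cached_step(func: Callable[..., Any]) -> Callable[..., Any]:
    """Re-run the step only when its code or its parameters have changed."""

    @functools.wraps(func)
    def wrapper(**params: Any) -> Any:
        key = cache_key(func, params)
        if key not in _cache:
            executions.append(func.__name__)
            _cache[key] = func(**params)
        return _cache[key]

    return wrapper


@cached_step
def normalize(values: List[float], scale: float) -> List[float]:
    return [v / scale for v in values]


first = normalize(values=[2, 4, 6], scale=2)   # computed
second = normalize(values=[2, 4, 6], scale=2)  # same inputs: served from cache
third = normalize(values=[2, 4, 6], scale=4)   # changed configuration: recomputed
print(len(executions))
```

Only two of the three calls actually execute the step. An unchanged step with unchanged inputs is skipped, which is what makes repeated experiment runs cheap.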

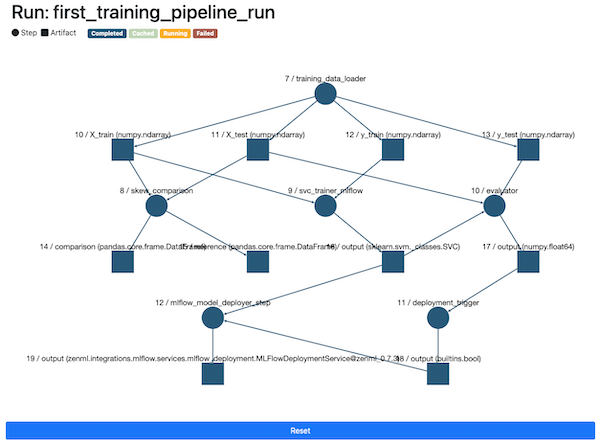

5. ➿ Continuous Training and Continuous Deployment (CT/CD)

Continuous Training (CT) refers to the paradigm where a team deploys training pipelines that run automatically to train models on new (fresh) data. Continuous Deployment (CD) refers to the paradigm where newly trained models are automatically deployed to a prediction service/server.

ZenML enables CT/CD by connecting model preparation and model training with model deployment. With built-in functionalities like Schedules, Model Deployers and Services, you can create end-to-end ML workflows with Continuous Training and Deployment. These workflows can deploy your model in a local environment with the MLflow integration, or in a production-grade environment like Kubernetes with our Seldon Core integration. You can also list served models with the CLI:

zenml served-models list

Read more about CT/CD in ZenML here.
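The CT/CD loop described in this section can be sketched in a few lines of plain Python. This is a conceptual toy under stated assumptions, not ZenML's Schedules, Model Deployers or Services: `ModelServer`, `training_run` and the error threshold are hypothetical, and the "model" is a one-parameter least-squares fit.

```python
from typing import Callable, List, Optional, Tuple

Model = Callable[[float], float]
Dataset = List[Tuple[float, float]]


def train(data: Dataset) -> Model:
    """Fit y = w * x by least squares through the origin."""
    w = sum(x * y for x, y in data) / sum(x * x for x, _ in data)
    return lambda x: w * x


def mean_abs_error(model: Model, data: Dataset) -> float:
    return sum(abs(model(x) - y) for x, y in data) / len(data)


class ModelServer:
    """Hypothetical prediction service holding the currently deployed model."""

    def __init__(self) -> None:
        self.model: Optional[Model] = None
        self.version = 0

    def deploy(self, model: Model) -> None:
        self.model = model
        self.version += 1


def training_run(
    server: ModelServer, fresh_data: Dataset, holdout: Dataset, max_error: float
) -> bool:
    """One scheduled run: train on fresh data (CT), deploy only if good enough (CD)."""
    model = train(fresh_data)
    if mean_abs_error(model, holdout) <= max_error:
        server.deploy(model)
        return True
    return False


server = ModelServer()
holdout = [(1.0, 2.0), (2.0, 4.0)]

# A scheduler would trigger these runs; here they are called by hand.
deployed_first = training_run(server, [(1.0, 2.0), (3.0, 6.0)], holdout, max_error=0.1)
deployed_second = training_run(server, [(1.0, 5.0), (2.0, 10.0)], holdout, max_error=0.1)
print(deployed_first, deployed_second, server.version)
```

The second run trains on data that no longer matches the holdout set, so its model fails the check and the previously deployed version keeps serving.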

🤸 Getting Started

💾 Install ZenML

Requirements: ZenML supports Python 3.7, 3.8, and 3.9.
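If you want to confirm that your interpreter matches the requirement above before installing, a small check like the following can help. The `is_supported` helper is a hypothetical name written for this example and is not part of ZenML.

```python
import sys
from typing import Tuple

# Python versions listed in the requirements line above.
SUPPORTED = {(3, 7), (3, 8), (3, 9)}


def is_supported(version: Tuple[int, ...]) -> bool:
    """Return True if the (major, minor) pair is one of the listed versions."""
    return tuple(version[:2]) in SUPPORTED


current = tuple(sys.version_info[:2])
status = "supported" if is_supported(current) else "not listed as supported"
print(f"Python {current[0]}.{current[1]} is {status}")
```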

ZenML is available for easy installation into your environment via PyPI:

pip install zenml

Alternatively, if you’re feeling brave, feel free to install the bleeding edge. NOTE: Do so at your own risk, no guarantees given!

pip install git+https://github.com/zenml-io/zenml.git@main --upgrade

ZenML is also available as a Docker image hosted publicly on DockerHub. Use the following command to get started in a bash environment:

docker run -it zenmldocker/zenml /bin/bash

🐛 Known installation issues for M1 Mac Users

If you have an M1 Mac machine and you are encountering an error while trying to install ZenML, please try to set up brew and pyenv with Rosetta 2 and then install ZenML. The issue arises because some of the dependencies aren’t fully compatible with the vanilla ARM64 architecture. The following links may be helpful (thank you, @Reid Falconer):

🚅 Quickstart

The quickest way to get started is to create a simple pipeline.

Step 1: Initialize a ZenML repo

zenml init

zenml integration install sklearn -y # we use scikit-learn for this example

Step 2: Assemble, run, and evaluate your pipeline locally

import numpy as np
from sklearn.base import ClassifierMixin

from zenml.integrations.sklearn.helpers.digits import get_digits, get_digits_model
from zenml.pipelines import pipeline
from zenml.steps import step, Output


@step
def importer() -> Output(
    X_train=np.ndarray, X_test=np.ndarray, y_train=np.ndarray, y_test=np.ndarray
):
    """Loads the digits array as normal numpy arrays."""
    X_train, X_test, y_train, y_test = get_digits()
    return X_train, X_test, y_train, y_test


@step
def trainer(
    X_train: np.ndarray,
    y_train: np.ndarray,
) -> ClassifierMixin:
    """Train a simple sklearn classifier for the digits dataset."""
    model = get_digits_model()
    model.fit(X_train, y_train)
    return model


@step
def evaluator(
    X_test: np.ndarray,
    y_test: np.ndarray,
    model: ClassifierMixin,
) -> float:
    """Calculate the accuracy on the test set"""
    test_acc = model.score(X_test, y_test)
    print(f"Test accuracy: {test_acc}")
    return test_acc


@pipeline
def mnist_pipeline(
    importer,
    trainer,
    evaluator,
):
    """Links all the steps together in a pipeline"""
    X_train, X_test, y_train, y_test = importer()
    model = trainer(X_train=X_train, y_train=y_train)
    evaluator(X_test=X_test, y_test=y_test, model=model)


pipeline = mnist_pipeline(
    importer=importer(),
    trainer=trainer(),
    evaluator=evaluator(),
)

pipeline.run()

🐎 Get a guided tour with zenml go

For a slightly more in-depth introduction to ZenML, taught through Jupyter notebooks, install zenml via pip as described above and type:

zenml go

This will spin up a Jupyter notebook that showcases the above example plus more on how to use and extend ZenML.

👭 Collaborate with your team

ZenML is built to support teams working together. The underlying infrastructure on which your ML workflows run can be shared, as can the data, assets and artifacts that you need to enable your work. ZenML Profiles offer an easy way to manage and switch between your stacks. The ZenML Server handles all the interaction and sharing and you can host it wherever you'd like.

zenml server up

Read more about collaboration in ZenML here.

🍰 ZenBytes

ZenBytes is a series of short practical MLOps lessons through ZenML and its various integrations. It is intended for people looking to learn about MLOps generally, and also for ML practitioners who want to get started with ZenML.

After you've run and understood the simple example above, your next port of call is probably the fully fleshed-out quickstart example, followed by the ZenBytes repository and notebooks.

🗂️ ZenFiles

ZenFiles are production-grade ML use cases powered by ZenML. They are fully fleshed-out, end-to-end projects that showcase ZenML's capabilities. They can also serve as a template from which to start similar projects.

The ZenFiles project is fully maintained and can be viewed as a sister repository of ZenML. Check it out here.

🗺 Roadmap

ZenML is being built in public. The roadmap is a regularly updated source of truth for the ZenML community to understand where the product is going in the short, medium, and long term.

ZenML is managed by a core team of developers who are responsible for making key decisions and incorporating feedback from the community. The team oversees feedback via various channels, and you can directly influence the roadmap as follows:

- Vote on your most wanted feature on our Discussion board. You can also request new features here.

- Start a thread in our Slack channel.

🙋♀️ Contributing & Community

We would love to develop ZenML together with our community! The best way to get started is to select any issue from the good-first-issue label. If you would like to contribute, please review our Contributing Guide for all relevant details.

🆘 Where to get help

Your first port of call should be our Slack group. Ask your questions about bugs or specific use cases and someone from the core team will respond.

📜 License

ZenML is distributed under the terms of the Apache License Version 2.0. A complete version of the license is available in the LICENSE.md in this repository. Any contribution made to this project will be licensed under the Apache License Version 2.0.