This is a PyTorch implementation of PointMetaBase, proposed in our paper "Meta Architecture for Point Cloud Analysis" (CVPR 2023).

Abstract: Recent advances in 3D point cloud analysis bring a diverse set of network architectures to the field. However, the lack of a unified framework to interpret those networks makes any systematic comparison, contrast, or analysis challenging, and practically limits healthy development of the field. In this paper, we take the initiative to explore and propose a unified framework called PointMeta, to which the popular 3D point cloud analysis approaches could fit. This brings three benefits. First, it allows us to compare different approaches in a fair manner, and use quick experiments to verify any empirical observations or assumptions summarized from the comparison. Second, the big picture brought by PointMeta enables us to think across different components, and revisit common beliefs and key design decisions made by the popular approaches. Third, based on the learnings from the previous two analyses, by doing simple tweaks on the existing approaches, we are able to derive a basic building block, termed PointMetaBase. It shows very strong performance in efficiency and effectiveness through extensive experiments on challenging benchmarks, and thus verifies the necessity and benefits of high-level interpretation, contrast, and comparison like PointMeta. In particular, PointMetaBase surpasses the previous state-of-the-art method by 0.7%/1.4%/2.1% mIoU with only 2%/11%/13% of the computation cost on the S3DIS datasets.

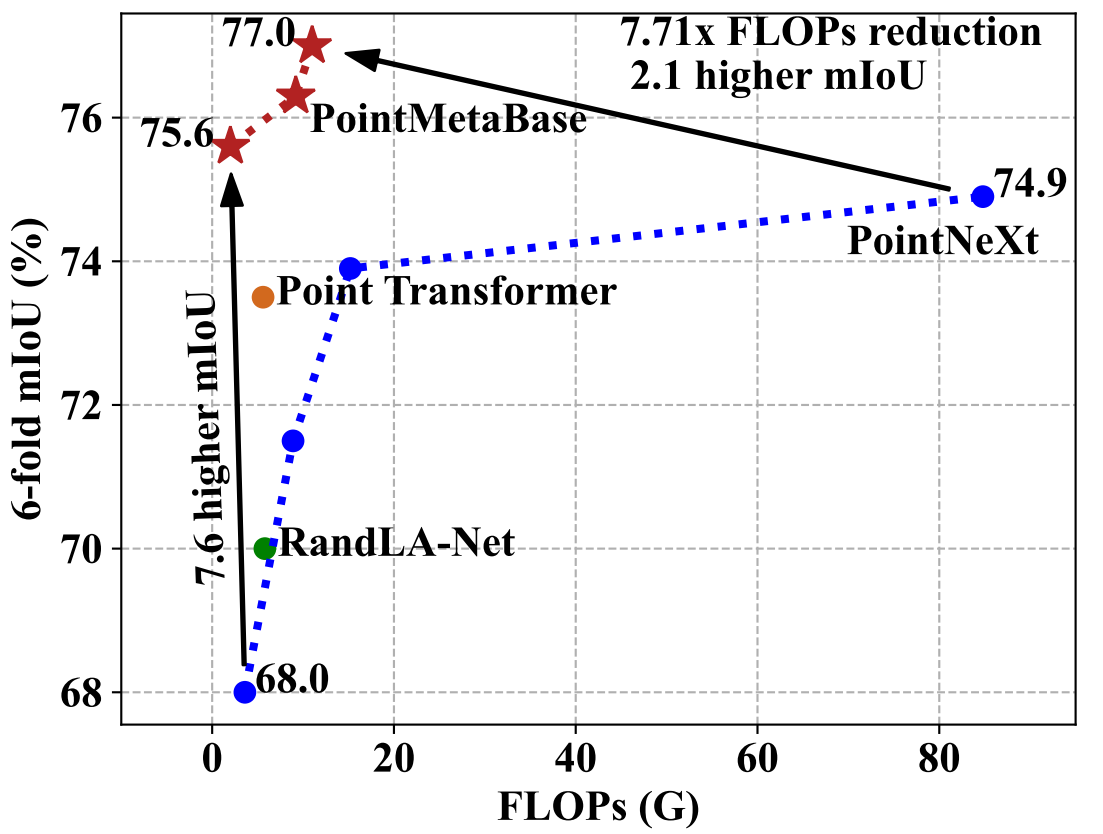

Figure 1: Segmentation performance of PointMetaBase on S3DIS. PointMetaBase surpasses the state-of-the-art method PointNeXt significantly with a large FLOPs reduction.

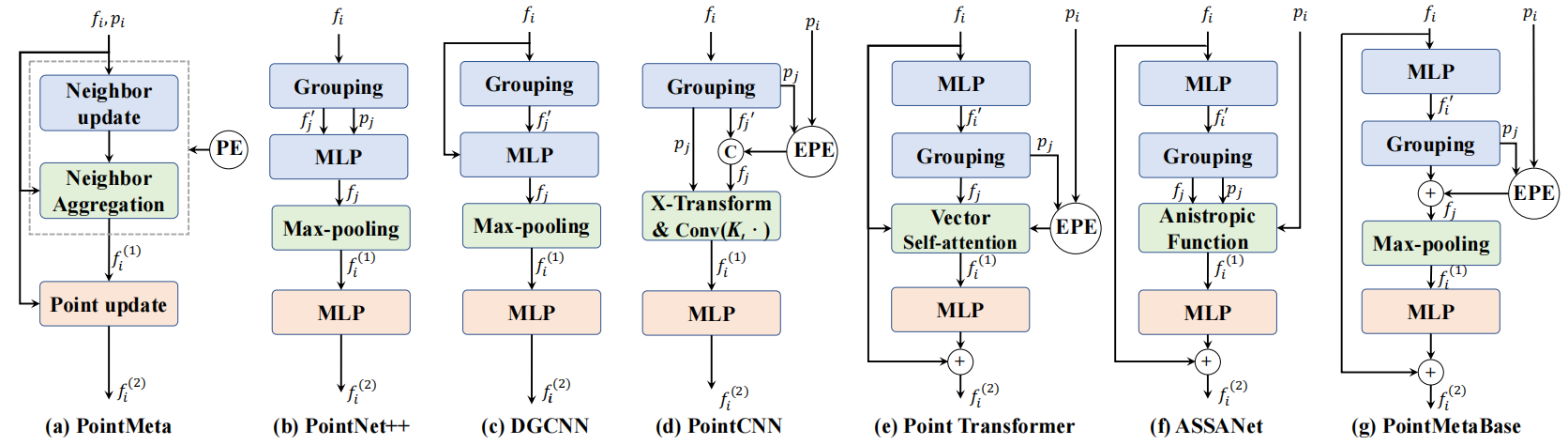

Figure 2: PointMeta and its instantiation examples. (a) PointMeta abstracts the computation pipeline of the building blocks for existing models into four meta functions: a neighbor update function, a neighbor aggregation function, a point update function, and a position embedding function (implicit or explicit). The position embedding function is usually combined with the neighbor update function or the aggregation function implicitly or explicitly. (b)~(f) Representative building blocks can naturally fit into PointMeta. (g) Applying the summarized best practices, we do simple tweaks on the building blocks and propose PointMetaBase.
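To make the four meta functions in the caption concrete, here is a minimal pure-Python sketch of how they could compose into one block. It is an illustration only, not the implementation in this repository: the names `knn` and `point_meta_block`, the brute-force neighbor search, and the toy choices in the example (distance-based position embedding added during the neighbor update, max aggregation, residual point update) are all assumptions made for this example.

```python
import math


def knn(points, i, k):
    """Indices of the k points closest to points[i] (brute force, includes i)."""
    order = sorted(range(len(points)), key=lambda j: math.dist(points[i], points[j]))
    return order[:k]


def point_meta_block(points, feats, k, pos_embed, neighbor_update, aggregate, point_update):
    """Apply the four meta functions to every point (hypothetical helper)."""
    out = []
    for i, (p_i, f_i) in enumerate(zip(points, feats)):
        updated = []
        for j in knn(points, i, k):
            rel = [a - b for a, b in zip(points[j], p_i)]  # relative position
            e = pos_embed(rel)                             # position embedding
            updated.append(neighbor_update(feats[j], e))   # neighbor update
        pooled = aggregate(updated)                        # neighbor aggregation
        out.append(point_update(f_i, pooled))              # point update
    return out


# Toy instantiation (assumed for illustration, 2-channel features).
def embed_distance(rel):
    d = math.sqrt(sum(r * r for r in rel))
    return [d, d]  # broadcast the distance to the feature width


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def max_pool(neighbor_feats):
    return [max(channel) for channel in zip(*neighbor_feats)]


points = [(0, 0, 0), (1, 0, 0), (0, 2, 0)]
feats = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
result = point_meta_block(points, feats, 2, embed_distance, add, max_pool, add)
print(result)  # [[2.0, 2.0], [2.0, 2.0], [5.0, 4.0]]
```

Passing the meta functions as callables mirrors the idea that different building blocks differ only in how each of the four slots is filled. A real block would use learned layers and batched tensor operations instead of Python loops.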

- Feb, 2023: PointMeta accepted by CVPR 2023.

- Nov, 2022: Code released.

source install.sh

Note:

- `install.sh` requires CUDA 11.3. If you use another CUDA version, modify `install.sh` accordingly. Check your CUDA version with `nvcc --version` before running the script.

- If `install.sh` does not work for you, read it and follow its steps manually for a step-by-step installation.

- All experiments use wandb for online logging. Run `wandb login` once, the first time you use a new machine. Set `wandb.use_wandb=False` to disable wandb logging. Read the official wandb documentation if needed.
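The CUDA version check from the first note can also be scripted. The sketch below is not part of this repository; the helper names `parse_cuda_release` and `installed_cuda_release` are hypothetical, and it assumes that `nvcc --version` prints a line containing `release <major>.<minor>`, as current CUDA toolkits do.

```python
import re
import shutil
import subprocess


def parse_cuda_release(nvcc_output):
    """Return the CUDA release (e.g. "11.3") found in `nvcc --version` output, or None."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release():
    """Run `nvcc --version` if nvcc is on PATH; return None when it is missing."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True)
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = installed_cuda_release()
    if release is None:
        print("nvcc not found; the CUDA toolkit may be missing or not on PATH")
    elif release != "11.3":
        print(f"CUDA {release} detected; install.sh targets 11.3 and needs editing")
    else:
        print("CUDA 11.3 detected")
```

Running this before `source install.sh` gives an early warning when the script needs to be adapted to a different CUDA version.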

Please refer to the PointNeXt tutorial to download the datasets.

Training logs & pretrained models are available in the columns with links through Google Drive.

TP: Throughput (instances per second) measured using an NVIDIA Tesla A100 40GB GPU and a 12-core Intel Xeon @ 2.40GHz CPU.
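As a rough illustration of what an instances-per-second figure means, the sketch below times a callable over several iterations after a warm-up phase. It is not the measurement code behind the reported numbers (those come from `examples/profile.py`); the function name `throughput` and its parameters are assumptions made for this example.

```python
import time


def throughput(run_batch, batch_size, n_iters=20, n_warmup=5):
    """Estimate instances per second for a callable that processes one batch."""
    for _ in range(n_warmup):  # warm-up iterations are excluded from timing
        run_batch()
    start = time.perf_counter()
    for _ in range(n_iters):
        run_batch()
    elapsed = time.perf_counter() - start
    return batch_size * n_iters / elapsed


if __name__ == "__main__":
    # Stand-in workload; a real measurement would run a model forward pass.
    tp = throughput(lambda: sum(range(100_000)), batch_size=16)
    print(f"{tp:.1f} instances/sec")
```

When timing GPU work, the device must be synchronized before reading the clock (for example with `torch.cuda.synchronize()` in PyTorch), otherwise asynchronous kernel launches make the result look faster than it is.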

| Model | Area-5 mIoU/OA | Area-5 mIoU/OA (best) | 6-fold mIoU/OA | Params (M) | FLOPs (G) | TP (ins./sec.) |
|---|---|---|---|---|---|---|
| PointMetaBase-L | 69.5±0.3/90.5±0.1 | 69.723/90.702 | 75.6/90.6 | 2.7 | 2.0 | 187 |
| PointMetaBase-XL | 71.1±0.4/90.9±0.1 | 71.597/90.551 | 76.3/91.0 | 15.3 | 9.2 | 104 |
| PointMetaBase-XXL | 71.3±0.7/90.8±0.6 | 72.250/91.322 | 77.0/91.3 | 19.7 | 11.0 | 90 |

CUDA_VISIBLE_DEVICES=0 bash script/main_segmentation.sh cfgs/s3dis/pointmetabase-l.yaml wandb.use_wandb=True

CUDA_VISIBLE_DEVICES=0 bash script/main_segmentation.sh cfgs/s3dis/pointmetabase-l.yaml wandb.use_wandb=False mode=test --pretrained_path path/to/pretrained/model

CUDA_VISIBLE_DEVICES=0 python examples/profile.py --cfg cfgs/s3dis/pointmetabase-l.yaml batch_size=16 num_points=15000 flops=True timing=True

| Model | Val mIoU | Test mIoU | Params (M) | FLOPs (G) | TP (ins./sec.) |
|---|---|---|---|---|---|
| PointMetaBase-L | 71.0 | - | 2.7 | 2.0 | 187 |
| PointMetaBase-XL | 71.8 | - | 15.3 | 9.2 | 104 |
| PointMetaBase-XXL | 72.8 | 71.4 | 19.7 | 11.0 | 90 |

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python examples/segmentation/main.py --cfg cfgs/scannet/pointmetabase-l.yaml wandb.use_wandb=True

CUDA_VISIBLE_DEVICES=0 python examples/segmentation/main.py --cfg cfgs/scannet/pointmetabase-l.yaml mode=test dataset.test.split=val --pretrained_path path/to/pretrained/model

CUDA_VISIBLE_DEVICES=0 python examples/profile.py --cfg cfgs/scannet/pointmetabase-l.yaml batch_size=16 num_points=15000 flops=True timing=True

| Model | ins. mIoU/cls. mIoU | Params (M) | FLOPs (G) | TP (ins./sec.) |
|---|---|---|---|---|
| PointMetaBase-S (C=32) | 86.7±0.0/84.3±0.1 | 1.0 | 1.39 | 1194 |
| PointMetaBase-S (C=64) | 86.9±0.1/84.9±0.2 | 3.8 | 3.85 | 706 |
| PointMetaBase-S (C=160) | 87.1±0.0/85.1±0.3 | 22.7 | 18.45 | 271 |

CUDA_VISIBLE_DEVICES=0,1,2,3 python examples/shapenetpart/main.py --cfg cfgs/shapenetpart/pointmetabase-s.yaml wandb.use_wandb=True

CUDA_VISIBLE_DEVICES=0 python examples/shapenetpart/main.py --cfg cfgs/shapenetpart/pointmetabase-s.yaml mode=test wandb.use_wandb=False --pretrained_path path/to/pretrained/model

CUDA_VISIBLE_DEVICES=0 python examples/profile.py --cfg cfgs/shapenetpart/pointmetabase-s.yaml batch_size=64 num_points=2048 timing=True

| Model | OA/mAcc | Params (M) | FLOPs (G) | TP (ins./sec.) |
|---|---|---|---|---|
| PointMetaBase-S (C=32) | 87.9±0.2/86.2±0.7 | 1.4 | 0.6 | 2674 |

CUDA_VISIBLE_DEVICES=0 python examples/classification/main.py --cfg cfgs/scanobjectnn/pointmetabase-s.yaml wandb.use_wandb=True

CUDA_VISIBLE_DEVICES=0 python examples/classification/main.py --cfg cfgs/scanobjectnn/pointmetabase-s.yaml mode=test --pretrained_path path/to/pretrained/model

CUDA_VISIBLE_DEVICES=0 python examples/profile.py --cfg cfgs/scanobjectnn/pointmetabase-s.yaml batch_size=128 num_points=1024 timing=True flops=True

This repository reuses code from OpenPoints and PointNeXt. We recommend using their code repositories in your research and reading the related paper.

If you feel inspired by our work, please cite

@Article{lin2022meta,
  title={Meta Architecture for Point Cloud Analysis},
  author={Haojia Lin and Xiawu Zheng and Lijiang Li and Fei Chao and Shanshan Wang and Yan Wang and Yonghong Tian and Rongrong Ji},
  journal={arXiv:2211.14462},
  year={2022},
}