This repository contains implementations of reinforcement learning agents based on Deep Q-Network (DQN), Double DQN, Dueling DQN, and Prioritized Experience Replay (PER).

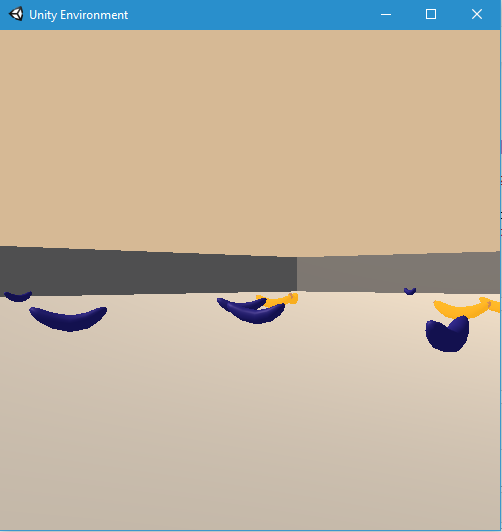

The goal of the agent is to collect yellow bananas in a large square world, similar to Unity's Banana Collector environment.

The agent receives a reward of +1 for each yellow banana it collects and a reward of -1 for each blue banana.

The agent has 4 actions:

- move forward

- move backward

- turn left

- turn right
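A DQN-style agent usually picks among these four discrete actions with an epsilon-greedy policy. The sketch below assumes the index order used by Udacity's Banana environment (0 forward, 1 backward, 2 turn left, 3 turn right); `Action` and `epsilon_greedy` are hypothetical names, and the agents in this repository may select actions differently.

```python
import random
from enum import IntEnum
from typing import Sequence


class Action(IntEnum):
    """Assumed action indices (Udacity Banana ordering); check against your build."""
    FORWARD = 0
    BACKWARD = 1
    TURN_LEFT = 2
    TURN_RIGHT = 3


def epsilon_greedy(q_values: Sequence[float], epsilon: float,
                   rng: random.Random) -> Action:
    """With probability epsilon explore uniformly, otherwise exploit argmax Q."""
    if len(q_values) != len(Action):
        raise ValueError(f"expected {len(Action)} Q-values, got {len(q_values)}")
    if rng.random() < epsilon:
        # Explore: uniform random action.
        return Action(rng.randrange(len(Action)))
    # Exploit: index of the largest Q-value (max() keeps the first one on ties).
    return Action(max(range(len(q_values)), key=lambda a: q_values[a]))


rng = random.Random(0)
choice = epsilon_greedy([0.1, 0.5, -0.2, 0.3], epsilon=0.0, rng=rng)
print(choice.name)  # BACKWARD
```

Epsilon is typically decayed during training so the agent explores early and exploits later.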

The environment is considered solved when the agent achieves an average score of at least +13 over 100 consecutive episodes.
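The solving criterion amounts to a rolling average over the last 100 episode scores. The helper below is a hypothetical sketch (not taken from this repository) that returns the first episode at which that average reaches +13.

```python
from collections import deque
from typing import Iterable, Optional

SOLVED_SCORE = 13.0  # average score required
WINDOW = 100         # number of consecutive episodes averaged


def episodes_to_solve(scores: Iterable[float], target: float = SOLVED_SCORE,
                      window: int = WINDOW) -> Optional[int]:
    """Return the 1-based episode at which the trailing `window`-episode
    average first reaches `target`, or None if it never does."""
    recent = deque(maxlen=window)  # keeps only the last `window` scores
    for episode, score in enumerate(scores, start=1):
        recent.append(score)
        if len(recent) == window and sum(recent) / window >= target:
            return episode
    return None


scores = [10.0] * 50 + [14.0] * 150
print(episodes_to_solve(scores))  # 125
```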

A video of a trained agent can be found by clicking on the image below.

The repository contains:

- `report.pdf`: a document describing the details of the different implementations, along with ideas for future work

- `load_run_agent.ipynb`: a Jupyter notebook that loads and runs the saved agents

- folder `double_dqn`: implementation of a Double Deep Q-Network (succeeded)

- folder `dueling_double_dqn`: implementation of a Double Deep Q-Network with a dueling network architecture (succeeded)

- folder `per_dueling_double_dqn`: implementation of an agent combining Double DQN, a dueling network, and Prioritized Experience Replay (pending)
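The two agents marked as succeeded rest on two ideas: the Double DQN target, where the online network selects the next action and the target network evaluates it, and the dueling aggregation Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a'). The sketch below shows both for a single transition in plain Python; the function names are hypothetical, and the actual agents presumably compute these on batches of network outputs rather than Python lists.

```python
from typing import List, Sequence


def dueling_q_values(value: float, advantages: Sequence[float]) -> List[float]:
    """Combine a dueling head's outputs: Q(s,a) = V(s) + A(s,a) - mean_a' A(s,a')."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + adv - mean_adv for adv in advantages]


def double_dqn_target(reward: float, done: bool, gamma: float,
                      q_online_next: Sequence[float],
                      q_target_next: Sequence[float]) -> float:
    """TD target r + gamma * Q_target(s', argmax_a Q_online(s', a)) for one transition."""
    if done:
        return reward  # terminal state: nothing to bootstrap from
    # The online network selects the next action...
    best_next = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ...and the target network evaluates it.
    return reward + gamma * q_target_next[best_next]


def dqn_target(reward: float, done: bool, gamma: float,
               q_target_next: Sequence[float]) -> float:
    """Plain DQN target for comparison: max over the target network."""
    return reward if done else reward + gamma * max(q_target_next)


q_online_next = [1.0, 3.0, 2.0, 0.0]
q_target_next = [5.0, 2.0, 4.0, 1.0]
print(double_dqn_target(1.0, False, 0.5, q_online_next, q_target_next))  # 2.0
print(dqn_target(1.0, False, 0.5, q_target_next))                        # 3.5
print(dueling_q_values(2.0, [1.0, -1.0, 0.5, -0.5]))                     # [3.0, 1.0, 2.5, 1.5]
```

In this example, plain DQN bootstraps from the target network's largest estimate (5.0), while Double DQN uses the target network's value for the action the online network prefers (2.0). Decoupling selection from evaluation in this way is what reduces DQN's tendency to over-estimate action values.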

To run the code, follow these steps:

- Create a new environment:

  - Linux or Mac:

    ```
    conda create --name dqn python=3.6
    source activate dqn
    ```

  - Windows:

    ```
    conda create --name dqn python=3.6
    activate dqn
    ```

- Perform a minimal install of OpenAI Gym:

  - If using Windows:

    - download SWIG for Windows and add it to the Windows `PATH`
    - install Microsoft Visual C++ Build Tools

  - Then run these commands:

    ```
    pip install gym
    pip install gym[classic_control]
    pip install gym[box2d]
    ```


- Install the dependencies under the folder `python/`:

  ```
  cd python
  pip install .
  ```

- Create an IPython kernel for the `dqn` environment:

  ```
  python -m ipykernel install --user --name dqn --display-name "dqn"
  ```

- Download the Unity environment (thanks to Udacity) that matches your operating system.

- Start Jupyter Notebook from the root folder of this repository:

  ```
  jupyter notebook
  ```

- Once started, change the kernel through the menu `Kernel` > `Change kernel` > `dqn`.

- If necessary, change the path to the Unity environment inside the `.ipynb` files.
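Since the Unity build differs per operating system, one way to avoid editing the path by hand is to pick it from the platform. The folder and file names below are assumptions modelled on Udacity's Banana downloads, and `unity_env_path` is a hypothetical helper; adjust both to whatever you actually unzipped next to the notebooks.

```python
import platform
from pathlib import Path

# Hypothetical build locations, relative to the repository root.
BANANA_BUILDS = {
    "Darwin": Path("Banana.app"),
    "Linux": Path("Banana_Linux") / "Banana.x86_64",
    "Windows": Path("Banana_Windows_x86_64") / "Banana.exe",
}


def unity_env_path(root: Path = Path("."), system: str = "") -> Path:
    """Return the expected Unity build path for this OS (or for `system`)."""
    system = system or platform.system()  # e.g. "Linux", "Darwin", "Windows"
    try:
        return root / BANANA_BUILDS[system]
    except KeyError:
        raise RuntimeError(f"No Banana build configured for {system!r}") from None


print(unity_env_path(Path("envs"), "Linux").as_posix())  # envs/Banana_Linux/Banana.x86_64
```

If the notebooks create the environment with the `unityagents` package installed from `python/`, the returned path (as a string) would be the value to pass as the `file_name` argument of `UnityEnvironment`.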