Tensorflow implementation for reproducing main results in the paper StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks by Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaogang Wang, Xiaolei Huang, Dimitris Metaxas.

python 2.7

[Optional] Torch is needed, if use the pre-trained char-CNN-RNN text encoder.

[Optional] skip-thought is needed, if use the skip-thought text encoder.

In addition, please add the project folder to PYTHONPATH and pip install the following packages:

prettytensorprogressbarpython-dateutileasydictpandastorchfile

Data

- Download our preprocessed char-CNN-RNN text embeddings for birds and flowers and save them to

Data/.

- [Optional] Follow the instructions reedscot/icml2016 to download the pretrained char-CNN-RNN text encoders and extract text embeddings.

- Download the birds and flowers image data. Extract them to

Data/birds/andData/flowers/, respectively. - Preprocess images.

- For birds:

python misc/preprocess_birds.py - For flowers:

python misc/preprocess_flowers.py

Training

- The steps to train a StackGAN model on the CUB dataset using our preprocessed data for birds.

- Step 1: train Stage-I GAN (e.g., for 600 epochs)

python stageI/run_exp.py --cfg stageI/cfg/birds.yml --gpu 0 - Step 2: train Stage-II GAN (e.g., for another 600 epochs)

python stageII/run_exp.py --cfg stageII/cfg/birds.yml --gpu 1

- Step 1: train Stage-I GAN (e.g., for 600 epochs)

- Change

birds.ymltoflowers.ymlto train a StackGAN model on Oxford-102 dataset using our preprocessed data for flowers. *.ymlfiles are example configuration files for training/testing our models.- If you want to try your own datasets, here are some good tips about how to train GAN. Also, we encourage to try different hyper-parameters and architectures, especially for more complex datasets.

Pretrained Model

- StackGAN for birds trained from char-CNN-RNN text embeddings. Download and save it to

models/. - StackGAN for flowers trained from char-CNN-RNN text embeddings. Download and save it to

models/. - StackGAN for birds trained from skip-thought text embeddings. Download and save it to

models/(Just used the same setting as the char-CNN-RNN. We assume better results can be achieved by playing with the hyper-parameters).

Run Demos

- Run

sh demo/flowers_demo.shto generate flower samples from sentences. The results will be saved toData/flowers/example_captions/. (Need to download the char-CNN-RNN text encoder for flowers tomodels/text_encoder/. Note: this text encoder is provided by reedscot/icml2016). - Run

sh demo/birds_demo.shto generate bird samples from sentences. The results will be saved toData/birds/example_captions/.(Need to download the char-CNN-RNN text encoder for birds tomodels/text_encoder/. Note: this text encoder is provided by reedscot/icml2016). - Run

python demo/birds_skip_thought_demo.py --cfg demo/cfg/birds-skip-thought-demo.yml --gpu 2to generate bird samples from sentences. The results will be saved toData/birds/example_captions-skip-thought/. (Need to download vocabulary for skip-thought vectors toData/skipthoughts/).

Examples for birds (char-CNN-RNN embeddings), more on youtube:

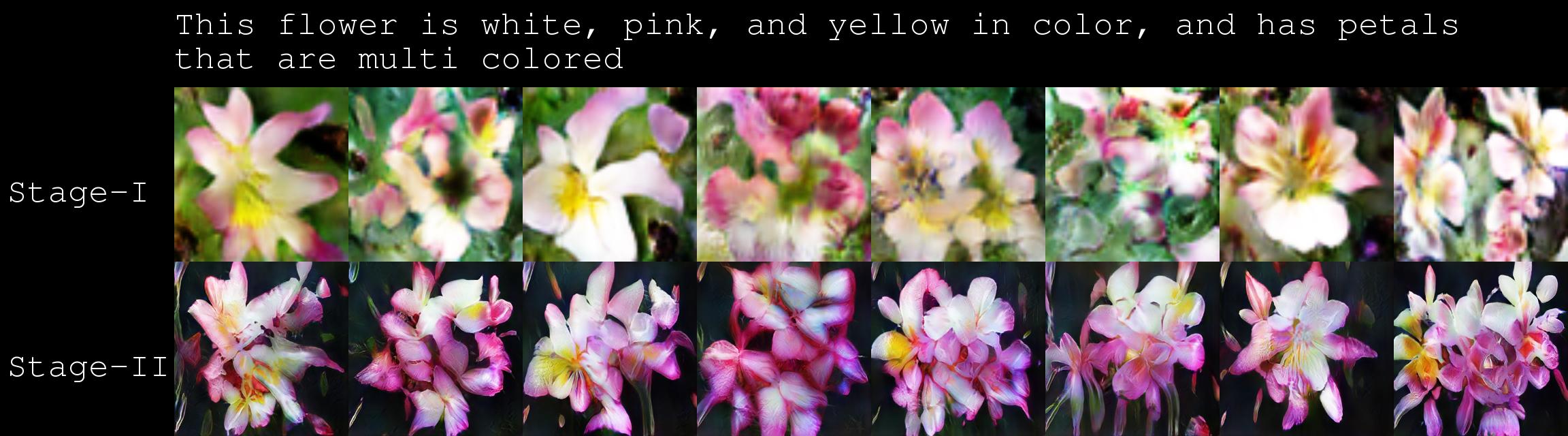

Examples for flowers (char-CNN-RNN embeddings), more on youtube:

Save your favorite pictures generated by our models since the randomness from noise z and conditioning augmentation makes them creative enough to generate objects with different poses and viewpoints from the same discription 😃

If you find StackGAN useful in your research, please consider citing:

@inproceedings{han2017stackgan,

Author = {Han Zhang and Tao Xu and Hongsheng Li and Shaoting Zhang and Xiaogang Wang and Xiaolei Huang and Dimitris Metaxas},

Title = {StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks},

Year = {2017},

booktitle = {{ICCV}},

}

Our follow-up work

- StackGAN++: Realistic Image Synthesis with Stacked Generative Adversarial Networks

- AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks [supplementary] [code]

References