This is the official PyTorch implementation of our paper *FoodSAM: Any Food Segmentation*.

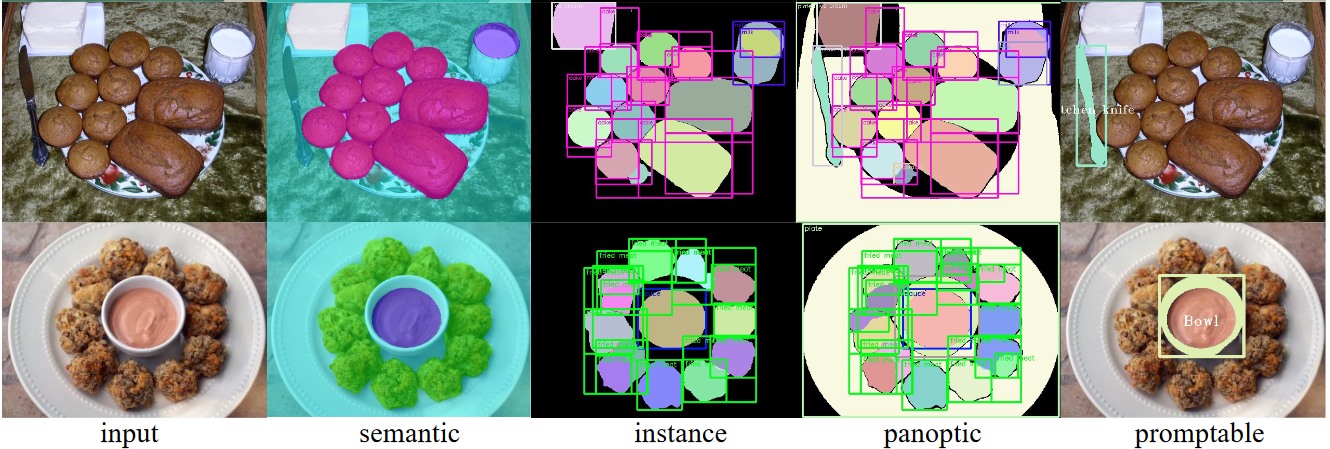

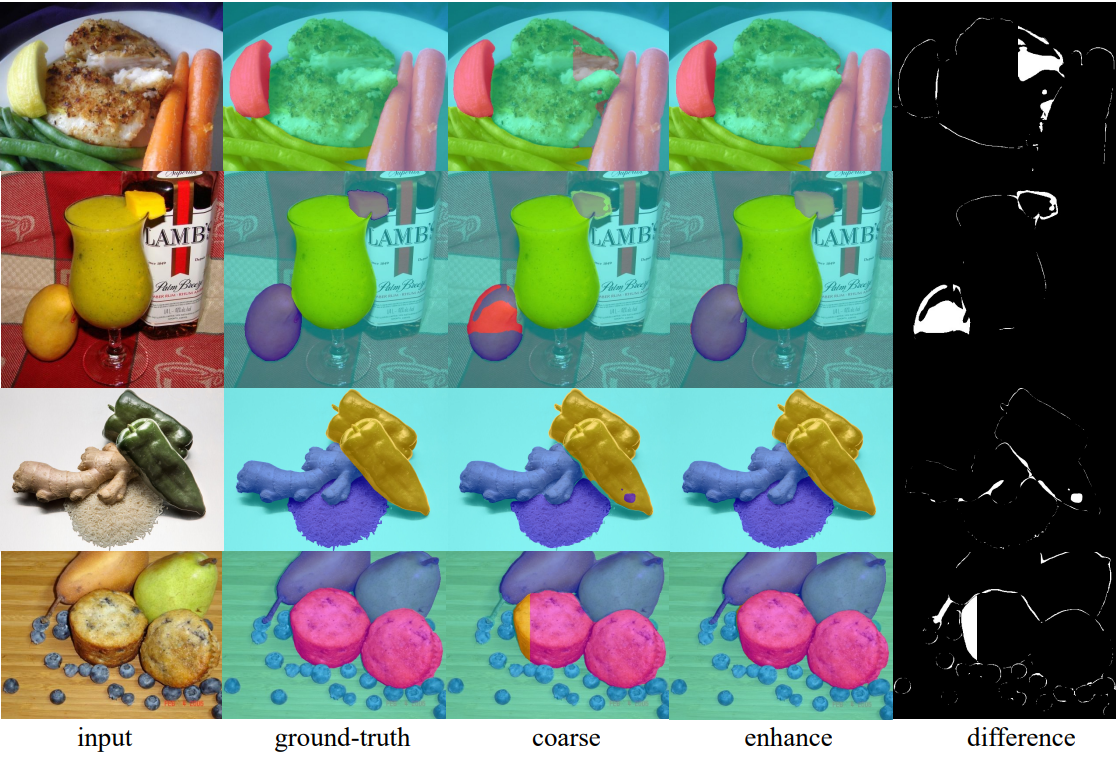

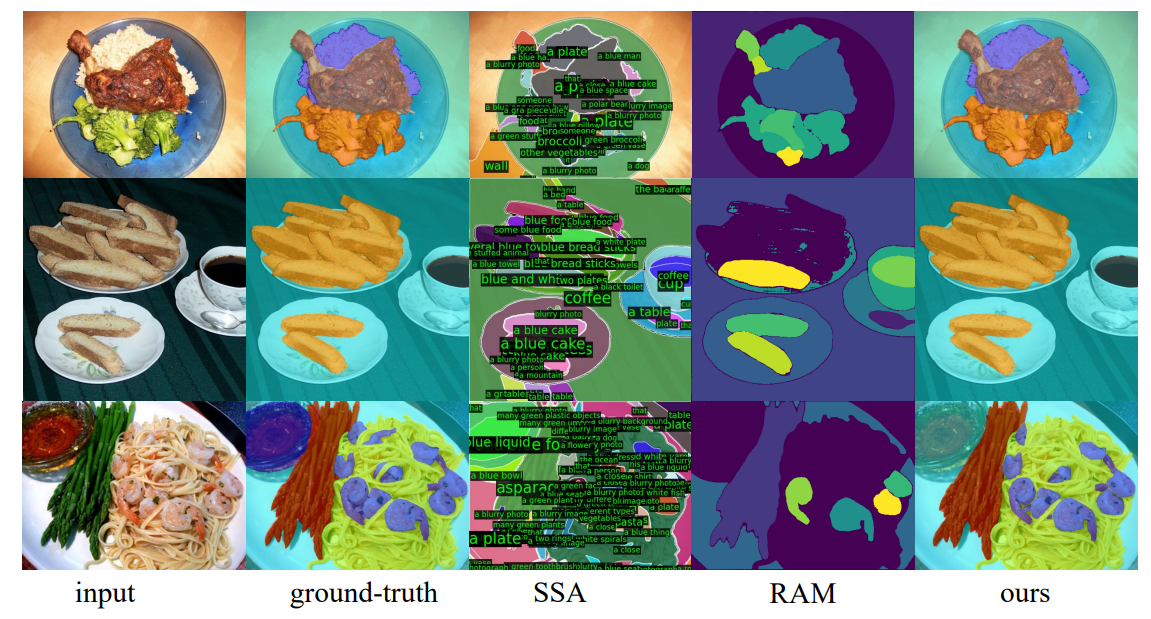

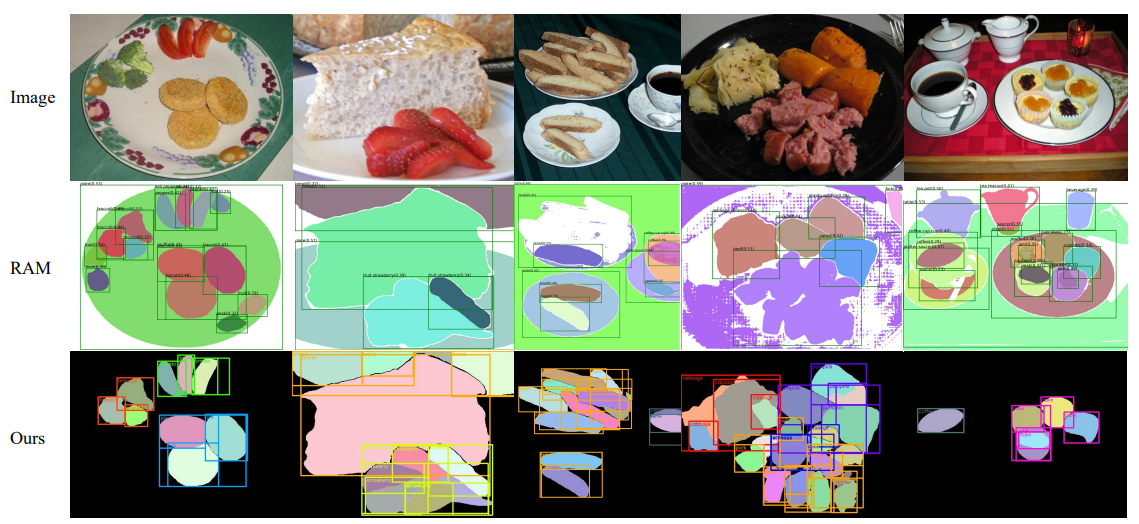

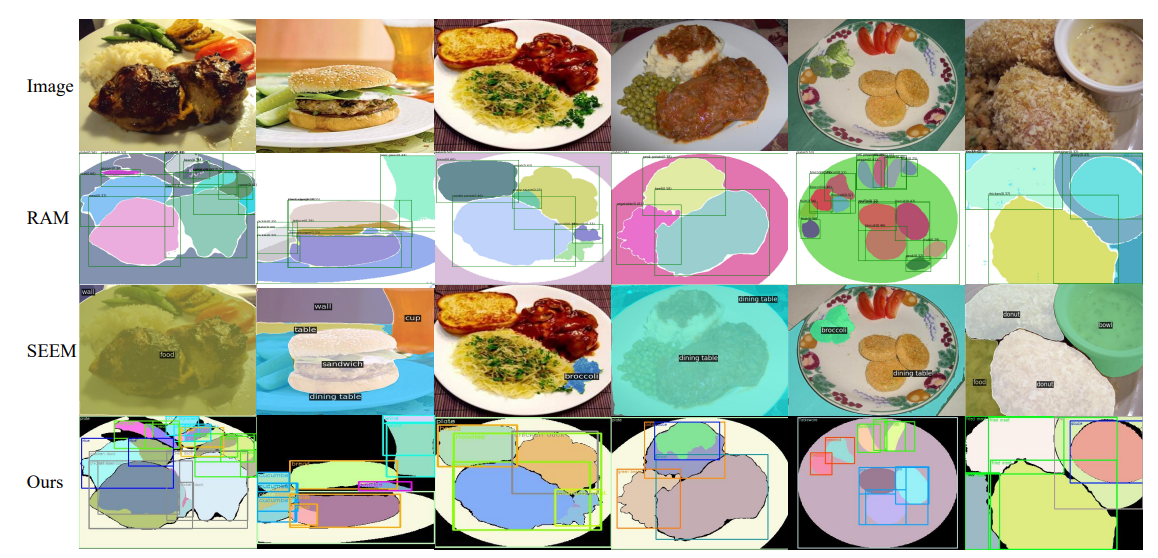

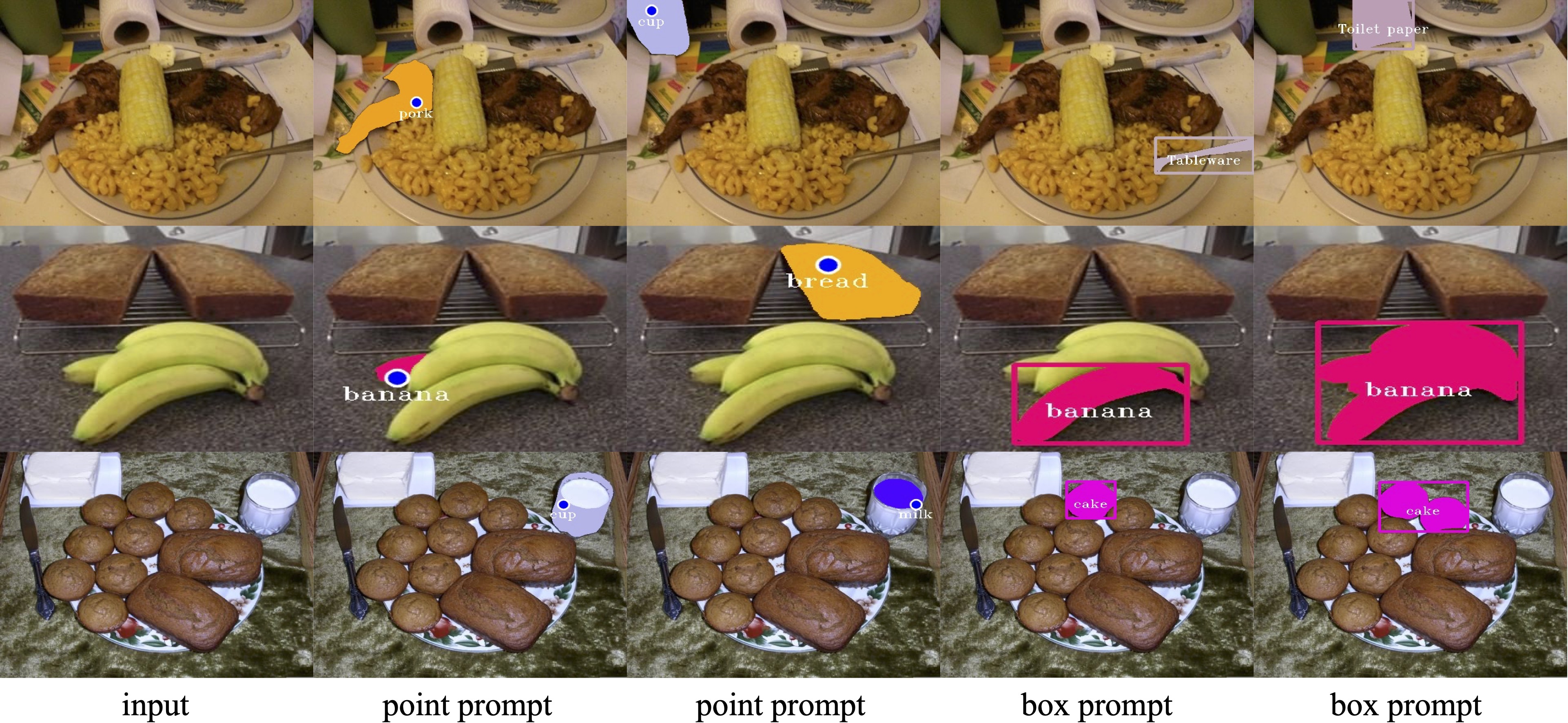

The Segment Anything Model (SAM) achieves strong performance on a wide range of segmentation benchmarks, with impressive zero-shot transfer to 23 diverse segmentation datasets. However, SAM does not provide class-specific information for the masks it produces. To address this limitation and explore SAM's zero-shot capability for food image segmentation, we propose a novel framework called FoodSAM. It integrates a coarse semantic mask with SAM-generated masks to improve semantic segmentation quality, and it can also perform instance segmentation on food images. Furthermore, FoodSAM extends its zero-shot capability to panoptic segmentation by incorporating an object detector, which enables it to capture information about non-food objects. Remarkably, FoodSAM is the first work to achieve instance, panoptic, and promptable segmentation on food images.

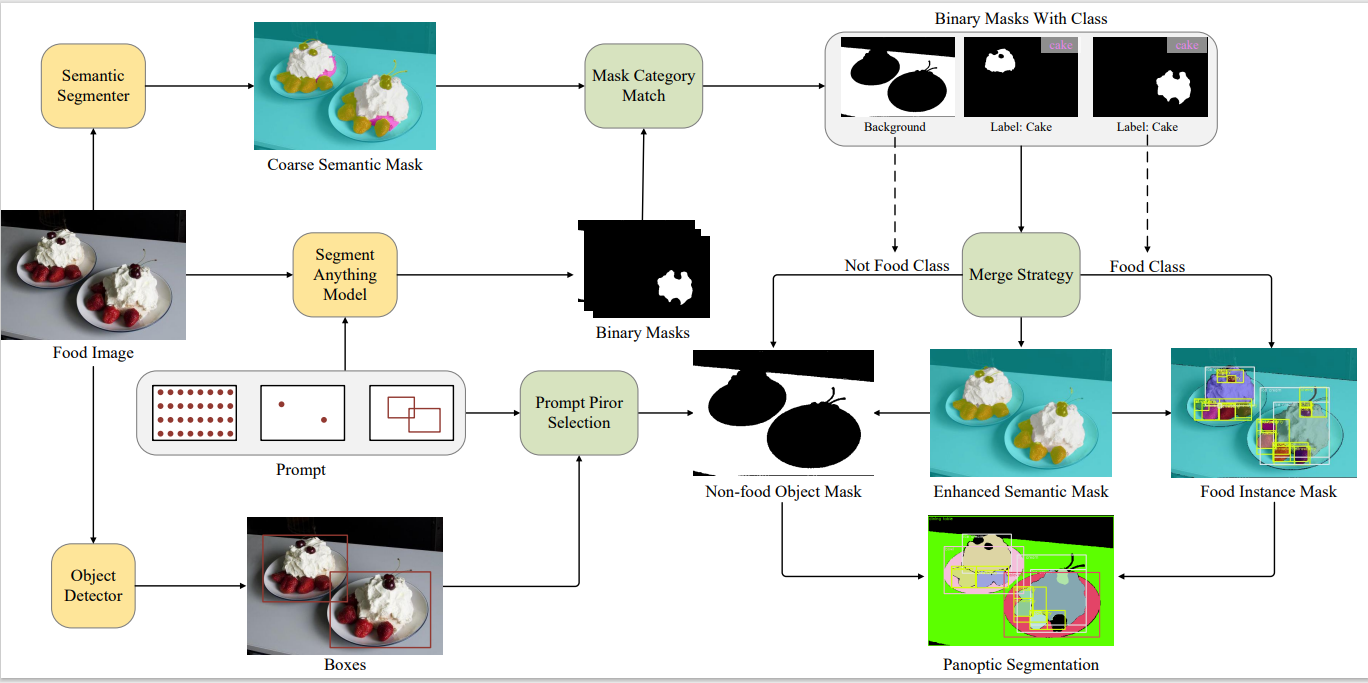

FoodSAM combines three base models: SAM, a semantic segmenter, and an object detector. SAM generates many class-agnostic binary masks, the semantic segmenter provides food category labels through mask-category matching, and the object detector assigns non-food classes to background masks. FoodSAM then enhances the semantic mask with a merge strategy and produces instance and panoptic results. Moreover, a seamless prompt-prior selection is integrated into the object detector to achieve promptable segmentation.
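The mask-category match and merge steps can be illustrated with a small NumPy sketch. This is not FoodSAM's code and its exact rules may differ: it assumes each SAM mask is labeled by majority vote over the coarse semantic mask, that a hypothetical `min_ratio` threshold rejects ambiguous votes, and that merging paints labeled SAM masks onto the coarse mask from largest to smallest. The function names are hypothetical.

```python
import numpy as np


def match_mask_categories(semantic, sam_masks, min_ratio=0.5):
    """Assign a category to each class-agnostic SAM mask by majority vote.

    semantic:  (H, W) array of non-negative integer category ids (coarse mask).
    sam_masks: list of (H, W) boolean arrays produced by SAM.
    Returns one category id per mask, or None when the vote is too weak.
    """
    labels = []
    for mask in sam_masks:
        votes = semantic[mask]
        if votes.size == 0:
            labels.append(None)
            continue
        counts = np.bincount(votes)
        best = int(counts.argmax())
        # Hypothetical rule: trust the vote only if the winning category
        # covers at least `min_ratio` of the mask's pixels.
        labels.append(best if counts[best] / votes.size >= min_ratio else None)
    return labels


def merge_masks(semantic, sam_masks, labels):
    """Paint labeled SAM masks onto a copy of the coarse mask.

    Masks are applied from largest to smallest, so smaller masks drawn
    later overwrite larger ones where they overlap.
    """
    merged = semantic.copy()
    order = sorted(range(len(sam_masks)), key=lambda i: sam_masks[i].sum(), reverse=True)
    for i in order:
        if labels[i] is not None:
            merged[sam_masks[i]] = labels[i]
    return merged


if __name__ == "__main__":
    semantic = np.array([[1, 1, 2],
                         [1, 2, 2],
                         [0, 0, 0]])
    mask = np.array([[True, True, True],
                     [True, True, False],
                     [False, False, False]])
    labels = match_mask_categories(semantic, [mask])
    print(labels)                                          # [1]
    print(merge_masks(semantic, [mask], labels).tolist())  # [[1, 1, 1], [1, 1, 2], [0, 0, 0]]
```

In the toy example the SAM mask covers three pixels of class 1 and two of class 2, so it is labeled 1 and the two class-2 pixels inside it are relabeled, which is how a SAM boundary can sharpen a noisy coarse prediction.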

Please follow the instructions in installation.md to install FoodSAM.

You can run semantic and panoptic segmentation with a few commands:

```bash
# semantic segmentation for one img
python FoodSAM/semantic.py --img_path <path/to/img> --output <path/to/output>

# semantic segmentation for one folder
python FoodSAM/semantic.py --data_root <path/to/folder> --output <path/to/output>

# panoptic segmentation for one img
python FoodSAM/panoptic.py --img_path <path/to/img> --output <path/to/output>

# panoptic segmentation for one folder
python FoodSAM/panoptic.py --data_root <path/to/folder> --output <path/to/output>
```

Furthermore, by passing `--eval` (which sets `args.eval` to true), the model outputs the semantic masks and evaluates the metrics.

Here are examples of semantic segmentation and panoptic segmentation on the FoodSeg103 dataset:

```bash
python FoodSAM/semantic.py --data_root dataset/FoodSeg103/Images --output Output/Semantic_Results --eval
python FoodSAM/panoptic.py --data_root dataset/FoodSeg103/Images --output Output/Panoptic_Results
```

| Method | mIoU (%) | aAcc (%) | mAcc (%) |
|---|---|---|---|
| SETR_MLA (baseline) | 45.10 | 83.53 | 57.44 |
| FoodSAM | 46.42 | 84.10 | 58.27 |

| Method | mIoU (%) | aAcc (%) | mAcc (%) |
|---|---|---|---|
| deeplabV3+ (baseline) | 65.61 | 88.20 | 77.56 |
| FoodSAM | 66.14 | 88.47 | 78.01 |
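The table columns follow the usual semantic segmentation definitions: aAcc is overall pixel accuracy, mAcc is per-class pixel accuracy averaged over classes, and mIoU is per-class intersection-over-union averaged over classes. The sketch below computes them from a confusion matrix. It is an illustrative helper, not the evaluation code run by `--eval`; the function names, the `ignore_index=255` convention, and skipping classes with an empty denominator (via `np.nanmean`) are assumptions.

```python
import numpy as np


def confusion_matrix(gt, pred, num_classes, ignore_index=255):
    """Build a (num_classes, num_classes) matrix: rows = ground truth, cols = prediction.

    Assumes every prediction id and every non-ignored ground-truth id is in
    [0, num_classes). Pixels whose ground truth equals `ignore_index` are skipped.
    """
    valid = gt != ignore_index
    idx = num_classes * gt[valid].astype(np.int64) + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def segmentation_metrics(conf):
    """Return aAcc, mAcc and mIoU as percentages."""
    tp = np.diag(conf).astype(np.float64)
    gt_pixels = conf.sum(axis=1)    # pixels of each class in the ground truth
    pred_pixels = conf.sum(axis=0)  # pixels predicted as each class
    union = gt_pixels + pred_pixels - tp
    with np.errstate(divide="ignore", invalid="ignore"):
        acc = tp / gt_pixels        # NaN for classes absent from the ground truth
        iou = tp / union            # NaN for classes absent from both maps
    return {
        "aAcc": 100 * tp.sum() / conf.sum(),
        "mAcc": 100 * np.nanmean(acc),
        "mIoU": 100 * np.nanmean(iou),
    }


if __name__ == "__main__":
    gt = np.array([[0, 0, 1],
                   [1, 2, 2]])
    pred = np.array([[0, 1, 1],
                     [1, 2, 0]])
    conf = confusion_matrix(gt, pred, num_classes=3)
    print(conf.tolist())  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
    metrics = segmentation_metrics(conf)
    print({k: round(float(v), 2) for k, v in metrics.items()})
    # {'aAcc': 66.67, 'mAcc': 66.67, 'mIoU': 50.0}
```

Accumulating one confusion matrix over the whole dataset (summing per-image matrices) before computing the metrics gives dataset-level scores, which is the usual way these numbers are reported.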

A large part of the code is borrowed from the following wonderful works:

The model is licensed under the Apache 2.0 license.

If you want to cite our work, please use the following BibTeX entry:

```bibtex
@misc{lan2023foodsam,
      title={FoodSAM: Any Food Segmentation},
      author={Xing Lan and Jiayi Lyu and Hanyu Jiang and Kun Dong and Zehai Niu and Yi Zhang and Jian Xue},
      year={2023},
      eprint={2308.05938},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```