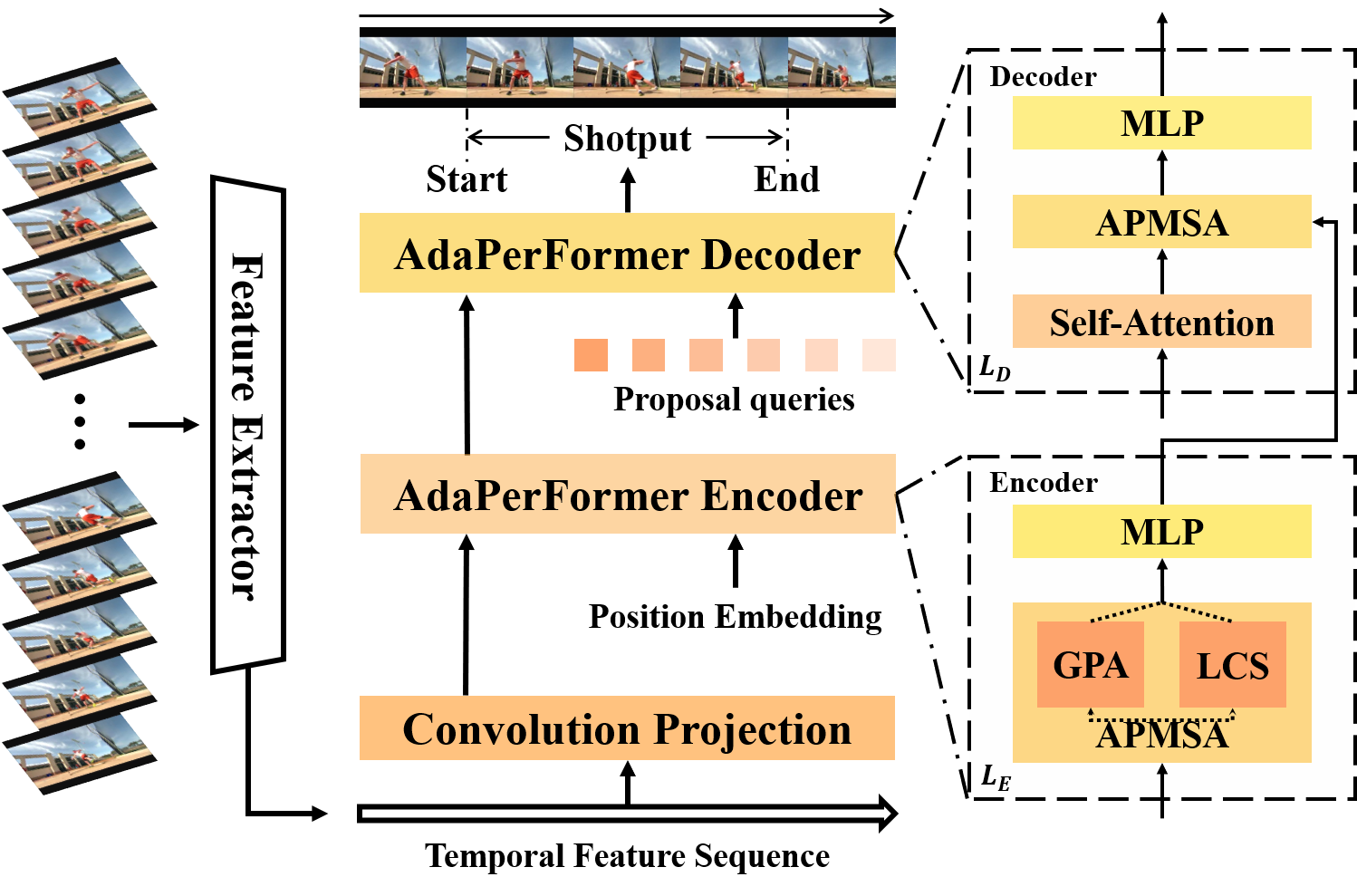

This code repo implements AdaPerFormer, described in the technical report: AdaPerFormer

The complete code will be made public once the paper is accepted.

- [2022.8.25] Updated the arXiv URL.
- [2022.8.25] Updated the project details.

The main components of this project are:

- ./configs: Dataset configs.

- ./datasets: Data loader and IO module.

- ./model: Our main model with all its building blocks.

- ./src: Startup scripts for training and testing.

- ./utils: Utility functions for training, inference, and other tasks.

- Linux

- Python >= 3.5

- CUDA >= 11.0

- GCC >= 4.9

- Other requirements:

  `pip install -r requirement.txt`

- Download the original video data from THUMOS14 and use the I3D backbone to extract the features.

- Place the I3D features into the folder `./data`.

- The folder structure should look as follows:

    This folder
    │   README.md
    │   ...
    │
    └───data/
    │    └───thumos14/
    │    │    └───i3d_features
    │    │    └───annotations
    │    └───...
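
Before training, it can help to confirm that the data folder matches the layout above. The following is a minimal sketch, not part of this repo: the helper name `missing_data_dirs` and the constant `EXPECTED_DIRS` are hypothetical. It only checks the two sub-directories shown in the tree and assumes it is run from the repository root.

```python
from pathlib import Path

# Sub-directories taken from the folder tree above (relative to the repo root).
# EXPECTED_DIRS and missing_data_dirs are illustrative names, not part of the repo.
EXPECTED_DIRS = (
    Path("data") / "thumos14" / "i3d_features",
    Path("data") / "thumos14" / "annotations",
)


def missing_data_dirs(repo_root="."):
    """Return the expected data directories that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel.as_posix() for rel in EXPECTED_DIRS if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_data_dirs(".")
    if missing:
        print("Missing directories:")
        for rel in missing:
            print("  " + rel)
    else:
        print("Data layout looks complete.")
```

Running the script from the repository root lists any directory that still needs to be created or populated. It does not inspect the contents of the feature or annotation files.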