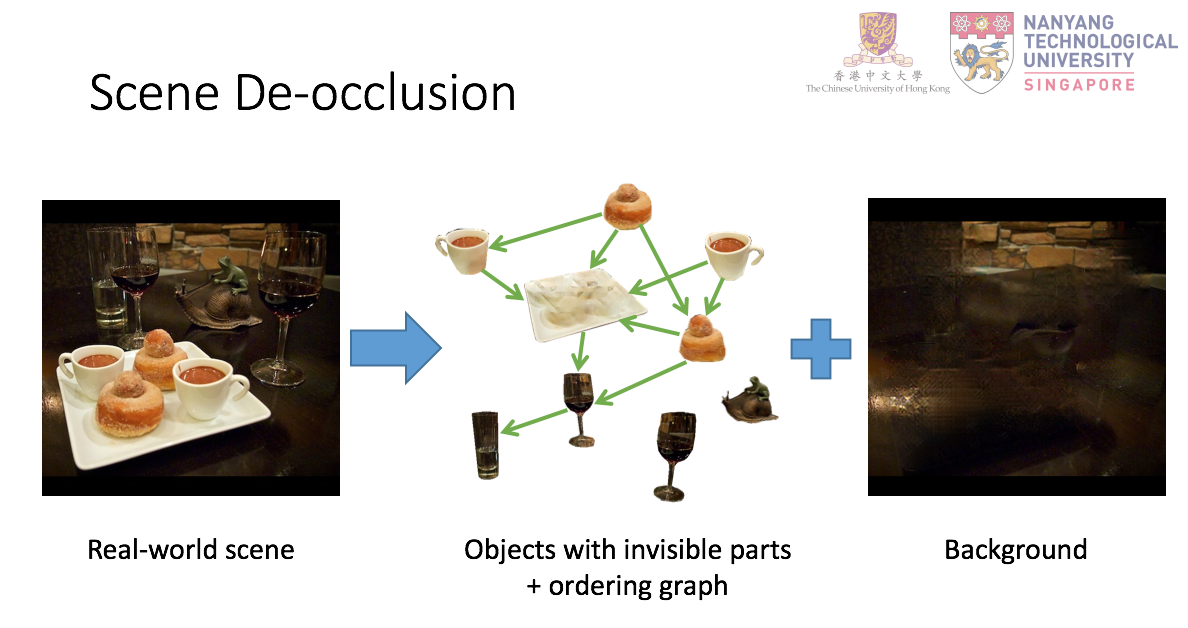

Xiaohang Zhan, Xingang Pan, Bo Dai, Ziwei Liu, Dahua Lin, Chen Change Loy, "Self-Supervised Scene De-occlusion", accepted to CVPR 2020 as an Oral Paper. [Project page].

For further information, please contact Xiaohang Zhan.


Watch the full demo video on YouTube or bilibili. The video explains the idea and shows several interesting applications.


Below is an application of scene de-occlusion: image manipulation.


The code requires PyTorch (`pytorch>=0.4.1`). Install the other dependencies with `pip install -r requirements.txt`.
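Version strings should be compared numerically rather than as text (as strings, `"0.10.0"` sorts before `"0.4.1"`). A minimal sketch of checking the `pytorch>=0.4.1` requirement, assuming PyTorch is importable as `torch`; `version_at_least` is a hypothetical helper, not part of this repository:

```python
import re


def version_at_least(installed: str, required: str) -> bool:
    """Return True if `installed` >= `required`, comparing numeric parts.

    Local build tags such as "+cu110" and non-numeric suffixes such as
    "rc1" are ignored, so "1.7.1+cu110" is treated as 1.7.1.
    """
    def parse(v: str) -> tuple:
        core = v.split("+", 1)[0]        # drop the local build tag
        parts = []
        for piece in core.split("."):
            m = re.match(r"\d+", piece)  # keep leading digits only
            if m is None:
                break
            parts.append(int(m.group()))
        return tuple(parts)

    a, b = parse(installed), parse(required)
    # Pad with zeros so that "1.0" and "1.0.0" compare as equal.
    n = max(len(a), len(b))
    a += (0,) * (n - len(a))
    b += (0,) * (n - len(b))
    return a >= b


if __name__ == "__main__":
    try:
        import torch
        ok = version_at_least(torch.__version__, "0.4.1")
        print(f"torch {torch.__version__}: {'OK' if ok else 'too old'}")
    except ImportError:
        print("PyTorch is not installed")
```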

COCOA dataset, proposed in "Semantic Amodal Segmentation":


Download the COCO2014 train and val images from here and unzip them.


Download the COCOA annotations from here and untar them.


Ensure the COCOA folder looks like:

    COCOA/
      |-- train2014/
      |-- val2014/
      |-- annotations/
        |-- COCO_amodal_train2014.json
        |-- COCO_amodal_val2014.json
        |-- COCO_amodal_test2014.json
        |-- ...

Create a symbolic link:

    cd deocclusion
    mkdir data
    cd data
    ln -s /path/to/COCOA
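After creating the link, a quick check can confirm that the expected files are reachable through `data/COCOA`. A minimal sketch, run from the `deocclusion` directory and assuming the three JSON files sit inside `annotations/`; `missing_entries` and `COCOA_EXPECTED` are hypothetical names:

```python
from pathlib import Path

# Expected entries, taken from the COCOA folder layout described above
# (assumes the JSON files live inside annotations/).
COCOA_EXPECTED = [
    "train2014",
    "val2014",
    "annotations/COCO_amodal_train2014.json",
    "annotations/COCO_amodal_val2014.json",
    "annotations/COCO_amodal_test2014.json",
]


def missing_entries(root, expected):
    """Return the expected relative paths that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("data/COCOA", COCOA_EXPECTED)
    if missing:
        print("Missing under data/COCOA:", ", ".join(missing))
    else:
        print("data/COCOA looks complete")
```

The same helper can check KINS by passing `"data/KINS"` and `["training/image_2", "testing/image_2", "instances_train.json", "instances_val.json"]`. `Path.exists()` follows symbolic links, so a dangling link shows up as missing entries.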

KINS dataset, proposed in "Amodal Instance Segmentation with KINS Dataset":


Download the left color images of the object data in the KITTI dataset from here and unzip them.


Download the KINS annotations from here, corresponding to this commit.


Ensure the KINS folder looks like:

    KINS/
      |-- training/image_2/
      |-- testing/image_2/
      |-- instances_train.json
      |-- instances_val.json

Create a symbolic link:

    cd deocclusion/data
    ln -s /path/to/KINS
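To sanity-check the downloaded annotations, one can count images and instances per category. A minimal sketch, assuming `instances_train.json` uses a COCO-style layout with top-level `images`, `annotations` and `categories` keys and a `category_id` on each annotation (we have not verified every field of the KINS files); `summarize_annotations` is a hypothetical helper:

```python
import json
import os
from collections import Counter


def summarize_annotations(path):
    """Return image, instance and per-category counts for a COCO-style file.

    Assumes top-level keys "images", "annotations" and "categories",
    with each annotation carrying a "category_id".
    """
    with open(path) as f:
        data = json.load(f)
    names = {c["id"]: c["name"] for c in data.get("categories", [])}
    annotations = data.get("annotations", [])
    # Fall back to the raw id if a category has no name entry.
    per_class = Counter(
        names.get(a["category_id"], a["category_id"]) for a in annotations
    )
    return {
        "num_images": len(data.get("images", [])),
        "num_annotations": len(annotations),
        "per_class": dict(per_class),
    }


if __name__ == "__main__":
    path = "data/KINS/instances_train.json"
    if os.path.exists(path):
        print(summarize_annotations(path))
```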

LVIS dataset:

- Download the training and validation sets from here.


Download the released models here and put the folder `released` under `deocclusion`.

Run `demos/demo_cocoa.ipynb` or `demos/demo_kins.ipynb`.


Train PCNet-M (taking COCOA as an example):

    sh experiments/COCOA/pcnet_m/train.sh  # you may have to set --nproc_per_node=#YOUR_GPUS
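The comment above asks you to set `--nproc_per_node` to your number of GPUs. A minimal sketch that rewrites that flag, assuming `train.sh` contains a literal `--nproc_per_node=<integer>` (check the script first); `set_nproc_per_node` is a hypothetical helper, and the GPU count comes from `torch.cuda.device_count()`:

```python
import re
from pathlib import Path

# Matches e.g. "--nproc_per_node=8"; assumes the flag uses "=" with an integer.
_FLAG = re.compile(r"--nproc_per_node=\d+")


def set_nproc_per_node(script_text: str, num_gpus: int) -> str:
    """Replace every `--nproc_per_node=<int>` in `script_text` with `num_gpus`."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    new_text, count = _FLAG.subn(f"--nproc_per_node={num_gpus}", script_text)
    if count == 0:
        raise ValueError("no --nproc_per_node=<int> flag found")
    return new_text


if __name__ == "__main__":
    script = Path("experiments/COCOA/pcnet_m/train.sh")
    if script.exists():
        import torch  # only needed to count the visible GPUs
        print(set_nproc_per_node(script.read_text(), torch.cuda.device_count()))
```

It prints the edited script instead of overwriting it, so you can inspect the result before saving it.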

Monitor the training status and visual results with TensorBoard:

    sh tensorboard.sh $PORT
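If you are unsure which `$PORT` is free, the operating system can pick one. A minimal sketch, assuming `tensorboard.sh` takes the port as its first argument as the command above suggests; `find_free_port` is a hypothetical helper:

```python
import socket


def find_free_port() -> int:
    """Ask the OS for a TCP port that is unused at the moment of the call."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind(("", 0))  # port 0 lets the OS choose any free port
        return s.getsockname()[1]


if __name__ == "__main__":
    print(find_free_port())
```

For example, save it as `find_port.py` (a hypothetical filename) and run `sh tensorboard.sh $(python find_port.py)`. The port is only known to be free at the moment of the check, so another process could still claim it before TensorBoard starts.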


To train PCNet-C, first download the pre-trained image inpainting model based on partial convolution here and save it as `pretrains/partialconv.pth`.

Convert the model to accept 4-channel inputs:

    python tools/convert_pcnetc_pretrain.py
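We have not reproduced what `tools/convert_pcnetc_pretrain.py` does internally. One common way to make a pretrained network accept an extra input channel (presumably RGB plus one more channel here) is to widen the first convolution's weight along the input-channel axis and zero-initialize the new slice, so that at initialization the layer responds to the original three channels exactly as before. A minimal NumPy sketch of that idea; the `(out_channels, in_channels, kH, kW)` layout follows the PyTorch convention, and `add_input_channels` is a hypothetical helper:

```python
import numpy as np


def add_input_channels(weight: np.ndarray, extra: int = 1) -> np.ndarray:
    """Widen a conv weight of shape (out, in, kH, kW) to (out, in + extra, kH, kW).

    The new input channels get zero weights, so the layer's output depends
    only on the original channels until the new weights are trained.
    """
    if weight.ndim != 4:
        raise ValueError("expected a 4-D conv weight (out, in, kH, kW)")
    out_c, _, kh, kw = weight.shape
    zeros = np.zeros((out_c, extra, kh, kw), dtype=weight.dtype)
    return np.concatenate([weight, zeros], axis=1)


if __name__ == "__main__":
    rgb_weight = np.random.randn(64, 3, 7, 7).astype(np.float32)
    print(add_input_channels(rgb_weight).shape)  # (64, 4, 7, 7)
```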


Train PCNet-C (taking COCOA as an example):

    sh experiments/COCOA/pcnet_c/train.sh  # you may have to set --nproc_per_node=#YOUR_GPUS

Monitor the training status and visual results with TensorBoard, using the same `sh tensorboard.sh $PORT` command.


To evaluate on COCOA, run:

    sh tools/test_cocoa.sh

To cite this work:

    @inproceedings{zhan2020self,
      author = {Zhan, Xiaohang and Pan, Xingang and Dai, Bo and Liu, Ziwei and Lin, Dahua and Loy, Chen Change},
      title = {Self-Supervised Scene De-occlusion},
      booktitle = {Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR)},
      month = {June},
      year = {2020}
    }

We used the code and models of GCA-Matting in our demo.